Hans Jonas on Responsibility in the Age of Artificial Intelligence

The advent of powerful Artificial Intelligence technologies and their release to the public (e.g., ChatGPT and Bard) raises hard questions about the ethical implications of using them. The growing power of human beings to significantly alter reality and civilization needs to be examined with care. Following the philosophy of Hans Jonas, we review his proposal to consider the negative consequences of a technology before joyfully welcoming it.

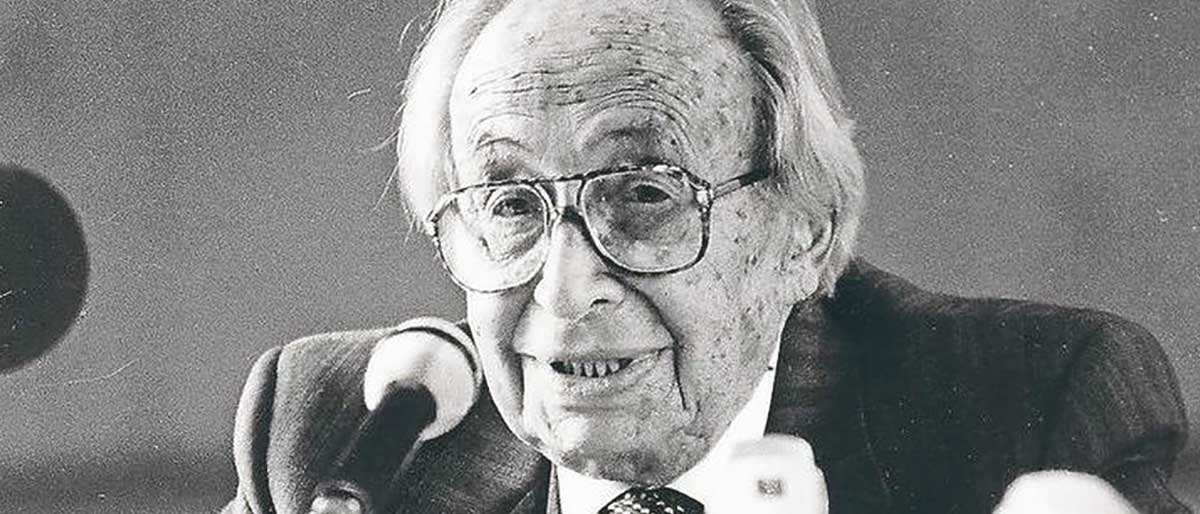

Hans Jonas and the Need for New Ethics

Hans Jonas was a German-Jewish philosopher born in 1903. He studied under notable philosophers like Martin Heidegger and Edmund Husserl and became a close friend of the political theorist Hannah Arendt. In 1979, he published Das Prinzip Verantwortung, which appeared in English as The Imperative of Responsibility: In Search of an Ethics for the Technological Age. There, Jonas argued for a new ethical imperative capable of responding to the challenges posed by new technologies. He had witnessed firsthand how rapidly the world was changing, from the Manhattan Project, which led to the atomic bombings of Hiroshima and Nagasaki, to the Apollo 11 moon landing in 1969.

Prior technologies, however powerful they seemed to humans, left Nature and its elements untouched and its powers undiminished (1985, p. 3). Battering rams, siege towers, catapults, and trebuchets: none of them could, by any stretch of the imagination, endanger Nature as a whole. This was reflected in the main ethical systems of the past, in which, according to Jonas, Nature was not an object of human responsibility¹. Ethics was therefore mainly anthropocentric: it concerned the dealings of human beings with one another. Moreover, in assessing the morality of an action, earlier ethical theories focused on consequences close to the action itself. There was no need for long-range planning (Jonas, 1985, p. 5).

According to Jonas, the dawn of new technologies dramatically challenges these ethical frameworks. Nuclear power and genetic modification (then largely a prospect) could alter nature as a whole. The “vulnerability of nature” is characteristic of these changes (Jonas, 1985, p. 6). Because the consequences of technology are long-lasting, it is also difficult to determine with any clarity what could happen once a technology is deployed. Hans Jonas writes: “The gap between the ability to foretell and the power to act creates a novel moral problem” (1985, p. 8). Never has humanity been so powerful and, at the same time, so unaware of the effects of wielding that power. Faced with these new questions, Jonas proposes a renewed perspective on ethics and defends the importance of a new imperative: responsibility.

The Imperative of Responsibility

Before formulating his new principle, Jonas recalls Kant’s first formulation of the categorical imperative: “Act only in accordance with that maxim through which you can at the same time will that it become a universal law.” Without getting into scholarly details, this formula is “a decision procedure for moral reasoning” (Johnson & Cureton, 2022). The intuition behind it is that one should act only on principles that can be universally applied without contradiction. Before acting on a maxim, one must consider whether everybody could act on the same principle; if a contradiction arises, the action is morally impermissible. A common example is lying: if everybody lied whenever it suited them, no one would believe what others say, and lying itself would lose its point. In this sense, the categorical imperative does not prescribe a specific content. Rather, it is a way of testing the maxims that guide action.

Jonas, by contrast, formulates his imperative as follows: “Act so that the effects of your action are compatible with the permanence of genuine human life.” He also offers a negative formulation: “Act so that the effects of your action are not destructive of the future possibility of such life” (1985, p. 11).

While Kant’s formulation is logical in nature, Jonas makes the foreseeable future a dimension of responsibility. The imperative of responsibility is not about searching for contradictions within a maxim but about testing whether genuine human life can continue to flourish in the future given the effects of acting on that maxim. Agents are thus responsible for future generations of humans and for the now vulnerable environment. Jonas worries that actions are taken without considering the kind of world we want to leave to those who come after us: will it be one in which genuine life is threatened or no longer exists?

Applications of the Imperative

Of course, we must now ask what Hans Jonas means by “genuine.” Is he defending an essentialist conception of humanity? What does it mean to safeguard the continuity of genuine human life? He has in mind, among other things, any technological advancement that can change the conditions of human life and its inherent dignity. For example, the transhumanist goal of overcoming death runs against the human conditions of natality and mortality, which give meaning and structure to human life (Coyne & Hauskeller, 2019). But there are other examples closer to current developments: should we allow parents to engineer their offspring? Should gene editing be used to cure diseases or to control invasive species? Again, what is central for Jonas is that any action must preserve genuine humanity.

Jonas contends that even if some possible outcomes can be foreseen, scientists do not have the right to decide on the use of technologies that can forever change human life (1985, pp. 11–12). For him, the unintended consequences of technology must be carefully considered. Bad scenarios must take precedence in those discussions because there are innumerable ways in which things can go wrong.

He compares human innovation to the mechanisms of evolution: Nature proceeds slowly, making small changes over a vast span of time, whereas human changes take place abruptly. He writes, “The big enterprise of modern technology, neither patient nor slow, compresses as a whole and in many of its single projects the many infinitesimal steps of natural evolution into a few colossal ones and forgoes by that procedure the vital advantages of nature’s ‘playing safe’” (Jonas, 1985, p. 31).

Human impatience can lead us to risk everything for the promise of a better life. For that reason, one consequence of Jonas’s imperative of responsibility is that no techno-optimist philosophy can justify staking everything on a new technology. Otherwise, the motivation behind deploying such technologies is more arrogance than necessity (1985, p. 36). The question, then, is whether the imperative of responsibility can be applied to artificial intelligence today.

Responsibility in the Age of AI

On March 22, 2023, an open letter published on the Future of Life Institute’s website called for a pause in AI development, asking AI labs to stop training systems more powerful than GPT-4 for at least six months. The letter reads, “Advanced AI could represent a profound change in the history of life on Earth and should be planned for and managed with commensurate care and resources.” A few lines later, it raises a disturbing question: “Should we risk loss of control of our civilization?” The open letter was signed by many prominent executives and scholars, including Elon Musk, Yuval Noah Harari, Steve Wozniak, and Max Tegmark.

Another example is Sam Altman, CEO of OpenAI, who, when testifying before the US Congress about the risks of AI, recommended: “We need to spend some time talking about how we are going to confront those challenges.”

Indeed, both the open letter and Altman’s recommendation align with what Hans Jonas was arguing for as early as 1979, namely, that “the prophecy of doom is to be given greater heed than the prophecy of bliss” (1985, p. 31). AI systems (e.g., large language models) can help humanity in many ways, and prominent figures like Bill Gates have pointed out those benefits. Nevertheless, implementing AI, with its rapidly growing capabilities, carries multiple potential pitfalls. Some may sound dystopian and distant, like the eradication of human beings. Others are much more palpable: job displacement, bias and discrimination by AI systems, and privacy and security concerns.

For some, like Mo Gawdat (former chief business officer of Google X), we are already too late: the genie is out of the bottle. In an interview with Steven Bartlett, Gawdat claims, “We have given too much power to people which didn’t assume the responsibility (…) we have disconnected power from responsibility.” This echoes Jonas’s observation that the gap between the ability to foretell and the power to act lies at the heart of new moral problems. Is it too late, then, to act responsibly?

Part of the difficulty in answering lies in determining who is responsible. In the case of the Manhattan Project, several leading scientists bore responsibility for the consequences of developing the bomb: Robert Oppenheimer, Enrico Fermi, and Leo Szilard are just some of the names. But responsibility extends further. As historians point out, when Leo Szilard drafted a letter in 1939 and asked Albert Einstein to sign it, the intention was to warn President Franklin D. Roosevelt that Nazi Germany might develop such a weapon. Was the president responsible, then? Or was the bomb an inevitable consequence of an arms race at the dawn of war?

Concluding Remarks

A similar logic can be applied to the open letter’s call to pause AI development: someone with ill intentions will always continue, so, the argument goes, we should not stop either. In the case of AI, halting research is even more complicated than it might seem. Unlike uranium enrichment, which requires scarce materials and industrial facilities, language models can, at least at smaller scales, be trained in the basement of any house. Everyone is therefore responsible to varying degrees, from governments that could choose to tax the use of AI to offset job losses to the solo developer who decides to weigh the long-term effects of the software they write.

Lastly, a more intriguing question is whether artificial intelligence impairs the future of genuine life. Does a romantic relationship with an AI version of a Snapchat influencer come at the expense of genuine human connection? It is hard to say. Then again, is there anything natural or genuine about living in a city, carrying a smartphone wherever we go, and holding Zoom meetings across time zones? The whole imperative of responsibility seems to hinge on the meaning of “genuine.”

In any case, the most important lesson is that humanity should be aware of the possible long-term consequences of artificial intelligence. To end with Hans Jonas, we should stand on “the side of moderation and circumspection, of ‘beware!’ and ‘preserve!’” (1985, p. 204).

Literature

Coyne, L., & Hauskeller, M. (2019). Hans Jonas, Transhumanism, and What It Means to Live a «Genuine Human Life.» Revue Philosophique de Louvain, 117(2), 291–310.

Johnson, R., & Cureton, A. (2022). Kant’s Moral Philosophy. In The Stanford Encyclopedia of Philosophy (Fall 2022 ed.). https://plato.stanford.edu/archives/fall2022/entries/kant-moral/

Jonas, H. (1985). The Imperative of Responsibility: In Search of an Ethics for the Technological Age. University of Chicago Press.

¹ This claim from Jonas seems to refer especially to ethical systems in philosophy. In a number of proto-religious and religious traditions, by contrast, Nature clearly plays a leading role.