Will Fearless and Tireless Robots Lead to More Terrifying Wars?

While developing the nuclear bomb, Robert Oppenheimer and his colleagues expressed concerns about the possibility of igniting the Earth’s atmosphere. Today, with the emergence of autonomous weapons, we are faced with a similar risk of causing catastrophic damage by unleashing weapons that can kill without feeling fear. The consequences of unleashing such fearless weapons on the battlefield could be far more devastating than we can imagine. Indeed, humanity may come to miss the restraining and mitigating effects of fear, fatigue, and stress on the horrors of combat.

The proliferation of autonomous weapons will affect the future conduct of warfare. But we do not know how. After all, while new technologies are produced with instruction manuals, they do not come with strategy, doctrine, or tactics. Throughout military history, warfare has been wedded to humans who kill under the shadow of primordial danger and fear. People behave differently when they think they have a chance of dying. The combined psychological stressors of combat help produce friction, which can prevent the most intricately drawn “blue arrows” on any battle plan from coming to fruition. With this in mind, it is critical to consider how supplementing humans with autonomous weapons will impact the future face of battle.

Military technology may be on the cusp of a revolution that will forever change the face of warfare. Autonomous weapons, immune to the psychological factors of combat, are on the horizon and will usher in a new era of lethality. They will influence offensive and defensive operations and provide novel strategic options. The deployment of autonomous weapons has the potential to make warfare more efficient, but it also has the potential to make it more gruesome and terrible.

Eliminating Fear, Fatigue, Stress, and Hesitation?

Fear, fatigue, stress, and hesitation have long been the great impediments to war plans. But in the age of autonomous warfare, machines will be invulnerable to them. Many of us have seen or perhaps even produced those beautifully drawn blue arrows on a battle plan that move unflinchingly toward an objective. However, a stark difference exists between planning in the operations center and contact with the enemy. Battle plans can quickly fall apart for many reasons, but it comes down to the fact that humans are imperfect vessels of plans.

Through investments in rigorous training, modern militaries have developed ways to desensitize soldiers to the stress and shock of combat. Still, no training can replicate the actual dangers of war. By contrast, autonomous machines will not need live fire training to fabricate courage under fire. Instead, their courage will be programmed into their code.

Fatigue and stress, which have always impacted human armies, will be mitigated by autonomous weapons. A human unit loses effectiveness and needs rest the longer it is exposed to combat. Even in remote warfare, we have seen drone pilots still subject to the stresses of watching their targets for endless hours as well as the toll of killing, which can affect them in several ways, including post-traumatic stress disorder. Autonomous “warbots” will not need time to rest away from the vortex of combat. Their endurance will not be limited by a body that requires rest or therapy. Instead, they will only be limited by the availability of fuel and by the wear of their hardware.

Those of us who have personally experienced combat know that people can freeze or hesitate under fire. Freezing, or what is medically known as Acute Stress Reaction, can take soldiers out of a fight for anywhere from seconds or minutes to the duration of the action. Autonomous weapons, immune to stress, will not suffer from these psychological reactions inhibiting their performance. There will likely be little hesitation and an absence of freezing for our future autonomous comrades. Instead, the autonomous warriors will kill enemy combatants with the same ease as a speed camera taking a photo of a speeding vehicle.

Strategy, Offense, and Defense in Autonomous Warfare

Autonomous armies have the potential to permanently change the conduct of offensive and defensive operations, as well as the strategic options available. Wider use of autonomous killing is sure to open a Pandora’s Box, offering commanders and policymakers a tool whose ramifications, including its lethality, we can only attempt to prophesy. A 2017 Harvard Belfer Center report stated that lethal autonomous weapons may prove “as disruptive as nuclear weapons.” Platforms immune to reason, bargaining, pity, or fear will possess the ability to eliminate the psychological and physical stressors that have long prevented the most ingenious of plans from coming to fruition.

Human attacks can stall, break down, or halt altogether during offensive operations, long before the attacking force’s overall capabilities are exhausted. On the other hand, autonomous units on the offense will not stop after incurring massive casualties. Instead, they will advance until their programming orders otherwise. Lethal autonomous weapons will consistently achieve what planners want: giving the “blue arrows” their victory. They won’t be bogged down by the whizz of incoming bullets or by casualties. Autonomous weapons will not have to pause their attacks to establish medical evacuations. They will be able to sail, drive, or fly past the flaming hulks of their fellow platforms and continue to deliver death on an industrial scale.

The same factors should also be considered for defensive operations. Human units have historically surrendered or withdrawn long before their total capability to resist has dissolved. The human heart fails before a unit’s combat effectiveness. Autonomous platforms in the defense may prove even more lethal than machine guns and artillery were during World War I. On the defense, holding to the “last man” has long been the anomaly, such as in the storied accounts of Thermopylae or the Alamo. However, with autonomous platforms, fighting to the last machine will not be an exception but the norm.

Another aspect to consider in this new age of warfare is that autonomous weapons, besides possibly removing some of the fear and risk for combatants, may do the same for policymakers weighing strategic options. Perhaps policymakers will be less cautious about employing the military instrument of national power when lives are not at risk. The proliferation of autonomous weapons may also give states more staying power by sustaining popular will in the absence of human casualties, especially during small wars. There will likely not be protests to bring “our machines” home.

Can Regulation Save Us?

There are no international regulations for autonomous systems. In the U.S. military, soldiers are thoroughly trained on the law of armed conflict and are aware they do not have to follow unlawful orders. However, autonomous weapons will not disobey orders or succumb to humanitarian sentiment. Machines will kill whatever or whoever they are programmed to destroy, making them an attractive tool for would-be advocates of war crimes, authoritarian regimes, and architects of genocide. Authoritarian regimes will not have to worry about their forces hesitating to kill crowds of protestors. Instead, autonomous forces will destroy uprisings with a cold efficiency. The architects of genocide will not have to rely on highly radicalized troops or special facilities to commit mass atrocities.

Given the risks to international and humanitarian law, nation-states should seriously debate regulating and controlling the distribution of autonomous weapons internationally. One of the key challenges in regulating autonomous weapons is that the technology is advancing faster than we are able to control it. Currently, autonomous weapons are not regulated by International Humanitarian Law treaties. U.N. Secretary-General António Guterres has, for years, advocated the prohibition of lethal autonomous weapon systems and has called for a legally binding instrument to ban them. U.S. policy does not currently prohibit the development or employment of autonomous weapons. However, the United States is involved in an international discussion group known as the “Group of Governmental Experts,” which has considered proposals to regulate autonomous weapons. The Convention on Certain Conventional Weapons may have some helpful precedents to follow, such as its regulations seeking to limit the indiscriminate damage of landmines. However, in the absence of any international agreements on the control or regulation of autonomous weapon technology, there are currently no safeguards to prevent the spread of this technology to nation-states and non-state actors alike.

Conclusion

With the elements of fear, fatigue, stress, and hesitation limited or altogether removed, many of our attacks and defenses will achieve our bloody objectives with cold efficiency and speed never before seen on the field of battle. Among the many things in war that we should be wary of is killing that becomes too easy.

When the atomic bomb was tested, it turned out it did not ignite the Earth’s atmosphere. That fear ended up being misplaced or at least wrong. Are the dangers I point to here destined to be judged similarly? Perhaps. But only if we grapple with a core question and come up with real answers in the form of policy, regulation, and technological controls. That core question is this: Are we ready for this new revolution of warfare, which may unleash a new era of lethality — making warfare even more efficient, grotesque, and terrible?

Antonio Salinas is an active-duty Army officer and Ph.D. student in the Department of History at Georgetown University. Following his coursework, he will teach at the National Intelligence University. Salinas has 25 years of military service in the Marine Corps and the U.S. Army, during which he led soldiers in Afghanistan and Iraq. He is the author of Siren’s Song: The Allure of War and Boot Camp: The Making of a United States Marine.

The views expressed are those of the author and do not reflect the official position or policy of the Department of the Army, the Department of Defense, or the U.S. government.

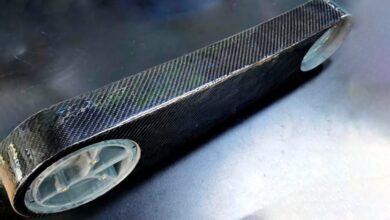

Image: Midjourney