Researchers at ServiceNow Propose a Machine Learning Approach to Deploy a Retrieval-Augmented LLM to Reduce Hallucination and Allow Generalization in a Structured Output Task

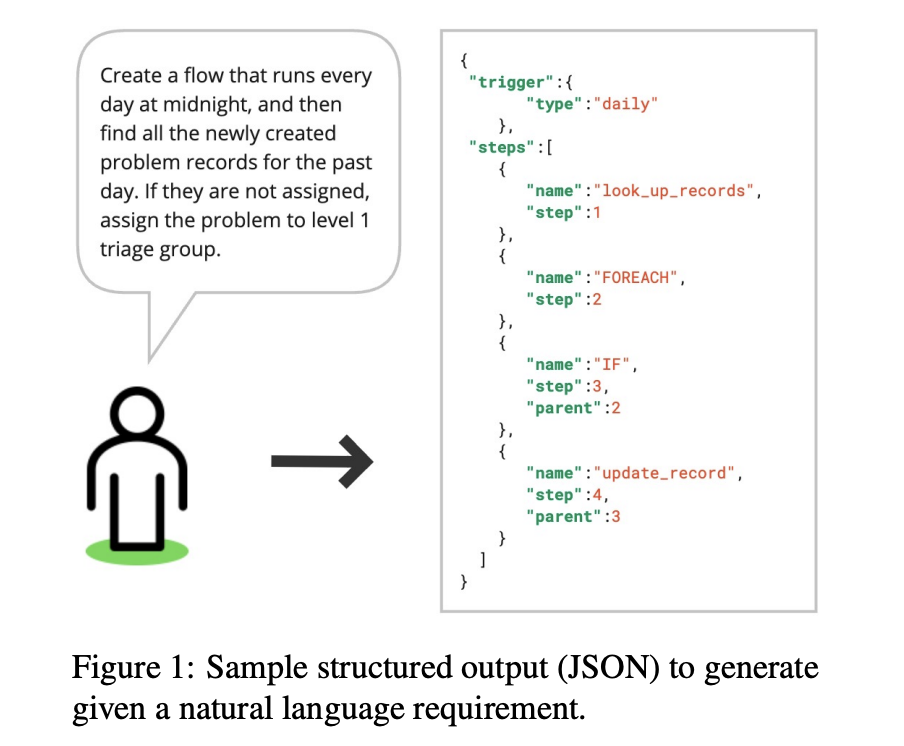

Large Language Models (LLMs) have made it commercially viable to perform tasks that produce structured outputs, such as converting natural language into code or SQL. LLMs are also being used to convert natural language into workflows, which are collections of actions with logical connections between them. These workflows boost worker productivity by packaging actions that run automatically when specified conditions are met.
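To make the idea of a workflow concrete, here is a minimal sketch of how one might be represented as structured data. The schema (a trigger plus ordered steps with optional conditions) and all names such as `incident_created`, `lookup_record`, and `send_email` are hypothetical illustrations, not the format used in the ServiceNow system.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Step:
    """One action in a workflow (hypothetical schema)."""
    name: str                                              # e.g. "send_email"
    inputs: dict[str, str] = field(default_factory=dict)   # parameters for the action
    condition: Optional[str] = None                        # run only if this holds; None = always


@dataclass
class Workflow:
    """A trigger plus an ordered list of steps (hypothetical schema)."""
    trigger: str
    steps: list[Step] = field(default_factory=list)

    def step_names(self) -> list[str]:
        return [s.name for s in self.steps]


# Requirement: "When an incident is created, look it up and, if it is high
# priority, email the assignment group."
wf = Workflow(
    trigger="incident_created",
    steps=[
        Step("lookup_record", {"table": "incident"}),
        Step("send_email", {"to": "assignment_group"}, condition="priority == 'high'"),
    ],
)

# The kind of structured output an LLM would be asked to produce, serialized as JSON.
print(json.dumps(asdict(wf), indent=2))
```

The ordering of `steps` and the optional `condition` field stand in for the "logical connections" between actions; a real schema would likely support branching and loops as well.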

Generative Artificial Intelligence (GenAI) has demonstrated impressive capabilities, particularly in tasks such as generating natural language from prompts. One major drawback, however, is that it often produces false or nonsensical outputs, known as hallucinations. As LLMs grow in importance, overcoming this limitation is increasingly essential for real-world GenAI systems to gain broad acceptance and use.

To address hallucinations while building an enterprise application that translates natural language requirements into workflows, a team of researchers from ServiceNow has created a system based on Retrieval-Augmented Generation (RAG), a technique known to improve the quality of structured outputs produced by GenAI systems.
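The general RAG pattern for this task can be sketched as follows: retrieve catalog entries relevant to the requirement, then place them in the prompt so the LLM is steered toward steps that actually exist. This is a minimal sketch under stated assumptions: the step catalog, its names, and the prompt wording are hypothetical, the word-overlap scorer is a deliberately simple stand-in for the trained retriever described in the research, and the LLM call itself is omitted.

```python
import re

# Hypothetical catalog of step names that the platform actually supports.
STEP_CATALOG = [
    "create_record",
    "update_record",
    "lookup_record",
    "send_email",
    "send_sms",
    "ask_for_approval",
]


def tokens(text: str) -> set[str]:
    """Lowercase alphabetic words; 'send_email' -> {'send', 'email'}."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, catalog: list[str], k: int = 3) -> list[str]:
    """Toy retriever: rank catalog entries by word overlap with the query.

    sorted() stays stable even with reverse=True, so ties keep catalog order.
    """
    q = tokens(query)
    ranked = sorted(catalog, key=lambda item: len(q & tokens(item)), reverse=True)
    return ranked[:k]


def build_prompt(requirement: str, catalog: list[str], k: int = 3) -> str:
    """Augment the LLM prompt with the retrieved suggestions."""
    suggestions = retrieve(requirement, catalog, k)
    lines = ["Generate the workflow as JSON.", "Use only these existing steps where possible:"]
    lines += [f"- {name}" for name in suggestions]
    lines.append(f"Requirement: {requirement}")
    return "\n".join(lines)


print(build_prompt("Ask the manager for approval, then send an email", STEP_CATALOG))
```

Because the suggested names come from the real catalog, the model has fewer opportunities to invent steps; that is the intuition behind using retrieval to curb hallucination in structured outputs.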

The team reports that incorporating RAG into the workflow-generation system significantly reduced hallucinations, making the generated workflows more reliable and useful. A further benefit is that the approach lets the LLM generalize to out-of-domain settings: the system can handle natural language inputs that differ from the patterns it saw during training, which makes it more adaptable across a range of situations.
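In a structured-output task, a hallucination can be made concrete: for example, a generated step name that does not exist on the platform. Assuming a hypothetical catalog of valid step names, one simple way to quantify this is the fraction of generated steps that are unknown. This is an illustrative metric, not necessarily the exact one reported in the paper.

```python
def hallucination_rate(generated_steps: list[str], catalog: set[str]) -> float:
    """Fraction of generated step names that are not in the catalog."""
    if not generated_steps:
        return 0.0  # nothing generated, so nothing hallucinated
    unknown = [name for name in generated_steps if name not in catalog]
    return len(unknown) / len(generated_steps)


# Hypothetical catalog and model output; "send_slack_dm" does not exist.
catalog = {"create_record", "lookup_record", "send_email", "ask_for_approval"}
output = ["lookup_record", "send_slack_dm", "send_email"]
print(hallucination_rate(output, catalog))  # 0.3333333333333333
```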

The team also showed that, thanks to RAG, pairing the LLM with a small, well-trained retriever allows the LLM itself to be made smaller without sacrificing performance. This reduction in model size means LLM-based deployments consume fewer resources, an important consideration in real-world applications where computing resources may be scarce.
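Part of what keeps a small retriever cheap is that, in a bi-encoder setup, catalog entries can be embedded once offline; at request time only the query is encoded and compared against the stored vectors. The sketch below assumes such a setup. The three-dimensional vectors are hypothetical placeholders for real encoder outputs, and the article does not specify which encoder or similarity function the team used.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k catalog entries whose embeddings are closest to the query."""
    scored = sorted(index.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [name for name, _ in scored[:k]]


# Hypothetical embeddings, computed once offline by the small retriever.
INDEX = {
    "send_email": [0.9, 0.1, 0.0],
    "send_sms": [0.5, 0.5, 0.0],
    "create_record": [0.0, 0.2, 0.9],
    "ask_for_approval": [0.1, 0.9, 0.2],
}

# Hypothetical embedding of the requirement "email the requester".
query = [0.8, 0.2, 0.1]
print(top_k(query, INDEX))  # ['send_email', 'send_sms']
```

Only `top_k` runs per request; the `INDEX` vectors are reused across all requests, so the per-query cost is one encoding plus one similarity computation per catalog entry.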

The team has summarised their primary contributions as follows.

- The team has shown that RAG can be applied to tasks beyond free-form text generation, demonstrating its effectiveness at generating workflows from plain-language requirements.

- Applying RAG significantly reduces hallucinations (false outputs) and helps produce better-structured, higher-quality outputs that faithfully reflect the intended workflows.

- By incorporating RAG into the system, the team has shown that a smaller LLM can be paired with a compact retriever model without compromising performance. This optimization lowers resource requirements and makes LLM-based workflow-generation systems more efficient to deploy.

In conclusion, this approach is a significant step toward addressing GenAI's hallucination problem. By using RAG and reducing the size of the accompanying model, the team has developed a reliable and efficient method for generating workflows from natural language requirements, opening the door to wider use of GenAI systems in enterprise settings.

Check out the Paper. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with strong analytical and critical-thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.