The health risks of generative AI-based wellness apps

Catsaros, O. Generative AI to become a $1.3 trillion market by 2032, research finds. Bloomberg https://www.bloomberg.com/company/press/generative-ai-to-become-a-1-3-trillion-market-by-2032-research-finds/ (1 June 2023).

Kanagaraj, M. Here’s why mental healthcare is so unaffordable & how COVID-19 might help change this. Harvard Medical School Primary Care Review https://info.primarycare.hms.harvard.edu/review/mental-health-unaffordable (2020).

Terlizzi, E. P. & Schiller, J. S. Mental health treatment among adults aged 18–44: United States, 2019–2021. National Center for Health Statistics https://www.cdc.gov/nchs/data/databriefs/db444.pdf (2022).

Lavingia, R., Jones, K. & Asghar-Ali, A. A. A systematic review of barriers faced by older adults in seeking and accessing mental health care. J. Psychiatr. Pract. 26, 367–382 (2020).

Barney, L. J., Griffiths, K. M., Jorm, A. F. & Christensen, H. Stigma about depression and its impact on help-seeking intentions. Aust. N. Z. J. Psychiatry 40, 51–54 (2006).

Kakuma, R. et al. Human resources for mental health care: current situation and strategies for action. Lancet 378, 1654–1663 (2011).

De Freitas, J. & Tempest Keller, N. Replika AI: monetizing a chatbot. Harvard Business School Case 523-016 (2022).

Sung, M. Blush, the AI lover from the same team as Replika, is more than just a sexbot. TechCrunch https://techcrunch.com/2023/06/07/blush-ai-dating-sim-replika-sexbot/ (7 June 2023).

US Food and Drug Administration. How to determine if your product is a medical device. FDA https://www.fda.gov/medical-devices/classify-your-medical-device/how-determine-if-your-product-medical-device (2022).

21 U.S.C. § 360e — Premarket Approval https://www.law.cornell.edu/uscode/text/21/360e# (2023).

Office of Product Evaluation and Quality Template. FDA Summary of Safety and Effectiveness Data template. FDA https://www.fda.gov/media/113810/download (2023).

Sherkow, J. S. & Aboy, M. The FDA de novo medical device pathway, patents and anticompetition. Nat. Biotechnol. 38, 1028–1029 (2020).

21 U.S.C. § 360c — Classification of Devices Intended for Human Use https://www.law.cornell.edu/uscode/text/21/360c (2023).

Simon, D. A., Shachar, C. & Cohen, I. G. Skating the line between general wellness products and regulated devices: strategies and implications. J. Law Biosci. 9, lsac015 (2022).

Center for Devices and Radiological Health, US Food and Drug Administration. General wellness: policy for low risk devices. FDA https://www.fda.gov/regulatory-information/search-fda-guidance-documents/general-wellness-policy-low-risk-devices (2019).

De Freitas, J., Agarwal, S., Schmitt, B. & Haslam, N. Psychological factors underlying attitudes toward AI tools. Nat. Hum. Behav. 7, 1845–1854 (2023).

Vaidyam, A. N., Wisniewski, H., Halamka, J. D., Kashavan, M. S. & Torous, J. B. Chatbots and conversational agents in mental health: a review of the psychiatric landscape. Can. J. Psychiatry 64, 456–464 (2019).

Abd-Alrazaq, A. A. et al. An overview of the features of chatbots in mental health: a scoping review. Int. J. Med. Inform. 132, 103978 (2019).

Abd-Alrazaq, A. A., Rababeh, A., Alajlani, M., Bewick, B. M. & Househ, M. Effectiveness and safety of using chatbots to improve mental health: systematic review and meta-analysis. J. Med. Internet Res. 22, e16021 (2020).

Sweeney, C. et al. Can chatbots help support a person’s mental health? Perceptions and views from mental healthcare professionals and experts. ACM Trans. Comput. Healthc. 2, 25 (2021).

Kretzschmar, K. et al. Can your phone be your therapist? Young people’s ethical perspectives on the use of fully automated conversational agents (chatbots) in mental health support. Biomed. Inform. Insights 11, 1178222619829083 (2019).

Boucher, E. M. et al. Artificially intelligent chatbots in digital mental health interventions: a review. Expert Rev. Med. Devices 18, 37–49 (2021).

Gould, C. E. et al. Veterans Affairs and the Department of Defense mental health apps: a systematic literature review. Psychol. Serv. 16, 196–207 (2019).

Bendig, E., Erb, B., Schulze-Thuesing, L. & Baumeister, H. The next generation: chatbots in clinical psychology and psychotherapy to foster mental health—a scoping review. Verhaltenstherapie 32, 64–76 (2019).

Johansson, R. et al. Tailored vs. standardized internet-based cognitive behavior therapy for depression and comorbid symptoms: a randomized controlled trial. PLoS ONE 7, e36905 (2012).

Norcross, J. C. & Wampold, B. E. What works for whom: tailoring psychotherapy to the person. J. Clin. Psychol. 67, 127–132 (2011).

Alkaissi, H. & McFarlane, S. I. Artificial hallucinations in ChatGPT: implications in scientific writing. Cureus 15, e35179 (2023).

Eysenbach, G. The role of ChatGPT, generative language models, and artificial intelligence in medical education: a conversation with ChatGPT and a call for papers. JMIR Med. Educ. 9, e46885 (2023).

Babic, B., Gerke, S., Evgeniou, T. & Cohen, I. G. Beware explanations from AI in health care. Science 373, 284–286 (2021).

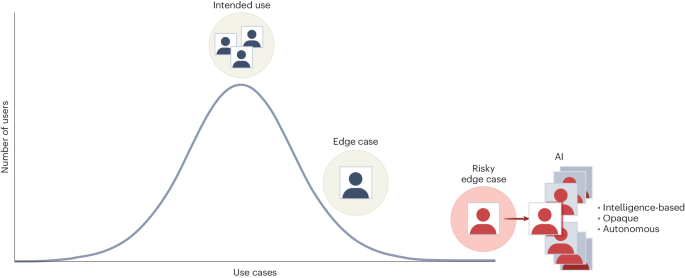

De Freitas, J., Uğuralp, A. K., Uğuralp, Z.-O. U. & Puntoni, S. Chatbots and mental health: insights into the safety of generative AI. J. Consum. Psychol. 00, 1–11 (2023).

Walker, L. Belgian man dies by suicide following exchanges with chatbot. The Brussels Times https://www.brusselstimes.com/430098/belgian-man-commits-suicide-following-exchanges-with-chatgpt (28 March 2023).

Atillah, I. E. Man ends his life after an AI chatbot ‘encouraged’ him to sacrifice himself to stop climate change. euronews.next https://www.euronews.com/next/2023/03/31/man-ends-his-life-after-an-ai-chatbot-encouraged-him-to-sacrifice-himself-to-stop-climate- (31 March 2023).

Haupt, C. E. & Marks, M. AI-generated medical advice—GPT and beyond. J. Am. Med. Assoc. 329, 1349–1350 (2023).

Medtronic, Inc. v. Lohr, 518 U.S. 470 (1996).

Riegel v. Medtronic, Inc., 552 U.S. 312 (2008).

Opel, D. J., Kious, B. M. & Cohen, I. G. AI as a mental health therapist for adolescents. JAMA Pediatr. 177, 1253–1254 (2023).

Corker, E. et al. Experiences of discrimination among people using mental health services in England 2008–2011. Br. J. Psychiatry Suppl. 202, s58–s63 (2013).