How Do AI Chatbots Work? Northeastern Researchers Take a Look

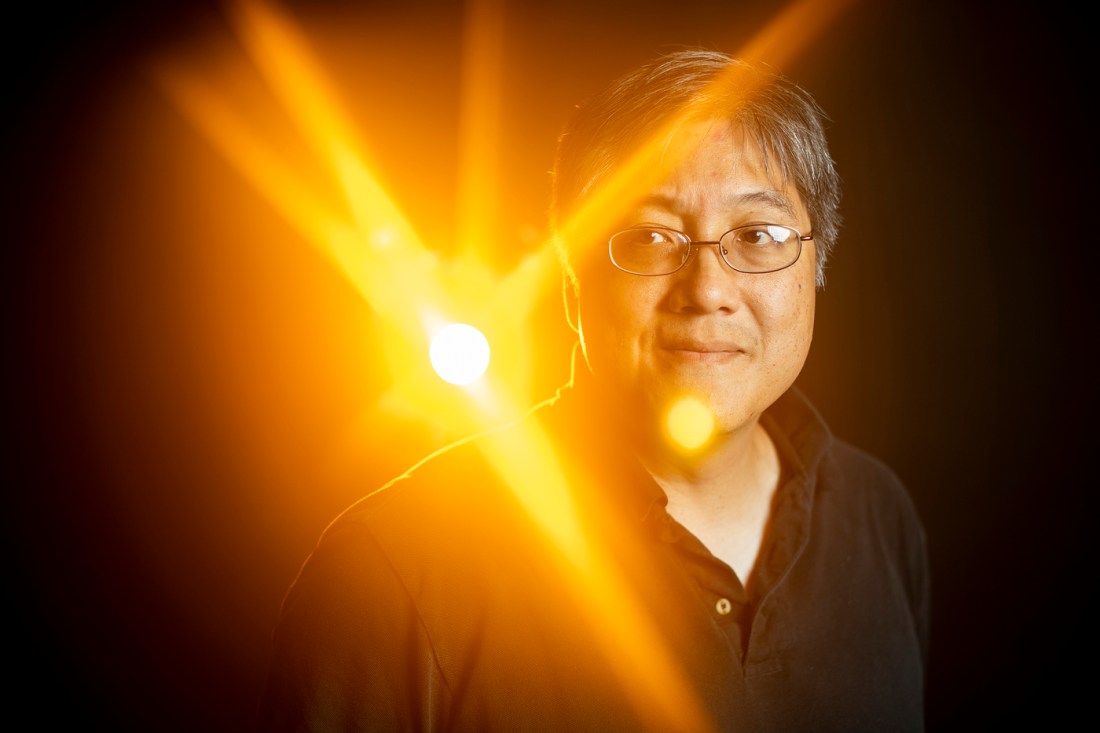

Computer science professor David Bau is the lead principal investigator of the National Deep Inference Fabric, a revolutionary new project involving industry and academic partners aimed at unlocking the secrets of AI.

Groundbreaking research by Northeastern University will investigate how generative AI works and provide industry and the scientific community with unprecedented access to the inner workings of large language models.

Backed by a $9 million grant from the National Science Foundation (NSF), Northeastern will lead the National Deep Inference Fabric (NDIF), which aims to open up the inner workings of large language models.

The project will create a computational infrastructure that equips the scientific community with deep inference tools for developing innovative solutions across fields. No infrastructure with this capability currently exists.

At a fundamental level, large language models such as OpenAI’s ChatGPT or Google’s Gemini are considered “black boxes,” which limits the ability of researchers and companies across multiple sectors to leverage large-scale AI.

Sethuraman Panchanathan, director of the NSF, says the impact of NDIF will be far-reaching.

“Chatbots have transformed society’s relationship with AI, but how they operate is yet to be fully understood,” Panchanathan says. “With NDIF, U.S. researchers will be able to peer inside the ‘black box’ of large language models, gaining new insights into how they operate and greater awareness of their potential impacts on society.”

Even the sharpest minds in artificial intelligence are still trying to wrap their heads around how these and other neural network-based tools reason and make decisions, explains David Bau, a computer science professor at Northeastern and the lead principal investigator for NDIF.

“We fundamentally don’t understand how these systems work, what they learned from the data, what their internal algorithms are,” Bau says. “I consider it one of the greatest mysteries facing scientists today — what is the basis for synthetic cognition?”

David Madigan, Northeastern’s provost and senior vice president for academic affairs, says the project will “help address one of the most pressing socio-technological problems of our time — how does AI work?”

“Progress toward solving this problem is clearly necessary before we can unlock the massive potential for AI to do good in a safe and trustworthy way,” Madigan says.

NDIF aims to democratize AI

In addition to establishing an infrastructure that will open up the inner workings of these AI models, NDIF aims to democratize AI by expanding access to large language models.

Northeastern is building an open software library of neural network tools that will let researchers conduct experiments without having to supply their own computing resources, along with educational materials that teach them how to use NDIF.

The project will build an AI-enabled workforce by training scientists and students to serve as a network of experts who will, in turn, train users across disciplines.

“There will be online and in-person educational workshops that we will be running, and we’re going to do this geographically dispersed at many locations taking advantage of Northeastern’s physical presence in a lot of parts of the country,” Bau says.

Research emerging from the fabric could have worldwide implications outside of science and academia, Bau explains. It could help demystify how these systems work for policymakers, creatives and others.

“The goal of understanding how these systems work is to equip humanity with a better understanding for how we could effectively use these systems,” Bau says. “What are their capabilities? What are their limitations? What are their biases? What are the potential safety issues we might face by using them?”

Putting AI through an MRI machine

Large language models like ChatGPT and Google’s Gemini are trained on huge amounts of data using deep learning techniques. Underlying these techniques are neural networks, computational systems loosely modeled on the activity of the human brain, which enable these chatbots to make decisions.

But when you use these services through a web browser or an app, you are interacting with them in a way that obscures these processes, Bau says.

“They give you the answers, but they don’t give you any insights as to what computation has happened in the middle,” Bau says. “Those computations are locked up inside the computer, and for efficiency reasons, they’re not exposed to the outside world. And so, the large commercial players are creating systems to run AIs in deployment, but they’re not suitable for answering the scientific questions of how they actually work.”

At NDIF, researchers will be able to take a deeper look at the neural pathways these chatbots use, Bau says, seeing what’s going on under the hood while the AI models actively respond to prompts and questions.

Researchers won’t have direct access to OpenAI’s ChatGPT or Google’s Gemini, because those companies haven’t opened up their models for outside research. Instead, they will be able to access open-source AI models from companies such as Mistral AI and Meta.

“What we’re trying to do with NDIF is the equivalent of running an AI with its head stuck in an MRI machine, except the difference is the MRI is in full resolution. We can read every single neuron at every single moment,” Bau says.
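To make the “read every neuron” idea concrete, here is a minimal, hypothetical sketch in plain Python. It is not NDIF’s software or API: `TinyNet`, `register_hook` and the layer names are invented for illustration, and real experiments run on models with billions of parameters using frameworks such as PyTorch. The point it shows is that an observer attached to each layer can record intermediate activations during a forward pass, which an ordinary chatbot interface never exposes.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]


class TinyNet:
    """Hypothetical two-layer network used only to illustrate activation capture."""

    def __init__(self, w1: List[Vector], w2: List[Vector]) -> None:
        self.w1 = w1  # hidden-layer weights: one row per hidden neuron
        self.w2 = w2  # output-layer weights: one row per output
        self.hooks: Dict[str, List[Callable[[Vector], None]]] = {}

    def register_hook(self, layer: str, fn: Callable[[Vector], None]) -> None:
        # Attach an observer that sees a layer's activations as they are computed.
        self.hooks.setdefault(layer, []).append(fn)

    def _emit(self, layer: str, acts: Vector) -> None:
        for fn in self.hooks.get(layer, []):
            fn(list(acts))  # pass a copy so observers cannot alter the forward pass

    def forward(self, x: Vector) -> Vector:
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in self.w1]
        self._emit("hidden", hidden)
        out = [sum(w * h for w, h in zip(row, hidden)) for row in self.w2]
        self._emit("output", out)
        return out


# Usage: record every neuron's value while the network answers one input.
net = TinyNet(w1=[[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]], w2=[[1.0, 1.0, 1.0]])
trace: Dict[str, Vector] = {}
net.register_hook("hidden", lambda a: trace.__setitem__("hidden", a))
net.register_hook("output", lambda a: trace.__setitem__("output", a))
result = net.forward([0.5, 0.5])
print(trace)
```

A user who only calls `forward` sees `result`; the hooks additionally reveal the three hidden-neuron values that produced it.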

But how are they doing this?

Significant computational power required

Such an operation requires significant computing power. As part of the undertaking, Northeastern has teamed up with the University of Illinois Urbana-Champaign, which is building data centers equipped with state-of-the-art graphics processing units (GPUs) at the National Center for Supercomputing Applications (NCSA). NDIF will leverage the resources of the NCSA DeltaAI project.

NDIF will partner with New America’s Public Interest Technology University Network, a consortium of 63 universities and colleges, to ensure that the new NDIF research capabilities advance interdisciplinary research in the public interest.

Northeastern is building the software layer of the project, Bau says.

“The software layer is the thing that enables the scientists to customize these experiments and to share these very large neural networks that are running on this very fancy hardware,” he says.

Northeastern professors Jonathan Bell, Carla Brodley, Byron Wallace and Arjun Guha are co-principal investigators on the initiative.

Guha explains the barriers that have hindered research into the inner workings of large generative AI models until now.

“Conducting research to crack open large neural networks poses significant engineering challenges,” he says. “First of all, large AI models require specialized hardware to run, which puts the cost out of reach of most labs. Second, scientific experiments that open up models require running the networks in ways that are very different from standard commercial operations. The infrastructure for conducting science on large-scale AI does not exist today.”
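Guha’s second point, that scientific experiments run networks differently from commercial deployments, can be illustrated with a small, hypothetical sketch. Here a researcher edits a layer’s activations in the middle of a run (a zero-ablation, a common interpretability technique) and compares the result with an unmodified baseline. The functions `run` and `ablate` and the toy weights are assumptions made for this example, not part of NDIF; a production inference server typically offers no such mid-computation edit point.

```python
import math
from typing import Callable, Dict, List, Optional

Vector = List[float]
Intervention = Callable[[Vector], Vector]


def linear(weights: List[Vector], x: Vector) -> Vector:
    # One output per weight row: the dot product of that row with the input.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def run(layers: List[List[Vector]], x: Vector,
        interventions: Optional[Dict[int, Intervention]] = None) -> Vector:
    """Run a hypothetical stack of tanh layers, optionally editing a layer's output."""
    interventions = interventions or {}
    acts = x
    for i, weights in enumerate(layers):
        acts = [math.tanh(v) for v in linear(weights, acts)]
        if i in interventions:
            acts = interventions[i](list(acts))  # experiment: replace activations mid-run
    return acts


def ablate(neuron: int) -> Intervention:
    # Build an intervention that silences one neuron by setting it to zero.
    def edit(acts: Vector) -> Vector:
        acts[neuron] = 0.0
        return acts
    return edit


layers = [
    [[1.0, 0.0], [0.0, 1.0]],  # layer 0: two hidden neurons
    [[1.0, 1.0]],              # layer 1: one output neuron
]
baseline = run(layers, [0.5, 0.5])
ablated = run(layers, [0.5, 0.5], {0: ablate(1)})
effect = baseline[0] - ablated[0]  # how much the output depended on neuron 1
print(baseline, ablated, effect)
```

Comparing `baseline` with `ablated` measures how much the output relied on a single internal neuron, the kind of question that requires access to the computation “in the middle.”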

NDIF will have implications beyond the academic scientific community. The social sciences and humanities, as well as neuroscience, medicine and patient care, can all benefit from the project.

“Understanding how large networks work, and especially what information informs their outputs, is critical if we are going to use such systems to inform patient care,” Wallace says.

NDIF will also prioritize the ethical use of AI with a focus on social responsibility and transparency. The project will include collaboration with public interest technology organizations.