Notice To Employers: Be Careful – Your Employees Might Be Using Generative AI In The Workplace

Introduction

Artificial intelligence promises new waves of efficiency and productivity in the workplace, but it also exposes employers to operational, reputational, and legal risks if unauthorized use goes unmanaged.

Generative AI platforms such as ChatGPT, Google Gemini, and Microsoft Copilot are available free of charge, which puts them at employees' fingertips.

Surveys conducted by KPMG and Salesforce show that generative AI is already widely used in Canadian workplaces, often without employers' knowledge.

Without a comprehensive policy governing the internal use of AI, employees' unauthorized use of these tools could jeopardize an organization's data security and intellectual property and expose it to liability and reputational damage.

Generative AI Use in the Workplace

KPMG recently conducted a Generative AI Adoption survey of more than 4,500 Canadians. It found that one in five Canadians are using generative AI daily, and that 61% of those users turn to the technology multiple times per week for work purposes.

Salesforce also recently surveyed 14,000 workers worldwide, including over 1,000 in Canada. The findings show that 28% of professionals are using generative AI at work. Of those professionals, 55% are doing so without their employer's approval.

Generative AI tools offer significant benefits, such as enhanced efficiency, creativity, and problem-solving capabilities. They also introduce a range of challenges and uncertainties, particularly when they are integrated into the workplace.

Employer Concerns with Generative AI

Despite the growing buzz around generative AI, many

organizations remain on the fringes and are contemplating its

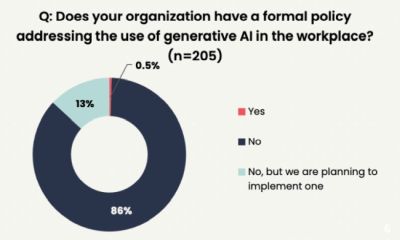

integration. In 2023, the Human Resources Professional Association

(HRPA) ran a pulse survey of its membership to

gain insights into their attitudes and behaviours regarding the use

of generative AI technology in the workplace. 205 respondents

across numerous industries participated in the survey.

While 67% of respondents indicated their organizations had no

plans to use generative AI technology, 47% of respondents were

either concerned or potentially concerned about employees using

generative AI in an unauthorized way to carry out their work

tasks.

Source: Generative AI in the

Workplace – HRPA Survey Report, 2023

Many organizations may not plan to implement generative AI, but the results of the KPMG and Salesforce surveys suggest that their employees may have other ideas.

As the artificial intelligence landscape rapidly expands, one term has gained increasing attention: Democratized Generative AI. The term describes a shift from AI being a tool of the few to a resource of the many. This poses a particular challenge for employers. Free and easily accessible generative AI tools give employees without technical backgrounds or training access to a resource that presents significant risks if misused.

According to the HRPA, respondents who were concerned about unauthorized use of generative AI in the workplace commonly cited three reasons: privacy and security risks, lack of originality and plagiarism, and poor output from generative AI tools.

Risks with Generative AI

Misuse of generative AI by employees can have significant legal, ethical, and operational consequences. Illustrative examples include:

- Intellectual Property and Data Security: Without explicit guidelines, employees might unwittingly compromise sensitive information, leading to legal, financial, and reputational damage.
- Ethical and Legal Concerns: The absence of ethical guidelines could result in the creation of content that infringes copyright or perpetuates bias, attracting legal scrutiny and eroding public trust.
- Operational Inefficiency: The lack of a coherent AI use policy may result in inconsistent and inefficient operations, diminishing the potential benefits of generative AI technologies.

Although 47% of respondents expressed concern, or potential concern, about employees using generative AI without authorization, only 0.5% reported that their organizations had a formal policy in place addressing the use of generative AI in the workplace.

Source: Generative AI in the Workplace – HRPA Survey Report, 2023

Whether or not an organization intends to use generative AI in its operations, the wide range of tools now available is highly appealing to employees. Organizations may therefore be exposed to the risks of generative AI without realizing it. This is a critical blind spot in organizational strategy.

Implementing Generative AI in the Workplace

Implementing a comprehensive Generative AI Workplace Policy not only mitigates legal and operational risks but also promotes ethical use and protects the organization's intellectual property and data security. It offers a framework for capturing the benefits of generative AI while maintaining control over how it is deployed and used.

The planned implementation of Canada's Artificial Intelligence and Data Act (AIDA) underscores the urgency for employers to recognize and address the use of generative AI in the workplace.

AIDA is expected to introduce a risk-based regulatory framework. It would require employers to assess and mitigate the risks associated with high-impact AI systems, a category that could include generative AI tools used by staff. With requirements for transparency, data anonymization, and robust accountability frameworks, AIDA would likely sharpen the legal and ethical responsibilities surrounding AI considerably.

The Act is also expected to carry substantial penalties for non-compliance, reinforcing the legal and financial imperative to manage AI use.

The gap between an employer's perceived and actual risk exposure is particularly relevant here. Even if a business does not officially deploy generative AI, it may still be liable for its employees' use of such technologies. Developing comprehensive AI governance policies is therefore not just prudent but necessary to navigate the complex landscape AIDA is expected to establish.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.