UK Unveils First-Ever State-Backed AI Safety Tool

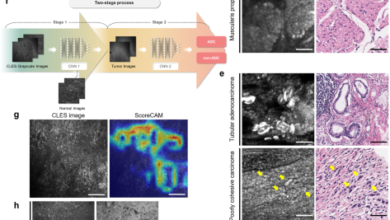

The U.K. has debuted what it calls a landmark toolset for artificial intelligence (AI) safety testing.

The new offering, announced Friday (May 10) by the country’s AI Safety Institute, is dubbed “Inspect.” It is a software library that lets testers, from startups, academics and AI developers to international governments, assess specific capabilities of individual AI models and then generate a score based on the results.

According to an institute news release, Inspect is the first AI safety testing platform overseen by a state-backed body and released for wider use.

“As part of the constant drumbeat of U.K. leadership on AI safety, I have cleared the AI Safety Institute’s testing platform — called Inspect — to be open sourced,” said Michelle Donelan, the U.K.’s secretary of state for science, innovation and technology.

“This puts U.K. ingenuity at the heart of the global effort to make AI safe, and cements our position as the world leader in this space.”

The announcement comes a little more than a month after the British and American governments pledged to work together on safe AI development, agreeing to collaborate on tests for the most advanced AI models.

“AI continues to develop rapidly, and both governments recognize the need to act now to ensure a shared approach to AI safety which can keep pace with the technology’s emerging risks,” the U.S. Department of Commerce said at the time.

The two countries also agreed to form alliances with other nations to foster AI safety around the world, with plans to hold at least one joint test on a publicly accessible model and “tap into a collective pool of expertise by exploring personnel exchanges” between their AI safety institutes.

The partnership follows commitments made at the AI Safety Summit in November of last year, where world leaders explored the need for global cooperation in combating the potential risks associated with AI technology.

“This new partnership will mean a lot more responsibility being put on companies to ensure their products are safe, trustworthy and ethical,” AI ethics evangelist Andrew Pery of global intelligent automation company ABBYY told PYMNTS soon after the collaboration was announced.

“The inclination by innovators of disruptive technologies is to release products with a ‘ship first and fix later’ mentality to gain first-mover advantage. For example, while OpenAI is somewhat transparent about the potential risks of ChatGPT, they released it for broad commercial use with its harmful impacts notwithstanding.”
