The Big Inworld AI Q&A

Shortly after generative AI became the hottest topic in pretty much every industry, Inworld started making headlines in 2023 with its Character Engine technology that was first implemented in a series of PC games (Mount and Blade II: Bannerlord, The Elder Scrolls V: Skyrim, and Grand Theft Auto V) by modder Bloc.

A few months later, Inworld announced a new round of funding from big investors (including Microsoft, Samsung, and LG) that brought the company’s total valuation to $500 million. The gaming industry’s attention toward their dynamic NPCs technology was confirmed when Microsoft revealed a partnership to develop a multiplatform AI-powered toolset for game creators. Then, at the GDC 2024 event in San Francisco, California, Inworld stole the limelight with three demos created with NVIDIA, Microsoft, and Ubisoft.

Following that event, I contacted Inworld to set up an interview to cover these high-profile partnerships, the future roadmap of their technology, and potential issues for studios and end users, such as costs. I talked to Nathan Yu, General Manager of Inworld’s Labs, for around half an hour; you can read the whole transcript of our conversation below.

At GDC 2024, I wrote that Inworld AI ‘blew up’, which was not at all an exaggeration from my point of view. You pretty much stole the show by presenting three demos made with big partners like Microsoft, NVIDIA, and Ubisoft. What was it like?

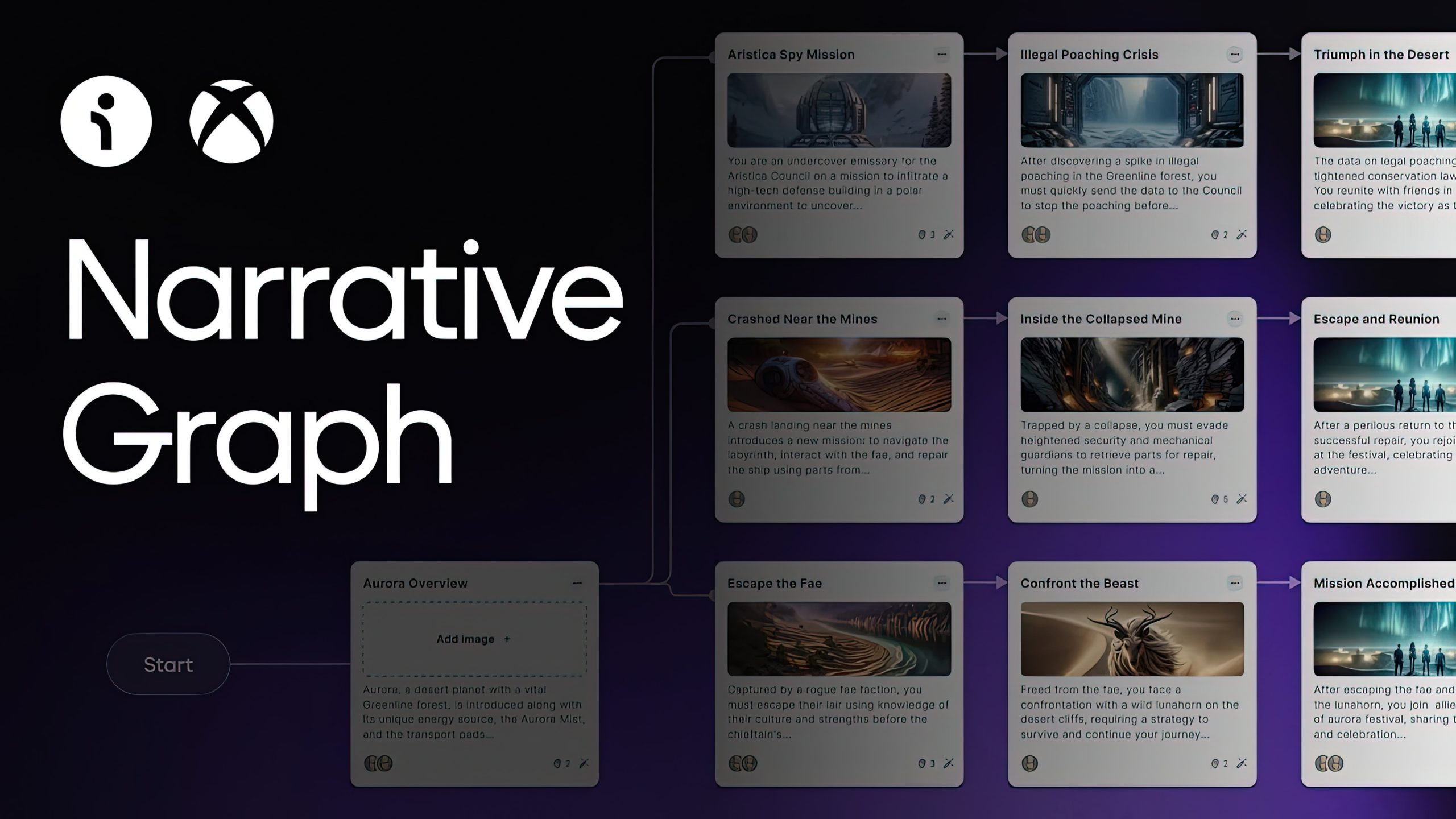

Overall, it was incredible. Under the hood, we’ve been working on all three fronts with Ubisoft, NVIDIA, and Microsoft for a while now. It was great to see them all land solidly at GDC. They all push the boundaries of our technology in different areas, with Ubisoft specifically going beyond dialogue as a key theme that we’ve been focusing on at Inworld. How does AI play a more integral role in core gameplay mechanics and player progression? We really started to see a lot of those nuances come out with NEO NPCs. Microsoft is obviously a huge partner working on the Narrative Graph and design tools with our Mists of Aurora demo. So it was great to see a different type of angle, not all focused on just the runtime generation, but also the design time and narrative branching tools we can have.

And then finally, with NVIDIA and Covert Protocol, experimenting with how modular Inworld technology can be with NVIDIA Microservices such as Audio2Face, the Riva ASR, etc. It’s all been really fantastic to see from a high level.

How many employees do you currently have at Inworld AI? I’m guessing most of them are engineers.

At Inworld, we’ve grown exponentially in the past year, not just from a product perspective but in team size as well. We’re almost at 100 staff members now, most of whom are engineers.

I lead our Labs organization, where we actually have dedicated resources both on the PM (partner management) and engineering side to support these efforts. This is early technology, right? It’s not robust, standard, off-the-shelf technology that you can buy and run with on your own.

There’s a lot of co-development that Inworld actively engages in with these partners, so we’re still hiring even today for these roles. But yeah, we’ve been preparing for it for a long time, and we’ll continue to grow to meet those needs.

What are your roadmap’s key goals for 2024 and beyond?

There’s so much to unpack there. We actually did a product webinar in the town hall just a few weeks before GDC, where we touched on some of the upcoming themes that we’ll be working on in 2024 and beyond. You’ll see maybe three core buckets on how our product will evolve. First and foremost, and this actually aligns nicely with the three pillars at Inworld, which we call the Inworld engine, the Inworld studio, and then Inworld core. The Inworld engine is what people think of when they think of Inworld today: what powers runtime character dialogue, their cognition. We’ll see a lot of evolution beyond just the dialogue itself, so taking into consideration the perception of the environment, the context of what the actual game actions are, giving Inworld characters the proactivity to take actions on their own based off of what a developer specifies and to push player progression forward. That’s a key major theme we’re pushing on there. Within the dialogue, you’ll see a lot of continued improvements just with improved realism, naturalism, expression, dialogue controls of nuance, length, and whatnot.

On the studio side, we’re gonna see an official launch of all of our narrative graph tools, with developers able to iterate quickly on different character and plot designs to see how stories or gameplay loops might unfold and use that either as a part of the design process or even as a part of the actual gameplay flow.

Finally, when it comes to the core of the technology, there’s a lot of deep investments we’re making in lowering costs, optimizing latency, and enabling fine-tuning for all partners, not just our closed enterprise customers. That’s it from a high level.

Internationalization is a big one that’s touched on a lot, too. We have early support already for Mandarin and Korean and we will be rolling out Japanese just in the next month or two here for early access as well. By the end of the year, we’re aiming to support most of the top languages.

One of the main drawbacks of some of the implementations we’ve seen so far of generative AI for NPCs is that they are just surface-level. What would be really interesting and potentially game-changing is to integrate the feature at a game design level to ensure dynamic interaction with NPCs actually changes the world and plot.

Yes, absolutely. We’re just scratching the surface here today, right? But that’s absolutely the vision. The analogy I like to give is – earlier, you mentioned you’ve been following Inworld by way of the Bannerlord mod, Skyrim mod, and GTA V mod. The latter was a bit different because we did actually structure gameplay around it.

But the first two, you can look at it and think, yeah, this is cool. You can chat with the characters in Skyrim and ask the guard about what happened when he took an arrow to the knee, but that’s fun for maybe five minutes. It doesn’t really make it a better game at the end of the day.

Right now, we’re at this position where we realized this, and it’s also kind of like a chicken and egg problem. We believe that the true transformative games are gonna come from where we actually design the gameplay around the AI capabilities from the get-go. You can’t just add a mod to a game because the game design is already baked in. You’re not necessarily introducing entirely new ways to engage with the player just by adding the NPC AI capabilities.

It’s gonna be a much longer time before we see these AAA titles launch. But there are smaller efforts where we’ll start to see it, such as Ubisoft’s NEO NPCs, where it goes beyond the dialogue. They have an awareness of what you’re doing, and they can take action on their own.

Another analogy I like to give is that it’s so unnatural when a character is there just almost waiting for you. Like, they stand there, but they don’t do anything. They just t-pose and then when you go to talk to them and only when you talk to them, they respond to you. That’s not how it should work.

You’ll see where we’re giving more lifelike behavior to the characters where they are absolutely doing things on their own. If you’re not talking to them, they’re gonna be like, okay, man, I gotta go, this is awkward. We’re just standing here while you’re thinking of what to say. There’s a lot of features we’re building out to support that better.

Is your goal with Inworld AI to be a product that developers can leverage on their own without your direct help?

That’s a good question. Long term, for sure, it’s gonna be an off-the-shelf tool that developers can take and customize on their own. Even today, you can.

I’d say there’s maybe two different aspects of it. One is just a knowledge gap concern. If you were an Inworld expert and knew how to leverage all of the beta features that we unlocked, you could get very, very, very far.

But this technology is still early, and not many people understand the nuances around it or how to set it up the best way. That’s where partner engineering solutions come in. But I do believe it’s gonna be democratized a lot more where people will be able to get 90% of the way by themselves. Also, for large AAA companies with bespoke needs, we’ll continue to work closely with them in a co-development fashion.

Usually, triple-A companies wait a bit before diving into new technologies. However, it seems like they are experimenting with generative AI NPCs even at this early stage. Why do you think that is?

Everyone sees the potential of generative AI. It’s a little bit different from the Web 3 crypto craze in that there’s tangible value today, and everyone gets it. Even at the large triple-A companies, there are executive mandates and general excitement about how to lean into this technology and explore opportunities for collaboration and innovation there.

I’d say, of course, we don’t see any great shipped use cases today. Maybe there are a few small ones I could point to. But yeah, it’s gonna be a bit of a chicken and egg. I don’t think there’s gonna be a ton of R&D that’s allocated from these studios as well. Maybe it depends on the innovation capacity and appetite. But we all know it takes such a long time to make these big games. It also differs from studio to studio.

But I would say there are some that are still waiting for those reference use cases to be built up by either another AAA or indie developer. But there are also others that are ready to engage today just given the confidence from what they’ve seen already.

The partnership with Microsoft is peculiar because they are crafting a whole copilot-like tool based on Inworld AI for game designers, which suggests they’re looking to use it themselves first and foremost.

That would be the intention. I can’t share too much there, but stay tuned to see which studios we’ll be working with. The word copilot is so broad, and I don’t like to use it. But of course, there are a lot of benefits we see from helping designers iterate. One key learning that we’ve had actually and was mentioned by the narrative writers that we work with is that it’s a different type of work that’s needed now. It’s not AI replacing writing at all. We know it’s not capable of creating good gameplay and the act of even generating a character is more of like a director type of job as opposed to a writer.

You’re not just responsible for writing the four lines of dialogue that get integrated into the game, but you have to create a brain that’s then capable of generating those four or 400 lines of dialogue in the best way. So it’s very interesting. But it’s both, right? We’ve seen a lot of productivity ideas sparked by those tools in a meaningful way already.

Can you talk a bit about your work with partners and developers?

Sure. Absolutely. I’d say the true magic comes again with a lot of customization and client-side logic built out on top of Inworld. Even within our tech, it’s not just generative AI. We have a combination of orchestration of models, like large language models and small models, and the way you configure and optimize them is where the magic happens.

Covert Protocol is a great example. It’s very much a vertical slice technical demo. I wouldn’t say we spent a whole lot of time on it. It was actually a very short project that came together, but we’re gonna be open-sourcing it in the next month and a half, so developers can take a look, and they’ll see a skeleton for what they could drop on top of more compelling gameplay loops.

To be fair, the Covert Protocol demo wasn’t very impressive, as the player still needed to pick up a disguise to progress, just like you would in a HITMAN game…

There’s two things here. Again, it was a vertical slice, a tech demo. We know it’s not a compelling experience end to end there. But really, we were demonstrating two things. One is how conversation can be used to evolve gameplay first and foremost. The other one is how we can allow the combination of characters, environmental awareness, and information about a player to change their interactions.

Diego is meant to be the stuck-up, in-his-own-head, ‘I’m only gonna talk to VIPs’ type of person. Unfortunately, the one mechanic we didn’t build in time for GDC was that if you tried to talk to Diego without the badge, he was actually going to cut you off after a very short period of time and no longer talk to you because you’re not important and he has to work on his keynote speech.

But because we didn’t implement that, he’ll continue to entertain you and engage in some small talk, but you’ll notice they’re very short answers. They’re not really engaging, and that is by design. But when you come back and say you’re also a speaker, Diego all of a sudden goes, ‘OK, this person may be important, maybe I should open up and have a conversation, learn a bit more’. That’s the intent there.

But of course, under the hood is the fact that in order to get the room number, you’ve got to agitate Martin to some extent, and there’s a few ways that we’ve hooked that up through the branches. You could either make him angry, you could get him worried about the keynote, you could get him concerned about the room delays, and those would kind of lead into the actual script sequence of him walking up to the front desk and revealing information through that.

But yeah, I will admit that there’s definitely better flows that can be done bridging the gap between how smooth those feel. Like how nuanced interaction feels and actually pushes the player and rewards the right behaviors so that it’s clearer what the player should do.

Something else that I’ve been wondering is about having to use voice interactions with Inworld. Not only does this drive up costs, but often, it actually decreases immersion because the NPCs do not understand what you’ve said, and their own voiced responses and facial animations aren’t really good enough. Would it be possible to use Inworld just text-to-text?

At the end of the day, Inworld services are fully modular. We do provide just pure text-to-text. That’s what you get out of the box with Inworld Studio, but we understand voices obviously bring life to it. ASR does bring more immersion.

There’s a lot of ways to get around this. In NEO NPCs, Ubisoft implemented a kind of ASR confirmation, which is really unique. You say your phrase, ASR captures it, and then you commit it to the character by pressing the trigger button on the controller. Obviously, that’s not realistic. It breaks real-time immersion, but it actually felt very natural to me as a player. Beyond that, we’re exploring tools where we could think of the player as a character.

So the concept of the player as a character is you do not say everything yourself, but maybe you have an AI that represents you, that you control. Let’s say your AI version can generate four options of responses, and then you can commit one of those to the actual chat. That can evolve over time, and if you don’t like them, you can maybe customize them too. But I’m thinking more about console-based experiences where people don’t really want to speak into a controller.

There’s a lot of opportunities there. It’s really up to the game designer at the end of the day to craft the experience that they’re looking for. But I agree it’s not just 100% real-time immersion all the time. That’s not necessary.

One of the big caveats of NPCs based on generative AI tech is monetization. As I understand it, your Character Engine currently runs on Inworld cloud servers, and there are costs attached to using your dynamic NPCs. It seems unlikely users would be willing to shoulder these costs, especially now that game prices are on the rise. How do you plan to solve this key problem?

This monetization question is such a deep topic we can get into. For everyone, this is top of mind, along with latency, guardrails, and hallucinations. But from a high level, my perspective is it just needs to make sense for everyone. At the end of the day, there needs to be enough value generated for a player, a game studio, and Inworld for all of this to be viable.

There are pockets of use cases where this is already demonstrated to be profitable, small indie games where the entire game is based off of this AI reasoning and has driven more purchases of the game where it clearly justifies the cost as of today.

Moving forward, the costs are going to go down significantly; we all understand that. But even then, it’s the design, right? Do you really need all secondary NPCs to be hyper-intelligent? Maybe not; maybe we use smaller models there, but for the core companion character, there is a value added.

So, there’s a balance of how much AI is used in a game. What is the game design? How does the AI add value? Then, we can start to talk about different monetization structures, whether that’s per usage or not. The subscription and premium feature idea is not bad, but I think it’s not appropriate to just use this in broad strokes. It needs to be taken from case to case for a specific game.

If you look at Roblox, you’ve got players paying all the time for accessories and retries and whatnot. These mechanics are baked in and understood in certain pockets already. We just have to work together as a whole to determine where it makes the most sense for this AI to be used. Ads is also a whole other topic we could dive into for short term options that are very compelling as well.

But I’m not too concerned. I think if we give it another couple of months, the conversation will be different as soon as we see viable, profitable use cases being deployed.

Do you think, at the end of the day, that game developers will have to bear the costs of running Inworld tech?

I see it both ways. I do see experiences where players will be contributing to the cost directly because there’s a premium upsell there that makes sense for them. I do see other examples where it doesn’t make sense. Players are purchasing the game at a flat price of $59.99 or whatever, and it’ll be fully maintained just through other monetization structures, or it’s already amortized over time.

As we get to scale for AAA titles, costs will go down so that it’s no longer variable. If we’ve got millions of users, there’s lots of techniques that can be leveraged to drive down costs there. But yeah, overall, it depends on the publisher and the developer.

There’s this reluctance, of course, for revenue share models when your game is so large, and so there is a flat rate licensing that Inworld looks at as well to help manage compute either on a triple-A studio’s cloud services or if they have the infrastructure themselves.

Do you think that it will be possible to run Inworld technology on local devices in the near future? Some devices, like PCs equipped with GeForce RTX hardware, already have dedicated ML hardware, and even next-generation consoles might include something of that sort.

Absolutely. Yes. We’ll definitely get there. We saw a lot of exciting announcements even at GTC for dedicated hardware in the future for AI versus rendering today.

There’s a lot of questions, like, if we do move on-device, will that impact graphics rendering? That becomes a nonstarter conversation for a lot of studios. But at Inworld we do support hybrid as well. Some services could run locally, some services run in the cloud, and that can optimize costs and latency in the short term. But in the future, I think we can all agree that it’s going to get there. It’s gonna run locally, offline, on devices like consoles. It’s just a matter of time. I’m excited to see that as well.

On the whole cost issue, just look at the trends. Even from a pure inferencing perspective, the models today versus the models six months ago are way smaller and have way better quality. Hardware, we all know that if you compare the graphics cards of today versus two years ago, it’s night and day. So, the baseline will see those driven to a fraction in the future. Even then, I think we’ll be able to think a lot more about the actual monetization structures of where value is generated and how to optimize capturing that across all stakeholders involved. That’s gonna be exciting.

Thank you for your time.