Collaborative artificial intelligence system for investigation of healthcare claims compliance

Formal definition of rule and similarity metrics

A rule is a logical representation of a section of text in a policy document. We formally define a rule \(R={\bigwedge }_{i=1}^{n}{C}_{i}\) as the conjunction of the set of conditions \(\mathcal{C}\left(R\right)=\{{C}_{1},{C}_{2},\dots ,{C}_{n}\}\), where each condition \({C}_{i}\) is defined by a property in our ontology, for example “hasMinAge” or “hasExcludedService”. The ontology also specifies restrictions on properties such as cardinality or expected data types. We refer to the set of values of condition \({C}_{i}\) in rule \(R\) as \(\mathcal{V}\left({C}_{i},R\right)=\{{v}_{1},{v}_{2},\dots ,{v}_{m}\}\); multiple values are interpreted as a disjunction. If \(\mathcal{V}\left({C}_{i},R\right)\) contains only one numeric value (for example “hasMinAge(12)”), then we refer to \({C}_{i}\) as a numeric condition.

Given two rules \({R}_{x}\) and \({R}_{y}\), and a condition \({C}_{i}\), we define the condition similarity metric as:

$${\mathcal{S}}_{C}\left({C}_{i},{R}_{x},{R}_{y}\right)= \left\{\begin{array}{cc}\frac{1}{1+\left|{v}_{i, x} – {v}_{i, y}\right|}& \begin{array}{c}if \,{C}_{i}\in \mathcal{C}\left({R}_{x}\right) \;and \,{C}_{i}\in \mathcal{C}\left({R}_{y}\right)\; and \,{C}_{i} \,is\, numeric\\ and \; \mathcal{V}\left({C}_{i},{R}_{x}\right)=\{{v}_{i, x}\}\; and \;\mathcal{V}\left({C}_{i},{R}_{y}\right)=\{{v}_{i, y}\}\end{array}\\ \frac{\left|\mathcal{V}\left({C}_{i},{R}_{x}\right)\cap \mathcal{V}\left({C}_{i},{R}_{y}\right)\right|}{\left|\mathcal{V}\left({C}_{i},{R}_{x}\right)\cup \mathcal{V}\left({C}_{i},{R}_{y}\right)\right|}& \text{if }{C}_{i}\in \mathcal{C}\left({R}_{x}\right)\text{ and }{C}_{i}\in \mathcal{C}\left({R}_{y}\right)\text{ and }{C}_{i}\text{ is not numeric}\\ 0& \text{ otherwise}\end{array}\right.$$

If \({C}_{i}\) is a numeric condition, and is present in both \({R}_{x}\) and \({R}_{y}\), then the condition similarity \({\mathcal{S}}_{C}\left({C}_{i},{R}_{x},{R}_{y}\right)\) is inversely proportional to the distance between \({v}_{i, x}\) (the numeric value of \({C}_{i}\) in \({R}_{x}\)) and \({v}_{i, y}\) (the numeric value of \({C}_{i}\) in \({R}_{y}\)). If instead, \({C}_{i}\) is present in both \({R}_{x}\) and \({R}_{y}\), but it is not numeric, then the condition similarity \({\mathcal{S}}_{C}\left({C}_{i},{R}_{x},{R}_{y}\right)\) is the Jaccard similarity48 between the set of values of \({C}_{i}\) in \({R}_{x}\) and the set of values of \({C}_{i}\) in \({R}_{y}\). Finally, when \({C}_{i}\) is missing in either \({R}_{x}\) or \({R}_{y}\), the condition similarity \({\mathcal{S}}_{C}\left({C}_{i},{R}_{x},{R}_{y}\right)\) is equal to 0.

Given two rules \({R}_{x}\) and \({R}_{y}\), we also define the rule structure similarity as the Jaccard similarity between the set of conditions in \({R}_{x}\) and the set of conditions in \({R}_{y}\):

$${\mathcal{S}}_{S}\left({R}_{x},{R}_{y}\right)= \frac{\left|\mathcal{C}\left({R}_{x}\right)\cap \mathcal{C}\left({R}_{y}\right)\right|}{\left|\mathcal{C}\left({R}_{x}\right)\cup \mathcal{C}\left({R}_{y}\right)\right|}$$

When \({R}_{x}\) is a ground-truth rule and \({R}_{y}\) is automatically extracted from the same paragraph of text corresponding to \({R}_{x}\), then we use a slightly modified version of condition similarity and structure similarity, where we replace the Jaccard similarity between the set of values and the set of conditions with the Sørensen–Dice coefficient36,37 between the same sets. More precisely:

$${\mathcal{S}}_{C}\left({C}_{i},{R}_{x},{R}_{y}\right)= \left\{\begin{array}{cc}\frac{1}{1+\left|{v}_{i, x} – {v}_{i, y}\right|}& \begin{array}{c}if \;{C}_{i}\in \mathcal{C}\left({R}_{x}\right) \;and \;{C}_{i}\in \mathcal{C}\left({R}_{y}\right) \;and \;{C}_{i} \;is \;numeric\\ and \;\mathcal{V}\left({C}_{i},{R}_{x}\right)=\{{v}_{i, x}\}\; and \;\mathcal{V}\left({C}_{i},{R}_{y}\right)=\{{v}_{i, y}\}\end{array}\\ \frac{2\cdot \left|\mathcal{V}\left({C}_{i},{R}_{x}\right)\cap \mathcal{V}\left({C}_{i},{R}_{y}\right)\right|}{\left|\mathcal{V}\left({C}_{i},{R}_{x}\right)\right| + \left|\mathcal{V}\left({C}_{i},{R}_{x}\right)\right|}& \text{if }{C}_{i}\in \mathcal{C}\left({R}_{x}\right)\text{ and }{C}_{i}\in \mathcal{C}\left({R}_{y}\right)\text{ and }{C}_{i}\text{ is not numeric}\\ 0& \text{ otherwise}\end{array}\right.$$

$${\mathcal{S}}_{S}\left({R}_{x},{R}_{y}\right)= \frac{2\cdot \left|\mathcal{C}\left({R}_{x}\right)\cap \mathcal{C}\left({R}_{y}\right)\right|}{\left|\mathcal{C}\left({R}_{x}\right)\right| + \left|\mathcal{C}\left({R}_{x}\right)\right|}$$

The Sørensen–Dice coefficient gives a better measure of the accuracy of the system when extracting rules from text. When \({R}_{x}\) is a ground-truth rule and \({R}_{y}\) is the corresponding automatically extracted rule, we consider \(\mathcal{C}\left({R}_{x}\right)\) and \(\mathcal{C}\left({R}_{y}\right)\) as, respectively, the set of expected and predicted conditions in the rule, and \(\mathcal{V}\left({C}_{i},{R}_{x}\right)\) and \(\mathcal{V}\left({C}_{i},{R}_{y}\right)\) as, respectively, the set of expected and predicted values for condition \({C}_{i}\) in the rule. In this scenario the Sørensen–Dice coefficient is equivalent to the F1-score, and it measures the harmonic mean of the precision and recall of Clais when extracting \({R}_{y}\).

Finally, we define the overall rule similarity \({\mathcal{S}}_{R}\left({R}_{x},{R}_{y}\right)\) and the text similarity \({\mathcal{S}}_{T}\left({R}_{x},{R}_{y}\right)\) between rules \({R}_{x}\) and \({R}_{y}\) as follows:

$${\mathcal{S}}_{R}\left({R}_{x},{R}_{y}\right)= \frac{{\mathcal{S}}_{S}\left({R}_{x},{R}_{y}\right)+\sum_{{C}_{i}\in \mathcal{C}\left({R}_{x}\right)\cup \mathcal{C}\left({R}_{y}\right)}{\mathcal{S}}_{C}\left({C}_{i},{R}_{x},{R}_{y}\right)}{1+\left|\mathcal{C}\left({R}_{x}\right)\cup \mathcal{C}\left({R}_{y}\right)\right|}$$

$${\mathcal{S}}_{T}\left({R}_{x},{R}_{y}\right) = 1 – \frac{arccos\left(\frac{{u}_{x}\cdot {u}_{y}}{\Vert {u}_{x}\Vert \Vert {u}_{y}\Vert }\right)}{\pi }$$

The overall rule similarity is the arithmetic mean of the structure similarity \({\mathcal{S}}_{S}\left({R}_{x},{R}_{y}\right)\) and the condition similarity \({\mathcal{S}}_{C}\left({C}_{i},{R}_{x},{R}_{y}\right)\) for all conditions \({C}_{i}\) in \({R}_{x}\) or \({R}_{y}\). The text similarity \({\mathcal{S}}_{T}\left({R}_{x},{R}_{y}\right)\) is the angular similarity between the embedding vectors \({u}_{x}\) and \({u}_{y}\) encoding the sections of text corresponding to \({R}_{x}\) and \({R}_{y},\) respectively. We use Sentence-BERT (SBERT)49 with the state of the art model “all-mpnet-base-v2”50 to compute the embedding vectors; similar to other work51, we convert the cosine similarity between the embedding vectors into an angular distance in the range [0, 1] using arccos and normalizing by \(\pi\).

Extraction of rules from text

Clais uses knowledge graphs to represent rules. A knowledge graph is a directed labelled graph (example in Fig. 1d), and we encode its structure and semantics using RDF52 triples (subject, predicate, object). Our ontology32 (excerpt in Fig. 1b), designed in collaboration with expert policy investigators25, formally defines the meaning of subjects, predicates and objects used in the rule knowledge graphs. The ontology also specifies restrictions on the predicates (for example, expected domain and range, disjoint or cardinality constraints), which guide our system in building semantically valid RDF triples and meaningful rules. Additionally, the ontology defines rule types, which consist of concepts-relationships templates capturing repeatable linguistic patterns in policy documents. The current version of the ontology specifies three rule types: (1) limitations on services, such as units of service or reimbursable monetary amounts that a provider can report for a single beneficiary over a given period; (2) mutually exclusive procedures that cannot be billed together for the same patient over a period; and (3) services not covered by a policy under certain conditions.

The ontology design ensures that every rule knowledge graph can be modelled as a tree (an undirected graph in which any two vertices are connected by exactly one path), where leaves are values of the conditions in the rule. The tree representation enables Clais to visualize the rule’s conditions and their values in an intuitive user interface, which simplifies editing and validation of the rule. The same user interface also supports the interactive creation of new rules: professionals compose a rule by selecting items from a library of conditions based on the property defined in the ontology; the system asks for condition values and checks their validity in accordance with the restrictions defined for the corresponding ontology predicate (for example domain, range, cardinality).

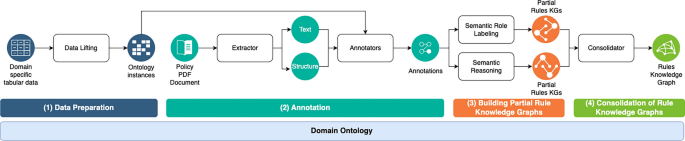

We build upon recent natural language processing (NLP) techniques24,25 to automatically identify dependencies between relevant entities and relations described in a fragment of policy text, and to assemble them into a rule. Clais uses a configurable NLP extraction pipeline, where each component can be replaced or complemented by others with similar functionalities. The configuration can be customized either manually or using hyper-parameter optimization53,54 to tune the overall performance of the extraction pipeline for a given policy, domain or geographic region (specifically, we use the Optuna55 hyperparameter optimization framework). Clais NLP extraction pipeline (Fig. 6), which does not require labelled data, consists of the following steps: (1) data preparation according to the policy domain and geography (state/region); (2) automatic annotation of policy text fragments to identify mentions of domain entities and relations in the text; (3) building of rule knowledge graphs corresponding to policy text segments using their domain entities and relations in accordance to the ontology definitions; (4) knowledge graph consolidation and filtering to produce a set of well-formed rules (necessary when different components/approaches are used to build the knowledge graphs, or when rules extend across multiple sentences in the policy text).

We automated the data preparation step with tools that use configurable mappings to translate any existing tabular data (used to define domain specific values for a target policy in a geographical region) into ontology instance values of a specific entity type. The tabular data sources contain both the surface forms (meaningful textual labels, synonyms, etc.) necessary to identify mentions in the policy text, and their mapping to ontological resources. The system uses the surface forms for entity and relation annotation in the cold-start scenario (lack of labelled data); the surface forms are usually part of existent tabular data, such as the ones describing codes for eligible places of service56 (“hospital”, “clinic”, etc.) or the Healthcare Common Procedure Coding System (HCPCS)57 published by Centers for Medicare and Medicaid.

We use state of the art PDF tools to extract the text and the structure of a policy document. The annotation of text relies on ontology-based annotators58 using dictionary-based lemma matches. In addition, we initialize the annotators with entity labels such as UMLS types59, which are useful to fill in values for some ontology properties (depending on their range definition).

The core of the NLP extraction pipeline transforms textual patterns between ontological annotations into sets of RDF triples, which constitute a partial rule knowledge graph; it uses two domain-agnostic approaches: semantic role labeling25 and semantic reasoning24. Semantic role labeling is based on a declarative information extraction system58 that can identify actions and roles in a sentence (who is the agent, what is the theme or context of the action, if there are any conditionals, the polarity of the action, temporal information, etc.). We use the ontology definitions for the domain and range of properties to reason over the semantic roles, and to identify semantically compatible relation and entity/value pairs in a sentence. Semantic reasoning transforms syntactic dependencies from a dependency tree60,61 into RDF triples. Dependency trees capture fine-grained and relevant distant (not necessarily consecutive in the text) syntactic connections by following the shortest path between previously annotated ontological entities. For each linguistically connected predicate-arguments tuple in the sentence, the dependency tree searches the ontology definitions for non-ambiguous paths that could connect the tuple elements in a semantically correct way; the search is based on parametrized templates24.

Semantic role labelling and semantic parsing may produce partially overlapping knowledge graphs from the same paragraph of text: the final stage of the extraction pipeline24 consolidates them into one final rule knowledge graph, and it also filters potential inaccuracies, based on heuristics that detect violations of ontological constraints such as disjointness (a procedure code cannot be both reimbursable and non-reimbursable in the same rule) or cardinality restrictions (there can only be one applicable ‘time period’ for each rule).

We observe that the extraction pipeline does not require training with labelled data. However, as users validate policy rules, they progressively build a reusable, shared library of machine-readable and labelled rules, which Clais gradually incorporates into two deep learning models26 (based on BERT—Bidirectional Encoder Representations from Transformers62) that complement the other components of the extraction pipeline: a classifier that identifies paragraphs of text potentially containing a rule description in new policy documents, and a model that predicts the probability that a text fragment provides conditions (and values) for a rule.

Execution of rules on claims

Clais executes rules on claims data through dynamically constructed software pipelines whose components translate the semantics of rule conditions into executable code. The workflow consists of three stages: first, the system normalizes the conditions of a rule \(R\) into a format amenable to execution; second, it transforms the normalized conditions into an evaluation pipeline \({P}_{R}\) by assembling executable components; and third, it executes instances of \({P}_{R}\) and reports the results.

Rules automatically extracted from a policy document (or manually defined by professionals) may be either compliance rules (defining conditions characterizing valid claims) or non-compliance rules (defining conditions that identify claims at-risk). When analyzing claims data, the typical task consists of identifying non-compliant claims, and therefore Clais executes only non-compliant rules. The system transforms a compliance rule into a non-compliance rule by changing one (or more) of its conditions according to their semantics (ontology definitions), and to the logic structure of the rule (logical negation). Figure 1c–e show an example of such transformation: the policy text “A periodic oral evaluation is a payable benefit once in a six-month period” is a compliance rule defining a unit limitation: “it is compliant to claim at most one unit of service within six months”. The corresponding knowledge graph models the unit limitation using the conditions “hasMaxUnits(1)” (for brevity we omit conditions defining the temporal aspect of the rule). Clais transforms the rule into a non-compliance rule by changing “hasMaxUnits(1)” into “hasMinUnit(2)”: “it is not compliant to claim two or more units of service within six months”. The preliminary transformation stage may also replace a subset of conditions with a single condition whose semantics maps directly to one of the executable components in the downstream execution pipeline. An example of such transformation is the following: rules typically have some temporal constraints, which are expressed with two conditions: one defining the amount of time (“hasAmountOfTime”), and one defining the unit of time (“hasUnitOfTime”)—see examples in Fig. 1d and Fig. 5k. This pair of conditions is replaced with a single condition that defines a time window in days (“hasSlidingWindowInDays”)—see Fig. 1e.

Clais dynamically assembles an evaluation pipeline for each rule by chaining executable components that perform operations on an evaluation context; the evaluation context consists of the temporal sequence of claim records belonging to a patient. Clais uses three types of executable components: filter, splitter, and evaluator; these are sufficient to map the semantics of the ontology properties that define normalized rule conditions. Future extensions of the ontology may require new types of executable components. The definition of filters, splitters, and evaluators uses declarative functions that query the fields of a claim record and produce some output value; we support the modular expression language SpEL63 for writing such functions. Filters apply logical conditions to limit the evaluation context only to claim records that are relevant for the rule being executed. A typical example of a condition that maps to a filter is “hasApplicableService” (Fig. 1e), which restricts the evaluation context to those claims referring to a specific service code. Splitters divide an evaluation context into a stream of evaluation contexts depending on values in the claim records, or on temporal windows; they may also perform grouping operations and compute aggregation on claim records. The condition “hasBillingCommonality(same_provider)” (see Rule \({R}_{1}\) in Fig. 5k) maps to a splitter that groups claim records by their provider identifier; the condition “hasSlidingWindowInDays(180)” (Fig. 1e) maps to a splitter that divides the sequence of claim records into sub-sequences whose temporal duration spans at most 180 days. Finally, an evaluator analyses each claim in an evaluation context using an expression that evaluates to true or false; a typical evaluator produces a true value when the cumulative sum of a specified claim field, over the claims in the evaluation context, is greater (lower) than a given threshold.

Clais uses a configurable mapping to transform conditions in a rule into their respective executable components, and to assemble them in an evaluation pipeline. In each pipeline, the final evaluator component evaluates each claim in its respective evaluation context. A claim may appear in several evaluation contexts depending on the rule conditions: the claim is non-compliant if the evaluator associates a true value with the claim in at least one evaluation context. Clais builds an evaluation pipeline \({P}_{R}\) for every rule \(R\); it deploys an instance of \({P}_{R}\) for each patient (the input consists of an evaluation context listing the claims of the patient in chronological order). Each instance of \({P}_{R}\) is executed in parallel: the parallel execution of evaluation pipelines (for different rules and for different patients) can be distributed across a computing infrastructure, thus enabling the scalability required to efficiently process very large volumes of claims (distributed execution was not necessary in our experiments).

Baseline models

We used a dataset of 44,913,580 dental claims to train our baseline models; 421,321 (0.94%) claims are labelled as fraudulent. In our experiments we used random under-sampling for each rule data with 80:20 distribution (80% normal and 20% fraudulent claims) to build the training dataset (same as previous work15). We experimented with several strategies to cope with the problem of class imbalance, including considering different balancing ratios for the dataset and re-weighting of classes in the negative log likelihood loss; the best performance was obtained with a simple random under-sampling with 80:20 distribution.

For both baseline models, we did not perform feature engineering to create additional features, because manual feature engineering is data-dependant. Different rules require different types of features and creating manual features for every rule is impractical, and as costly as manually implementing algorithms for compliance checks (similarly to what policy investigators currently do).

We used the open-source LightGBM64 implementation of gradient boosting trees with the following default hyper-parameters: n_estimator = 20,000, max_depth = 6, num_leaves = 1000, learning_rate = 0.01, bagging_fraction = 0.8, min_data_in_leaf = 5. For gradient boosting models, we have rebalanced the data with different balancing ratios ranging from 0.1 to 1.0 but we did not observe an improvement over the reported results (Fig. 3a).

The deep neural network baseline uses a Recurrent Neural Network (RNN) architecture44,45,46 to learn dependencies between elements in a sequence. The sequence used to classify a claim consists of all the preceding claims and related features in chronological order, in addition to the claim being classified. Each claim in the sequence also contains the time-delta from the previous claims. All categorical features are processed with an embedding layer, having a size equal to the square root of the feature cardinality; all numerical features are processed by a normalization layer that estimates the variance and the average during the training phase. The RNN implementation relies on a Gated Recurrent Unit46 with 3 hidden layers, trained with a learning rate of 10e-3 using negative log likelihood loss. We use a binary softmax layer as a final claims classification layer. We trained the network for up to 50 epochs (similarly to previous work21), but we did not see improvements.

User study

We organized the user study in two sessions: the first with the External Group (participants had no prior knowledge of Clais), and the second with the Internal Group (participants were also involved in the design and prototyping of Clais and in the development of the ground truth). Each session was organized as a video-conference: each participant used her/his own computer and answered the questionnaires independently. We started each session describing the purpose of the user study, the structure of PUEU and USE questionnaires, and their scope in the context of our user study. The session with the External Group included a one-hour introductory tutorial about Clais. We delivered the questionnaires on spreadsheets; we went through the questions one by one, providing clarifications if necessary, and waited for each participant to answer a question (including writing comments) before moving to the next. We collected the spreadsheets with the answers and removed any reference to the participants’ identity (except for job role and group) before processing the results.