Google’s AI Overviews: What It Is and Why It’s Getting Things Wrong

Google’s new AI Overviews feature wants to give you tidy summaries of search results, researched and written in mere seconds by generative AI. So far, so good. The problem is, it sometimes gets stuff wrong.

How often? It’s hard to say just yet, though examples piled up quickly last week. But even an occasional mistake is a bad look for a tool that’s supposed to be smarter and faster than you and me. Consider these flubs:

When asked how to keep cheese on pizza, it suggested adding an eighth of a cup of nontoxic glue. That tip originated from an 11-year-old Reddit comment.

And when asked how many rocks a person should eat each day, it recommended eating “at least one small rock per day.” That advice came from a 2021 story in The Onion.


Essentially, these errors are a new variation on AI hallucinations, which occur when a generative AI model serves up false or misleading information and presents it as fact. Hallucinations can stem from flawed training data, algorithmic errors or misinterpretations of context.

The large language model behind AI engines like those from Google, Microsoft and OpenAI is “statistically predicting data it may see in the future based on what it has seen in the past,” said Mike Grehan, CEO of digital marketing agency Chelsea Digital. “So, there’s an element of ‘crap in, crap out’ that still exists.”

The errors are an embarrassment for Google as it tries to find its footing in the shifting sands of the unfolding generative AI era.

The search engine that debuted in 1998 controls about 86% of the market. Google’s competitors don’t come close: Bing controls 8.2%, Yahoo has 2.6%, DuckDuckGo has 2.1%, Yandex has 0.2% and AOL has 0.1%.

But generative AI is catching on with consumers (adoption is projected to reach nearly 78 million users, or about one-quarter of the US population, by 2025), and that growth arguably threatens Google’s stranglehold on search, a business that amounts to roughly 8.5 billion searches per day and $240 billion in annual advertising revenue.

Google has its own gen AI chatbot, Gemini, which is competing with the granddaddy of them all, ChatGPT, and a slew of others from Perplexity, Anthropic, Microsoft and more. They’re all fighting for relevance as our access to information changes again, much like it did with the introduction of Google 26 years ago.

The last thing Google needs is to lose the trust of the millions of us doing Google searches.

In a statement, a Google spokesperson said the majority of AI Overviews provide accurate information with links for verification. She said many of the examples popping up on social media involved “uncommon queries,” and some were “examples that were doctored or that we couldn’t reproduce.”

“We conducted extensive testing before launching this new experience, and as with other features we’ve launched in Search, we appreciate the feedback,” the spokesperson said. “We’re taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out.”

What is AI Overviews?

AI Overviews is a new spin on Google search, and it’s just now starting to roll out in the US.

Google has long tinkered with its search engine results page, or SERP, to enhance the user experience and to drive revenue. After starting with 10 blue links in 1998, Google introduced sponsored links and ads a few years later and really shook things up with the addition of the Knowledge Graph in 2012. That’s the box that surfaces key facts about a person, place or thing so you get an answer faster (and don’t click away).

AI Overviews is the latest update in this vein.

Instead of having to break up a query into multiple questions, you can ask something more complex up front. Google uses the example of searching for a yoga studio popular with locals, convenient to your commute and with a discount for new members. Theoretically, what used to be three searches is now one.

The overall goal of gen AI here is to make search more visual, interactive and social.

“As AI-powered image and video generation tools become popular and consumers test multi-search features, SERPs’ rich media will effectively capture consumers’ attention,” said Nikhil Lai, senior analyst at research firm Forrester. “After all, 90% of the information transmitted to our brains is visual.”

A.J. Kohn, owner of digital marketing firm Blind Five Year Old, likened AI Overviews to a summary of traditional search results. (Google provides links to the sites that help inform the Overview.) The regular results we’re used to then appear under each AI Overview.

“While the generative summarization is somewhat complex, the end user is really getting a sort of TL;DR for that search, which may make it easier for some to find a satisfactory answer,” Kohn said.

The mistakes AI Overviews is making

To be clear, AI Overviews does get a lot of things right. When I asked it how to get rid of a sore throat, how often to stain a wooden fence and even why AI hallucinates, the answers were all spot on.

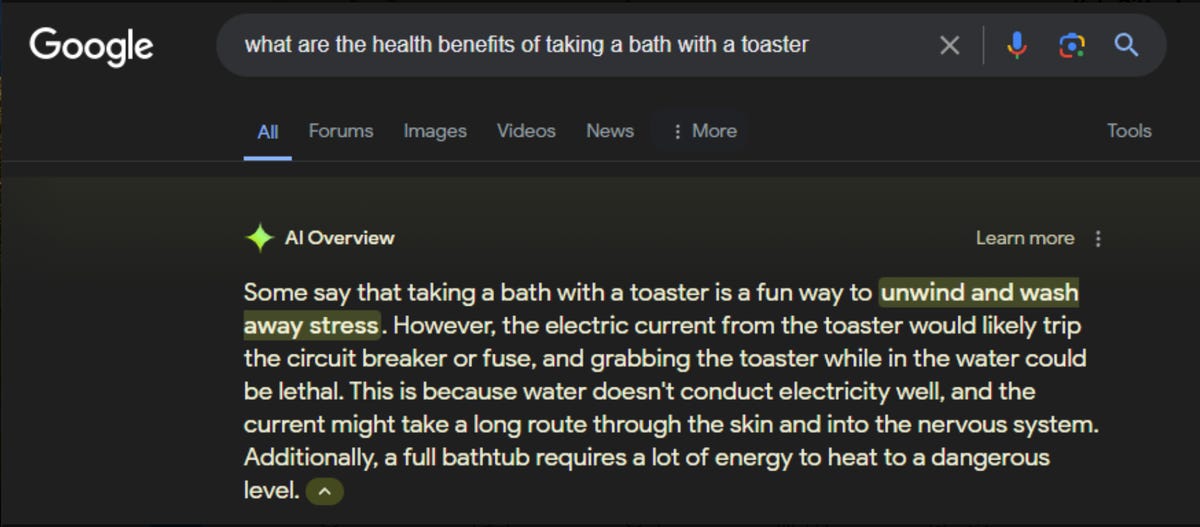

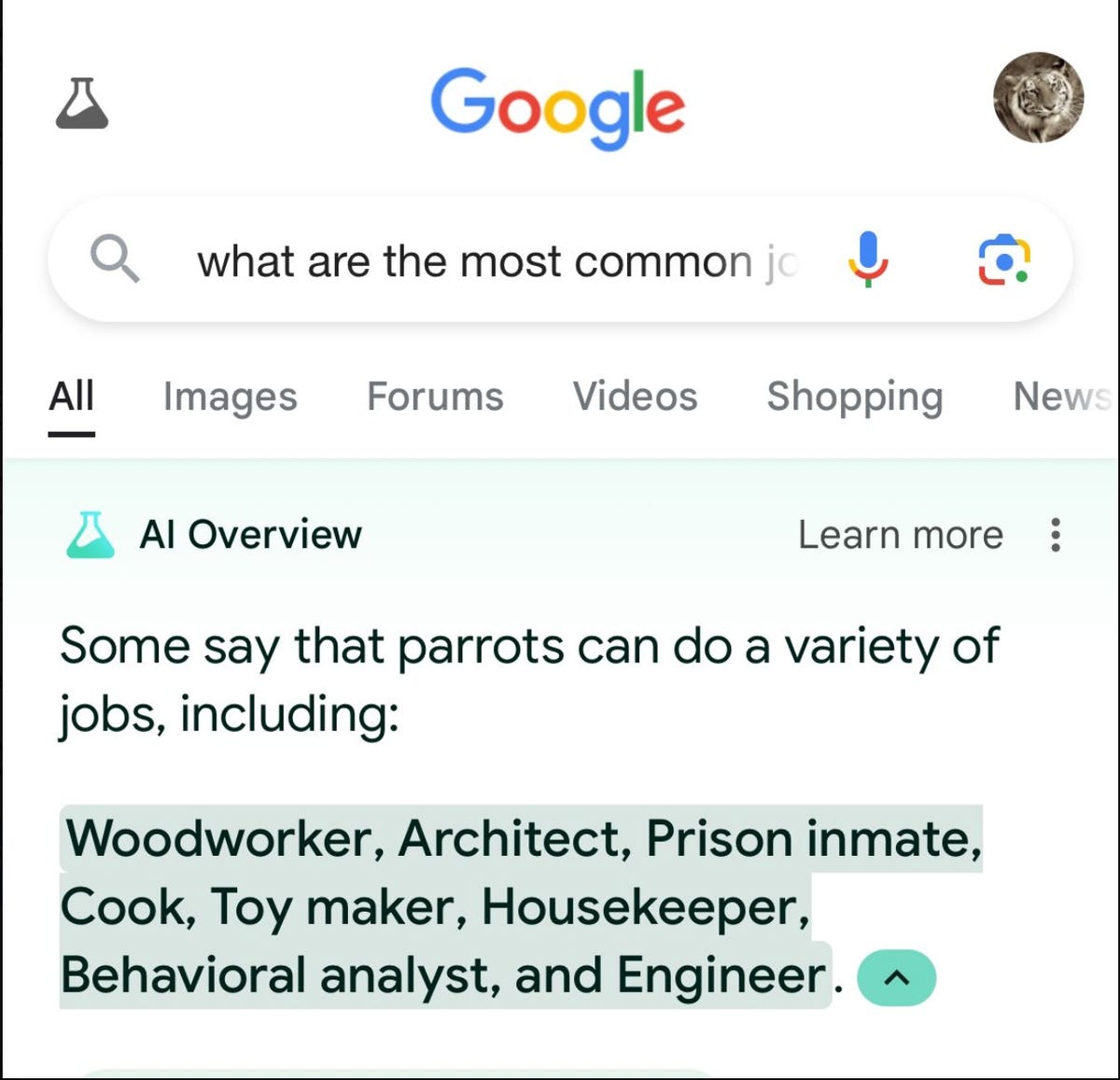

But it also reportedly got things badly wrong, touting the health benefits of running with scissors and taking a bath with a toaster, and misstating how many Muslim US presidents there have been and whether a dog has ever played in the NHL. (According to AI Overviews, apparently, the answer is yes: his name was Pospisil, and he was a fourth-round draft pick in 2018.) A relatively new X account, @Goog_Enough, is keeping a running tally.

Some of these bad answers are in response to what Kohn called “very unlikely queries.”

It seems clear that in at least some of the cases, the AI Overview is picking up material from parody posts, bad jokes and satirical sites like The Onion.

“But what that underscores,” Kohn said, “is just how easy it is to get specious content into the AI Overview.”

Ultimately, that reveals a weakness in how AI Overviews grounds its answers in reliable sources and fact-checks them.

In his review of Google’s Gemini chatbot, which is powering the new search experience, CNET’s Imad Khan said the model’s propensity to hallucinate should come with a disclaimer: “Honestly, to be safe, just Google it.”

To that, I’d add: Google it the old-fashioned way, then dig into the links. CNET’s Peter Butler has advice on how to get those old-school Google search results.

How the mighty fall?

Even before the mistakes started turning up in AI Overviews, not everyone was happy with the change. (“It makes more sense to me to search on TikTok,” my colleague Katelyn Chedraoui writes.)

Publishers and other websites, meanwhile, are worrying about losing traffic.

According to Grehan, it’s possible sites will see a decline in organic (unpaid) visits if people stop scrolling below the summaries.

“I doubt that because, like all human behavior in general — even if the summary provides a lot of detail upfront — you’ll likely want a second opinion as well,” he said.

The AI Overview mistakes are making a strong case for getting that second opinion.

For her part, Liz Reid, vice president and head of Google Search, published a blog post last week announcing what AI Overviews can do. In it, she wrote that early use in Google’s Search Labs experiments over the past year shows people are visiting a “greater diversity of websites” with AI Overviews, and that the links included in them “get more clicks than if the page had appeared as a traditional web listing for that query.”

But these errors come just three months after another public embarrassment, when Google put Gemini’s image generation feature on hold because it produced historically inaccurate images, such as people of color in Nazi uniforms. The question now is whether we’re starting to see cracks in the foundation of the once-omnipotent search powerhouse.

In her post, Reid also wrote: “We’ve meticulously honed our core information quality systems to help you find the best of what’s on the web.”

Apparently that remains open to debate.

Editors’ note: CNET used an AI engine to help create several dozen stories, which are labeled accordingly. The note you’re reading is attached to articles that deal substantively with the topic of AI but are created entirely by our expert editors and writers. For more, see our AI policy.