Google’s AI Overview problems may be unfixable, experts say

Last week, Google’s new “AI Overviews” went viral for the wrong reasons. Hyped as the future of online search, the feature — in which Google’s software directly answers a user’s question, rather than just linking to relevant websites — spat out a slew of responses that ranged from absurd to dangerous. (No, geologists don’t recommend eating one small rock per day, and please don’t put glue in your pizza.)

Google initially downplayed the problems, saying the vast majority of its AI Overviews are “high quality” and noting that some of the examples going around social media were probably fake. But the company also acknowledged that it was removing at least some of the problematic results manually, a laborious process for a site that fields billions of queries per day.

“AI Overviews are designed to surface high quality information that’s supported by results from across the web, with prominent links to learn more,” spokesperson Ned Adriance said Tuesday. “As with other features we’ve launched in Search, we’re using feedback to help us develop broader improvements to our systems, some of which have already started to roll out.”

That manual cleanup is a sign that the problems with artificial intelligence answers run deeper than a simple software update can address.

“All large language models, by the very nature of their architecture, are inherently and irredeemably unreliable narrators,” said Grady Booch, a renowned computer scientist. At a basic level, they’re designed to generate answers that sound coherent — not answers that are true. “As such, they simply cannot be ‘fixed,’” he said, because making things up is “an inescapable property of how they work.”

At best, Booch said, companies using a large language model to answer questions can take measures to “guard against its madness.” Or they can “throw enormous amounts of cheap human labor to plaster over its most egregious lies.” But the faulty answers are likely to persist as long as Google and other tech companies use generative AI to answer search queries, he predicted.

Arvind Narayanan, a computer science professor at Princeton, agreed that “the tendency of large language models to generate incorrect information is unlikely to be fixed in the near future.” But he said Google has also made “avoidable mistakes with its AI Overview feature, such as pulling results to summarize from low-quality web pages and even the Onion.”

With AI Overviews, Google is trying to address language models’ well-known penchant for fabrication by having them cite and summarize specific sources.

But that can still go wrong in multiple ways, said Melanie Mitchell, a professor at the Santa Fe Institute who researches complex systems. One is that the system can’t always tell whether a given source provides a reliable answer to the question, perhaps because it fails to understand the context. Another is that even when it finds a good source, it may misinterpret what that source is saying.

This isn’t just a Google problem, she said. Other AI tools, such as OpenAI’s ChatGPT or Perplexity, may not get the same answers wrong that Google does. But they will get others wrong that Google gets right. “The AI to do this in a much more trustworthy way just doesn’t exist yet,” Mitchell said.

Still, some parts of the problem may prove more tractable than others.

The problem of “hallucinations,” in which a language model makes up something that’s not in its training data, remains “unsolved,” said Niloofar Mireshghallah, a postdoctoral scholar in machine learning at the University of Washington. But making sure the system is only drawing from reliable sources is more of a traditional search problem than a generative AI problem, she added. That issue, she said, can perhaps be “patched up” in part by adding fact-checking mechanisms.

It might also help to make the AI Overviews less prominent in search results, suggested Usama Fayyad, executive director of the Institute for Experiential AI at Northeastern University.

“I don’t know if the summaries are ready for prime time,” he said, “which by the way is good news for web publishers,” because it means users will still have reason to visit trusted sites rather than relying on Google for everything.

Mitchell said she expects Google’s answers to improve — but not by enough to make them truly reliable.

“I believe them when they say that a vast majority is correct,” Mitchell said. “But their system is being used by millions and millions of people every day. So there are going to be cases that it gets badly wrong, and there are going to be cases where that’s going to cause some kind of harm.”

Narayanan said the company’s “easiest way out of this mess” might be to pay human fact-checkers for millions of the most common search queries. “Essentially, Google would become a content farm masquerading as a search engine, laundering low-wage human labor with the imprimatur of AI.”

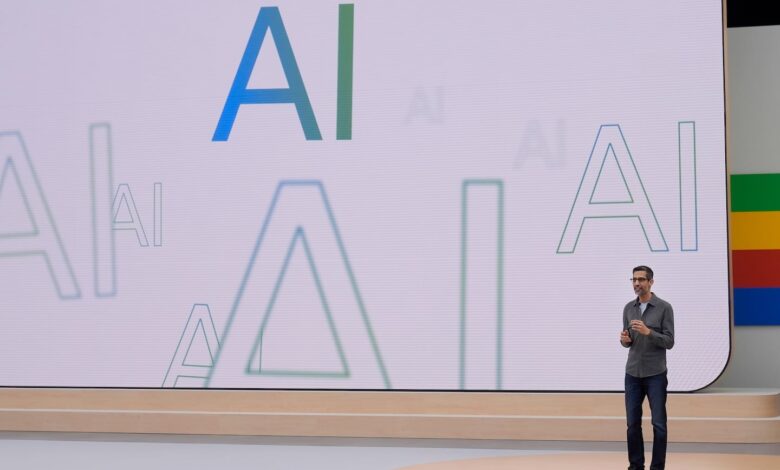

Even Google’s CEO, Sundar Pichai, has acknowledged the issue.

In an interview with the Verge last week, Pichai said large language models’ propensity for falsehoods is in some ways “an inherent feature,” which is why they “aren’t necessarily the best approach to always get at factuality.”

But he said building them into a search engine can help “ground” their answers in reality while directing users to the original source. “There are still times it’s going to get it wrong, but I don’t think I would look at that and underestimate how useful it can be at the same time.”

Biden administration took a pass on a plan to make TikTok safer (by Drew Harwell)

OpenAI starts training a new AI model while forming a safety committee (by Pranshu Verma)

Ex-OpenAI director says board learned of ChatGPT launch on Twitter (Bloomberg News)

Google won’t comment on a potentially massive leak of its search algorithm documentation (The Verge)

The media bosses fighting back against AI — and the ones cutting deals (by Laura Wagner and Gerrit De Vynck)

Microsoft launches Copilot for Telegram (The Verge)

AI career coaches are here. Should you trust them? (by Danielle Abril)

That’s all for today — thank you so much for joining us! Make sure to tell others to subscribe to Tech Brief. Get in touch with Cristiano (via email or social media) and Will (via email or social media) for tips, feedback or greetings!