New AI power teaches robots how to hammer, screw nuts, cook toast

MIT researchers have used artificial intelligence (AI) models to combine data from multiple sources, helping robots learn more effectively.

The technique employs diffusion models, a type of generative AI, to integrate multiple data sources across various domains, modalities, and tasks.

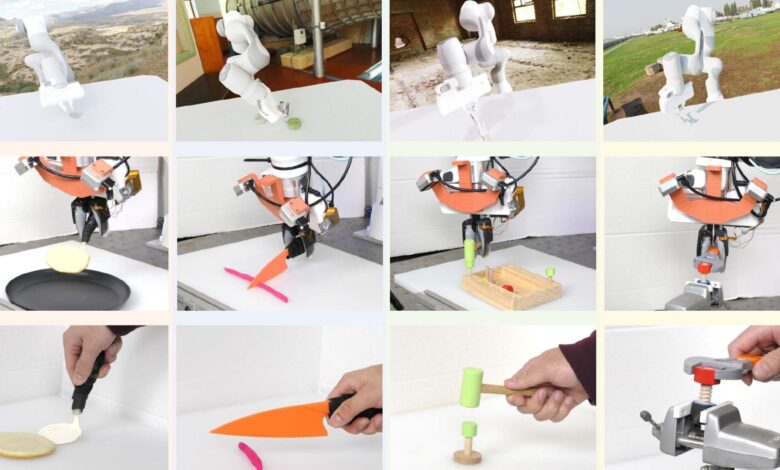

The training strategy enabled a robot to perform various tool-use tasks and to adapt to new tasks it had not seen during training, both in simulation and in real-world experiments.

According to the researchers, the Policy Composition (PoCo) strategy improved task performance by 20 percent compared with baseline techniques.

Multi-policy integration

Teaching a robot to use tools such as a screwdriver, hammer, and wrench to quickly fix household items would require a substantial amount of data demonstrating how those tools are used.

According to the researchers, existing robotic datasets span a wide range of modalities; for example, some contain tactile impressions, while others contain color images. Data can also come from different domains, such as human demonstrations or simulations. Furthermore, each dataset may capture a different task and setting.

Many methods train robots on only one type of data because it is difficult to integrate multiple data sources efficiently into a single machine-learning model. However, robots trained on such limited, task-specific data often struggle to perform new tasks in unfamiliar environments.

MIT’s method trains a separate diffusion model on each dataset, so that each model learns a strategy, or policy, for completing a single task. The policies learned by the individual diffusion models are then combined into a general policy that enables a robot to perform multiple tasks in diverse environments.

A robotic policy is a machine-learning model that takes inputs and uses them to produce actions, such as the trajectory a robotic arm should follow. Datasets for training these policies are typically small and task-specific.

Building on an existing approach called Diffusion Policy, MIT researchers developed a technique that combines smaller datasets from various sources, enabling robots to generalize across many tasks. They use diffusion models to train a separate policy on each dataset, then combine the policies by iteratively refining the output so that it satisfies the objective of each individual policy.
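The composition step can be illustrated with a toy sketch. It assumes the simplest form of composition used with diffusion models, a weighted sum of each policy's noise prediction at every denoising step, and it replaces trained networks with hypothetical Gaussian "policies" whose exact noise predictors are known in closed form. The names `make_gaussian_policy` and `composed_sample`, the 1,000-step noise schedule, and the 4-waypoint, 2-D action trajectories are all illustrative assumptions; PoCo's actual formulation is described in the team's paper.

```python
import numpy as np

# Hypothetical toy example: each "policy" is the exact noise predictor for a
# Gaussian distribution over action trajectories, standing in for a trained
# diffusion network. Composition = weighted sum of noise predictions (assumed).

T = 1000                               # number of denoising steps (assumed)
betas = np.linspace(1e-4, 0.02, T)     # DDPM-style linear noise schedule (assumed)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)


def make_gaussian_policy(mean, std):
    """Return eps(x, t), the optimal noise prediction if actions ~ N(mean, std^2)."""
    def eps_fn(x, t):
        ab = alpha_bars[t]
        var_t = ab * std**2 + (1.0 - ab)   # variance of the noised actions at step t
        return np.sqrt(1.0 - ab) * (x - np.sqrt(ab) * mean) / var_t
    return eps_fn


def composed_sample(policies, weights, shape, rng):
    """DDPM ancestral sampling driven by a weighted sum of the policies' noise predictions."""
    x = rng.standard_normal(shape)          # start from pure Gaussian noise
    for t in reversed(range(T)):
        # Composition: every policy predicts the noise; weights set its influence.
        eps = sum(w * policy(x, t) for policy, w in zip(policies, weights))
        # Standard DDPM update toward the denoised mean...
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        # ...plus fresh noise on every step except the last.
        if t > 0:
            x = x + np.sqrt(betas[t]) * rng.standard_normal(shape)
    return x


rng = np.random.default_rng(0)
# Two hypothetical policies preferring different 4-waypoint, 2-D trajectories.
policy_a = make_gaussian_policy(mean=np.zeros((4, 2)), std=0.1)
policy_b = make_gaussian_policy(mean=np.ones((4, 2)), std=0.1)
samples = composed_sample([policy_a, policy_b], [0.5, 0.5], shape=(2000, 4, 2), rng=rng)
print(samples.mean(), samples.std())   # roughly 0.5 and 0.1
```

With equal spread and weights that sum to one, the weighted noise prediction equals that of a Gaussian centered on the weighted average of the two means, so the composed trajectories land between the two policies' preferred trajectories rather than following either one alone.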

“Addressing heterogeneity in robotic datasets is like a chicken-egg problem. If we want to use a lot of data to train general robot policies, then we first need deployable robots to get all this data. I think that leveraging all the heterogeneous data available, similar to what researchers have done with ChatGPT, is an important step for the robotics field,” said Lirui Wang, an electrical engineering and computer science (EECS) graduate student and lead author of the study, in a statement.

Optimizing robot performance

One benefit of this approach is that policies can be combined to get the best of each. For example, a policy trained on real-world data may achieve greater dexterity, while a policy trained on simulation data may generalize better.

Because each policy is trained independently, diffusion policies can be mixed and matched to improve performance on a given task. Instead of starting from scratch, a user could add data in a new modality or domain by training one additional Diffusion Policy on that dataset.
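Because the policies are independent, adding a data source can be sketched as registering one more noise predictor rather than retraining the others. The `PolicyComposer` class, its method names, and the constant stand-in predictors below are hypothetical; they only show the bookkeeping, assuming composition by a normalized weighted sum of noise predictions.

```python
from typing import Callable, Dict

import numpy as np

# Signature assumed for a trained diffusion policy's noise predictor: eps(x, t).
NoisePredictor = Callable[[np.ndarray, int], np.ndarray]


class PolicyComposer:
    """Hypothetical registry that composes independently trained diffusion policies."""

    def __init__(self) -> None:
        self._policies: Dict[str, NoisePredictor] = {}
        self._weights: Dict[str, float] = {}

    def add_policy(self, name: str, eps_fn: NoisePredictor, weight: float = 1.0) -> None:
        # Adding a new domain or modality only registers one more predictor;
        # the policies already in the registry are left untouched.
        self._policies[name] = eps_fn
        self._weights[name] = weight

    def predict_noise(self, x: np.ndarray, t: int) -> np.ndarray:
        if not self._policies:
            raise ValueError("no policies registered")
        total = sum(self._weights.values())
        # Normalized weighted sum of the individual noise predictions.
        return sum((self._weights[n] / total) * f(x, t) for n, f in self._policies.items())


# Constant predictors stand in for trained networks (illustration only).
composer = PolicyComposer()
composer.add_policy("simulation", lambda x, t: np.full_like(x, 1.0))
composer.add_policy("real_world", lambda x, t: np.full_like(x, 3.0))
x = np.zeros((4, 2))
before = composer.predict_noise(x, t=10)   # (1 + 3) / 2 = 2.0 everywhere

composer.add_policy("tactile", lambda x, t: np.full_like(x, 5.0), weight=2.0)
after = composer.predict_noise(x, t=10)    # (1 + 3 + 2 * 5) / 4 = 3.5 everywhere
```

Giving the new tactile policy a weight of 2 shifts the composite toward it without touching the simulation or real-world predictors; how such weights should be chosen for a real task is a tuning decision outside this sketch.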

The researchers evaluated PoCo both in simulation and on real robotic arms that performed a range of tool-use tasks, such as using a spatula to flip an object and a hammer to drive in nails. Compared with baseline approaches, PoCo improved task performance by 20 percent.

“The striking thing was that when we finished tuning and visualized it, we can clearly see that the composed trajectory looks much better than either one of them individually,” said Wang.

In the future, the researchers hope to apply this method to long-horizon tasks, in which a robot picks up one tool, uses it, and then switches to another. They also want to incorporate larger robotics datasets to boost performance.

“We will need all three kinds of data to succeed for robotics: internet data, simulation data, and real robot data. How to combine them effectively will be the million-dollar question. PoCo is a solid step on the right track,” said Jim Fan, senior research scientist at NVIDIA and leader of the AI Agents Initiative, in a statement.

Details of the MIT team’s research are available on arXiv.

ABOUT THE EDITOR

Jijo Malayil

Jijo is an automotive and business journalist based in India. Armed with a BA in History (Honors) from St. Stephen’s College, Delhi University, and a PG diploma in Journalism from the Indian Institute of Mass Communication, Delhi, he has worked for news agencies, national newspapers, and automotive magazines. In his spare time, he likes to go off-roading, engage in political discourse, travel, and teach languages.