Bringing Artificial Intelligence to Primary Care Medicine

Helen Albert chats with Samira Abbasgholizadeh-Rahimi, PhD, a researcher based at McGill University and the Mila-Quebec AI Institute.

Over the last few years there has been increasing hype and publicity about the use of artificial intelligence (AI) in many walks of life. In medicine, AI has the potential to improve outcomes for patients by speeding up various administrative and medical processes, improving the accuracy of diagnoses, and reducing costs. When used as a diagnostic aid, it can also improve the working lives of healthcare professionals such as pathologists and radiologists.

However, many patients and healthcare providers are still uncertain about how useful AI can be and are unclear about what it can and cannot achieve. Abbasgholizadeh-Rahimi is the Canada Research Chair (Tier II) in advanced digital primary health care and an assistant professor at McGill University and Mila-Quebec AI Institute.

Her research focuses on developing and implementing advanced, AI-based digital health technologies for use in primary healthcare. She has a particular interest in preventing chronic diseases and conditions before they start, and in making sure vulnerable populations are included in screening and risk analysis. She is also working to improve daily life and care for older adults with dementia and related conditions.

Abbasgholizadeh-Rahimi spoke with Inside Precision Medicine’s senior editor Helen Albert about her work, how AI can make a real difference at this early stage of healthcare, and how it can help achieve precision medicine on a wider scale.

Q: How can AI help us achieve precision medicine?

A: Over the past few years, AI has been successful at identifying high-risk populations in different fields of medicine, including cardiology, oncology, and radiology. Once these people are identified, it is possible to develop personalized treatments and preventive approaches for them.

Part of my lab’s research focuses on how we can identify high-risk people as early as possible and prevent disease before it happens. We want to tailor preventive interventions to the populations at higher risk of different diseases, including cardiovascular disease. I think AI and machine learning have been successful in helping us identify the people who are at higher risk, and also very successful in helping us personalize treatments and preventive interventions for those populations.

Of course, some aspects still need attention, such as ethical considerations, the responsible use of AI, and collecting datasets that reflect the whole population rather than one specific population. Preventing potential biases in AI is something we all need to work on, along with the explainability and transparency of these models. But overall, I think AI has been successful so far in helping us advance precision medicine and personalized approaches for people at higher risk.

Q: How can biases in AI influence healthcare provision?

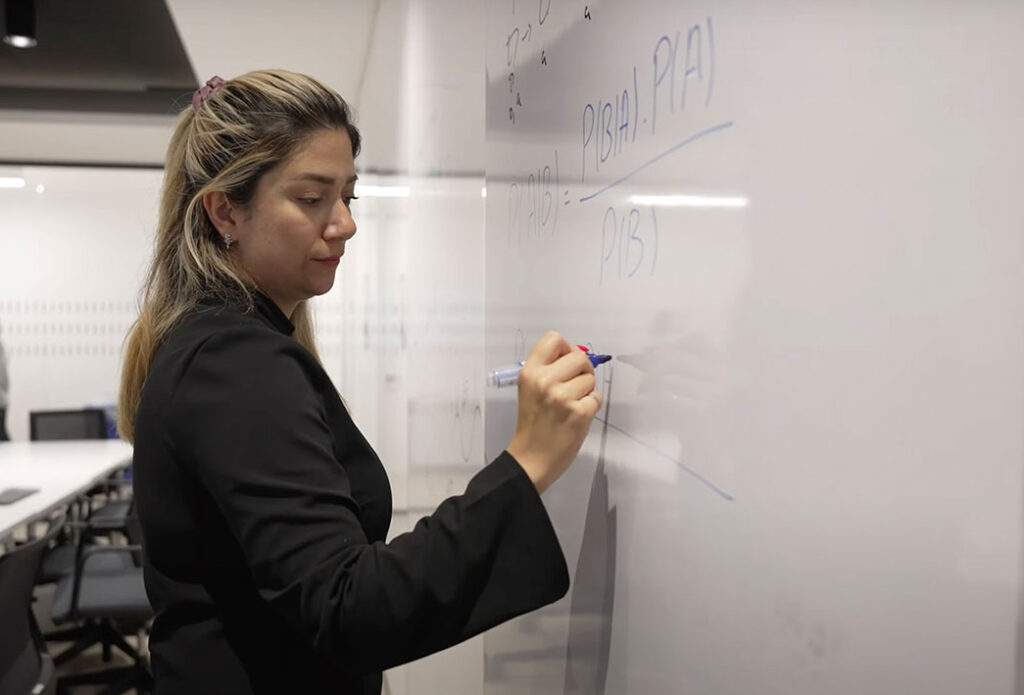

A: One example is cardiovascular disease in primary healthcare. Risk of cardiovascular disease in primary care is predicted using risk calculators such as the Framingham Risk Calculator, but the data used to develop that calculator and its algorithm go back to the 1990s. The majority of the population used to create the calculator came from one specific geographical location and was predominantly White. When the calculator is used for non-White populations, it does not perform as well, because it was developed using data from a mostly White population.

We still rely on datasets, as before, but we are now more conscious of the way we collect those data.

Q: What are you trying to achieve with your research?

A: I’ve been focusing on two areas. The first is women’s health, more specifically cardiovascular disease prediction and prevention among women who are at high risk. Cardiovascular disease in women has been underdiagnosed and undertreated for many years. We’re looking at differences across populations, for example people from ethnic minorities or those with lower socioeconomic status. These aspects are usually neglected in traditional cardiovascular risk prediction: social, economic, and other factors are not normally considered in risk prediction and prevention.

In primary healthcare, clinicians are not being trained in women’s cardiovascular disease prevention and management. That motivated us to focus on this area and to develop the tools needed for risk prediction and prevention in this specific population. We’ve been working on this for the past few years, analyzing different datasets and building algorithms that can help us identify those at higher risk. Now we are looking at how we can personalize preventive interventions for each woman by collecting her own data and providing individual, personalized recommendations.

The second population we are focusing on is older adults. We are looking into dementia and the early detection of mild cognitive impairment. We have studied frailty and depression in this population, and also how we can use sensors to identify which older adults are at higher risk.

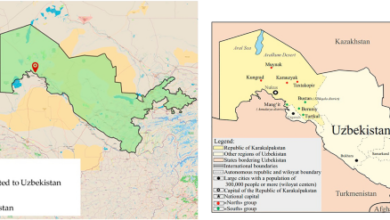

McGill University and the Mila-Quebec AI Institute. [Frank Roop]

Q: What kind of sensors are you using for this?

A: We’re using motion detection data collected in their homes. We are collaborating with an industry partner and using its Wi-Fi-connected sensors inside the house. We aim to identify patterns of movement, sleep, and other behaviors of older adults in their homes to see whether, in combination with validated questionnaires, we can detect signs of depression, frailty, and cognitive impairment at an early stage.

We also want to provide interventions for these older adults. A new area we are adding to the lab’s focus is using humanized robots to help provide care. We want to assess how humanized robots powered by generative AI can provide better care, reduce loneliness, and improve mental health among older adults. There is a lot of potential in integrating robotics and AI to improve the care we provide for vulnerable populations, including older adults and those who are not currently being seen by healthcare providers.

Q: I know that AI is often treated with suspicion, and many non-AI experts don’t really know what to do with it. How are you trying to make it more user-friendly?

A: There are two parts to this. On the development side, we are trying to make AI more transparent and to focus on its responsible use by looking into the ethical aspects and making sure the algorithms are as explainable and interpretable as possible, because there is always a trade-off between accuracy and explainability.

I’ve been co-leading a project with a colleague, Professor Elham Emami, also from McGill University, on how we can include equity, diversity, and inclusion (EDI) in the AI lifecycle. There is still a lot we can do, and a lot we don’t know, about how to integrate accessibility and EDI considerations into AI algorithm development and implementation.

The second part is implementation, which can be challenging. There is a lot of resistance and a lack of education about how these technologies can be used in clinical settings. Many clinicians are not familiar with what AI is or how to use it. From an educational perspective, our medical curricula are not yet prepared to train the next generation of clinicians, so we also have to work on that side. I am leading a project to develop a curriculum framework for teaching AI during family medicine residency. Hopefully, we can expand it to other fields in the coming years so that this training is in place for future clinicians.

Q: Do clinicians and healthcare workers come and talk to you more because you’re based in a hospital setting?

A: I think that has an impact. I’m not a clinician by training; I’m an engineer, but I am well placed in a clinical setting. I try to be in direct contact with clinicians and present in the hospital and the clinics so that I have a better understanding of what’s going on in the field. It’s a good way to earn trust. A simple discussion with clinicians can help me understand the problems they are facing, so we can build a solution for each problem. As an engineer, I’m always looking to develop solutions and tools for a given problem. Being based in a clinical setting has helped me identify the problems people face in clinical practice and develop solutions that address those needs, from both the clinician side and the patient side.

I think it is important for computer scientists and engineers to be more involved in clinical settings and fieldwork so they better understand the needs and problems that must be solved. On the other hand, clinicians also need to be open to new ideas and to using these tools. I have encountered a lot of excuses and resistance on that side as well. It’s important for engineers, computer scientists, and clinicians to be open to learning from and trusting each other so that this technology is used more in clinical settings.

Q: Is there more understanding and uptake of AI in medicine than there used to be?

A: I think it’s much better now. In 2017, when I started working in this area, I was among the first people talking about using AI in family medicine and healthcare. Everyone was skeptical, and nobody was eager to use those tools in clinical settings. Now we’re seeing much more curiosity about AI from clinicians. They are willing to start pilot testing and see where it goes, so it’s much better than before. I think one influential factor is improved knowledge of what AI is and what it is not. But of course, we still have to work on that side even more.

Education is very important for improving knowledge among clinicians and patients. They need to know exactly what AI can and cannot do, and we need to manage the expectation that AI will solve every problem. Instead, we need to show them that it can assist with some of their tasks if they are eager to learn.

Q: What do you hope to see going forward in the area of AI and family medicine?

A: I’m hoping to see more collaboration and interdisciplinary work between computer scientists and clinicians. I hope people will be eager to collaborate and open to multidisciplinary research and implementation work. I’m also hoping for more openness toward implementing these tools, because a study showed that up to 97% of the published work on AI in primary healthcare never moves beyond pilot testing to implementation in real clinical practice. I hope this will change, so that we can properly use this technology for the benefit of patients and healthcare professionals.

Helen Albert is senior editor at Inside Precision Medicine and a freelance science journalist. Before going freelance, she was editor-in-chief at Labiotech, an English-language digital publication based in Berlin focusing on the European biotech industry. Before moving to Germany, she worked at a range of science- and health-focused publications in London. She was editor of The Biochemist magazine and blog, and also worked as a senior reporter at Springer Nature’s medwireNews for a number of years, as well as freelancing for various international publications. She has written for New Scientist, Chemistry World, Biodesigned, The BMJ, Forbes, Science Business, Cosmos magazine, and GEN. Helen has academic degrees in genetics and anthropology, and also spent some time early in her career working at the Sanger Institute in Cambridge before deciding to move into journalism.