Patient-centered radiology reports with generative artificial intelligence: adding value to radiology reporting

Effective communication is essential in patient-centered health care24, and radiologists ultimately communicate with patients through their radiologic reports. Large language models (LLMs) have had a significant impact on the field of radiology11,25,26,27,28. In this study, we investigated whether ChatGPT can optimize communication with patients by generating patient-friendly radiologic reports. We also sought to assess the practical implications of such AI technology for patients and for the subsequent radiology workflow. We chose ChatGPT because it is an LLM that made history as the first AI model to be successfully popularized worldwide and has been widely adopted by the public.

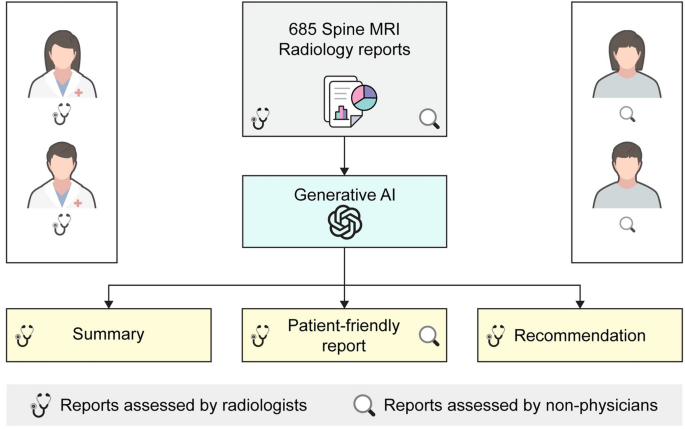

The use of ChatGPT to generate radiologic reports that are more concise or more easily understandable is an interesting and potentially valuable application of natural language processing technology. This study found that both formats of the transformed text generated by ChatGPT demonstrated excellent quality and almost perfect agreement among readers. Summarization of radiology reports is an actively researched topic, and other reports have also shown excellent agreement for simplified reports18,29. These results demonstrate the potential of employing a reliable AI chatbot such as ChatGPT to translate radiologic reports in a way that meets our established standards and expectations. We requested both a summary and a patient-friendly report from ChatGPT because these texts, although closely related, serve different purposes. The summary aimed to provide a concise report that includes all the essential information, even if medical terminology is retained as is30. The patient-friendly report, by contrast, focused on avoiding medical terminology and presenting the content in a way that patients can easily understand, prioritizing comprehension over brevity. Patient-friendly reports are designed to aid patient comprehension, whereas the summary could be used for communication between radiologists and referring physicians and could be particularly useful for hospitals whose original reports are not structured. LLMs have demonstrated potential in converting unstructured data into structured data, with encouraging outcomes for standardization and data extraction31,32. LLM-based rewriting has also been reported to significantly enhance the readability and understandability of radiology reports33,34.

Furthermore, this study evaluated the difference in comprehension between the original and patient-friendly reports among non-physicians. Although inter-rater agreement between the two non-physicians was not high, each of them showed a significant improvement. This suggests that, regardless of variation in individuals' background knowledge, understanding was substantially better with the patient-friendly report than with the original report, for which the average comprehension rate was below 50%. So far, no study has evaluated the quality of radiologic reports generated by ChatGPT through assessments by both radiologists and non-physicians, considering both accuracy and comprehensibility; this is one of the key strengths of our study. By providing both a summary report for medical professionals and a patient-friendly report for patients, ChatGPT has the potential to enhance communication, improve understanding, and ultimately contribute to better patient care in the field of radiology.
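
The agreement statements above can be illustrated with a chance-corrected agreement statistic. The following is a minimal sketch of Cohen's kappa for two raters, under the assumption (not stated in this section) that a kappa-type statistic underlies phrases such as "almost perfect agreement". The function name `cohens_kappa` and the example ratings are hypothetical and are not study data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items (illustrative sketch)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items.")
    n = len(rater_a)

    # Observed agreement: fraction of items given the same score by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of the raters' marginal frequencies per category.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical category; agreement is trivially complete.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical ratings (1 = understood, 0 = not understood), NOT study data.
print(cohens_kappa([1, 1, 0, 0], [1, 1, 0, 0]))  # 1.0 (identical ratings)
print(cohens_kappa([1, 1, 0, 0], [1, 0, 1, 0]))  # 0.0 (agreement no better than chance)
```

A low kappa between two raters does not rule out an improvement in each rater, because kappa measures whether the raters gave the same score to the same report, not whether each rater's scores rose from the original to the patient-friendly version.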

Generating recommendations is a challenging application of natural language processing technology. In this study, the AI-generated recommendations received the highest scores among the three formats in the radiologists' qualitative evaluation and, unsurprisingly, differed significantly in length. Our original reports, which are written primarily for communication with healthcare professionals, commonly suggest only clinical correlation for routine findings unless an important recommendation needs specific emphasis. Although this approach respects the physician's expertise and the practical considerations of clinical situations, such a report may come across as somewhat less cautious or empathetic when read by patients. As in a previous study12, people may perceive a comprehensive explanation by ChatGPT as superior to a succinct one-word response from a human doctor, or even to a recommendation that pinpoints a single key point, and this may reflect the compassionate attributes associated with ChatGPT.

Translation of radiologic reports using ChatGPT has shown many benefits, as discussed above. However, the confident use of ChatGPT in the medical domain continues to raise questions. Artificial hallucination in generative AI models such as ChatGPT is an important inherent issue when these models are used in medical applications. In this study, two radiologists thoroughly assessed the accuracy of the generated text. In the reports generated by ChatGPT, artificial hallucination was observed at a rate of 1.12%, and inappropriate language that could lead to misunderstandings in future communications was observed at a rate of 7.40%. Although the observed rate of artificial hallucination is not high, it cannot be disregarded in medicine, which relies heavily on truthfulness, especially for non-healthcare professionals, who may not be aware of the problems such errors can pose. Moreover, ChatGPT was not fundamentally developed for healthcare purposes. Radiologists are therefore responsible for identifying and correcting artificial hallucinations going forward.

This study has several limitations. First, it was a single-center study using spine MRI reports. Thus, the consistency of our findings should be confirmed in reports of other imaging modalities and of other joints and organs. Second, we examined the potential of ChatGPT alone, and no other LLMs, in the unrestricted alteration of radiology reports. The consistency of the findings should be verified in the recently introduced GPT-4, which has enhanced reasoning capability, and in other LLMs. However, this paper focuses on whether general individuals without medical knowledge can understand medical reports through AI and on the risks involved, so the novelty or performance of the LLM is less critical than identifying which LLM is most widely known and freely available to the public. By using ChatGPT, which can be deemed successful in its popularization among the public, the study aimed to identify and highlight the challenges associated with its use, with the dual purpose of engaging both medical professionals in the field of radiology and the public. Third, comprehension was evaluated by only two non-physician raters who lacked medical backgrounds. Although the number of evaluators was limited, we believe this study lays the groundwork for future research exploring differences in comprehension and for further investigations into AI-generated reports across a more diverse group of general individuals. The clinical significance highlighted by this study opens up the possibility of immediately providing real patients with radiologic reports in a patient-friendly format using LLMs, and it can serve as a foundation for future research in this area. Fourth, because the study was at an early stage, the prompts used in this manuscript were determined without prompt engineering expertise. Instead, they were written from a clinical radiologic viewpoint. This could have influenced the effectiveness of the prompts and consequently the outcomes of the study. Prompts crafted with advanced prompt engineering are expected to yield better results18,27. Finally, the content was not directly validated with patients. Instead, we included two adults of average knowledge level, one male and one female, to evaluate the comprehensibility of the reports, and paired them with two radiologists who assessed the appropriateness and accuracy of the translated reports. Of course, the non-physician raters did not have professional medical knowledge. Future research is expected to include evaluations by patients undergoing MRI scans, or radiology reports written to a specific readability level (e.g., eighth grade)34,35.
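
A readability target such as "eighth grade" can be checked automatically. The sketch below estimates the Flesch-Kincaid grade level, under the assumption that a grade-level formula of this kind is what such a target refers to; this section does not name a specific metric. The syllable counter is a crude vowel-group heuristic, the function names are hypothetical, and the two example sentences are invented for illustration and are not taken from study reports.

```python
import re


def count_syllables(word):
    """Approximate syllables as runs of vowels (heuristic, not dictionary-based)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_kincaid_grade(text):
    """Estimate the Flesch-Kincaid grade level of an English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("Text must contain at least one sentence and one word.")
    syllables = sum(count_syllables(w) for w in words)
    # Standard Flesch-Kincaid grade-level coefficients.
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


# Invented example sentences, NOT taken from the study's reports.
original_style = ("Multilevel degenerative spondylosis with intervertebral "
                  "foraminal stenosis is demonstrated.")
patient_style = "The disc is worn. It may press on a nerve."

print(flesch_kincaid_grade(original_style))
print(flesch_kincaid_grade(patient_style))
```

With these invented sentences, the patient-style text scores a lower grade level than the report-style text. A real evaluation would need a validated readability tool and actual report text.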

This study evaluated the effectiveness of AI-generated radiology reports across several dimensions, including summaries, patient-friendly reports, and recommendations, thereby contributing to the enhancement of radiology workflows. The results consistently confirmed their performance while maintaining accuracy. Furthermore, the study emphasizes the value of patient-centered radiology: AI-generated patient-friendly reports improved comprehension, which promotes better patient engagement and understanding of medical imaging results. Although AI-generated reports may still contain false information, even if only in minor instances, their potential as a useful tool is convincingly demonstrated in this study. Rather than posing potential harm, they show promise as a valuable tool, provided that radiologists oversee and correct them.