Apple Intelligence is bringing generative AI to your iDevices

This article covers a developing story. Continue to check back with us as we will be adding more information as it becomes available.

Apple has long been rumored to be bringing generative AI to its devices, with leaks suggesting that the company had shopped around for the tech to power what it was working on. Now, the company has finally announced its suite of AI features at WWDC, and it’s calling it Apple Intelligence. Apple says that thanks to the A17 Pro and its M series of chips, all of this software can run locally on your devices, without giving any of your personal data to Apple.

Apple is very clear that it doesn’t want to store or misuse your data. The company points out that users have no way to verify that data on a server isn’t accessed, whereas the software on your smartphone can still be audited. To get around this, Apple is also launching “Private Cloud Compute”, which lets you privately run models on your data while offering the privacy and security that you can expect from your smartphone.

Proofread and summarize

Source: Apple

The first big generative use case that Apple has shown off is the introduction of system-wide writing tools within its new software versions. You can proofread and summarize text, or even ask it to write for you. These writing features, Apple says, are coming to Mail, Notes, Safari, Keynote, and even third-party apps. It’ll even let you choose a tone, so that you can adjust the writing to read exactly the way you want.

Personal context

Your phone will understand you

Source: Apple

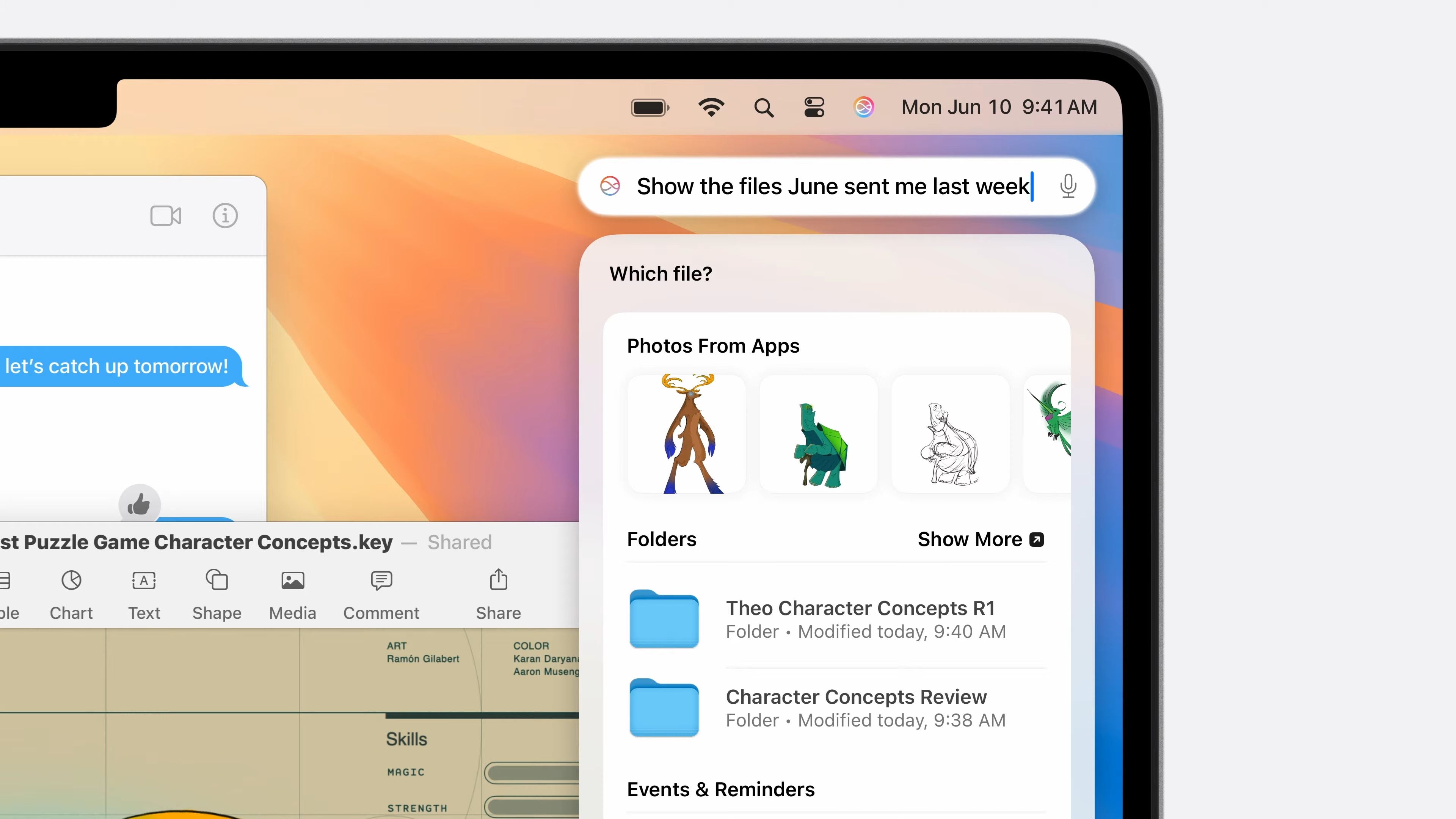

The big thing that Apple is pushing is that your smartphone won’t just be smart, it will be smart relative to you. Apple says that it will understand your life well enough to piece together the context behind your requests. For example, if you ask it to “Play the podcast that my wife sent me the other day,” it can actually do that. It will understand who your wife is, pull that information from your messages, and play the podcast for you.

This goes for practically any request you can make that requires your phone to understand you. It can understand what you mean when you ask for files across your emails or texts, and it can work out which notifications need priority attention rather than just shoving everything in your face at all times.

Plan your day and find information

Pulling information from your calendar and other places

Source: Apple

If you ask Siri whether you can still make it to your daughter’s play on time, it can pull information from your calendar and check your GPS, traffic statistics, and more to find out if you can. You don’t need to do anything; simply asking is enough.

Another demo that Apple showed off was asking Siri “When will my Mom arrive at the airport?” Siri was able to pull up details of the incoming flight, including the expected landing time, making it significantly easier to find information that might be stored across multiple apps, such as Messages, Calendar, and Mail.

Image Playground

Generate images right on your device

If you want to create an image to reply to someone, you can generate one on-device from your own prompts, thanks to Image Playground. The feature lets you create images to send to people, and it will use your personal context, pulling information from your conversation, to tailor the result further.