Beware of identity fraud empowered by generative AI

While some businesses and organizations have called for an outright ban or pause on AI, neither is possible, because a number of apps and software products are rapidly integrating AI to forestall regulation. In fact, Meta and Google recently added AI to their flagship products with mixed results from customers. Instead of trying to ban or slow down emerging technology, Congress should pass the Biometric Information Privacy Act, or BIPA, to ensure that the misuse of someone’s identity using generative AI is punishable by law.

Recently, corporate leaders and school principals alike have been impersonated using generative AI, leading to scandals involving nonconsensual intimate images, sexual harassment, blackmail, and financial scams. When used in scams and hoaxes, generative AI provides an incredible advantage to cybercriminals, who often combine AI with social engineering techniques to enhance the ruse.

For example, before the New Hampshire primary earlier this year, some residents received a robocall in which President Biden appeared to urge them not to vote in the GOP primary and to “save” their vote for November. However, Biden’s voice had been cloned. In his 2024 State of the Union address, Biden called for a ban on impersonation technologies.

Apps and software that can be used to make convincing audio/video impersonations, like Snapchat’s face swap feature, are already available on your smartphone and computer. Using vast datasets available online, apps powered by generative AI allow users to create original content without all of the expensive equipment, professional actors, or musicians once needed for such a production. And like most technology made for the masses, it’s fun to play with. The excitement quickly devolved into an entire industry of deepfake pornographic vignettes of celebrities and nonpublic figures.

In 2019 and again in 2021, Representative Yvette Clarke of New York proposed the Deep Fakes Accountability Act, which would amend the laws and increase penalties for fraud and false statements to include audio and visual impersonation through generative AI. In Massachusetts, Representative Dylan Fernandes of Falmouth championed an act similar to BIPA that is now being considered as part of a larger data privacy act by the Legislature.

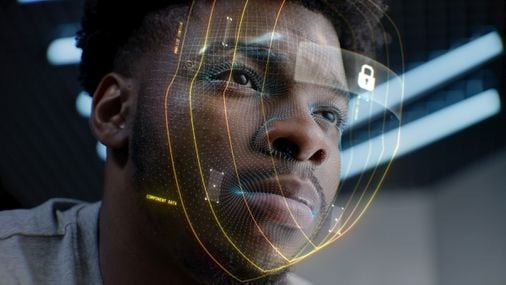

Generative AI represents a concerning intersection of advanced technology and privacy infringement. Recently, The New York Times tech journalist Kashmir Hill tracked the rise of new biometric products in her bestselling book, “Your Face Belongs to Us.” Tech companies, like Clearview AI and Palantir, use facial recognition algorithms that have sparked widespread criticism due to the indiscriminate scraping of billions of images from social media platforms and other online sources without users’ consent, she wrote. By compiling these large databases about people, facial recognition technology companies enable their clients, including law enforcement agencies, to conduct mass surveillance and identify individuals without their knowledge or permission.

This unchecked access to personal data raises serious ethical questions about privacy, consent, and the potential for abuse. Moreover, the lack of transparency surrounding generative AI models and the refusal to disclose what kinds of data are stored and how that data is transmitted put individual rights and national security at risk. Without strong regulations, widespread public adoption of this technology threatens individual civil liberties and is already creating new tactics for cybercrime, including posing as colleagues over video conferencing in real time.

These issues highlight the urgent need for comprehensive privacy legislation in the digital age. Regulation aimed at stopping the spread of generative AI is futile, though. Just as the federal government doesn’t ban 3-D printers because users can make 3-D-printed guns, Congress should manage the improper use of this emerging technology by requiring active consent.

BIPA, the Biometric Information Privacy Act, originated in Illinois in 2008 and protected residents’ biometric data from incorporation into databases without affirmative consent. The act also penalized companies for each individual infraction. In a landmark case filed in 2015, Facebook paid out $650 million in 2021 for capturing users’ face prints and then auto-tagging users in photos. In the class action suit, each Illinois resident affected received at least $345.

Biometric data, such as fingerprints, facial recognition, voice prints, iris scans, and even DNA, uniquely identify individuals. Sometimes biometric data serve as a password to access sensitive information and spaces. The integration of biometric identification on cellphones and computers, such as Face ID, fingerprint scans to unlock a laptop, or hand scans at Whole Foods, is ubiquitous now, but it remains controversial among privacy advocates. While biometric technology can offer convenience and added security, its misuse or mishandling can lead to severe breaches of privacy and personal autonomy.

Unlike passwords or PINs, which can be changed if compromised, biometric data is inherent to an individual and cannot be altered. Moreover, the collection and storage of biometric data by multinational technology companies, like Palantir, raise concerns about surveillance and potential data misuse by governments, corporations, or malicious actors. Mass surveillance and the creation of comprehensive profiles of individuals without their consent could lead to discrimination, identity theft, or even a surveillance state.

Furthermore, biometric information privacy is essential for maintaining individual autonomy and freedom of expression. Without adequate protection, individuals may feel pressured to relinquish their biometric data in various contexts, compromising their ability to control their personal information and make informed decisions about its use. Instead of making you track down every company that may have used your data in order to “opt out,” BIPA requires active opt-in.

As generative AI technology continues to advance, the threat to personal identity becomes increasingly sophisticated, necessitating robust safeguards, education, and detection mechanisms to mitigate harm. Biometric information privacy is crucial for protecting individuals’ fundamental rights in the digital age. Governments, businesses, and technology developers must prioritize privacy regulations, transparent data practices, and secure storage systems to ensure that biometric data is used responsibly and ethically, respecting individuals’ rights to privacy and personal autonomy.

It’s also possible that these generative AI systems are more problematic than profitable, raising the question of what kinds of emerging technology undermine personal and public safety.

Joan Donovan is an assistant professor of journalism and emerging media studies at Boston University and founder of The Critical Internet Studies Institute.