Rise in AI Adoption Prompts Global Push for Regulation – Fintech Schweiz Digital Finance News

The rapid expansion and deployment of generative artificial intelligence (gen AI) and AI more broadly across organizations worldwide has resulted in a global push for regulation.

In the US, President Joe Biden signed an executive order on AI in October 2023, laying out AI standards that are set to be eventually codified by financial regulators. Over the past five years, 17 US states have enacted 29 bills focused on regulating the design, development and use of AI, according to the Council of State Governments.

In China, President Xi Jinping last year introduced the Global AI Governance Initiative, a comprehensive plan focusing on AI development, safety and governance. Authorities have also issued “interim measures” to regulate the provision of gen AI services, imposing various obligations relating to risk assessment and mitigation, transparency and accountability, as well as user consent and authentication.

Recently, Japanese Prime Minister Fumio Kishida unveiled an international framework for the regulation and use of gen AI called the Hiroshima AI Process Friends Group. The group, which focuses on implementing principles and a code of conduct to address gen AI risks, has already gained support from 49 countries and regions, the Associated Press reported on May 03.

Impact of EU’s AI Act on financial services firms

The European Union’s AI Act is perhaps the most impactful and groundbreaking regulation to date. Approved by the EU Parliament in March 2024, the regulatory framework represents the world’s first major law for regulating AI and is set to serve as a model for other jurisdictions.

According to Dataiku, an American AI and machine learning (ML) company, the EU AI Act will have considerable impact on the financial services industry and firms should prepare for compliance now.

Under the AI Act, financial firms will need to categorize AI systems into one of four risk levels and take specific mitigation steps for each category. They will need to explicitly record the “Intended Purpose” of each AI system before they start developing the model. While Dataiku says that there’s some uncertainty about how this will be interpreted and enforced, it notes that this indicates a stricter emphasis on maintaining proper timelines than current regulatory standards.

Additionally, the AI Act introduces “Post Market Monitoring (PMM)” obligations for AI models in production. This means that firms will be required to continually monitor and validate that their models remain in their original risk category and maintain their intended purpose. Otherwise, reclassification will be needed.
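As a rough illustration of the record-keeping described above, the sketch below models a single entry in a hypothetical internal AI inventory. Everything in it is an assumption made for illustration: the class and field names are invented, the four tier labels follow the commonly cited "unacceptable / high / limited / minimal" summary rather than anything spelled out in this article, and the checks are far simpler than what a regulator or Dataiku would expect.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum


class RiskTier(Enum):
    # Assumed labels for the four risk levels; not quoted from the article.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AISystemRecord:
    """Hypothetical inventory entry for one AI system."""
    name: str
    intended_purpose: str
    purpose_recorded_on: date
    development_started_on: date
    risk_tier: RiskTier
    review_log: list = field(default_factory=list)

    def purpose_recorded_before_development(self) -> bool:
        # The intended purpose should be on file no later than the start of development.
        return self.purpose_recorded_on <= self.development_started_on

    def review(self, observed_purpose: str, assessed_tier: RiskTier, on: date) -> bool:
        """Log a periodic monitoring check; return True if reclassification is needed."""
        needs_reclassification = (
            observed_purpose != self.intended_purpose
            or assessed_tier != self.risk_tier
        )
        self.review_log.append((on, observed_purpose, assessed_tier, needs_reclassification))
        return needs_reclassification


# Example: a credit-scoring model whose use has drifted since launch.
record = AISystemRecord(
    name="credit-scoring-v1",
    intended_purpose="consumer credit scoring",
    purpose_recorded_on=date(2024, 1, 10),
    development_started_on=date(2024, 2, 1),
    risk_tier=RiskTier.HIGH,
)
unchanged = record.review("consumer credit scoring", RiskTier.HIGH, date(2024, 9, 1))
drifted = record.review("marketing segmentation", RiskTier.LIMITED, date(2025, 3, 1))
```

Here the first review leaves the classification untouched, while the second flags the system for reclassification because both its observed purpose and its assessed tier differ from what was recorded.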

Dataiku recommends that financial services companies promptly familiarize themselves with the AI Act’s requirements and assess whether current practices meet these standards. Additionally, documentation should begin at the inception of any new model development, particularly when models are likely to reach production, it says.

Moreover, Dataiku warns that the EU’s proactive stance may encourage other regions to accelerate the development and implementation of AI regulations. Tech consulting firm Gartner predicts that by 2026, 50% of governments worldwide will enforce the use of responsible AI through regulations, policies and the need for data privacy.

A groundbreaking regulatory framework

The EU’s AI Act is the world’s first comprehensive regulatory framework specifically targeting AI. The legislation adopts a risk-based approach to products or services that use AI, and imposes different levels of requirements depending on the perceived threats the AI applications pose to society.

In particular, the law prohibits applications of AI that pose an “unacceptable risk” to the fundamental rights and values of the EU. These applications include social scoring systems and biometric categorization systems.

High-risk AI systems, such as remote biometric identification systems, AI used as a safety component in critical infrastructure, and AI used in education, employment and credit scoring, must comply with stringent rules relating to risk management, data governance, documentation, transparency, human oversight, accuracy and cybersecurity, among others.

Gen AI systems are also subject to a set of obligations. In particular, these systems must be developed with advanced safeguards against violating EU laws, and providers must document their use of copyrighted training data and uphold transparency standards.

For foundation models, which include gen AI systems, additional obligations are imposed, such as demonstrating mitigation of potential risks, using unbiased datasets, ensuring performance and safety throughout the model’s lifecycle, minimizing energy and resource usage and providing technical documentation.

The AI Act was finalized and endorsed by all 27 EU member states on February 02, 2024, and by the EU Parliament on March 13, 2024. After final approval by the EU Council on May 21, 2024, the AI Act is now set to be published in the EU’s Official Journal.

Provisions will start taking effect in stages, with countries required to ban prohibited AI systems six months after publication. Rules for general purpose AI systems like chatbots will start applying a year after the law takes effect, and by mid-2026, the complete set of regulations will be in force.

Violations of the AI Act will draw fines of up to EUR 35 million (US$38 million), or 7% of a company’s global revenue.
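To give a sense of scale, the short sketch below works out the fine ceiling for a given revenue figure. It assumes the higher of the two amounts applies, which this article does not state, and the function name and constants are made up for the example. It is an arithmetic illustration only, not legal guidance.

```python
from decimal import Decimal

# Figures quoted above; the "whichever is higher" rule is an assumption.
FIXED_CAP_EUR = Decimal("35000000")
REVENUE_SHARE = Decimal("0.07")


def max_fine_eur(global_revenue_eur: Decimal) -> Decimal:
    """Return the assumed upper bound of a fine for the given global revenue."""
    return max(FIXED_CAP_EUR, REVENUE_SHARE * global_revenue_eur)


# A firm with EUR 100 million in revenue: 7% is EUR 7 million, so the fixed cap applies.
small_firm_cap = max_fine_eur(Decimal("100000000"))

# A firm with EUR 1 billion in revenue: 7% is EUR 70 million, above the fixed cap.
large_firm_cap = max_fine_eur(Decimal("1000000000"))
```

Under this assumption, the two ceilings meet at EUR 500 million in global revenue. Above that level, the percentage-based figure is the larger one.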

AI adoption surges

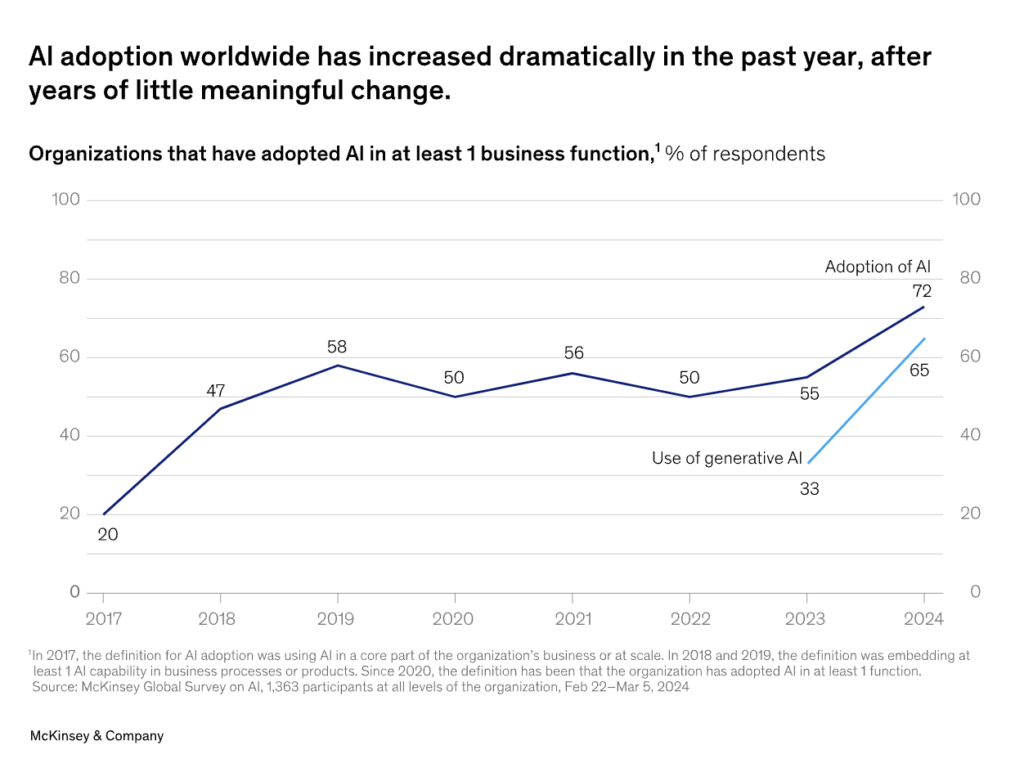

Globally, jurisdictions are racing to regulate AI as adoption of the technology surges. A McKinsey survey found that adoption of AI has reached a remarkable 72% this year, up from 55% in 2023.

Gen AI is the number one type of AI solution adopted by businesses worldwide. A Gartner study conducted in Q4 2023 found that 29% of respondents from organizations in the US, Germany, and the UK are using gen AI, making it the most frequently deployed AI solution.

Featured image credit: edited from freepik