EU's new AI rules: Industry opposed to revealing guarded trade secrets

The European Union’s recently enacted AI Act will be implemented in phases over the next two years, giving regulators time to enforce the new laws

Rimjhim Singh New Delhi

New laws governing the use of artificial intelligence (AI) in the European Union (EU) will compel companies to be more transparent about the data they use to train their systems, which could expose some of the industry’s most closely guarded secrets, according to a report in the Times of India.

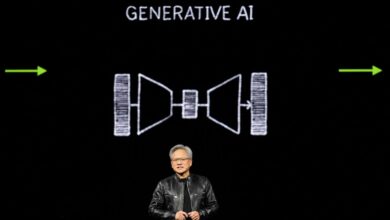

Since Microsoft-backed OpenAI introduced ChatGPT to the public 18 months ago, there has been a significant increase in public interest and investment in generative AI. This technology involves applications capable of swiftly generating text, images, and audio content.

However, as the industry expands, questions have arisen about how AI companies acquire the data used to train their models and whether using bestselling books and Hollywood movies without the creators’ permission constitutes a copyright breach.

Act mandates ‘detailed summaries’

One of the more contentious sections of the Act mandates that organisations deploying general-purpose AI models, such as ChatGPT, must provide “detailed summaries” of the content used for training. The newly established AI Office announced plans to release a template for these summaries in early 2025, following stakeholder consultations. However, AI companies strongly oppose disclosing the training content of their models, arguing that such information constitutes a trade secret and could give competitors an unfair advantage if made public, the report said.

Over the past year, several prominent tech companies, such as Google, OpenAI, and Stability AI, have faced lawsuits from creators alleging improper use of their content for training AI models. Although US President Joe Biden has issued multiple executive orders addressing AI security risks, copyright issues remain largely untested, the report further stated.

OpenAI faces backlash

In response to increasing scrutiny, technology companies have entered into numerous content-licensing agreements with media outlets and websites. Notably, OpenAI has secured deals with the Financial Times and The Atlantic, while Google has partnered with NewsCorp and the social media platform Reddit.

Despite these efforts, OpenAI faced criticism in March when chief technology officer (CTO) Mira Murati declined to confirm whether YouTube videos had been used to train its video-generating tool, Sora, saying that such disclosure would violate the company’s terms and conditions.