Adobe Premiere Pro to Introduce Generative AI in 2024 – Adding Objects, Extending Shots, and Other AI-Enhanced Tools

It was somewhat obvious that this year’s NAB show wouldn’t be short on big AI-related announcements. And so it goes: Adobe just shared a sneak peek at new video features it plans to include in Premiere Pro in 2024. Later this year, editors will be able to generate and add objects into shots, remove distracting elements with one click, and even extend footage length. Interestingly, Adobe also previewed its vision for integrating third-party generative models into the editing software (yes, OpenAI’s Sora is among them). Let’s take a closer look at what we can expect from the new generative AI tools in Adobe Premiere Pro.

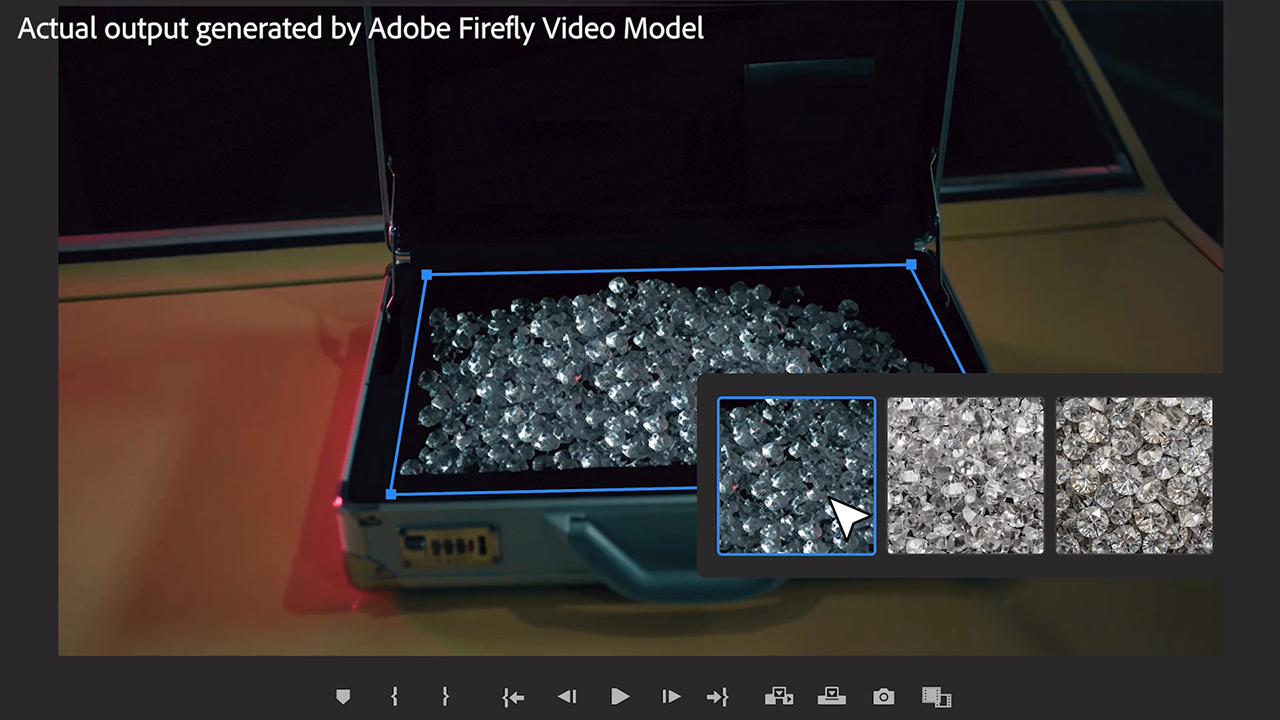

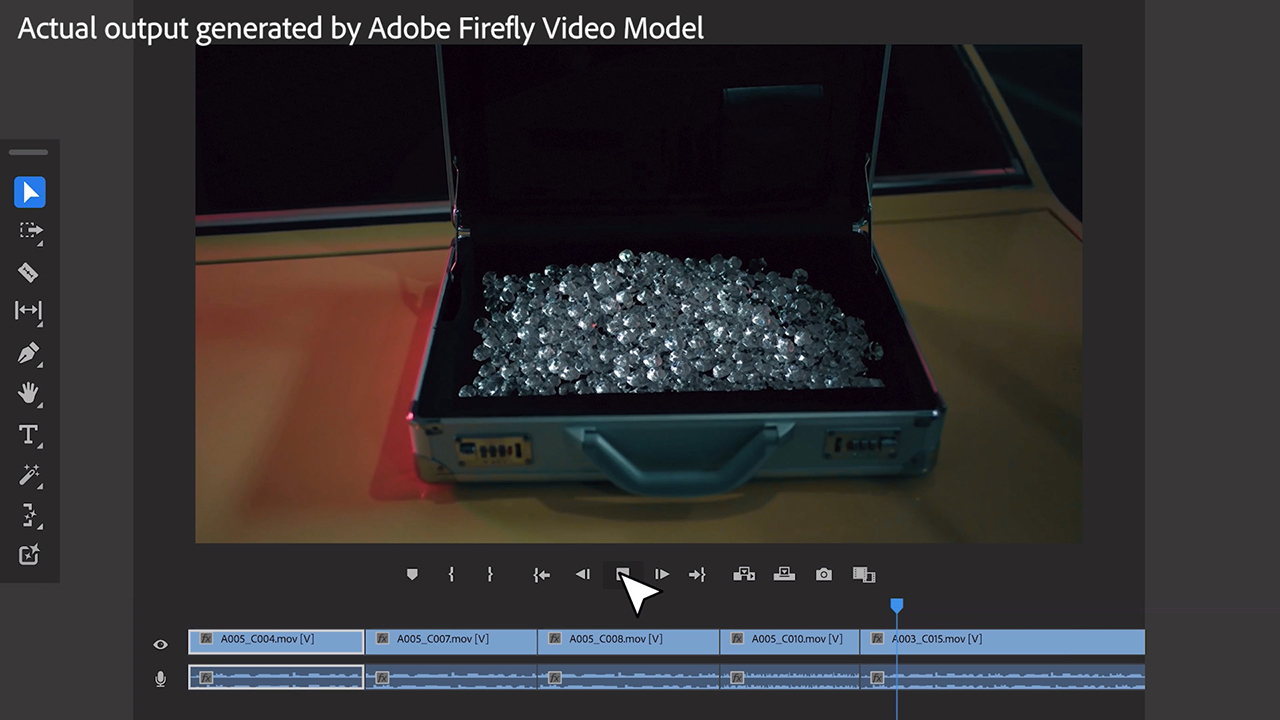

Last year, during NAB 2023, Adobe only announced that it was working on an AI model: Firefly for video. Fast forward to today, and we get to glimpse the first experimental tests of this tool. Although the new technology is still considered to be in the research phase, what you see in Adobe’s presentation are real pixels generated with Adobe’s AI. Well, I can’t deny that the results look impressive!

Adding or removing objects with generative AI in Adobe Premiere Pro

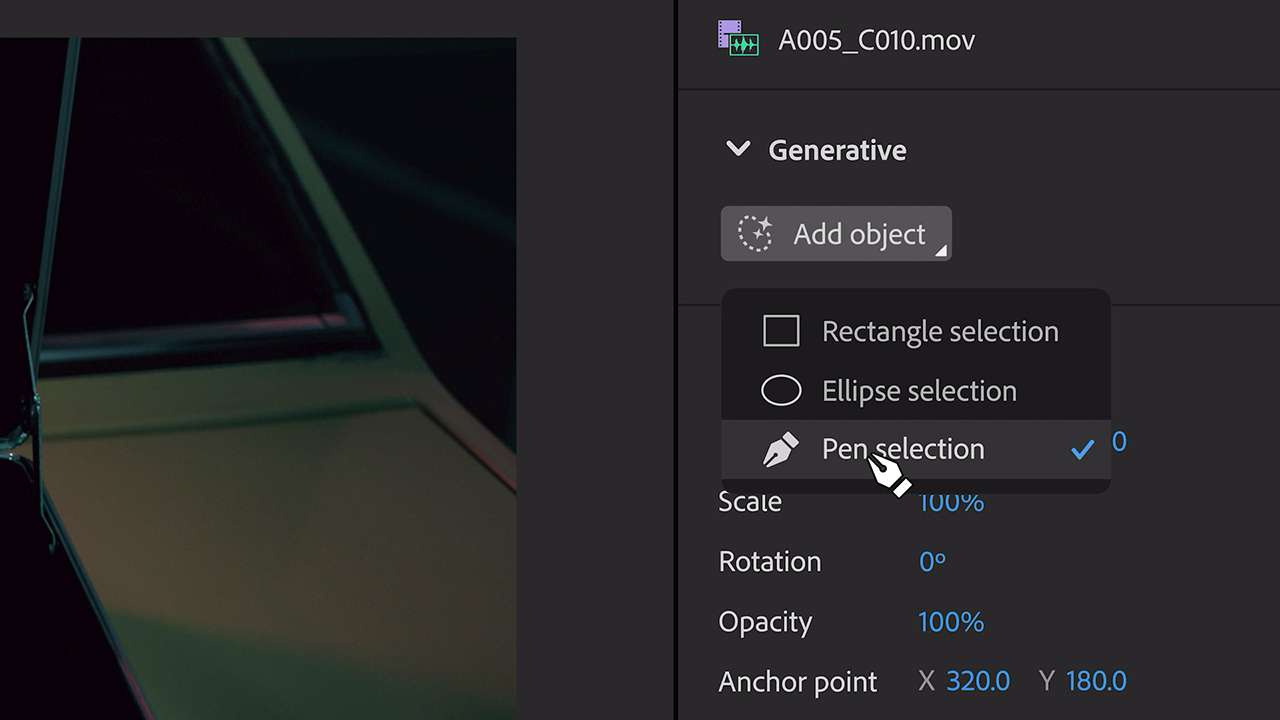

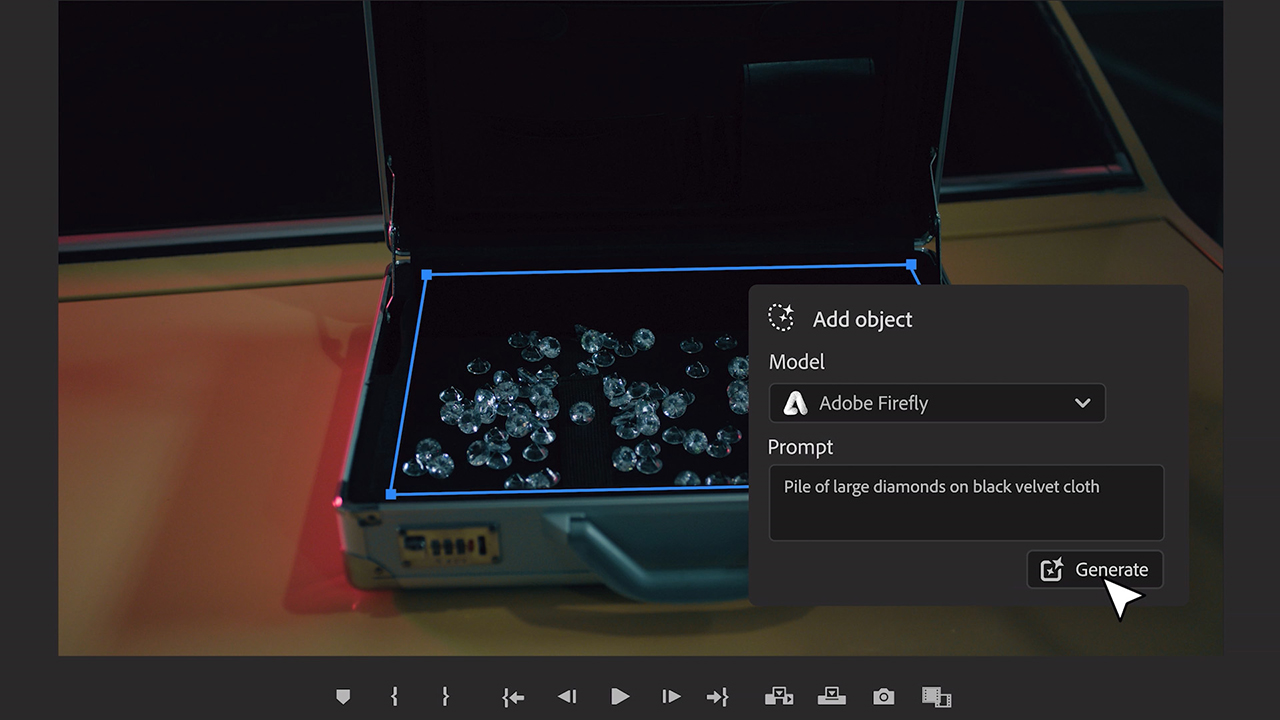

First up is something we have been waiting for, given how well this AI feature already works in Adobe Photoshop: Generative Fill. Editors will be able to select and track objects in shots and then replace them with automatically generated pixels. The preview shows Adobe Firefly both removing elements from a scene completely and adding something different in their place. The latter works based on text prompts, a workflow familiar from text-to-image generators.

In my opinion, this could become one of the most useful features for simplifying and accelerating editing workflows. Just imagine: quickly replacing a logo for a client who went through rebranding, or removing a piece of gear that crept into the final take by mistake. Even the famous “Boom in the frame!” might not be an issue anymore (just kidding!).

Extending the length of shots with AI

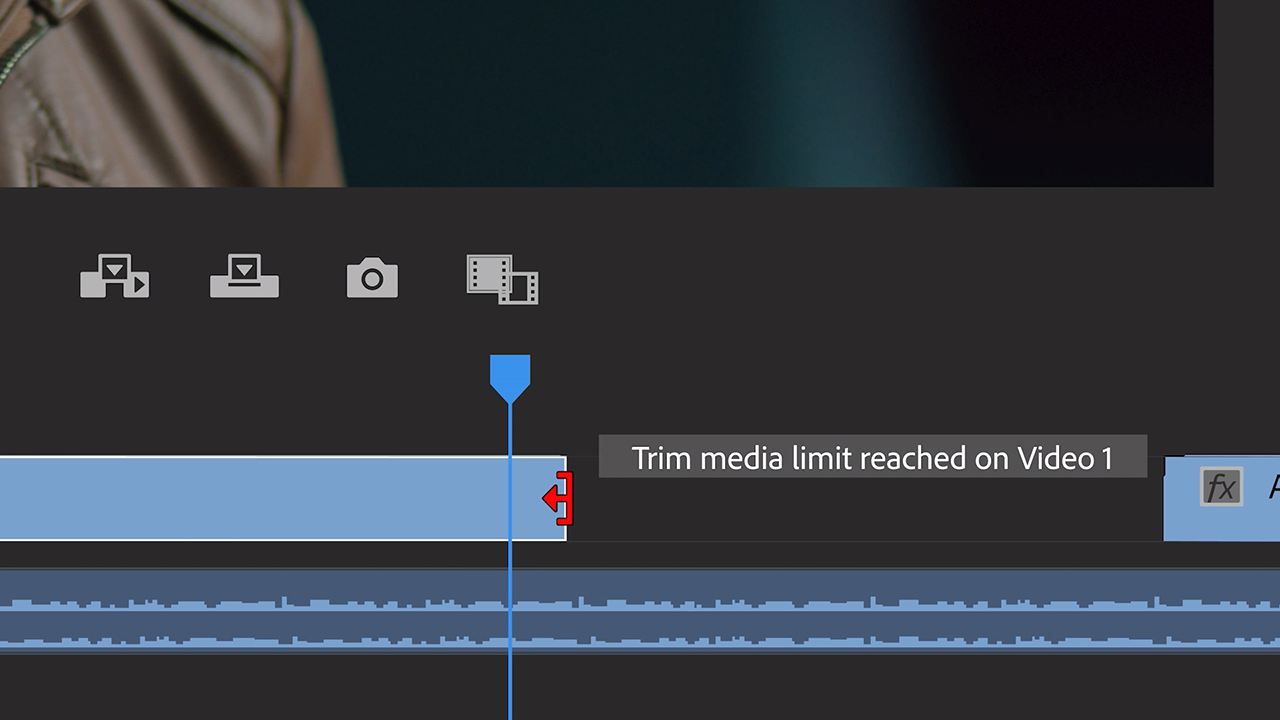

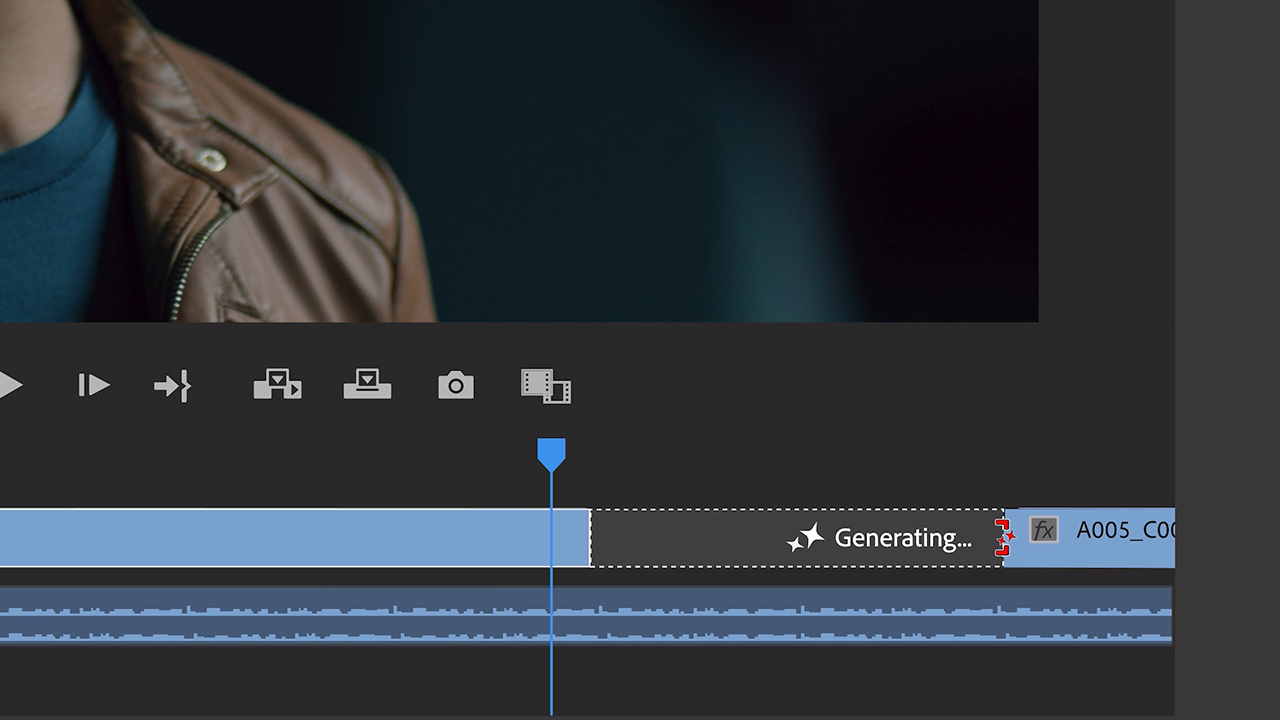

Another feature that Adobe previewed is called Generative Extend, and it allows users to add frames to make clips longer. According to the demonstration, this workflow will function similarly to Adobe’s Remix tool, another AI-enhanced feature we are already familiar with, only this time for video rather than soundtracks. Simply drag the right edge of your clip to extend it, and let AI do the rest.

The idea behind it makes sense: editors often run into the problem of a shot not being long enough. However, when the clip involves an actor’s performance, I’m afraid we might be entering a grey zone. These are real people whose faces and acting will be generated by AI, even if only for a few frames. That still doesn’t sit right with me, so we’ll see what ethical solution Adobe is going to offer.

Working with third-party generative AI models like Sora

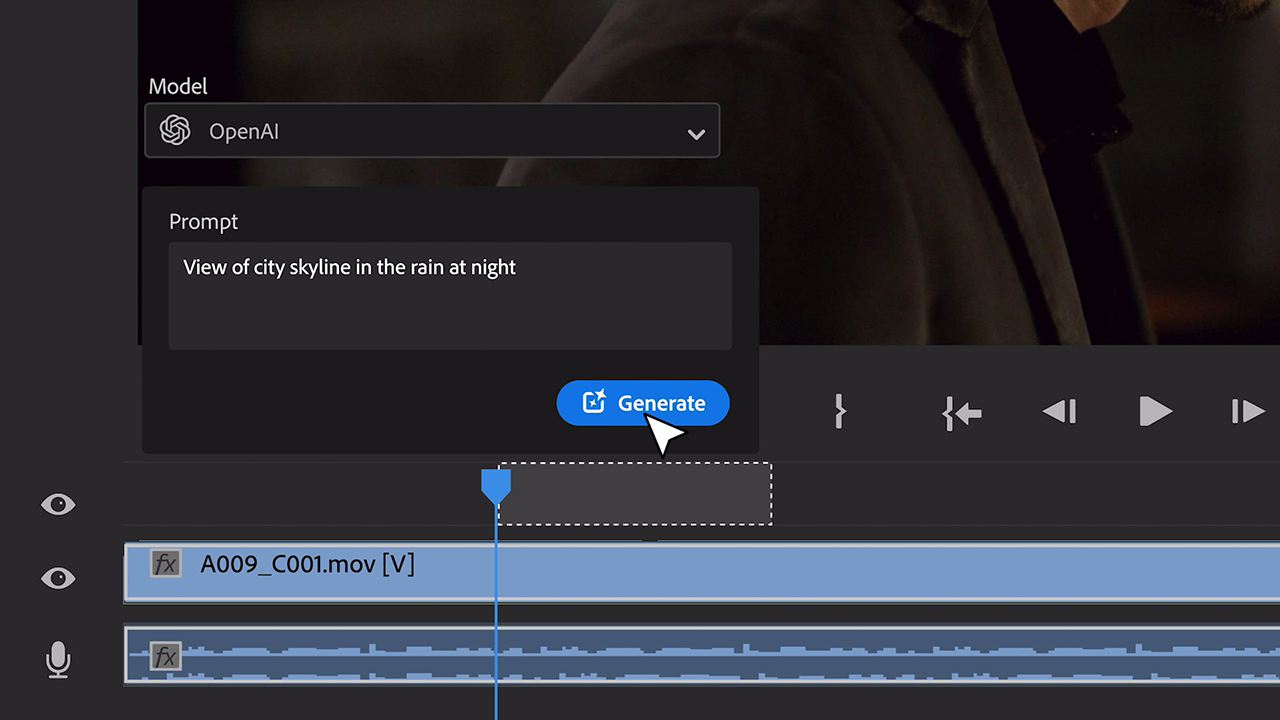

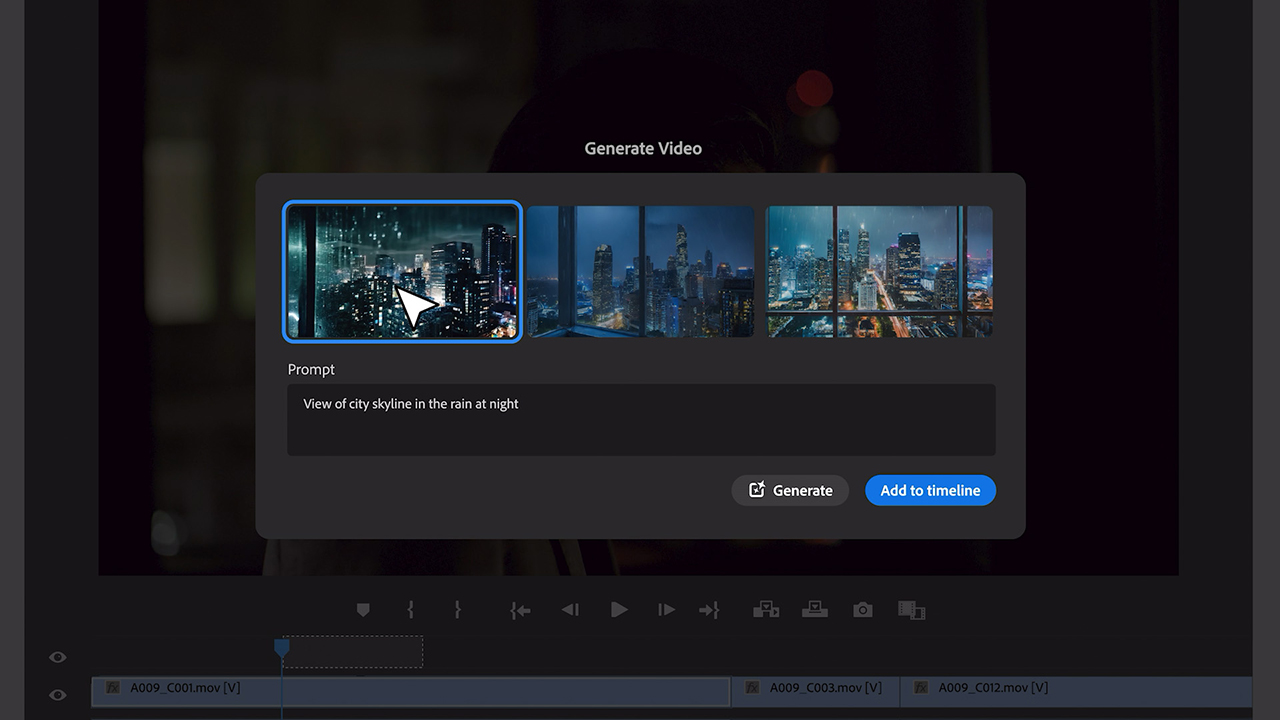

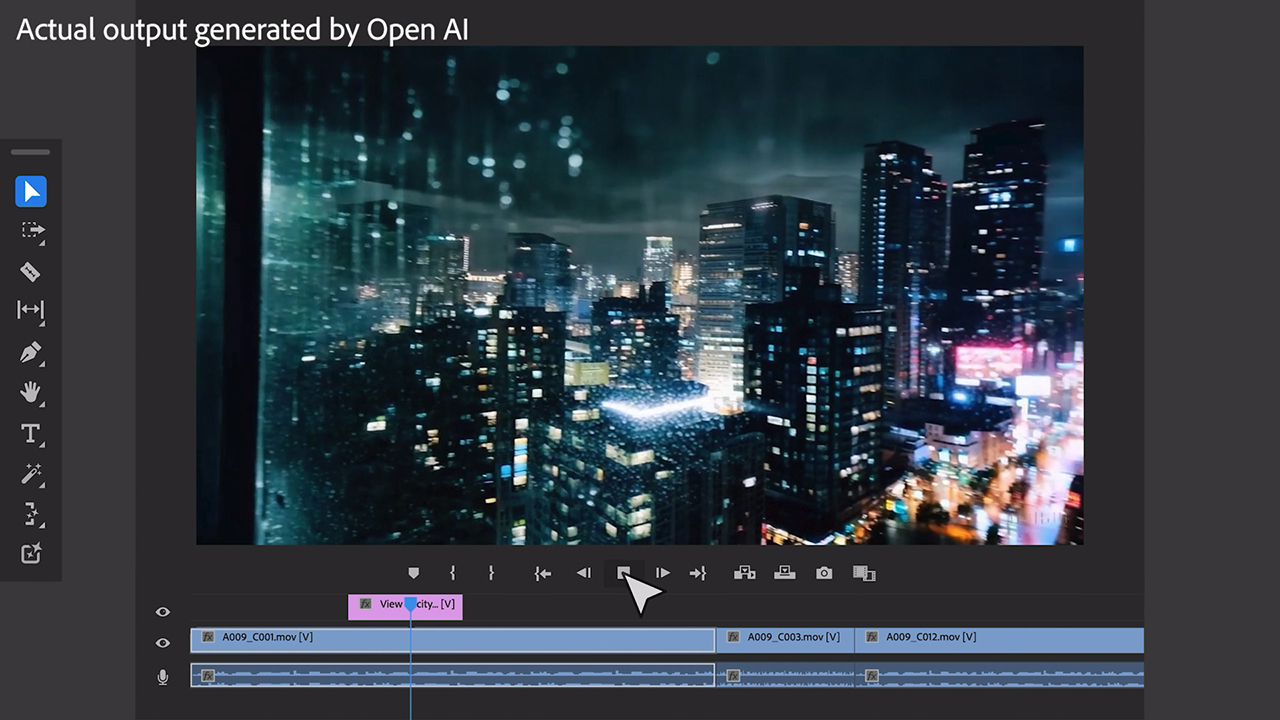

A particularly exciting part of Adobe’s announcement is that the company decided against competing in the video generator race. Instead, it previewed early explorations of bringing other well-known AI models directly into Premiere Pro. Among them are the biggest ones on the market: Runway, Pika, and, of course, OpenAI’s Sora.

While much of the early conversation about generative AI has focused on a competition among companies to produce the “best” AI model, Adobe sees a future that’s much more diverse.

A quote from Adobe’s press release

This way, users can choose between Adobe Firefly and other AI models directly in the editing software and pick the best result for their final cut. As we know, each video generator has its own strengths. So if you need a fully AI-generated shot for B-roll, Premiere Pro plans to offer that as well, across different models.

Content credentials for generative AI in Adobe Premiere Pro

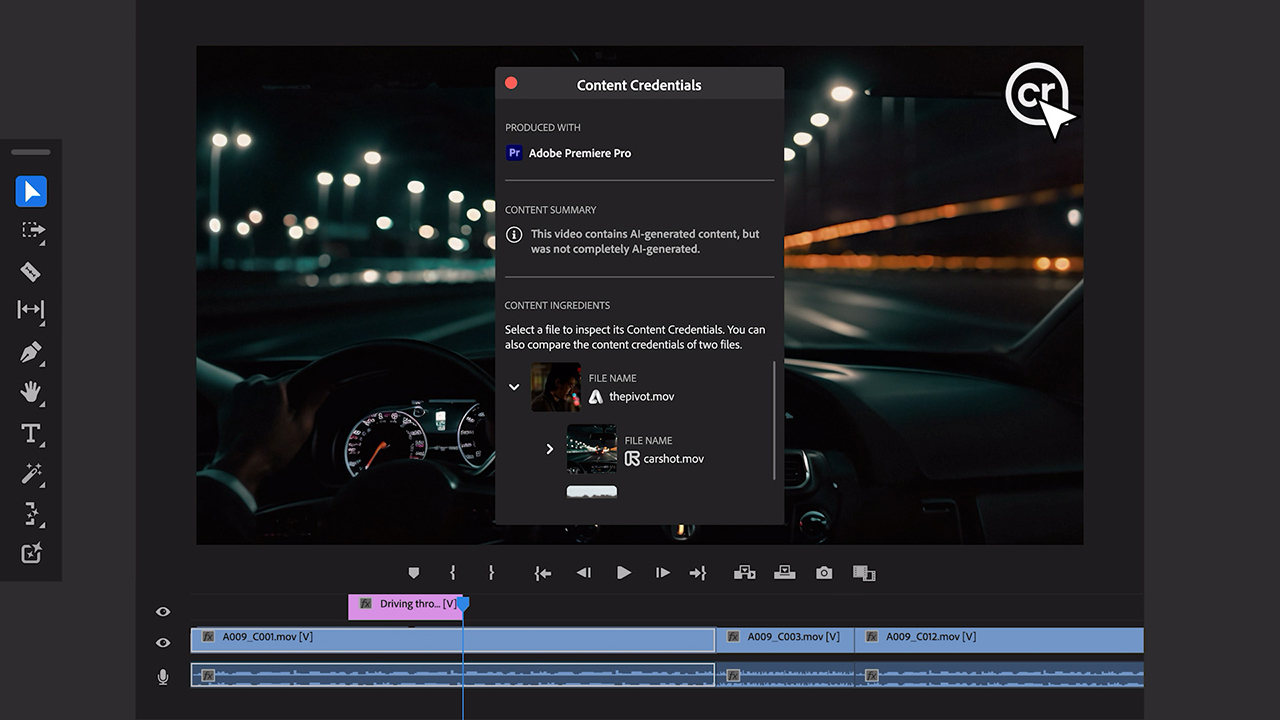

Of all the companies working on generative AI products, Adobe stands out for its ethical and transparent approach. You have probably already heard about “content credentials”, which the company developed to mark AI-generated footage. According to the demo preview, this tool is also coming to Premiere Pro. It will allow creators to label when AI was used and which model was applied in each shot, so that the audience can understand how the footage was made.

AI-powered audio workflows in Premiere Pro

In addition to upcoming generative AI video tools, Adobe announced new audio workflows in Premiere Pro. The latest features include:

- Interactive fade handles for simple custom audio transitions;

- New Essential Sound badge with audio category tagging by AI (automatically tagging audio clips as dialogue, music, sound effects, or ambiance, and adding a new icon);

- Redesigned waveforms in the timeline that resize as the track height changes on clips

…and more. These improvements will be generally available to Adobe Premiere Pro users as soon as May.

Conclusion

Adobe didn’t announce exactly when it plans to release the showcased video features. However, the developers mentioned that the new generative AI tools will arrive in Adobe Premiere Pro later this year. We’ll keep you updated, so stay tuned!

What do you think about the improvements and Adobe’s vision of integrating Firefly and other generative AI models directly into Premiere Pro? Please share your thoughts with us in the comments below.

Feature image source: Adobe