A Comprehensive Human-Robot Interaction Dataset

In an article recently published in the journal Scientific Data, researchers presented AFFECT-HRI, a new comprehensive dataset containing physiological data labeled with human affect, to support empathetic and responsible human-robot interaction (HRI) with human-like (anthropomorphic) service robots.

Background

During human-human interaction or HRI, a person's affective state is influenced by their communication partner, specifically by the information given, the partner's voice and gestures, or the spoken words. A human communication partner can perceive this affective state, which enables empathetic behavior.

Because of their human-like appearance, with hands, eyes, and faces, anthropomorphic service robots raise similar expectations of empathy. However, these robots cannot inherently respond empathically the way human counterparts do, because reliably recognizing a human's affective state, such as emotion, remains a challenge for robots. Existing technologies and emotion recognition methods have shown potential to address this challenge.

However, the datasets currently available for developing emotion recognition capabilities and methods are unsuitable for practical settings with robots, as they were derived from general human-machine interaction studies. Additionally, recent publications have indicated that a lack of open data further hinders the development of affective computing in HRI based on physiological data.

The AFFECT-HRI Dataset

In this work, researchers provided AFFECT-HRI to address existing issues such as the lack of open data. The dataset is the first to include physiological data labeled with human affect, such as mood and emotion, in an HRI setting, and it was gathered during a complex HRI study.

The AFFECT-HRI dataset follows a multi-method approach, combining subjective assessments of human affect with objective physiological sensor data. It also contains participants' demographics, affect ratings, and socio-technical questionnaire ratings, along with the robot's speech and gestures.

In the HRI study, 146 participants (60 male, 85 female, and one diverse) aged between 18 and 66 interacted with an anthropomorphic service robot in a complex and realistic retail scenario. Researchers chose physiological signals because they correlate with human affect.

Moreover, unlike voice or video data, physiological signals cannot easily be manipulated by humans. A realistic retail scenario was chosen as the experimental environment because service robots show significant application potential in such settings.

Previous studies have shown that designing responsible, human-centered HRI requires combining expertise from law, computer science, and psychology. The study therefore included five conditions (immoral, moral, liability, transparency, and neutral) covering the perspectives of these fields. The conditions elicited a range of affective reactions and enabled interdisciplinary investigations across psychology, law, and computer science.

Specifically, two of these conditions, immoral and moral, addressed the psychology research field. Each condition consisted of three scenes: a handover, a request for sensitive personal information, and a product consultation.
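The condition-and-scene structure described above can be sketched as a simple enumeration of condition-scene pairs. This is a minimal illustration only: the string identifiers below are hypothetical and are not the dataset's actual file, folder, or column names.

```python
from itertools import product

# Condition and scene labels as described in the article; the exact
# string identifiers are hypothetical, not AFFECT-HRI's own naming.
CONDITIONS = ["immoral", "moral", "liability", "transparency", "neutral"]
SCENES = ["handover", "personal_information_request", "product_consultation"]


def design_cells():
    """Return every (condition, scene) pair in the study design."""
    return list(product(CONDITIONS, SCENES))


if __name__ == "__main__":
    cells = design_cells()
    print(len(cells))  # 15
    for condition, scene in cells[:3]:
        print(condition, scene)
```

Enumerating the cells this way makes it easy to check that an analysis covers all five conditions and all three scenes.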

The Experiment Design

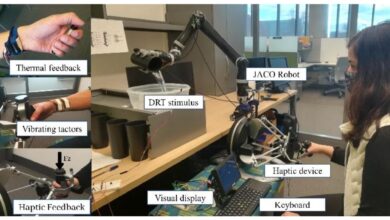

The empirical study design, centered on a complex HRI, followed a three-step approach: a preparation phase, an experimental manipulation phase, and a post-experimental phase. In the first step, the Empatica E4 wristband (E4) was placed on the wrist of the participant's non-dominant hand to reduce interference from arm movements.

Participants also completed a pre-questionnaire covering their current affective state and demographics. At the end of the preparation phase, a first baseline measurement was taken to capture the participant's initial physiological state at rest.

In the experimental manipulation phase, each participant was first guided to the experimental area and shown the robot before the experiment began, to reduce the robot's impact as an emotional trigger during the actual experiment. Participants were then asked to interact with the robot within a described scenario and complete a shopping list.

The participant then conversed with the robot, and the three scenes were performed without interruption. In the post-experimental phase, the participant was guided to a quiet, separate room to answer the post-questionnaire. A second baseline measurement was then performed, and the E4 wristband was removed. Data were thus collected through the two questionnaires and the physiological sensors of the E4 wristband.
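The resting baseline measurements described above are the kind of reference a downstream analysis might use to normalize each participant's signals. As a minimal sketch, assuming a single physiological channel stored as a plain list of samples, one common approach is to subtract the mean of a resting baseline from the interaction recording. The function name and data layout are hypothetical, and this is not presented as the authors' own pre-processing.

```python
from statistics import mean


def baseline_correct(signal, baseline):
    """Subtract the resting-baseline mean from every sample of a signal.

    Hypothetical helper, not part of the AFFECT-HRI release.
    signal   -- samples recorded during the interaction
    baseline -- samples recorded during a resting baseline measurement
    """
    if not baseline:
        raise ValueError("baseline must contain at least one sample")
    reference = mean(baseline)
    return [x - reference for x in signal]


if __name__ == "__main__":
    # Illustrative values only, not data from the dataset.
    rest = [0.40, 0.42, 0.38]
    task = [0.55, 0.60, 0.47]
    print(baseline_correct(task, rest))
```

Subtracting a per-participant resting reference is one simple way to make signals more comparable across people whose resting levels differ; with two baselines available, an analysis could also compare them to check for drift over the session.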

Significance of the Work

The transparency condition led to a more positive and relaxed affect, while the liability condition had a significant negative influence on users' affect compared with the neutral condition. The moral condition produced a more relaxed affect than both the neutral and immoral conditions.

The immoral condition, in turn, evoked stronger feelings of arousal and stress than the neutral and moral conditions. The study generated physiological sensor data, questionnaire data (with pre- and post-questionnaire responses combined during pre-processing), ground-truth data, and robot gesture and speech data.

In summary, AFFECT-HRI is the first publicly available dataset offering physiological data labeled with human affect in an HRI setting. It could be used to develop new emotion recognition methods and technological capabilities or to validate established ones.

Journal Reference

Heinisch, J. S., Kirchhoff, J., Busch, P., Wendt, J., Von Stryk, O., David, K. (2024). Physiological data for affective computing in HRI with anthropomorphic service robots: The AFFECT-HRI data set. Scientific Data, 11(1), 1-22. https://doi.org/10.1038/s41597-024-03128-z, https://www.nature.com/articles/s41597-024-03128-z