A.I. Romance, Robot Rendezvous – Pagosa Daily Post News Events & Video for Pagosa Springs Colorado

If I know anything about romance — and that’s certainly a valid question — it’s that communication is important. Also, there are better and worse ways to communicate. Ask me how I know.

Attitude is important. Choice of words. Are you crossing your arms? Rolling your eyes? Raising your eyebrows? Giving a wink?

Communication is a complex process, for humans. And also, for computers running Generative AI models. Of course, computers don’t have eyebrows to raise. But they seem to have opinions about how to communicate.

As we learn more and more about Artificial Intelligence, it's beginning to appear that AI computers are better at communicating with other AI computers than humans are at communicating with AI computers. They can't see us winking, for one thing. But also, they understand words in their own special way, which maybe we don't.

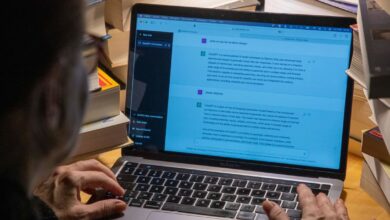

As many Daily Post readers are already aware, human communication with Generative AI typically begins with a "prompt."

For example:

> I want you to act as a travel guide. I will write you my location and you will suggest a place to visit near my location. In some cases, I will also give you the type of places I will visit. You will also suggest places of similar type that are close to my first location. My first suggestion request is "I am in Istanbul and I want to visit only museums."

It might seem weird to tell a computer to act as a travel guide, but it turns out that Generative AI computers give more accurate responses if you ask them to think and act like a human being. Depending on what kind of information you want, you might ask the computer to act as an English teacher, the President of the United States, a former President of the United States, or the commander of a Starfleet starship.

Obviously, some of these suggested ‘characters’ will generate highly inaccurate results.

Don't laugh. A couple of researchers from software firm VMware in California were looking for the best way to get accurate AI answers to math questions. Apparently, Generative AI is better at telling stories than at solving math problems. It's especially bad at algebra and geometry. (Just like me!) So the researchers let Generative AI itself write hundreds of prompts for math problems and then tried to figure out why some prompts produced more accurate answers than others.

They have no idea why telling their AI computer to act as if it were a Star Trek commander produced the most accurate math answers. But it did.

One of their conclusions from the study had little to do with Star Trek: AI computers are better at communicating with AI computers than humans are. If you want a good prompt, let AI write the prompt.

Actually, this makes perfect sense. Humans, even the engineers and programmers who build the Large Language Models behind ChatGPT and Google Gemini, don't fully understand how an AI computer comes to its conclusions. (Which is not too surprising, considering that we don't understand how humans come to their conclusions, either, and we've had a lot more time to figure that one out.)

All of which got me wondering. If someone told me to act as if I were George Carlin when writing my humor columns, would I actually write better columns?

I do hope someone will prompt me to act that way, so I can find out.