Advanced AI Model Elevates Road Pattern Recognition

In a recent article published in the journal Applied Sciences, researchers from China conducted a comprehensive study on accurately recognizing pavement patterns using advanced deep-learning algorithms. Their goal was to tackle the critical challenge of enabling vision-guided robots to precisely identify and navigate through diverse road conditions, which is essential for the safe and effective operation of these robots in outdoor environments.

Background

Vision-guided robots use cameras and other vision sensors to perceive their surroundings and perform tasks accordingly. One of their main applications is visual navigation, which involves recognizing road patterns and following them to reach a destination. Road patterns include features or markings on the road surface that indicate the direction, shape, or boundaries of the road, such as lane lines, crosswalks, arrows, curves, and intersections.

Road pattern recognition is a challenging task for vision-guided robots due to the complexity and dynamic nature of real-world scenarios. Road patterns can vary in shape, size, color, and orientation, and they can be affected by factors such as illumination, occlusion, and noise. Additionally, road patterns may not always be visible or distinguishable from the background, especially in rural or off-road areas. Therefore, accurate and robust road pattern recognition is crucial for the safety and efficiency of vision-guided robots.

About the Research

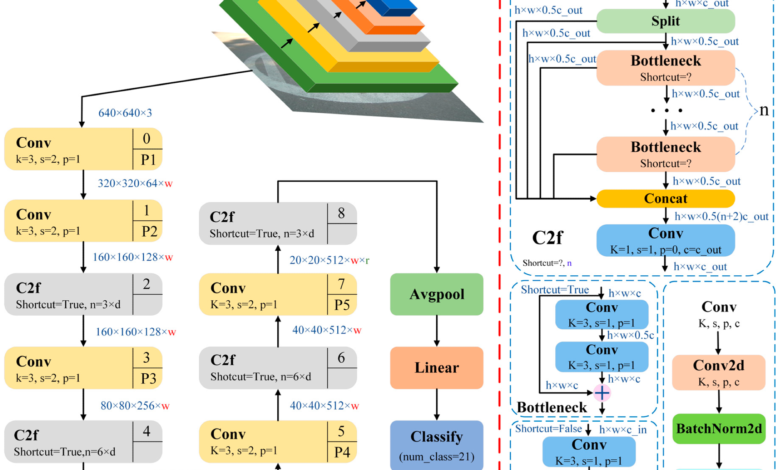

In this paper, the authors aimed to improve road pattern recognition for vision-guided robots using an enhanced version of YOLOv8n, the smallest ("nano") variant of the You Only Look Once version 8 (YOLOv8) model. YOLOv8 is a state-of-the-art deep learning model for real-time object detection, capable of identifying multiple objects of different classes in a single image. The researchers modified YOLOv8n to make it better suited to road pattern recognition.

The experimental system captured images of 21 different road patterns across various conditions, including urban roads, rural roads, highways, and off-road areas. These images were labeled with 21 classes of road patterns, such as straight lines, curves, intersections, crosswalks, and arrows. As a baseline, the authors constructed a road pattern recognition model using the residual neural network with 18 layers (ResNet18), a convolutional neural network (CNN) that learns features from images and classifies them.

The improved YOLOv8n model consisted of three main components: the backbone network for extracting features, the neck network for fusing features from different levels, and the head network for predicting the bounding boxes and class labels of road patterns. Three techniques were employed to optimize the model: the C2f-ODConv module, which combines YOLOv8's C2f block with omni-dimensional dynamic convolution (ODConv); the adaptive weight downsampling (AWD) module; and the efficient multi-scale attention (EMA) mechanism.

The C2f-ODConv module was used to enhance the feature extraction ability of the backbone network by replacing fixed convolution kernels with dynamic ones whose weights adapt to the input. The AWD module was employed to reduce the computational cost of the neck network through adaptive weighting during downsampling. The EMA attention mechanism was used to improve feature fusion in the neck network by reweighting features across channels and spatial positions at multiple scales.
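
To give a rough feel for what "dynamic convolution" means, the following is a minimal one-dimensional sketch in plain Python. It is not the paper's C2f-ODConv module: the function names, the single pooled statistic, and the `gate_weights` parameters are all assumptions made for illustration. The only idea it tries to show is that the kernel applied to an input is a weighted mixture of several candidate kernels, with the mixing weights computed from the input itself.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_conv1d(signal, kernels, gate_weights):
    """Convolve `signal` with an input-dependent mixture of `kernels`.

    signal       -- list of floats
    kernels      -- list of K candidate kernels, all of the same length
    gate_weights -- list of K floats (hypothetical gating parameters)
    """
    # Global average pooling summarizes the whole input as one number.
    pooled = sum(signal) / len(signal)
    # One score per candidate kernel; softmax gives the mixing weights.
    attention = softmax([w * pooled for w in gate_weights])
    # Blend the candidate kernels into a single kernel for this input.
    size = len(kernels[0])
    mixed = [sum(a * k[i] for a, k in zip(attention, kernels))
             for i in range(size)]
    # Sliding-window product without padding (cross-correlation,
    # as convolution layers are usually implemented).
    out = [sum(mixed[j] * signal[i + j] for j in range(size))
           for i in range(len(signal) - size + 1)]
    return out, attention


if __name__ == "__main__":
    smoothing = [1 / 3, 1 / 3, 1 / 3]
    edge = [-1.0, 0.0, 1.0]
    out, attention = dynamic_conv1d(
        [0.0, 1.0, 2.0, 3.0, 4.0], [smoothing, edge], gate_weights=[1.0, -1.0]
    )
    print("mixing weights:", attention)
    print("output:", out)
```

In a real network the gating parameters would be learned, and ODConv as published computes attention along several kernel dimensions rather than only across candidate kernels, so this sketch covers just the simplest case.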

The authors then evaluated the improved YOLOv8n model on the road pattern image dataset and compared it with the ResNet18 and standard YOLOv8 models. The evaluation metrics included mean average precision (mAP), average intersection over union (IoU), average recall, and average precision. mAP summarizes detection and classification accuracy by averaging the per-class average precision over all road pattern classes, while average IoU gauges the overlap between the predicted and ground-truth bounding boxes. Average recall quantifies the proportion of ground-truth road patterns that the model detected, and average precision represents the proportion of the model’s detections that were correct.
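
The metrics described above can be sketched in a few lines of plain Python. This is a generic illustration of the standard definitions, not the authors' evaluation code: the function names, the `(x_min, y_min, x_max, y_max)` box format, and the example numbers are assumptions chosen for the sketch.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as
    (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap; zero if the boxes do not touch.
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(true_pos, false_pos, false_neg):
    """Precision: share of detections that are correct.
    Recall: share of ground-truth objects that were detected."""
    detected = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


def mean_average_precision(ap_per_class):
    """mAP: the mean of the per-class average precision values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


if __name__ == "__main__":
    # Made-up numbers, purely for illustration.
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(precision_recall(true_pos=8, false_pos=2, false_neg=1))
    print(mean_average_precision({"crosswalk": 0.9, "arrow": 0.8}))
```

A detection is usually counted as a true positive only when its IoU with a ground-truth box of the same class exceeds a chosen threshold, which is how the overlap measure feeds into precision, recall, and mAP.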

Research Findings

The enhanced YOLOv8n model achieved an mAP of over 93%, an average IoU of over 87%, an average recall of over 95%, and an average precision of over 94%. These results surpassed those of the ResNet18 and standard YOLOv8 models, indicating that the improved YOLOv8n model recognizes road patterns more accurately.

Additionally, the researchers conducted qualitative experiments to showcase the effectiveness of the improved YOLOv8n model in various road scenarios, including urban and rural roads, highways, and off-road areas. The model consistently and accurately recognized a wide range of road patterns, even in complex and challenging situations.

This study has significant implications for the autonomous road state recognition capabilities of wheeled robots. Accurate road pattern recognition underpins tasks such as vehicle control and path planning, which are crucial for the safety and efficiency of vision-guided robots. With the improved YOLOv8n model, providing reliable, real-time road pattern information to these robots becomes feasible, enabling them to navigate autonomously across diverse environments.

Moreover, the model’s versatility extends beyond road pattern recognition, offering potential applications in other domains requiring object detection. These include face recognition, traffic sign recognition, and medical image analysis. Thus, the impact of this research reaches far beyond robotics, promising advancements in various fields reliant on accurate object detection.

Conclusion

In summary, the improved YOLOv8n model outperformed both a classical CNN (ResNet18) and the standard YOLOv8 model in road pattern recognition. It proved robust and effective across various road scenarios, including urban roads, rural roads, highways, and off-road areas, showing its potential to enhance the autonomous recognition capability of wheeled robots in diverse environments.

Moving forward, the researchers suggested directions for future work, including enhancing the improved YOLOv8n model’s speed and efficiency, expanding the road pattern image dataset to cover more diverse and complex road conditions, and applying the model to other types of vision-guided robots, such as aerial and underwater robots.

Journal Reference

Zhang, X.; Yang, Y. Research on Road Pattern Recognition of a Vision-Guided Robot Based on Improved-YOLOv8. Appl. Sci. 2024, 14, 4424. https://doi.org/10.3390/app14114424, https://www.mdpi.com/2076-3417/14/11/4424.