AI Tool “SUBTLE” Deciphers Complex Animal Movement Patterns

Researchers from two centers of the Institute for Basic Science (IBS) and from KAIST have developed a new analytical tool called Spectrogram-UMAP-Based Temporal-Link Embedding (SUBTLE), which uses artificial intelligence to classify and analyze animal behavior from 3D movement data. The study was published in the computer vision journal International Journal of Computer Vision (IJCV).

Animal behavior analysis is an essential tool in many fields, from fundamental neuroscience to the diagnosis and treatment of disease, and it is widely used in industry and research areas such as robotics and biology. AI has recently been applied to analyze animal behavior more accurately. However, unlike human observers, AI still cannot intuitively distinguish between different behaviors.

Traditional animal behavior research relied mainly on filming animals with a single camera and analyzing low-dimensional data, such as the timing and frequency of particular movements. Much like giving an AI both the questions and their answers, this approach required pairing every piece of training data with its matching result.

Although simple, this method requires considerable time and labor-intensive human supervision to build the training data. It is also vulnerable to observer bias: the experimenter's subjective judgment can distort the analysis results.

To overcome these limitations, Meeyoung Cha, Chief Investigator (CI) of the Data Science Group at the IBS Center for Mathematical and Computational Sciences and a professor in the School of Computing at KAIST, collaborated with Director C. Justin Lee of the IBS Center for Cognition and Sociality to develop the new tool.

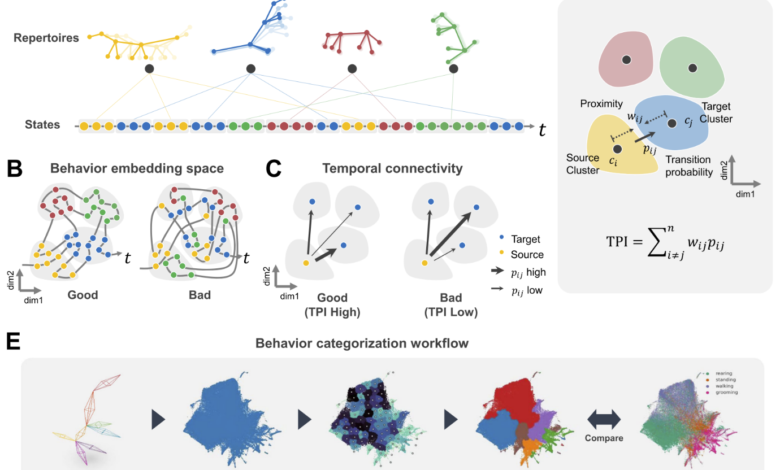

The research team first recorded mice with multiple cameras to capture 3D skeletal movement data over time, extracting the coordinates of nine key points, including the head, legs, and hips. To embed the data, that is, to represent each data point as a vector, they then reduced the time-series data to two dimensions, which makes complex data easier to represent and interpret.
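The article describes this step only at a high level, so the following is a minimal sketch under stated assumptions, not the authors' implementation. It flattens the nine 3D keypoints of each frame into 27 coordinate time series, computes short-window frequency spectra of each series (echoing the "Spectrogram" in SUBTLE's name), and projects each window's feature vector to two dimensions. The window width, the plain DFT, and all function names are hypothetical. Because UMAP is not in the Python standard library, the sketch swaps in PCA (computed by power iteration) as a linear stand-in for the nonlinear UMAP step.

```python
import cmath
import math
import random

NUM_KEYPOINTS = 9  # head, legs, hips, ... (count taken from the article)


def flatten_frame(frame):
    """Flatten one frame of (x, y, z) keypoints into a single coordinate list."""
    return [coord for point in frame for coord in point]


def window_spectrum(signal, start, width):
    """DFT magnitudes of signal[start:start + width] for the non-negative frequencies."""
    seg = signal[start:start + width]
    return [
        abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / width) for n in range(width)))
        for k in range(width // 2 + 1)
    ]


def spectrogram_features(frames, width=8):
    """One feature vector per sliding window: spectra of every coordinate series, concatenated.

    The window width of 8 frames is a hypothetical choice.
    """
    series = list(zip(*(flatten_frame(f) for f in frames)))  # one time series per coordinate
    features = []
    for start in range(len(frames) - width + 1):
        vec = []
        for s in series:
            vec.extend(window_spectrum(s, start, width))
        features.append(vec)
    return features


def pca_2d(vectors, iters=100, seed=0):
    """Project vectors onto their top two principal components via power iteration.

    Linear stand-in for UMAP (assumption): UMAP itself is nonlinear.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    x = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    rng = random.Random(seed)
    comps = []
    for _ in range(2):
        w = [rng.gauss(0.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            # w <- X^T X w, computed without forming the d x d covariance matrix
            scores = [sum(a * b for a, b in zip(row, w)) for row in x]
            w = [sum(scores[i] * x[i][j] for i in range(n)) for j in range(d)]
            # Gram-Schmidt: keep w orthogonal to the components already found
            for c in comps:
                p = sum(a * b for a, b in zip(w, c))
                w = [a - p * b for a, b in zip(w, c)]
            norm = math.sqrt(sum(a * a for a in w))
            if norm < 1e-12:  # no variance left in this direction
                w = [0.0] * d
                break
            w = [a / norm for a in w]
        comps.append(w)
    return [[sum(a * b for a, b in zip(row, c)) for c in comps] for row in x]


if __name__ == "__main__":
    # Synthetic 40-frame recording: each keypoint oscillates at its own frequency.
    frames = [
        [(math.sin(0.3 * t * (k + 1)), math.cos(0.2 * t), 0.1 * k) for k in range(NUM_KEYPOINTS)]
        for t in range(40)
    ]
    embedding = pca_2d(spectrogram_features(frames))
    print(len(embedding), len(embedding[0]))  # 33 windows, 2 coordinates each
```

Each output row is one sliding window of movement placed in a 2D map; a nonlinear method such as UMAP would typically separate distinct movement patterns more cleanly than PCA does.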

The researchers then grouped similar behavioral states into subclusters, which were in turn organized into superclusters representing standardized behavior patterns, or repertoires, such as standing, walking, and grooming. To evaluate these clusters, they developed a new metric, the Temporal Proximity Index (TPI), which measures whether each cluster contains a consistent behavioral state and accurately captures movement over time. This mirrors the way humans rely on temporal information when categorizing behavior.
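The article names neither the clustering algorithms nor the exact TPI formula, so the following is only a rough, hypothetical illustration of the two-level idea. It groups 2D embedded time points into subclusters with k-means (a swapped-in technique), merges subclusters into superclusters by single-linkage agglomeration of their centroids (also an assumption), and scores a labeling with a simple temporal-coherence ratio: the fraction of consecutive time steps that keep the same label. That ratio is a made-up stand-in meant to show why temporal information matters when judging clusters; it is not the published TPI.

```python
import math


def kmeans(points, k, iters=50):
    """Plain k-means with deterministic farthest-point initialisation (hypothetical choice)."""
    points = [tuple(p) for p in points]
    centers = [points[0]]
    while len(centers) < k:
        # next center: the point farthest from every center chosen so far
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))
    labels = []
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        new = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            new.append(tuple(sum(v) / len(members) for v in zip(*members)) if members else centers[c])
        if new == centers:  # assignments are stable
            break
        centers = new
    return labels, centers


def merge_subclusters(centers, n_super):
    """Single-linkage merging of subcluster centroids; returns {subcluster: supercluster}."""
    groups = [[i] for i in range(len(centers))]
    while len(groups) > n_super:
        best = None
        for a in range(len(groups)):
            for b in range(a + 1, len(groups)):
                d = min(math.dist(centers[i], centers[j]) for i in groups[a] for j in groups[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        groups[a].extend(groups.pop(b))  # b > a, so index a stays valid
    return {sub: sup for sup, members in enumerate(groups) for sub in members}


def temporal_coherence(labels):
    """Hypothetical temporal check (NOT the paper's TPI): share of consecutive
    time steps that remain in the same cluster."""
    if len(labels) < 2:
        return 1.0
    same = sum(1 for a, b in zip(labels, labels[1:]) if a == b)
    return same / (len(labels) - 1)


if __name__ == "__main__":
    # Hypothetical 2D embedding: two nearby states, then one distant state, in time order.
    pts = ([(0.01 * i, 0.0) for i in range(5)]
           + [(1.0 + 0.01 * i, 0.0) for i in range(5)]
           + [(10.0, 10.0 + 0.01 * i) for i in range(5)])
    sub, centers = kmeans(pts, k=3)
    sup_of = merge_subclusters(centers, n_super=2)
    sup = [sup_of[s] for s in sub]
    print(temporal_coherence(sub), temporal_coherence(sup))
```

In this toy run the two nearby subclusters merge into one supercluster, so the supercluster labeling changes less often over time than the subcluster labeling and scores higher on the coherence ratio.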

CI Meeyoung Cha said, “The introduction of new evaluation metrics and benchmark data to aid in the automation of animal behavior classification is a result of the collaboration between neuroscience and data science. We expect this algorithm to be beneficial in various industries requiring behavior pattern recognition, including the robotics industry, which aims to mimic animal movements.”

We have developed an effective behavior analysis framework that minimizes human intervention while understanding complex animal behaviors by applying human behavior pattern recognition mechanisms. This framework has significant industrial applications and can also be used as a tool to gain deeper insights into the principles of behavior recognition in the brain.

C. Justin Lee, Study Lead and Director, Center for Cognition and Sociality

In April of last year, the research team gave Actnova, a company specializing in AI-based analysis of clinical and non-clinical behavioral tests, access to the SUBTLE technology. For this study, the team used Actnova’s AVATAR3D animal behavior analysis system to collect the 3D movement data.

The research team has made the SUBTLE code open source. The SUBTLE web service (http://subtle.ibs.re.kr/) also provides an intuitive graphical user interface (GUI), so researchers without programming experience can use it for animal behavior analysis.

Journal Reference:

Kwon, J., et al. (2024). SUBTLE: An Unsupervised Platform with Temporal Link Embedding that Maps Animal Behavior. International Journal of Computer Vision. doi.org/10.1007/s11263-024-02072-0.

Source: https://www.ibs.re.kr/eng.do