Amazon Robotics achieves worldwide scale and improves engineering efficiency by 35% with Amazon DynamoDB

Amazon Robotics (AR) designs advanced robotic solutions so the Amazon fulfillment network can meet delivery promises for millions of customers every day. AR builds critical software that controls over half a million mobile robots at hundreds of Amazon sites spanning North America, Europe, Asia, and Australia. With a focus on engineering efficiency, the AR Movement Sciences and Scheduling (MOSS) team turned to Amazon DynamoDB to store millions of real-time work requests that orchestrate mobile robot motion.

In this post, we discuss how the MOSS team migrated from a self-managed database to DynamoDB, a fully managed, multi-Region, multi-active NoSQL database that delivers single-digit-millisecond performance at any scale. This change improved the team’s operational and engineering efficiency by 35%, allowing their engineers to focus on innovating for customers instead of provisioning, patching, and managing servers.

Before DynamoDB: High operational load

Prior to migrating to DynamoDB, MOSS software engineers spent roughly a third of their time managing, tuning, and troubleshooting database clusters, deployed across more than 2,000 Amazon Elastic Compute Cloud (Amazon EC2) instances. These tasks included the following:

- Adjusting capacity to keep up with ongoing growth, seasonal changes, and increasing business demands of Amazon’s global fulfillment network

- Monitoring resource utilization and manually replacing hosts to maintain cluster health

- Implementing and testing data backup and restore procedures

- Updating the underlying operating system of their EC2 instances as well as software application dependencies with the latest security patches and OS versions

- Planning and testing database version upgrades and other maintenance-related tasks

Why choose a managed service like DynamoDB?

The MOSS team sought several crucial benefits by moving from a self-managed database solution to an AWS-managed service like DynamoDB:

- Simplified infrastructure management – AWS handles all database infrastructure and maintenance tasks, including provisioning and patching

- Effortless scaling – You can scale globally with high availability, durability, and fault tolerance, eliminating the need for manual capacity planning and adjustments

- Secure data storage and backup – You can use fine-grained access control through AWS Identity and Access Management (IAM) policies, along with encryption at rest, data backups, and point-in-time recovery (PITR)

DynamoDB benefits: Adaptive scaling and transparent partition splitting

DynamoDB is designed for global reach and allows you to effortlessly scale your mission-critical workloads. As an AWS-managed solution, it adjusts to changing read and write traffic automatically (on-demand tables accommodate traffic as it arrives, and provisioned tables can use auto scaling), so you don't need to make infrastructure or provisioning decisions as your workloads change or traffic patterns fluctuate.

So how does DynamoDB help you scale? First, it supports adaptive capacity. This means it will allocate capacity from other partitions in the event a partition becomes hot (takes on a higher volume of read and write traffic compared to other partitions). DynamoDB also supports partition splitting for heat, meaning the service will split a hot partition into two and will continue to do so until traffic is served without throttling. Partition splitting, just like adaptive capacity allocation, happens transparently, with no action or steps required by you.
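To make splitting for heat concrete, here is a toy Python model. It is not DynamoDB's implementation, which is internal and not public. The model assumes that a partition owns a contiguous run of item keys and counts as hot when its combined write rate exceeds the documented per-partition ceiling of 1,000 write capacity units (WCU) per second. Adaptive capacity is not modeled, and the key names and traffic numbers are hypothetical.

```python
from dataclasses import dataclass

# Documented per-partition ceiling for writes (the read ceiling is 3,000 RCU).
PARTITION_WCU_LIMIT = 1_000


@dataclass
class Partition:
    keys: list[str]  # item keys owned by this partition, in key order
    load: dict[str, int]  # hypothetical writes per second for each key

    def total(self) -> int:
        return sum(self.load[k] for k in self.keys)


def split_for_heat(p: Partition, limit: int = PARTITION_WCU_LIMIT) -> list[Partition]:
    """Toy model: halve a hot partition's key range, recursing until every
    piece is under the limit. A piece holding a single key cannot be split
    further, so one hot key stays hot no matter how many splits occur."""
    if p.total() <= limit or len(p.keys) == 1:
        return [p]
    mid = len(p.keys) // 2
    return (split_for_heat(Partition(p.keys[:mid], p.load), limit)
            + split_for_heat(Partition(p.keys[mid:], p.load), limit))


# Eight robots writing 400 WCU/s each (3,200 total) end up on four partitions.
keys = [f"robot-{i:03d}" for i in range(8)]
parts = split_for_heat(Partition(keys, {k: 400 for k in keys}))
print([p.total() for p in parts])  # [800, 800, 800, 800]
```

The single-key case shows a limit of this model: splitting spreads a hot key range, but it cannot spread a single hot key. A well-distributed partition key therefore still matters.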

Migration approach

To undertake this migration, the MOSS team only had to redesign the persistence layer of their service, which handles connections to the database. This approach allowed them to safely swap out their legacy database for DynamoDB without affecting any core service logic and gave them granular control during the migration to DynamoDB.

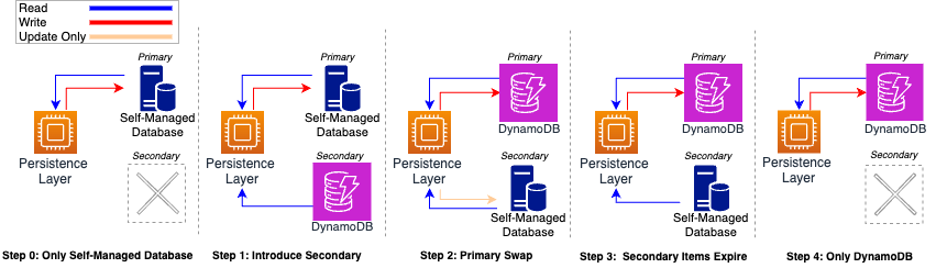

The database migration employed a primary-secondary strategy to ensure a smooth transition with zero downtime for clients. The procedure included the following steps:

- The team introduced a DynamoDB table, which became the secondary database, and ensured all hosts could connect to it.

- They promoted DynamoDB to the primary database, so that it handled all new item insertions. The legacy database, now secondary, handled only updates to items that had been inserted while it was still primary.

- Once DynamoDB was handling all updates, they removed the legacy database as an endpoint.
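Because only the persistence layer changed, the primary-secondary routing can sit behind a single interface. The following Python sketch is illustrative and is not the MOSS team's code. `WorkRequestStore`, `InMemoryStore`, and `MigratingStore` are hypothetical names, and the in-memory stores stand in for the legacy database and the DynamoDB table. New items always go to the primary, and updates go to whichever store already holds the item.

```python
from typing import Optional, Protocol


class WorkRequestStore(Protocol):
    """Hypothetical persistence-layer interface; core service logic sees only this."""

    def get(self, request_id: str) -> Optional[dict]: ...
    def put(self, request_id: str, item: dict) -> None: ...
    def update(self, request_id: str, changes: dict) -> None: ...


class InMemoryStore:
    """Stand-in for the legacy database or the DynamoDB table."""

    def __init__(self) -> None:
        self.items: dict[str, dict] = {}

    def get(self, request_id: str) -> Optional[dict]:
        return self.items.get(request_id)

    def put(self, request_id: str, item: dict) -> None:
        self.items[request_id] = dict(item)

    def update(self, request_id: str, changes: dict) -> None:
        self.items[request_id].update(changes)  # KeyError if the item is absent


class MigratingStore:
    """Routes calls between a primary and a secondary store during migration."""

    def __init__(self, primary: WorkRequestStore, secondary: WorkRequestStore) -> None:
        self.primary = primary
        self.secondary = secondary

    def get(self, request_id: str) -> Optional[dict]:
        item = self.primary.get(request_id)
        return item if item is not None else self.secondary.get(request_id)

    def put(self, request_id: str, item: dict) -> None:
        # All new insertions land on the primary.
        self.primary.put(request_id, item)

    def update(self, request_id: str, changes: dict) -> None:
        # Items inserted before the promotion finish their lifecycle on the secondary.
        if self.primary.get(request_id) is not None:
            self.primary.update(request_id, changes)
        else:
            self.secondary.update(request_id, changes)


# Stage 1 would be MigratingStore(primary=legacy, secondary=dynamo); stage 2 swaps
# the roles; stage 3 uses the DynamoDB-backed store directly.
legacy, dynamo = InMemoryStore(), InMemoryStore()
legacy.put("req-1", {"state": "queued"})  # created before the promotion
store = MigratingStore(primary=dynamo, secondary=legacy)  # stage 2
store.put("req-2", {"state": "queued"})
store.update("req-1", {"state": "done"})
store.update("req-2", {"state": "assigned"})
```

In this sketch, `req-1` finishes on the legacy store and `req-2` lives only in the DynamoDB stand-in. Once the secondary stops receiving updates, the router can be replaced by the primary store alone.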

The following diagram illustrates the different stages in this process.

Key learnings from testing

The MOSS team conducted extensive testing to confirm DynamoDB could meet their high-throughput and scaling needs. The following are some key learnings:

- Call strategy – To handle high concurrency and improve service reliability, the MOSS team uses parallel batch calls with synchronized retries. This approach consolidates failed items into fewer retry calls, significantly reducing the total number of server connections needed. Alternatively, non-consolidating strategies suit situations that call for fast retries, such as transient network errors or brief server outages.

- Scaling considerations – Both provisioned and on-demand DynamoDB tables can provide high throughput. In on-demand mode, DynamoDB instantly accommodates workloads as they ramp up or down to any previously reached traffic level. If you anticipate that your traffic will exceed twice its previous peak within a 30-minute period, you can pre-warm the table by setting it to provisioned mode at the anticipated traffic level and then switching it to on-demand mode later. This mitigates the risk of throttled requests.

- Partition sharding – To optimize query performance and minimize throttling, the team uses two sharding techniques: automatic and manual. For non-sequential partition keys (such as unordered data), they use deterministic, uniformly distributed hashes so that data spreads evenly across partitions and splits occur uniformly across them. For sequential partition keys (such as time series data), they use manual sharding to distribute traffic evenly across their partitions.
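Here is a sketch of the call strategy from the first item. It is an illustration under stated assumptions, not the MOSS implementation. `send_batch` is a hypothetical callable that writes one batch and returns the items that were not processed. With boto3, it might wrap `batch_write_item` and read `UnprocessedItems` from the response. Each round sends all batches in parallel and waits for every one to finish. It then consolidates the failures into as few new batches as possible before a jittered backoff.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence

MAX_BATCH = 25  # BatchWriteItem accepts at most 25 put or delete requests per call


def chunk(items: Sequence, size: int = MAX_BATCH) -> list[list]:
    return [list(items[i:i + size]) for i in range(0, len(items), size)]


def write_all(items: Sequence, send_batch: Callable[[list], list],
              max_attempts: int = 5, base_delay: float = 0.05, workers: int = 8) -> list:
    """Parallel batch writes with synchronized, consolidated retries.
    Returns whatever is still unprocessed after max_attempts rounds."""
    pending = list(items)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for attempt in range(max_attempts):
            if not pending:
                break
            # Fan out every batch, then wait for all of them (the synchronization point).
            results = list(pool.map(send_batch, chunk(pending)))
            # Consolidate failures from all batches so the next round re-packs them densely.
            pending = [item for failed in results for item in failed]
            if pending and attempt < max_attempts - 1:
                # Exponential backoff with full jitter before the next round.
                time.sleep(random.uniform(0, base_delay * 2 ** attempt))
    return pending


# Hypothetical fake writer: odd-numbered items fail on their first attempt only.
seen: set[int] = set()
calls: list[int] = []


def fake_send_batch(batch: list) -> list:
    calls.append(len(batch))  # records each call's batch size
    failed = [x for x in batch if x % 2 and x not in seen]
    seen.update(batch)
    return failed


leftover = write_all(range(60), fake_send_batch)
```

With 60 items, the first round makes three calls (25, 25, and 10 items), and 30 odd-numbered items fail. Consolidation retries those 30 in two calls (25 and 5) instead of one retry per original batch. A non-consolidating variant would instead retry each batch's failures as soon as that batch returns.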
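The pre-warming guidance in the second item comes down to a capacity estimate plus two `UpdateTable` calls. The following sketch assumes `client` is a boto3 DynamoDB client, for example `boto3.client("dynamodb")`. The helper names and the idea of sizing from expected request rates and item sizes are illustrative assumptions. The capacity-unit formulas follow the documented definitions. One WCU is one write per second of an item up to 1 KB. One RCU is one strongly consistent read per second, or two eventually consistent reads, of an item up to 4 KB.

```python
import math


def needs_prewarm(previous_peak: float, anticipated_peak: float) -> bool:
    # On-demand tables may throttle if traffic exceeds double the previous peak within 30 minutes.
    return anticipated_peak > 2 * previous_peak


def write_capacity_units(writes_per_sec: int, item_kb: float) -> int:
    # Each write consumes one WCU per started 1 KB of item size.
    return writes_per_sec * math.ceil(item_kb)


def read_capacity_units(reads_per_sec: int, item_kb: float, eventually_consistent: bool = True) -> int:
    # Each strongly consistent read consumes one RCU per started 4 KB; eventual reads cost half.
    units = reads_per_sec * math.ceil(item_kb / 4)
    return math.ceil(units / 2) if eventually_consistent else units


def prewarm(client, table_name: str, rcu: int, wcu: int) -> None:
    # Switch the table to provisioned mode at the anticipated peak.
    client.update_table(
        TableName=table_name,
        BillingMode="PROVISIONED",
        ProvisionedThroughput={"ReadCapacityUnits": rcu, "WriteCapacityUnits": wcu},
    )


def switch_to_on_demand(client, table_name: str) -> None:
    client.update_table(TableName=table_name, BillingMode="PAY_PER_REQUEST")


# Hypothetical load: 8,000 writes/s of 2.5 KB items, 12,000 eventually consistent reads/s of 6 KB items.
wcu = write_capacity_units(8_000, 2.5)  # 24,000
rcu = read_capacity_units(12_000, 6)  # 12,000
```

Wait for the table to return to `ACTIVE` between the two updates. Also check the current DynamoDB documentation for limits on how often a table can change billing modes and for your account's per-table throughput quotas.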
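The two sharding techniques in the third item might look like the following. Both functions are hypothetical sketches, not the MOSS key schema. The 8-character hash prefix, the hourly time bucket, and `NUM_SHARDS` are assumptions to tune against real traffic. The hashed key is deterministic, so the same natural key always maps to the same partition key. The time-series key spreads one hour's writes over several shards, at the cost of reading every shard to query that hour.

```python
import hashlib
from datetime import datetime

NUM_SHARDS = 10  # hypothetical; size so each shard stays under per-partition limits


def _stable_hash(value: str) -> int:
    # sha256 rather than the built-in hash(), which is salted per process for str.
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


def hashed_partition_key(natural_key: str) -> str:
    """Non-sequential data: prefix the natural key with a deterministic hash."""
    return f"{_stable_hash(natural_key) % 16**8:08x}#{natural_key}"


def time_series_partition_key(event_time: datetime, item_id: str, num_shards: int = NUM_SHARDS) -> str:
    """Sequential data: append a shard suffix so one time bucket spans many partitions."""
    bucket = event_time.strftime("%Y-%m-%dT%H")
    return f"{bucket}#{_stable_hash(item_id) % num_shards}"


def shard_keys_for_bucket(event_time: datetime, num_shards: int = NUM_SHARDS) -> list[str]:
    """Reads for one bucket must query every shard key and merge the results."""
    bucket = event_time.strftime("%Y-%m-%dT%H")
    return [f"{bucket}#{s}" for s in range(num_shards)]


# Usage: a write for request "req-123" at 14:30 lands on one of the ten "…T14#n" keys.
t = datetime(2024, 5, 1, 14, 30)
write_key = time_series_partition_key(t, "req-123")
read_keys = shard_keys_for_bucket(t)
```

The shard count trades write spread against read fan-out. More shards let each hour absorb more writes, and every query for that hour then needs more parallel requests.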

Conclusion

Amazon Robotics achieved a 35% efficiency lift by migrating a critical resource to DynamoDB, freeing up almost 9,000 hours annually for their engineers to focus on driving innovation for customers instead of managing backend infrastructure. Looking ahead, Amazon Robotics plans to modernize and migrate additional workloads to AWS-managed services and will continue improving the efficiency of their teams.

If you have any questions or suggestions about this post, we invite you to leave a comment.

About the Authors

Kratesh Ramrakhyani, SDE, Movement Sciences and Scheduling (MOSS)

Kratesh is a Software Development Engineer on the MOSS team. Kratesh has been with Amazon Robotics for 5 years now. He has effectively spearheaded numerous projects from start to finish, with the Amazon DynamoDB migration project as the most recent and one of the most impactful ones. Kratesh owns and supports numerous critical services on the MOSS team, ensuring their seamless operation and continuous improvement.

Daniel Martin, SDE, Movement Sciences and Scheduling (MOSS)

Daniel is a Software Development Engineer responsible for managing the progression of requests and activities on mobile robotic floors. He is familiar with database migration to Amazon DynamoDB, contributing to more efficient system operations.

Giovanni Scialdone, SDM, Movement Sciences and Scheduling (MOSS)

Giovanni is the Software Development Manager of the MOSS team. His team is responsible for the central service for sequencing all requests and activities that orchestrate mobile robot movement to fulfill customer orders.

Karen Roberts, SDM, Robotic Movement

Karen is a Software Development Manager in the Robotic Movement team in Amazon Robotics. Her teams are responsible for mobile robot orchestration and management.

Jon Olmstead, Principal Customer Solutions Manager (CSM)

Jon partners with engineering teams at Amazon to help them realize value through AWS Cloud adoption and modernization. He lives on Bainbridge Island, WA, and enjoys spending quality time with family and taking in long trail runs on the island.