“Apple Intelligence” brings long-discussed AI features to Apple devices

In brief: Apple took great pains to avoid saying “AI” during its 2024 WWDC presentation. Still, the new features grouped under “Apple Intelligence” bring generative AI to recent Macs, iPads, and iPhones. The upcoming major OS releases will include image generation, text proofing, and context-aware organization.

Following months of teasing, Apple finally pulled back the curtain on its plans for generative AI at WWDC this week. Starting this fall, the iPhone 15 Pro and all Apple devices with M-series processors will gain beta access to the Cupertino giant’s answer to ChatGPT and Copilot.

Arriving with iOS 18, iPadOS 18, and macOS Sequoia, Apple Intelligence introduces AI that can rewrite text, create images, and organize information across multiple apps.

Siri will also become a generative AI chatbot and assistant similar to those from OpenAI and Microsoft.

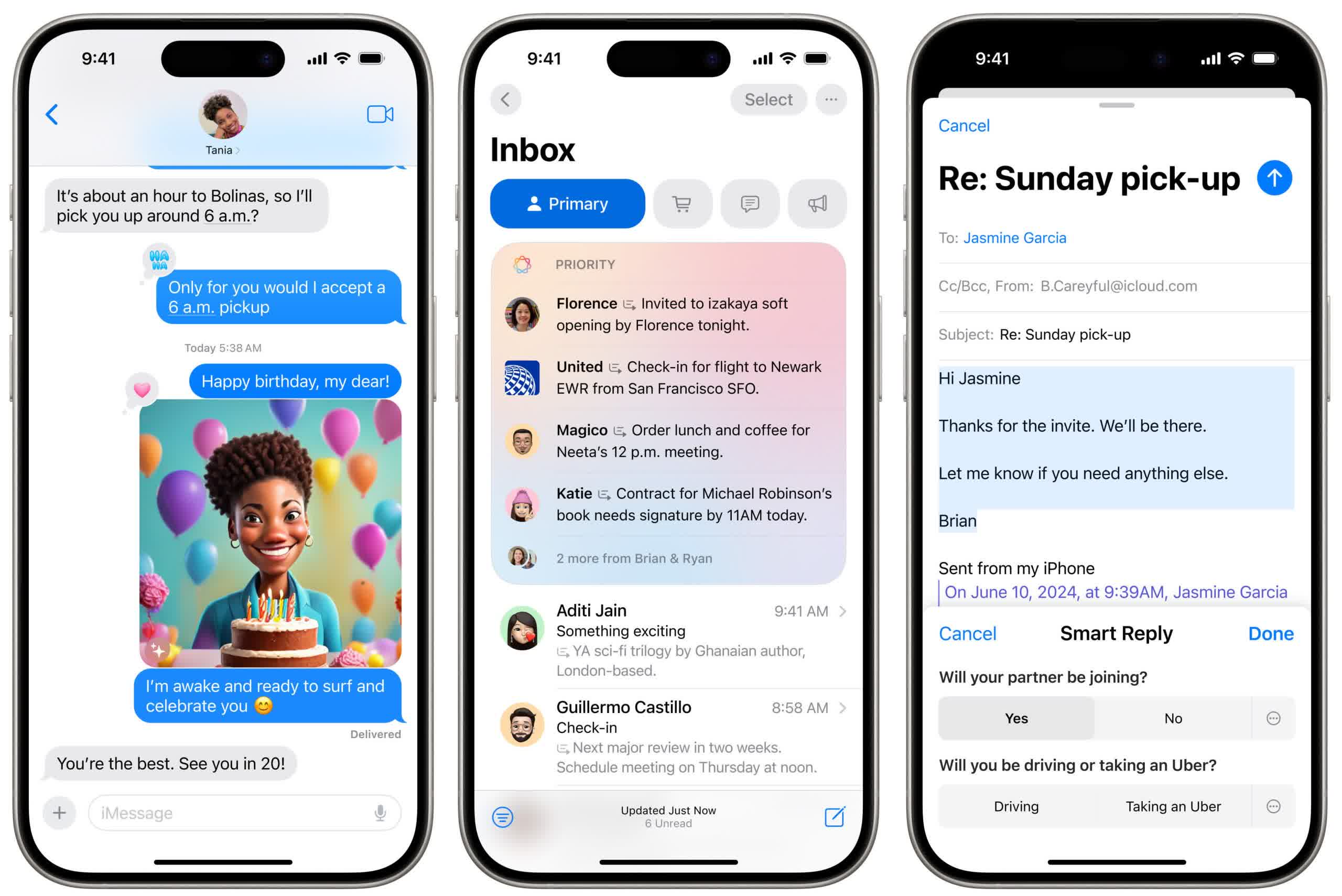

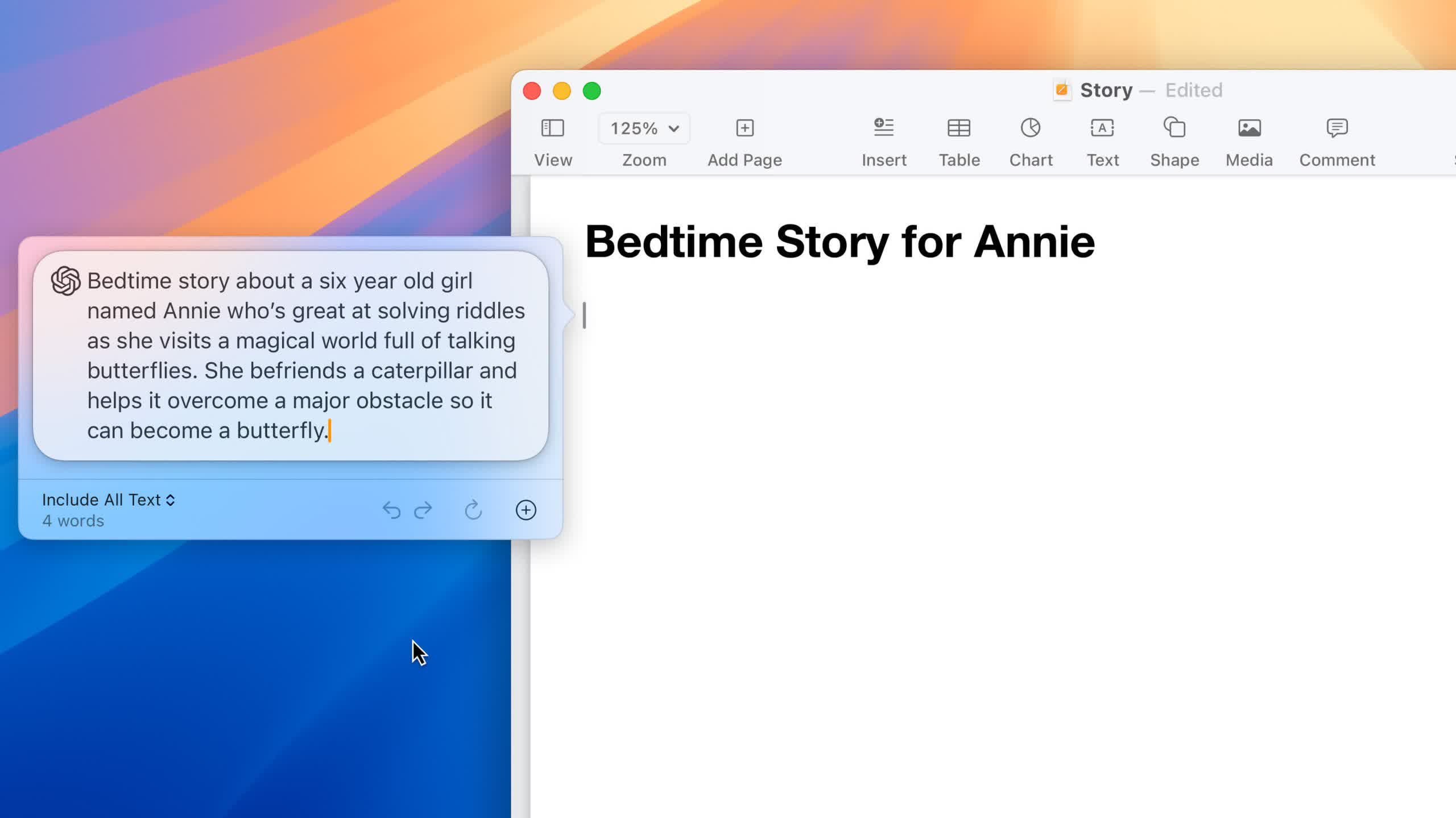

The new text editing features, called Writing Tools and including a Rewrite option, offer Grammarly-like functionality across most native apps in each device’s operating system. Users can proofread, summarize, and rephrase text written in Mail, Pages, Messages, or other apps. Tone options are granular and include professional, informal, concise, and poetic styles.

Additionally, Apple’s generative model automatically organizes emails and notifications by priority and category. Previews now show a summary of the entire message instead of just its first few words.

Users can ask Apple Intelligence to create custom emojis as well as images for presentations and text conversations.

The model can transform basic sketches into detailed pictures and generate images from scratch based on the contents of a piece of writing. New photo editing tools let users remove specific objects without affecting the rest of the composition.

Siri can now understand user queries based on context and the information it reads from various apps. For example, it can retrieve a file, photo, or other information based on a vague description of its contents or who sent it.

Furthermore, Siri can plan trips or other activities by combining information from Messages, Apple Maps, or other apps. The assistant can also quickly change device settings or walk users through how to perform a specific task. As with ChatGPT or Copilot, users can also type to Siri instead of speaking.

For some requests, Siri will ask permission to hand the query off to ChatGPT, effectively embedding ChatGPT functionality across Apple’s systems. This does not require a ChatGPT account, but OpenAI subscribers can link their accounts to take advantage of paid features. Powered by GPT-4o, ChatGPT can also compose text and generate images within various apps.

The link to OpenAI’s tool is always opt-in, but it might signal that Apple’s own generative AI tools aren’t quite as mature as those of its competitors. Future updates will enable Siri to connect to other models.

Like Microsoft and others, Apple tried to assuage privacy concerns by stressing that, most of the time, its generative AI runs tasks entirely on-device without contacting cloud servers. Workloads that do require cloud models will run on Apple Silicon servers whose code can be independently inspected, rather than on third-party data centers. The servers won’t store information sent from devices, and all software involved must be publicly logged for inspection.

Whether Apple Intelligence can avoid the hallucination problem that has dogged all generative AI models remains to be seen. Furthermore, integrating ChatGPT into the system introduces all of the trustworthiness, privacy, and copyright concerns that have long surrounded OpenAI’s tool.

Apple Intelligence will be available only in U.S. English when it launches this fall, with other languages coming later.