Apple Intelligence: Siri gets an LLM brain transplant, ChatGPT integration, and Genmojis

We knew that artificial intelligence would be part of Apple’s WWDC 2024 keynote. The company came out swinging with its own take on AI powered by large language models (LLMs) — though AI, in this instance, stands for “Apple Intelligence,” its all-encompassing set of features that will work across its iOS, iPadOS, and macOS platforms starting this summer (in beta).

Apple says it wants to make its devices more helpful to users by using AI to assist with tasks ranging from big to small. It will do so by performing the majority of processing on-device, preserving your privacy. However, if a task can’t be completed on-device, Apple will take advantage of its Private Cloud Compute platform — which, of course, runs on Apple Silicon.

Siri gets a big update, courtesy of Apple Intelligence

Siri first launched 13 years ago as Apple’s take on the personal assistant. However, most industry observers have felt that Siri has long lagged behind the competition in functionality. Apple aims to change that with the new Apple Intelligence-infused version of Siri, which accepts typed prompts (by double-tapping at the bottom of the screen) as well as voice, and can remember and build upon your previous queries instead of treating each new request independently.

Siri now sounds more natural and understands language better. For example, if you stumble over your words or have to regather your thoughts during a query, Siri can still process the information without issue. Siri will also have “on-screen awareness,” meaning that it can take input from what is on your screen, such as text in a message. For example, if a friend shared their address with you in a text message, you could simply say, “Add address from Jason’s text to his contact.”

These new abilities will even extend to images in the Photos app. During the keynote, a presenter told Siri, “Show me photos of Stacey in New York wearing a pink coat.” The results were then shown. After selecting one of the photos, the presenter told Siri, “Add this photo to my note with Stacey’s bio.” Siri was able to use context to figure out what the presenter wanted to do and took action based on her prompt.

These capabilities extend across all of Apple’s first-party apps and even third-party apps using the App Intents API. Siri can help you find specific bits of information even if you don’t remember whether they came from a text, an email, or a previous note. For example, if you’re filling out a form that requires your driver’s license number, Siri can search your device to find an image of your driver’s license and can input the corresponding number into the required text field.

Another demo involved the presenter asking when her mom’s flight was landing. Siri brought up the flight itinerary from the airline’s app, including the updated time of touchdown. She then asked about the lunch plans that she had made with her mom, and Siri showed those as well. Finally, she asked Siri how long it would take to get from the airport to the restaurant for lunch, and Siri plotted out the route with Apple Maps.

Apple Intelligence in apps

One of the cool things that Apple showed was a new feature called Genmoji in Messages. You can create your own Genmoji in iOS 18, iPadOS 18, and macOS Sequoia. One example took the input of “smiley relaxing with cucumbers over its eyes” and output the requisite Genmoji in the familiar yellow-faced hue. You can swipe to see different variations of the generated output and find the one that best suits your taste.

Apple is also introducing Image Playground, which is accessible through the Messages app. You can generate your own cartoonish images using text or voice prompts. Image Playground is also available as a standalone app, and third-party apps will be able to tap into the API for their own use. Speaking of images, a feature similar to Google’s Magic Eraser will be coming to the editing function in the Photos app.

We’ve already seen many of the features that Apple is touting from other companies (for example, the ability to summarize a long-winded email, rewrite the text in your email for clarity, or change its tone). Such features will be available system-wide across all of Apple’s operating systems.

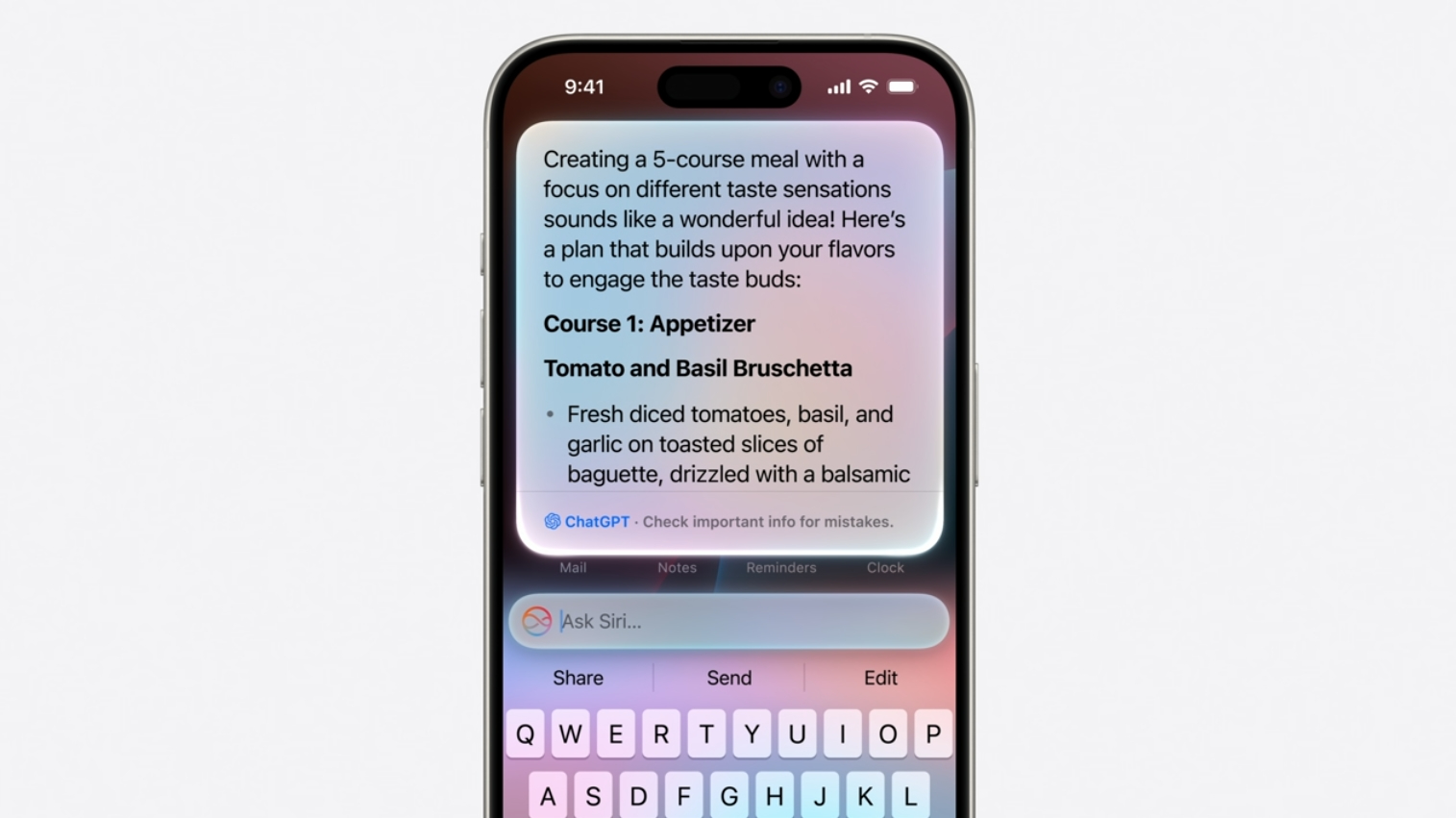

Apple’s partnership with OpenAI

If you’re a big fan of ChatGPT, then the latest agreement between Apple and OpenAI will be music to your ears. ChatGPT, powered by GPT-4o, is coming to Apple’s new operating systems free of charge, with no external account needed.

Apple says that users will be able to switch from its LLMs to ChatGPT if the latter offers superior results. When making a Siri query, an option to access ChatGPT will be provided. You will then be asked to give permission to let ChatGPT handle the heavy lifting from that point forward. Although baseline access is available for free, those who subscribe to ChatGPT’s premium features can link their accounts to integrate that functionality.

Final thoughts

Apple Intelligence overall seems like a full-throated take on AI by Apple. The company is notoriously slow to jump on emerging trends in tech, and Apple Intelligence is no exception. However, the company’s emphasis on privacy, along with its partnership with OpenAI, at least puts it on the right path to introducing LLMs to its vast customer base.

While some of the features, such as Genmoji and Image Playground, might seem a bit hokey (remember Memojis?), the extensive improvements to Siri alone will be welcome additions to iOS 18, iPadOS 18, and macOS Sequoia, which will be released to the public this fall (although developer and beta versions will launch sooner).