Artificial Intelligence — both a problem and solution to our climate crisis?

In 2020, carbon footprint researcher and writer Mike Berners-Lee detailed the carbon footprint of almost everything in his book How Bad Are Bananas?, from consuming, well, a banana, a large oat latte or a glass of tap water to volcanic eruptions, wars and the World Cup. On page 18, we learn that 5 minutes of browsing the web on a laptop emits 8.6g of CO2e, which, really, isn’t that significant: it’s roughly 0.0001% of the average person’s annual carbon footprint (7 tons of CO2e). However, on page 166, we learn that the world’s data centres, the ‘Cloud’ that stores all of the data we access, emitted 160 million tons of CO2e in 2020. Information, databases, downloads, photos and videos are stored in industrial-scale buildings containing computer and storage systems, servers, IT operations and networks, all of which need large amounts of electricity to stay powered and cool.
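For readers who want to check the percentage, here is a minimal sketch of the arithmetic. The two figures are the ones quoted above; treating "tons" as metric tonnes (one million grams each) is my assumption, and the function name is purely illustrative.

```python
# Figures quoted in the article (from Berners-Lee's book).
BROWSING_G_CO2E = 8.6           # 5 minutes of laptop web browsing, in grams of CO2e
ANNUAL_FOOTPRINT_TONNES = 7.0   # average person's annual footprint, in tonnes of CO2e

# Assumption: the "tons" in the text are metric tonnes.
GRAMS_PER_TONNE = 1_000_000


def share_of_annual_footprint(grams: float, annual_tonnes: float) -> float:
    """Return `grams` as a percentage of an annual footprint given in tonnes."""
    return grams / (annual_tonnes * GRAMS_PER_TONNE) * 100


share = share_of_annual_footprint(BROWSING_G_CO2E, ANNUAL_FOOTPRINT_TONNES)
print(f"{share:.6f}% of the annual footprint")  # prints 0.000123% of the annual footprint
```

The result rounds to the 0.0001% quoted above, so a single browsing session really is negligible on its own. The scale only becomes visible once billions of such sessions are served from the same data centres.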

The world’s ICT emits 1.4 billion tons of CO2e, accounting for 2.5% of global emissions. According to OpenAI researchers, by 2040, ICT emissions could account for 14% of global emissions. This includes everything that makes the current era one of information and technology, and amongst that, Artificial Intelligence. The exponential increase in AI usage has been viewed by many as ground-breaking, innovative and, frankly, quite seductive, even as AI chatbots progressively take over our labour markets. AI has found its footing in the sciences, medicine, aeronautics, biology, engineering and software, but also in creative fields such as visual arts and music. From AI Spotify DJs to programs that detect fraudulent credit card activity, its efficiency and automated nature have given scientists the impetus to intensify its development. Not only that, but AI has proved itself a useful tool for climate action, facilitating impact assessments and powering prediction models, as well as contributing to developments in monitoring and energy efficiency. As email and texting replace paper letters and videoconferencing reduces commuting time and hence transport emissions, wouldn’t it make sense to expect AI to bring about a decrease in global carbon emissions in the future?

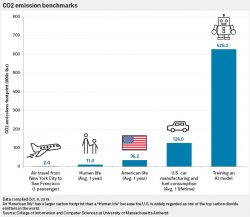

Ahead of this year’s AI summit in Seoul, an expert panel chaired by leading computer scientist Yoshua Bengio, a professor at the University of Montreal, published an interim report on rising safety concerns regarding AI. The report warns that the computing power AI requires may make it the largest contributor to data centre electricity consumption in the future. The carbon footprint of AI hardware is made up of emissions from manufacturing, transportation, the physical building infrastructure and electronic waste disposal, as well as from the energy and water needed to power and cool the systems. A study conducted by University of Massachusetts researchers found that training a single large AI model can emit over 300 tons of CO2e, almost five times the lifetime emissions of an average American car, including its manufacture. The carbon footprint of AI is masked behind its triumphs, but is undeniably enormous, and doesn’t seem likely to decrease anytime soon.

Interestingly, Mike Berners-Lee understands ICT and technology in the modern age through the Jevons paradox, and I’d like to borrow this thought process for the increased use of AI. The idea that AI will bring about an increase in energy efficiency is appealing in theory: technological automation could even give humans the time and resources to focus on other pursuits. But unfortunately, as AI usage increases drastically, it could have the opposite effect. Efficiency gains make each use cheaper, which may lead to higher demand in the industry in question, and hence to increased environmental burdens. Essentially, any gains made in energy efficiency will be offset by increased consumption, as economist William Stanley Jevons observed in the 19th century with regard to steam engines and coal consumption. But arguably, with AI, it’s too early to tell.
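To make the paradox concrete, here is a toy calculation. Every number in it is hypothetical and chosen only for illustration; none comes from Berners-Lee, Jevons or any measurement of AI. It assumes each task becomes twice as energy efficient while the number of tasks triples.

```python
def total_energy(energy_per_task: float, tasks: int) -> float:
    """Total energy used: energy per task multiplied by the number of tasks."""
    return energy_per_task * tasks


# Hypothetical "before": 100 tasks at 1 unit of energy each.
before = total_energy(energy_per_task=1.0, tasks=100)

# Hypothetical "after": each task needs half the energy,
# but cheaper tasks attract three times as much demand.
after = total_energy(energy_per_task=0.5, tasks=300)

print(before, after)  # prints 100.0 150.0
```

In this made-up scenario, total consumption rises by half even though every individual task became more efficient. Whether AI demand will actually grow faster than its efficiency improves is exactly the open question.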

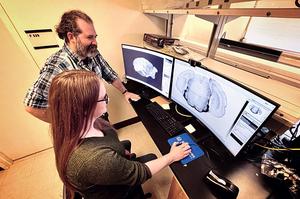

Does this mean we should stop AI development altogether for the sake of the environment? Do scientists see a future in which we coexist sustainably (whether environmentally or generally, in fact) with AI? Shirin Dora, computer science lecturer at Loughborough University, explains that there may be opportunities to amend the training process of AI models so that it takes their carbon footprint into account. Artificial Neural Networks (ANNs) are the models most commonly used in current AI systems: they work by loosely replicating the brain’s neural systems, learning patterns by analysing lots of data, and therefore require lots of memory, energy and time. While an ANN’s artificial neurons are always active, the neurons of Spiking Neural Networks (SNNs) are active only when a spike occurs. These spikes, small intermittent electrical signals, transfer information through their binary, ‘all-or-none’ timing pattern, similarly to the way Morse code works. Because their neurons stay silent most of the time, SNNs can be much more energy efficient than ANNs. Another way to improve the energy efficiency of AI models is to integrate lifelong learning algorithms, which allow the models to build on pre-existing knowledge as opposed to being retrained from scratch when their environment shifts.
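The ‘all-or-none’ behaviour can be sketched with a leaky integrate-and-fire neuron, a common simplified textbook model of a spiking neuron. This is an illustrative assumption on my part and not necessarily the model Dora works with; the threshold, leak factor and input values are all hypothetical.

```python
def lif_spike_train(inputs, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron and return its 0/1 spike train.

    `threshold` and `leak` are hypothetical values chosen for illustration.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # The stored potential decays ("leaks") and then absorbs the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # all-or-none spike sent downstream
            potential = 0.0    # reset after firing
        else:
            spikes.append(0)   # silent step: nothing is transmitted
    return spikes


# Hypothetical input signal over ten time steps.
inputs = [0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.6, 0.6, 0.0, 0.0]
spikes = lif_spike_train(inputs)

print(spikes)  # prints [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(f"active on {sum(spikes)} of {len(spikes)} steps")  # prints active on 2 of 10 steps
```

A conventional artificial neuron would produce an output on all ten steps, whereas this one signals on only two. Counting active steps is only a rough proxy for energy use, but it shows where the potential savings come from.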

On a more obvious note, data centres generally run on electricity derived from fossil fuels. By contrast, France’s power grid is relatively clean, powered mainly by nuclear energy and renewable sources, so data centres connected to it can run AI with a smaller carbon footprint. The sustainable future of AI therefore inevitably relies, once again, on ending the world’s current dependency on fossil fuels. But that is up to the technocrats to decide.