Artificial intelligence enables precision diagnosis of cervical cytology grades and cervical cancer

Study design and participants

Between January 2016 and December 2020, a total of 16,056 eligible participants were enrolled in this multicenter study. Separate datasets, comprising retrospective and prospective population-based datasets and a randomized observational trial, were used to train and validate the AICCS system for the auxiliary diagnosis of cervical cytology grade and cervical cancer. The AICCS system consists of two main functional AI models: a patch-level cell detection model and a whole-slide image (WSI)-level classification model. Our study adhered to the reporting and analysis guidelines of STARD, CONSORT-AI Extension, and the MI-CLAIM checklist27,28,29. This study obtained approval from the institutional review boards of each participating hospital and adhered to the principles outlined in the Declaration of Helsinki.

The AICCS system was trained and validated for cervical cancer screening in three phases. In the first, proof-of-concept (POC) phase, WSIs were acquired retrospectively to train the system. In the second, validation phase, the AICCS underwent internal and external validation using multicenter retrospective and prospective population-based datasets. In the third phase, the system was further validated in a randomized observational trial. The study design flowchart is presented in Supplementary Figs. 1 and 2.

WSI acquisition and preprocessing

To acquire WSIs, cervical liquid-based preparation samples, collected and maintained using the sedimentation liquid-based preparation method, were first digitized with two digital pathology scanners manufactured in China. The first was the PRECICE 600 (UNIC TECHNOLOGIES, INC.), equipped with a 40× objective lens and providing a specimen-level pixel size of 0.2529 μm × 0.2529 μm. The second was the KF-PRO-400-HI (Ningbo Jiangfeng Bio-Information Technology Co., Ltd.), also equipped with a 40× objective lens and providing a specimen-level pixel size of 0.2484 μm × 0.2484 μm. Depending on the size of the smear, each WSI scanned at 40× contained billions of pixels, with file sizes ranging from several hundred megabytes to a few gigabytes. All WSIs were saved in a proprietary confidential format.
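
The "billions of pixels" figure follows directly from the reported pixel sizes. The short Python sketch below converts a scan area into a pixel count for each scanner. The 15 mm × 15 mm area is a hypothetical example rather than a value from the study, and the uncompressed size assumes 8-bit RGB.

```python
# Specimen-level pixel sizes reported for the two scanners (micrometres per pixel at 40x).
PIXEL_SIZE_UM = {"PRECICE 600": 0.2529, "KF-PRO-400-HI": 0.2484}


def pixels_for_region(width_mm, height_mm, pixel_size_um):
    """Number of pixels needed to cover a width_mm x height_mm region."""
    px_w = round(width_mm * 1000 / pixel_size_um)
    px_h = round(height_mm * 1000 / pixel_size_um)
    return px_w * px_h


# Hypothetical 15 mm x 15 mm scan area; the real area depends on the smear.
for scanner, size in PIXEL_SIZE_UM.items():
    n = pixels_for_region(15, 15, size)
    raw_gb = n * 3 / 1e9  # 3 bytes per pixel (8-bit RGB), before any compression
    print(f"{scanner}: {n / 1e9:.2f} gigapixels, ~{raw_gb:.1f} GB uncompressed")
```

A region of this size comes to roughly 3.5 gigapixels, or about 10 GB as raw RGB. This suggests that the stored files, at several hundred megabytes to a few gigabytes, are compressed.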

Six cytopathologists from the Cellular and Molecular Diagnostics Center, SYSMH, each with more than 5 years of experience, performed cell annotation and WSI classification according to The Bethesda System (TBS) 2014 guidelines. Negative smears did not require annotation or labeling. Following TBS 2014 criteria, a sample was considered satisfactory if it contained at least 5000 well-visualized, unobscured squamous epithelial cells or if abnormal cells (atypical squamous cells, atypical glandular cells, or higher-grade lesions) were present. Samples that did not meet these criteria were excluded. These included samples with fewer than 5000 visible, unobscured squamous epithelial cells and samples in which blood, inflammatory cells, overlapping epithelial cells, poor fixation, excessive drying, or contamination by unknown material obscured more than 75% of the squamous epithelial cells.
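
The adequacy criteria can be expressed as a small rule. The following is a sketch under stated assumptions. The per-slide counts are taken as given, since how they would be obtained is not described here, and the class and function names are hypothetical. Following TBS 2014, a specimen that contains abnormal cells is treated as satisfactory regardless of cell count.

```python
from dataclasses import dataclass

MIN_SQUAMOUS_CELLS = 5000     # TBS 2014 minimum for liquid-based preparations
MAX_OBSCURED_FRACTION = 0.75  # more than 75% obscured -> unsatisfactory


@dataclass
class SmearSummary:
    """Hypothetical per-slide summary; how these values are measured is assumed."""
    visible_squamous_cells: int  # well-visualized, unobscured squamous cells
    obscured_fraction: float     # fraction of squamous cells obscured (blood, drying, ...)
    has_abnormal_cells: bool     # ASC / AGC or a higher-grade lesion present


def is_satisfactory(s: SmearSummary) -> bool:
    # Any specimen with abnormal cells is satisfactory by definition.
    if s.has_abnormal_cells:
        return True
    # Otherwise the slide must not be mostly obscured ...
    if s.obscured_fraction > MAX_OBSCURED_FRACTION:
        return False
    # ... and must contain enough visible squamous cells.
    return s.visible_squamous_cells >= MIN_SQUAMOUS_CELLS
```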

Quality control was ensured through participant eligibility assessment and strict specimen criteria. Participants were eligible if they were 18 years or older, not pregnant, and free from mental illness or cognitive impairment. All participants consented to cervical liquid-based cytology for definitive diagnosis. In addition to manual quality control, we used an AI-assisted approach to identify scanning quality problems during digitization. Specifically, we developed an image classification model that uses WSI thumbnails to detect scanning defects such as blurriness or incompletely scanned areas (Supplementary Fig. 6). The thumbnails were scaled-down versions of the original WSIs, resized so that their short edge was 1000 pixels. WSIs flagged by this model were reviewed manually and excluded from the dataset if the scanning quality issue was confirmed.
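
Resizing so that the short edge is 1000 pixels preserves the aspect ratio of the slide. Here is a minimal sketch of the size calculation. The function name is hypothetical.

```python
def thumbnail_size(width, height, short_edge=1000):
    """Return (w, h) scaled so the shorter side equals short_edge, keeping aspect ratio."""
    scale = short_edge / min(width, height)
    return round(width * scale), round(height * scale)


# A hypothetical 100,000 x 80,000 pixel WSI becomes a 1250 x 1000 thumbnail.
print(thumbnail_size(100_000, 80_000))
```

The resulting (width, height) could then be passed to an image library, for example Pillow's `Image.resize`, to produce the thumbnail.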

The quality assessment model comprised three main components: an EfficientNet backbone for feature extraction, a quality summary branch, and a quality detail branch. The quality summary branch provided an overall assessment of slide quality, performing binary classification to label each slide as acceptable or problematic. The quality detail branch offered a finer-grained evaluation, using multi-label classification to identify the specific types of quality problems in a slide and to estimate their severity.
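
The two-branch design can be illustrated by the output heads alone. In this NumPy sketch the EfficientNet backbone is replaced by random stand-in features, the weights are untrained, and the issue labels are hypothetical. The point is the different output activations. The summary branch uses a softmax over two mutually exclusive classes. The detail branch uses an independent sigmoid per issue, and those probabilities stand in here for severity scores.

```python
import numpy as np

ISSUE_LABELS = ["blur", "incomplete_scan"]  # hypothetical issue types


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(x):
    z = np.exp(x - x.max(axis=-1, keepdims=True))  # subtract max for numerical stability
    return z / z.sum(axis=-1, keepdims=True)


class QualityHeads:
    """Summary and detail heads on shared backbone features (backbone not shown)."""

    def __init__(self, feat_dim, n_issues, rng):
        # Untrained placeholder weights; a real model would learn these.
        self.w_summary = rng.normal(0.0, 0.01, (feat_dim, 2))
        self.w_detail = rng.normal(0.0, 0.01, (feat_dim, n_issues))

    def __call__(self, features, threshold=0.5):
        p_summary = softmax(features @ self.w_summary)  # acceptable vs problematic
        p_detail = sigmoid(features @ self.w_detail)    # one independent score per issue
        return {
            "problematic": p_summary[..., 1] > threshold,
            "issue_probs": p_detail,
            "issues": p_detail > threshold,
        }


rng = np.random.default_rng(0)
heads = QualityHeads(feat_dim=16, n_issues=len(ISSUE_LABELS), rng=rng)
out = heads(rng.normal(size=(4, 16)))  # 4 slides, random stand-in features
```

Independent sigmoids let a slide be flagged for several problems at once, for example both blur and an incomplete scan, which a single softmax over issue types could not express.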

ROI annotation and WSI labeling

All cervical liquid-based preparation samples were reviewed by cytopathologists from the Cellular and Molecular Diagnostics Center, SYSMH, and unsatisfactory samples were excluded. After digitization, each WSI was randomly assigned to two of the six cytopathologists for independent annotation and labeling. When the two did not reach consensus, an expert cytopathologist reviewed the case. In the patch-level annotation phase, 2848 WSIs served as training data for the patch-level deep neural network detection model. Each cytopathologist was randomly allocated 950 WSIs and, following TBS 2014, marked positive cells in each WSI with bounding boxes and labeled them. In the WSI-level classification phase, 9316 WSIs from the SYSMH training dataset were diagnosed and labeled by the cytopathologists (Supplementary Fig. 1), with approximately 3106 WSIs randomly allocated to each cytopathologist.
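
The study does not describe how the random allocation was balanced across readers. One hypothetical scheme gives each of the six cytopathologists roughly the same workload by cycling through all 15 reader pairs in random order. Discordant labels are routed to an expert, as in this sketch. All names are illustrative.

```python
import itertools
import random
from collections import Counter


def assign_readers(wsi_ids, n_readers=6, seed=0):
    """Assign each WSI to two distinct readers (hypothetical balanced scheme).

    Reader pairs are cycled in a freshly shuffled order, so every reader
    receives roughly 2 * len(wsi_ids) / n_readers slides.
    """
    rng = random.Random(seed)
    ids = list(wsi_ids)
    rng.shuffle(ids)
    pairs = list(itertools.combinations(range(n_readers), 2))
    assignment = {}
    for i, wsi in enumerate(ids):
        if i % len(pairs) == 0:
            rng.shuffle(pairs)  # new random pair order for each cycle
        assignment[wsi] = pairs[i % len(pairs)]
    return assignment


def final_label(label_a, label_b, expert_review):
    """Keep concordant labels; send discordant ones to an expert reviewer."""
    return label_a if label_a == label_b else expert_review(label_a, label_b)


assignment = assign_readers(range(2848))
load = Counter(r for pair in assignment.values() for r in pair)
print(sorted(load.values()))
```

For 2848 WSIs this gives each reader between 948 and 950 slides, in line with the 950 reported.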

Based on the TBS 2014 guidelines, the categories included negative for intraepithelial lesion or malignancy (NILM) and epithelial lesions. Epithelial lesions comprised squamous and glandular epithelial lesions. Squamous epithelial lesions were further categorized into ASC-US, LSIL, ASC-H, HSIL, and SCC. Glandular epithelial lesions included atypical glandular cells, not otherwise specified (AGC-NOS); atypical glandular cells, favor neoplastic (AGC-FN); endocervical adenocarcinoma in situ (AIS); and adenocarcinoma (ADC). A list of all abbreviations and classifications is provided in Supplementary Table 10.

Patch-level annotation of WSIs comprised two phases: an initial manual annotation phase and an AI-suggested annotation phase. In the initial manual phase, cytopathologists labeled a subset of patches, grouping all glandular epithelial lesions into a single category of atypical glandular cells (AGC). This grouping reflected the low detection rate, limited number of specimens, overlapping morphological features, and similar clinical management of glandular lesions. Cytopathologists therefore annotated highly representative or typical positive cells in six categories: ASC-US, LSIL, ASC-H, HSIL, SCC, and AGC. After this initial phase, a detection model was trained and run with sliding-window inference on WSIs to generate AI-suggested regions of interest (ROIs) containing cells identified as intraepithelial lesions (Supplementary Fig. 7). Cytopathologists reviewed and annotated these AI-suggested ROIs, confirming or adjusting them, before they were added to the patch-level training dataset. Repeating this cycle progressively refined model performance with expert cytopathological input. It trained the model to recognize abnormal cells within WSIs that may each contain tens of thousands of cells. This approach yielded a training dataset with high-quality annotations while expediting the annotation process. The output of the cell detection model served as the input for the WSI-level classification model, making it a central component of the overall analytical framework.

For WSI-level classification, patches annotated as ASC-H, HSIL, and SCC were grouped into the WSI-level category of HSIL+ owing to their morphological similarities and similar clinical management. Consequently, WSI-level classifications comprised five categories: NILM, ASC-US, LSIL, HSIL+, and AGC. The detailed procedures for classification at both the patch-level and WSI-level are presented in Supplementary Fig. 8.
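
The two-level label grouping can be written as a pair of lookup functions. This sketch assumes the label strings shown. It maps the fine-grained TBS 2014 glandular categories to AGC at the patch level. It then groups ASC-H, HSIL, and SCC into HSIL+ at the WSI level.

```python
# Fine-grained glandular categories grouped into AGC for the patch-level detector.
GLANDULAR = {"AGC-NOS", "AGC-FN", "AIS", "ADC"}

# Patch-level class -> WSI-level category.
WSI_GROUP = {
    "ASC-US": "ASC-US",
    "LSIL": "LSIL",
    "ASC-H": "HSIL+",
    "HSIL": "HSIL+",
    "SCC": "HSIL+",
    "AGC": "AGC",
}


def patch_class(tbs_label):
    """Map a fine-grained TBS 2014 label to one of the six patch-level classes."""
    return "AGC" if tbs_label in GLANDULAR else tbs_label


def wsi_class(label):
    """Map a TBS 2014 label (or NILM) to one of the five WSI-level categories."""
    return "NILM" if label == "NILM" else WSI_GROUP[patch_class(label)]
```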

Data augmentation, particularly color augmentation, was applied systematically rather than arbitrarily. This was done because augmentation plays a crucial role in improving the accuracy of deep learning object detection frameworks and in reducing overfitting. Augmentation strategies included random patch crops around annotated cells with different overlap ratios, random rotations, and alterations in staining color. The color augmentation procedure was as follows. Before training, the distributions of the H and E components across all images in the training set were quantified. Their means (μ), standard deviations (σ), and upper and lower bounds were then fitted. During training, the H and E components of the current image were first obtained. The H component, the E component, both, or neither were then randomly selected, and for each selected component a random value was sampled uniformly from [−2σ, 2σ]. These values were added to the original components, and the results were kept within the fitted upper and lower bounds. In terms of implementation, each RGB image was transformed into stain density absorbance (SDA) space, and the Macenko method was used to perform color deconvolution, yielding a 3 × 3 stain component matrix. The perturbed stain component matrix was then used to reconstruct the SDA image in RGB space. This approach kept color augmentation controlled and consistent with common practice in pathological image processing, thereby mitigating performance variation arising from staining differences.
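
The color augmentation can be sketched in NumPy. This illustration rests on several assumptions and is not the authors' code. The "H and E components" are interpreted as the hematoxylin and eosin stain vectors, meaning the first two columns of the Macenko stain matrix. The Macenko parameters (β = 0.15 optical-density threshold, α = 1st/99th angle percentiles) are commonly used defaults. The third column is a residual vector orthogonal to H and E. All function names are hypothetical.

```python
import numpy as np

I0 = 255.0  # assumed background (maximum) transmitted intensity


def rgb_to_od(img):
    """uint8 RGB image (H, W, 3) -> stain density absorbance (optical density), (N, 3)."""
    return -np.log((img.reshape(-1, 3).astype(np.float64) + 1.0) / I0)


def od_to_rgb(od, shape):
    """Inverse of rgb_to_od, clipped to the valid uint8 range."""
    return np.clip(I0 * np.exp(-od) - 1.0, 0, 255).reshape(shape).astype(np.uint8)


def macenko_stain_matrix(od, beta=0.15, alpha=1.0):
    """3x3 stain matrix (columns: H, E, residual) estimated with the Macenko method."""
    tissue = od[np.all(od > beta, axis=1)]             # drop near-transparent pixels
    _, eigvecs = np.linalg.eigh(np.cov(tissue.T))      # eigenvalues in ascending order
    plane = eigvecs[:, 1:3]                            # two largest eigenvectors
    plane = plane * np.where(plane[0] < 0, -1.0, 1.0)  # fix eigenvector sign
    proj = tissue @ plane
    phi = np.arctan2(proj[:, 1], proj[:, 0])
    lo, hi = np.percentile(phi, [alpha, 100 - alpha])  # robust extreme angles
    v1 = plane @ np.array([np.cos(lo), np.sin(lo)])
    v2 = plane @ np.array([np.cos(hi), np.sin(hi)])
    h, e = (v1, v2) if v1[0] > v2[0] else (v2, v1)     # hematoxylin: larger red OD
    r = np.cross(h, e)
    return np.stack([h, e, r / np.linalg.norm(r)], axis=1)


def fit_stain_stats(stain_matrices):
    """Per-element mean, std and bounds of the H and E columns over the training set."""
    cols = np.stack([m[:, :2] for m in stain_matrices])  # (n_images, 3, 2)
    return cols.mean(0), cols.std(0), cols.min(0), cols.max(0)


def augment_stain(img, stats, rng):
    """Randomly perturb the H, E, both or neither stain vectors, then rebuild RGB."""
    _, sigma, lower, upper = stats
    od = rgb_to_od(img)
    m = macenko_stain_matrix(od)
    conc = np.linalg.solve(m, od.T)          # (3, N) per-pixel stain concentrations
    choice = int(rng.integers(4))            # 0 neither, 1 H, 2 E, 3 both
    m_new = m.copy()
    for c in {0: [], 1: [0], 2: [1], 3: [0, 1]}[choice]:
        noise = rng.uniform(-2 * sigma[:, c], 2 * sigma[:, c])
        m_new[:, c] = np.clip(m[:, c] + noise, lower[:, c], upper[:, c])
    return od_to_rgb((m_new @ conc).T, img.shape)
```

The stain concentrations stay fixed and only the stain vectors move. As a result, the perturbation changes color while leaving cell morphology untouched.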

Development and architecture of the AICCS system for cervical cytology diagnosis

The AICCS system was trained and validated using retrospectively obtained images from 11,468 eligible individuals at SYSMH. These images were randomly divided into a training cohort (n = 9316) and an internal validation cohort (n = 2152) at an approximately 4:1 ratio. The AICCS system consisted of two major functional models: a patch-level cell detection model and a WSI-level classification model.
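
Splitting at the level of individuals keeps all images from one participant on the same side of the split. Below is a minimal sketch with hypothetical names. The validation fraction is set from the reported counts, since 9316:2152 is about 4.3:1 rather than exactly 4:1.

```python
import random


def split_cases(case_ids, val_fraction=0.2, seed=42):
    """Randomly split participant IDs into training and validation lists."""
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)
    n_val = round(len(ids) * val_fraction)
    return ids[n_val:], ids[:n_val]  # (training, validation)


train_ids, val_ids = split_cases(range(11468), val_fraction=2152 / 11468)
print(len(train_ids), len(val_ids))
```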

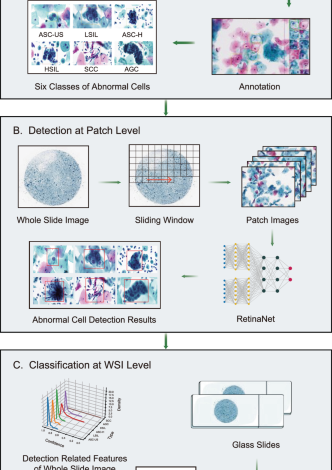

In operation, the trained cell detection model divided each WSI into smaller patches using a sliding window and detected abnormal cells based on the criteria defined by TBS 2014. The output of the cell detection model served as input for the WSI-level classification model.
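
A sliding-window tiler needs only the slide dimensions, a patch size, and an overlap. The patch size (1024 pixels) and overlap (128 pixels) below are hypothetical values. The study does not report them here.

```python
def sliding_windows(width, height, patch=1024, overlap=128):
    """Yield (x, y) top-left corners of patch x patch tiles covering a WSI.

    The last row and column are shifted inward so every tile lies fully
    inside the slide while the right and bottom edges are still covered.
    """
    if width < patch or height < patch:
        raise ValueError("slide is smaller than one patch")
    stride = patch - overlap
    xs = list(range(0, width - patch + 1, stride))
    ys = list(range(0, height - patch + 1, stride))
    if xs[-1] != width - patch:
        xs.append(width - patch)
    if ys[-1] != height - patch:
        ys.append(height - patch)
    for y in ys:
        for x in xs:
            yield x, y


tiles = list(sliding_windows(3000, 2000))
print(len(tiles), tiles[:3])
```

With overlapping tiles, the same cell can be detected in two neighboring patches. Such detections are typically merged afterwards, for example with non-maximum suppression.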

The WSI-level classification model used the results of the patch-level cell detection model to compute a set of well-designed features. These features were fed into the trained WSI-level classification model, which assigned one of five cytology grades according to TBS 2014: NILM, ASC-US, LSIL, HSIL+, and AGC. The AICCS workflow is depicted in Fig. 1.

Validation and comparative analysis of the AICCS system: retrospective and prospective evaluation

The performance of the AICCS system in classifying cervical cytology images was first validated retrospectively on the SYSMH internal validation dataset (n = 2152). External validation was then conducted using the GWCMC (n = 600) and TAHGMU (n = 600) datasets.

To further evaluate the generalizability and robustness of the AICCS system in clinical practice, cervical cytology images from 2780 eligible participants were collected prospectively at SYSMH. Each image was reviewed individually by cytopathologists. Their results were compared with those of the AICCS alone and with those of cytopathologists assisted by the AICCS.

In the cytopathologist group, digital cervical images were screened individually by cytopathologists, who determined the TBS class of each image without any additional assistance. In the AICCS group, screening results were generated automatically by the AICCS alone. In the AICCS-assisted cytopathologist group, the AICCS first screened the WSI and drew bounding boxes around potentially abnormal cells. A cytopathologist then performed a second screening before making the final decision.

Evaluation and randomized observational trial design

To compare the performance of cytopathologists, the AICCS alone, and AICCS-assisted cytopathologists, a randomized observational trial was conducted at SYSMH from August 13, 2020, to December 14, 2020. A total of 608 participants who met our inclusion criteria were randomly assigned in a 1:1:1 ratio to receive a diagnosis from cytopathologists, the AICCS alone, or AICCS-assisted cytopathologists. There were no withdrawals from the study after randomization. To prevent selection bias, randomization was performed using a random number generator without any stratification factors. After receiving an initial diagnosis, all participants, identified only by a masked identification number, received a gold-standard diagnosis from an expert cytopathologist.
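
One way to implement unstratified 1:1:1 allocation with a random number generator is to build an arm list with equal counts and shuffle it. The exact scheme used in the trial is not specified. The seed and arm names below are illustrative.

```python
import random
from collections import Counter

ARMS = ("cytopathologist", "AICCS alone", "AICCS-assisted")


def allocate(participant_ids, seed=2020):
    """1:1:1 allocation without stratification: build a balanced arm list and shuffle it."""
    ids = list(participant_ids)
    arms = [ARMS[i % len(ARMS)] for i in range(len(ids))]
    random.Random(seed).shuffle(arms)
    return dict(zip(ids, arms))


allocation = allocate(range(608))
print(Counter(allocation.values()))
```

For 608 participants this yields arms of 203, 203, and 202.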

AICCS algorithms

Deep learning-based object detection models fall into two primary categories: two-stage detectors and one-stage detectors. The two-stage approach first generates region proposals and then classifies and refines them. The R-CNN family of detectors, including R-CNN, Fast R-CNN, and Faster R-CNN30, gained wide popularity and remained state-of-the-art for an extended period. In contrast, one-stage detectors skip the intermediate region proposal step and predict object class labels and bounding box coordinates directly in a single pass. This often makes them faster than two-stage methods, and owing to this efficiency they have become popular choices for real-time object detection. Prominent examples of one-stage detectors include YOLO, SSD, and RetinaNet31.

In the proposed AICCS system, we adopted RetinaNet, a one-stage object detector, as the patch-level abnormal cell detection model, based on our comparison of the two major detection approaches (Supplementary Tables 1, 2). RetinaNet is a convolutional neural network (CNN) that uses ResNet+FPN (ResNet plus a feature pyramid network) as its backbone for feature extraction, together with two task-specific subnetworks for classification and bounding box regression. Notably, RetinaNet introduces the focal loss, which down-weights the loss from easy, well-classified examples. This addresses the imbalance between foreground and background, a common issue in medical data. ResNet's identity shortcut connections, which bypass one or more layers, mitigate the vanishing gradient problem in deep neural networks. The FPN augments a standard convolutional network with a top-down pathway and lateral connections, efficiently constructing a multi-scale feature pyramid from a single-resolution input image.
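
The focal loss multiplies the cross-entropy by a modulating factor (1 − p_t)^γ, so that easy, well-classified anchors (mostly background) contribute little to training. Here is a minimal per-anchor sketch. α = 0.25 and γ = 2 are the defaults reported in the original RetinaNet paper. They are not settings confirmed for AICCS.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one anchor and class: -alpha_t * (1 - p_t)**gamma * log(p_t).

    p is the predicted probability of the positive class, y is 0 or 1.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p, y):
    """Plain binary cross-entropy, for comparison."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


easy_background = focal_loss(0.01, 0) / cross_entropy(0.01, 0)
hard_positive = focal_loss(0.1, 1) / cross_entropy(0.1, 1)
print(easy_background, hard_positive)
```

For a confidently rejected background anchor (p = 0.01, y = 0), the focal loss is 0.75 × 10⁻⁴ times the cross-entropy. For a hard positive (p = 0.1, y = 1), it is still 0.25 × 0.81 ≈ 0.2 times the cross-entropy. The many easy negatives therefore no longer dominate the total loss.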

Considering the TBS 2014 guidelines and the real-world data distribution, we designed our patch-level detector to distinguish six classes of abnormal cells: ASC-US, LSIL, ASC-H, HSIL, SCC, and AGC. Because squamous and glandular cells have distinct morphological patterns, the detector integrates an additional binary classifier subnetwork that distinguishes squamous epithelial cells from glandular cells. This subnetwork complements the conventional subnetworks for multiclass recognition and position localization, and the losses from all subnetworks were combined during training.
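
Combining the subnetwork losses amounts to a weighted sum. In this sketch, the loss weights, the smooth L1 box loss (the regression loss used in the original RetinaNet), and the binary target rule (AGC as glandular, all other classes as squamous) are assumptions for illustration. The function names are hypothetical.

```python
import math


def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 loss summed over box coordinates."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total


def bce(p, y):
    """Binary cross-entropy for the squamous/glandular subnetwork."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def is_glandular(cls_name):
    """Hypothetical binary target: AGC is glandular, every other class squamous."""
    return 1 if cls_name == "AGC" else 0


def total_loss(cls_loss, box_pred, box_target, p_glandular, cls_name,
               w_box=1.0, w_bin=1.0):
    """Classification + box regression + squamous/glandular loss for one positive anchor."""
    return (cls_loss
            + w_box * smooth_l1(box_pred, box_target)
            + w_bin * bce(p_glandular, is_glandular(cls_name)))
```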

The output of the detection model served two main purposes. (1) The top 20 detections of each class were displayed in the graphical user interface of the AICCS system, allowing cytopathologists to review them and select them as evidence when issuing a final report. (2) All detections were aggregated at the WSI level and used as input for the WSI-level classifier.
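
Selecting the 20 highest-scoring detections per class for display is a grouped top-k query. The sketch assumes each detection is a dict with `cls`, `score`, and `box` keys, which is a hypothetical format.

```python
import heapq
from collections import defaultdict


def top_k_per_class(detections, k=20):
    """Group detections by class and keep the k highest-scoring ones in each class.

    Each detection is assumed to be a dict with 'cls', 'score' and 'box' keys.
    Results within a class are ordered from highest to lowest score.
    """
    by_class = defaultdict(list)
    for det in detections:
        by_class[det["cls"]].append(det)
    return {cls: heapq.nlargest(k, dets, key=lambda d: d["score"])
            for cls, dets in by_class.items()}
```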

To build the WSI classification model, features were first generated from statistics of the WSI detection results. These statistics described the distribution of confidence scores for each detected class. They comprised the maximum, mean, and standard deviation of the confidence scores of the objects in each category, and the proportion of each class's detections falling into each confidence interval. The resulting features were used to train the WSI classification model with a random forest algorithm. The top 20 features selected by the random forest model are illustrated in Supplementary Fig. 9.
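
The feature construction can be sketched with NumPy. The confidence-interval bin edges are hypothetical. "Proportion" is interpreted as the fraction of a class's detections falling into each bin. Classes with no detections get zero features. Detections use the same hypothetical dict format with `cls` and `score` keys.

```python
import numpy as np

CLASSES = ["ASC-US", "LSIL", "ASC-H", "HSIL", "SCC", "AGC"]
BINS = [0.0, 0.3, 0.5, 0.7, 0.9, 1.0]  # hypothetical confidence-interval edges
N_PER_CLASS = 3 + len(BINS) - 1        # max, mean, std + one proportion per bin


def wsi_features(detections):
    """Fixed-length feature vector for one WSI from its cell detections."""
    feats = []
    for cls in CLASSES:
        scores = np.array([d["score"] for d in detections if d["cls"] == cls], dtype=float)
        if scores.size == 0:
            feats.extend([0.0] * N_PER_CLASS)  # no detections of this class
            continue
        hist, _ = np.histogram(scores, bins=BINS)
        feats.extend([scores.max(), scores.mean(), scores.std()])
        feats.extend(hist / scores.size)       # share of detections per confidence bin
    return np.array(feats)
```

Stacking one such vector per WSI gives a feature matrix that could be passed to a random forest, for example scikit-learn's `RandomForestClassifier`. That class's `feature_importances_` attribute offers one way to rank features.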

Statistical analysis

The performance of cytopathologists, the AICCS alone, and AICCS-assisted cytopathologists in identifying cervical cytology grades was evaluated by determining the sensitivity, specificity, accuracy, and area under the curve (AUC) for each group. Receiver operating characteristic (ROC) curves were then plotted to visually demonstrate the diagnostic ability of cytopathologists, the AICCS alone, and AICCS-assisted cytopathologists in classifying cervical cytology grades.
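
Take a binary split of the grades, for example abnormal versus NILM (the thresholds used for each comparison are not restated here). The metrics then reduce to simple counts. The stdlib sketch below computes the AUC through its rank interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half.

```python
def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity and accuracy from binary labels (1 = abnormal)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(pairs),
    }


def auc(y_true, scores):
    """ROC AUC via pairwise comparison of positive and negative scores (O(n^2))."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```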

To compare continuous variables, an independent t test was conducted, and a χ2 test was employed for two-group categorical variables. The P value and 95% confidence interval (CI) were utilized to compare the performance of cytopathologists, the AICCS alone, and AICCS-assisted cytopathologists in determining cervical cytology grades.
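
The section does not state which CI method was used. As an illustration only, this sketch computes Pearson's χ² test for a 2 × 2 table (without continuity correction) and a Wilson score 95% CI for a proportion such as sensitivity. For one degree of freedom, the P value equals erfc(√(χ²/2)).

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]]; returns (stat, p)."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(stat / 2))  # upper tail of chi-square with 1 degree of freedom
    return stat, p


def wilson_ci(k, n, z=1.959964):
    """Wilson score 95% CI for a proportion k/n."""
    p = k / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half
```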

Statistical significance was defined as a two-tailed P value below 0.05 for all statistical tests. Model training and validation were conducted using Python (version 3.6.8). Statistical analyses were performed using Python (version 3.6.8) and MedCalc (version 15).

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.