Artificial intelligence race: Is Israel in danger from China, Russia?

Israel will have its work cut out for it if, in the future, either Russia or China uses a synergy of artificial intelligence (AI) and cyber tools against it, said former IDF Unit 8200 Col. (res.) and Team8 Chief Ideation Officer Bobby Gilburd.

Speaking to the Magazine following Team8's recent report on AI and business issues, Gilburd said that Jerusalem has had a big advantage over its current, weaker cyber adversaries, such as Iran, Hezbollah, and Hamas, but that Russia and China are in a different league.

Gilburd, who retired from the IDF's Unit 8200 in mid-2022 after two decades of service, said Israel does benefit from observing the AI competition between Western countries and countries like China and Russia. He noted that the US now has AI regulations in areas where Israel has yet to implement proper rules, but that America faces much tougher adversaries, China and Russia, "with much vaster capabilities than our enemies."

"I don't know how we would do if they [China and Russia] invested" in using their AI capabilities to undermine Israel, he said. By contrast, Israel's main adversary, Iran, does not compare to Israel, China, or Russia as a tech power.

He said, “The US challenge is much harder and complex due to China and Russia, which has resulted in America developing more regulations for its national infrastructure. Israel can watch and learn” from both American regulation and strategic successes and failures in defending against foreign AI interference and hacking.

“We aren’t in the first wave of being attacked; America is more interesting to them than we are,” he stated.

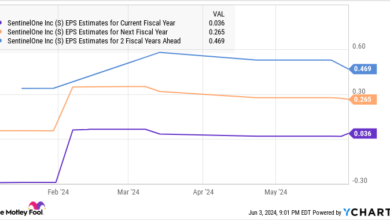

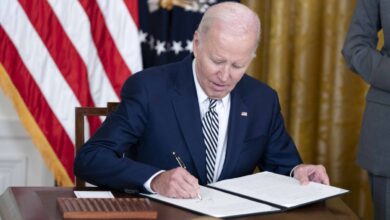

More specifically, Gilburd discussed the executive order on AI issued by US President Joe Biden in October 2023, along with follow-up measures in March, under which the National Institute of Standards and Technology (NIST) addressed the risks posed by adversaries using AI.

Gilburd said that the new policies “are incredible because they came out earlier than usual. With cloud technologies, it took years after the technology had already existed to speak about addressing attacks. Now the US government is leading initiatives to defend digital infrastructure. They understand that this is a weapon.”

But he warned that social networks are only one aspect of the threat, since AI can also fake audio and video.

He is concerned that deepfake capabilities could feed into a mix of zero-day attacks (which exploit previously unknown vulnerabilities) and attacks on known vulnerabilities that civilians and the private sector have not yet patched as recommended to protect themselves from outside tampering.

Gilburd did express some concern that China and Russia may turn more of their attention toward Israel as pre-war geopolitical alliances shift, leaving less middle ground for Israel to maintain positive relations with both of those countries and with the West.

On April 29, the Biden administration issued further updates about its strategy to rein in AI threats.

A NIST report on "Reducing Risks Posed by Synthetic Content" provided an overview of technical approaches to "digital content transparency," meaning methods to document and access information about the origin and history of a given piece of digital content.

NIST's four-pronged approach includes formally recording that a particular system produced a piece of content; establishing ownership of content; providing tools to label and identify AI-generated content; and mitigating the production and distribution of AI-generated child sexual abuse material and non-consensual intimate imagery of real individuals.

Content authentication and provenance tracking, digital watermarking and metadata recording, and technical mitigation measures for synthetic child abuse and intimate imagery materials – such as training data filtering and cryptographic hashing – are some of the standards, tools, methods, and practices NIST studied.

Then, on May 1, leaders of the US House Energy and Commerce Committee hauled in the chief executive of UnitedHealth Group to demand answers about the massive cyberattack on the health giant's payment-processing subsidiary in February.

On May 2, the US Department of State, the Federal Bureau of Investigation (FBI), and the National Security Agency released a cybersecurity advisory on a new tactic the North Korean cyber group known as Kimsuky is deploying to enhance its social engineering and hacking efforts targeting think tanks, academic institutions, nonprofit organizations, and members of the media.

Another major change in this arena came in February, when US Gen. Timothy Haugh replaced Gen. Paul Nakasone, who had led US Cyber Command and the NSA for six years.

Haugh is expected to use new budgetary powers that Nakasone obtained at the end of his term, and some will push him to significantly expand Cyber Command's staff to keep up with ballooning digital threats worldwide.

The battle in this area continues daily.

AI spray attacks

Gilburd's next concern was that AI can carry out "spraying" attacks, going after 5,000 to 10,000 organizations and "achieving a successful hit on any one of them, even if they did perform proper patching of hacking holes. All they need is to hit around 10 of the organizations which they spray attack, and they make a major profit and can do serious damage."

He noted that "attackers always adapt before defenders" and cautioned that whereas "it used to take one month from the time that a cyber hole and patch were identified to when hackers utilized the vulnerability for widespread attacks, now hackers can exploit such holes within days or even hours" of their being identified.

He also said that ChatGPT can be hacked and then abused, for example to make the program write out detailed instructions for making C4 explosives, even though the standard (unhacked) ChatGPT refuses to respond to such queries.

Moreover, Gilburd warned that ChatGPT could help a hacker find quicker ways to break into specific computers.

In that vein, defenders are working on deploying security mechanisms tailored to protect against known AI hacking programs and to defend specific industries, such as the water industry.

"In cybersecurity, we are so good because we started early," Gilburd said, adding that Israel is also strong in AI. But, he said, the US is by far the leader in producing GPT AI models, with the EU much weaker.

Pressed on the fact that many national threat reports warn of China overtaking the US in various technological arenas, he responded, "I don't know about there being a strong model in China [regarding GPT AI]. But China is great at stealing technology."

“GPT will be weaponized. Whoever gets their hand on this first, it’s a weapon for all purposes. I use GPT more than Google. On the way to work, I wanted to work on a PowerPoint presentation. I did it using voiceover GPT as I drove the car,” he recounted excitedly.

In addition, he warned that Russia's interference in the 2016 US presidential election was run mostly by human operatives, but that was before GPT.

“So take what they did in 2016, and imagine instead of 100 individuals, 100 GPT bots working for Russian hackers. This is a major event.”

He also noted that Elon Musk himself had claimed that 20-25% of Twitter's accounts were fake identities before he bought the company, while Gilburd said the figure could be as high as 50%. Moreover, he said, Musk has figured out that fake "likes" still make him money.

He said the Jewish state's adversaries have used AI and social media to try to "split Israel in two. Social media was at the core of this process. The whole purpose is to divide people into warring factions. This creates more 'interactions,' which makes money. Increasing tensions is in the interest of these platforms, and AI is great for this."

“I am worried about Israel. There is not a lot that can be done. The only people who can fix this are the platforms, and they have no interest in doing so,” he said.

Israel could regulate the issue, Gilburd said, but the social media giants are powerful.

From a technological perspective, AI can also make it easier to "capture" fake accounts. But he also predicted a new "arms race," with both sides using AI: hackers to create more deepfake identities and hack real ones, and defenders to make identities harder to fake.

As AI improves in faking identities, “We will move toward biometric identification using people’s faces, but eventually AI will get there also. Identity issues are very concerning. How can you make an identity card that cannot be forged? Now we also have digital passports.”

Hi-tech coping with the war

Asked about the economic impact of the current war, Gilburd responded, “The hi-tech sector is usually good at this, but it is hard to say because elsewhere things are not good. We in Team8 are in a climax. We had two big exits in six months, and we just successfully raised new investor capital. Outside and around us there is a war, but business is good.”

Combined, the two exits add up to around $1 billion.

For one thing, he said, "the cyber sector always expands when there is increased fear" of being hacked, and the war has heightened that fear.

Generally, he said, there has been no anti-Israel backlash from clients, because most remain supportive of Israel, either because they know the region's complex history or because of their business contacts with Israelis.

He noted only two individuals with whom there had been issues, calling them "drops of water in a larger sea."

Rather, he said, the hardest business issue of the war has been having so many employees away part of the time on reserve duty. He said Team8 had to show more flexibility regarding working hours and location to help cope with this.

Looking ahead in the age of GPT, he said, "Execution is a commodity. Whoever is not innovative with ideas or capabilities will fail."