As Massachusetts leans in on artificial intelligence, AG waves a yellow flag • Rhode Island Current

BOSTON — While the executive branch of state government touts the competitive advantage of investing energy and money in artificial intelligence across Massachusetts’ tech, government, health, and educational sectors, the state’s top prosecutor is sounding warnings about its risks.

Attorney General Andrea Campbell issued an advisory to AI developers, suppliers, and users on Tuesday, reminding them of their obligations under the state’s consumer protection laws.

“AI has tremendous potential benefits to society,” Campbell’s advisory said. “It presents exciting opportunities to boost efficiencies and cost-savings in the marketplace, foster innovation and imagination, and spur economic growth.”

However, she cautioned, “AI systems have already been shown to pose serious risks to consumers, including bias, lack of transparency or explainability, implications for data privacy, and more. Despite these risks, businesses and consumers are rapidly adopting and using AI systems which now impact virtually all aspects of life.”

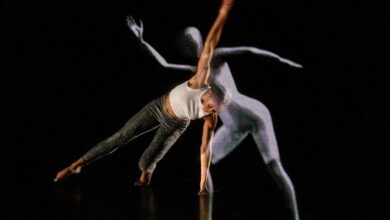

Developers promise that their complex and opaque systems are accurate, fair, effective, and appropriate for certain uses, but Campbell notes that the systems are being deployed “in ways that can deceive consumers and the public,” citing chatbots used to perpetrate scams and false computer-generated images and videos, called “deepfakes,” that mislead consumers and viewers about a participant’s identity. Misleading and potentially discriminatory results from these systems can run afoul of consumer protection laws, according to the advisory.

The advisory echoes a dynamic seen in the state’s enthusiastic embrace of gambling at the executive level, with Campbell cautioning against potentially harmful impacts while stopping short of a full-throated objection to expansions like an online Lottery.

Gov. Maura Healey has touted applied artificial intelligence as a potential boon for the state, creating an artificial intelligence strategic task force through executive order in February. Healey is also seeking $100 million in her economic development bond bill – the “Mass Leads Act” – to create an Applied AI Hub in Massachusetts.

“Massachusetts has the opportunity to be a global leader in Applied AI – but it’s going to take us bringing together the brightest minds in tech, business, education, health care, and government. That’s exactly what this task force will do,” Healey said in a statement accompanying the task force announcement. “Members of the task force will collaborate on strategies that keep us ahead of the curve by leveraging AI and GenAI technology, which will bring significant benefit to our economy and communities across the state.”

The executive order itself makes only glancing references to risks associated with AI, focusing mostly on the task force’s role in identifying strategies for collaboration around AI and its adoption across life sciences, finance, and higher education. The task force members will recommend strategies to facilitate public investment in AI and promote AI-related job creation across the state, as well as structures to promote “responsible AI development and use for the state.”

In conversation with Healey last month, tech journalist Kara Swisher offered a sharp critique of the enthusiastic embrace of AI hype, describing it as just “marketing right now” and comparing it to the crypto bubble – and signs of a similar AI bubble are troubling other tech reporters. Tech companies are seeing the value in “pushing whatever we’re pushing at the moment, and it’s exhausting, actually,” Swisher said, adding that certain types of tasked algorithms like search tools are already commonplace, but the trend now is “slapping an AI onto it and saying it’s AI. It’s not.”

Eventually, Swisher acknowledged, tech becomes cheaper and more capable at certain types of labor than people – as in the case of mechanized farming – and it’s up to officials like Healey to figure out how to balance new technology while protecting the people it impacts.

Mohamad Ali, chief operating officer of IBM Consulting, opined in CommonWealth Beacon that there need to be significant investments in an AI-capable workforce that prioritizes trust and transparency.

Artificial intelligence policy in Massachusetts, as in many states, is a hodgepodge crossing all branches of government. The executive branch is betting big that the technology can boost the state’s innovation economy, while the Legislature is weighing the risks of deepfakes in nonconsensual pornography and election communications.

Reliance on large language model styles of artificial intelligence – melding the feel of a search algorithm with the promise of a competent researcher and writer – has caused headaches for courts. Because several widely used AI tools rely on predictive text algorithms that are trained on existing work but do not always limit themselves to it, large language model AI can “hallucinate,” fabricating facts and citations that don’t exist.

In a February order in the troubling wrongful death and sexual abuse case filed against the Stoughton Police Department, Associate Justice Brian Davis sanctioned attorneys for relying on AI systems to prepare legal research and for blindly filing inaccurate information generated by those systems with the court. The AI hallucinations and the unchecked use of AI in legal filings are “disturbing developments that are adversely affecting the practice of law in the Commonwealth and beyond,” Davis wrote.

This article first appeared on CommonWealth Beacon and is republished here under a Creative Commons license.
