Auditing generative AI workloads with AWS CloudTrail

As generative AI becomes part of more of the technology we use every day, customers frequently ask how to properly audit generative AI services on AWS, such as Amazon Bedrock, Amazon SageMaker, Amazon Q Developer, and Amazon Q Business.

In this post, we walk through common scenarios that combine AWS CloudTrail data events with CloudTrail Lake analysis. This approach helps you identify specific events and audit your generative AI workloads by investigating the API actions that you or your applications perform in your AWS environment.

AWS CloudTrail records user and API activity across your AWS environment for governance and auditing, and lets you centralize that record. Because CloudTrail captures the API calls made to AWS services, it also captures the API activity of your generative AI workloads. AWS CloudTrail Lake is a managed data lake that lets you store CloudTrail events and query them with SQL. You can ingest CloudTrail events into CloudTrail Lake to analyze API activity.

Setting up CloudTrail Lake

For all the scenarios in this post, we recommend using CloudTrail Lake to run queries and audit events. We assume that a CloudTrail Lake event data store already exists. To create one, follow the steps in the AWS CloudTrail User Guide. If you are not using CloudTrail Lake, you can achieve the same result by enabling data events on an existing CloudTrail trail and analyzing the trail's log files with Amazon Athena.
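If you take the trail route, or just want to spot-check a few events locally, a short script can read the log files that a trail delivers to Amazon S3. The following is a minimal sketch. It assumes you have already copied those files to a local directory (for example, with the AWS CLI) and that they use CloudTrail's standard layout: gzip-compressed JSON files ending in `.json.gz`, each with a top-level `Records` array. The function name `iter_cloudtrail_records` is hypothetical.

```python
import gzip
import json
from pathlib import Path
from typing import Iterator


def iter_cloudtrail_records(log_dir: str) -> Iterator[dict]:
    """Yield every event record from the CloudTrail log files under log_dir.

    Assumes the standard delivery format: gzip-compressed JSON files whose
    names end in .json.gz, each holding a top-level "Records" array.
    """
    for path in sorted(Path(log_dir).rglob("*.json.gz")):
        with gzip.open(path, "rt", encoding="utf-8") as handle:
            document = json.load(handle)
        # One delivered file batches many events under "Records". Digest files
        # (written when log file validation is on) have no "Records" key.
        yield from document.get("Records", [])
```

Each yielded record is a plain dictionary with the same top-level fields (`eventName`, `eventTime`, `userIdentity`, `requestParameters`, and so on) that the CloudTrail Lake queries in this post filter on.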

Using CloudTrail Data Events for Amazon Q Business

In our sample scenario, you have an Amazon Q Business application with an Amazon S3 data source. A group of users who could previously get information by conversing with Amazon Q Business can no longer retrieve that same information. To investigate the underlying issue, we use the CloudTrail data events for Amazon Q Business.

- For this scenario, we configure data events for two resource types. AWS::QBusiness::Application logs data plane activity for our Amazon Q Business application, and AWS::S3::Object records data events for our source Amazon S3 bucket. For details on each resource type, see the data events section of the AWS CloudTrail User Guide.

- Open the CloudTrail console. In the left-hand panel, choose ‘Lake’, then ‘Event data stores’, and select your event data store. Navigate to ‘Data events’, choose ‘Edit’, and then choose ‘Add data event type’.

- Under ‘Data event’, for ‘Data event type’, select ‘Amazon Q Business application’. For ‘Log selector template’, choose ‘Custom’. In the ‘Advanced event selectors’ section, choose ‘resources.ARN’ for ‘Field’ and ‘starts with’ for ‘Operator’. In the ‘Value’ field, enter the ARN of your Amazon Q Business application in the form arn:aws:qbusiness:<region>:<account_id>:application/<application_id>.

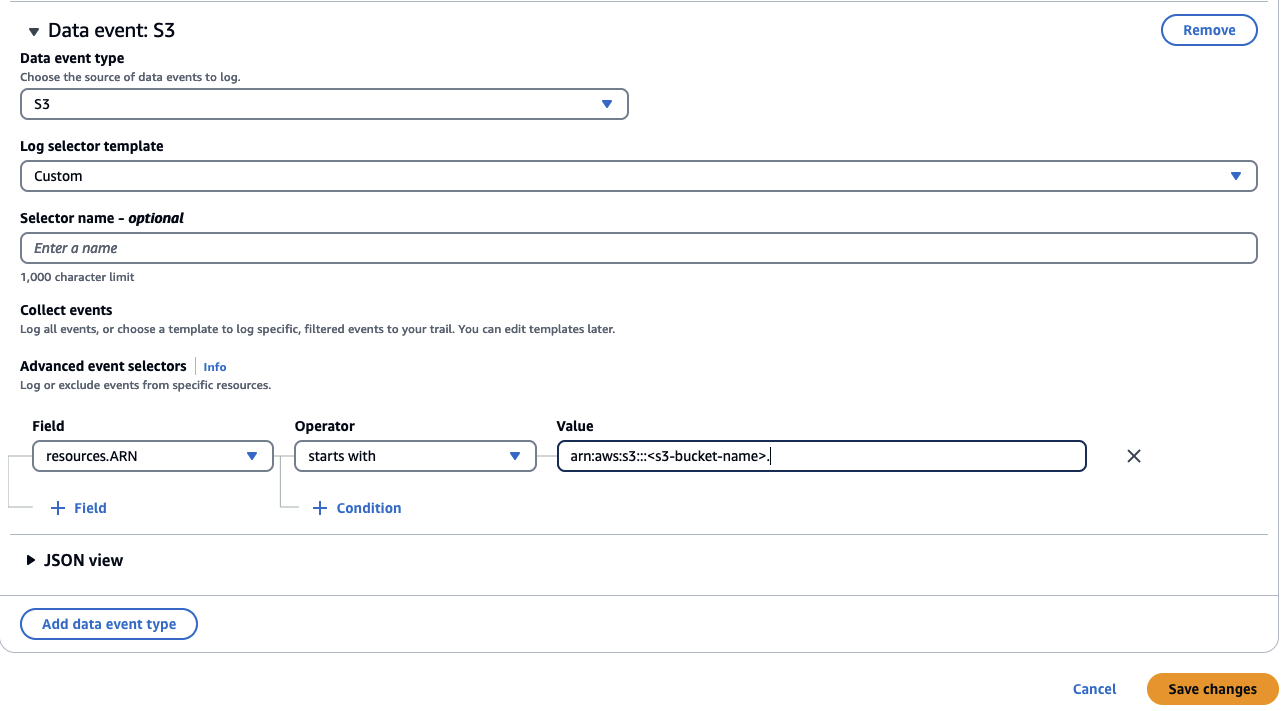

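- Choose ‘Add data event type’ again. Under ‘Data event’, for ‘Data event type’, select ‘S3’. For ‘Log selector template’, choose ‘Custom’. In the ‘Advanced event selectors’ section, choose ‘resources.ARN’ for ‘Field’ and ‘starts with’ for ‘Operator’. In the ‘Value’ field, enter the ARN of your S3 bucket in the form arn:aws:s3:::<s3-bucket-name>/. The trailing slash prevents the selector from also matching other buckets whose names begin with the same characters. Save your changes.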
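The console steps above correspond to CloudTrail advanced event selectors. As a sketch, the following Python builds the equivalent selectors as plain dictionaries in the shape the CloudTrail API accepts: a `Name` plus `FieldSelectors` with `Equals` or `StartsWith` conditions. Data event selectors also need `eventCategory` set to `Data`. The ARNs are placeholders, and `data_event_selector` is a hypothetical helper of our own.

```python
def data_event_selector(name: str, resource_type: str, arn_prefix: str) -> dict:
    """Build one advanced event selector that logs data events for a single
    resource type, limited to resources whose ARN starts with arn_prefix."""
    return {
        "Name": name,
        "FieldSelectors": [
            {"Field": "eventCategory", "Equals": ["Data"]},
            # Corresponds to the console's 'Data event type' choice.
            {"Field": "resources.type", "Equals": [resource_type]},
            # Corresponds to the console's resources.ARN / 'starts with' condition.
            {"Field": "resources.ARN", "StartsWith": [arn_prefix]},
        ],
    }


# Placeholder values: hypothetical Region, account, application ID, and bucket.
Q_APP_ARN = "arn:aws:qbusiness:us-east-1:111122223333:application/example-application-id"
# Trailing slash, because object ARNs look like arn:aws:s3:::bucket/key.
BUCKET_ARN_PREFIX = "arn:aws:s3:::example-qbusiness-source-bucket/"

selectors = [
    data_event_selector("QBusinessApplication", "AWS::QBusiness::Application", Q_APP_ARN),
    data_event_selector("QBusinessSourceBucket", "AWS::S3::Object", BUCKET_ARN_PREFIX),
]
```

To apply the selectors programmatically, you could pass them to boto3's `update_event_data_store(EventDataStore=..., AdvancedEventSelectors=selectors)`. That call replaces the event data store's whole selector list, so include any existing selectors, such as your management event selector, that you want to keep.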

Once the data events are configured for the event data store and events start to be generated for Amazon Q Business and Amazon S3, we will be able to query them. It may take a short while for an event to appear in a CloudTrail Lake query after it has occurred.

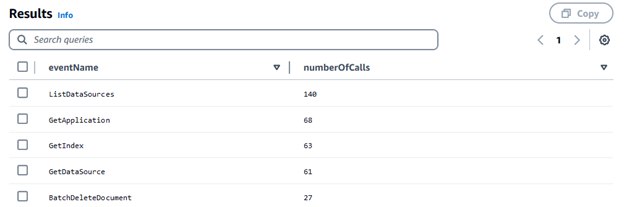

- Using CloudTrail Lake, we query data events for the Amazon Q Business application within a specific time period (in this case, the last day):

```sql
SELECT eventName, COUNT(*) AS numberOfCalls
FROM <event-data-store-ID>
WHERE eventSource = 'qbusiness.amazonaws.com'
  AND eventTime > date_add('day', -1, now())
GROUP BY eventName
ORDER BY COUNT(*) DESC
```

The results show calls to the BatchDeleteDocument API, indicating that one or more documents used by our Amazon Q Business application were deleted.

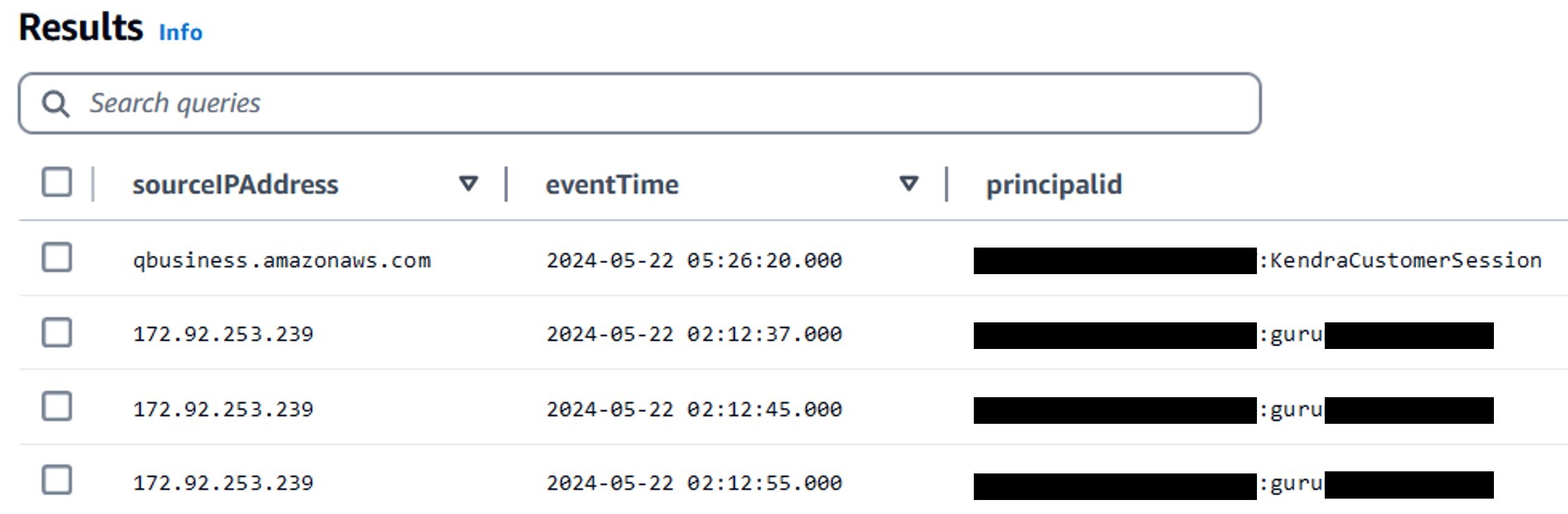
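If you prefer to run these queries from a script instead of the console, here is a minimal sketch built on the CloudTrail Lake StartQuery, DescribeQuery, and GetQueryResults operations as exposed by a boto3 CloudTrail client. The helper name `run_lake_query` and its polling interval are our own choices, not part of any AWS SDK. The client is passed in, so you can supply `boto3.client("cloudtrail")` or a stand-in for testing.

```python
import time
from typing import Any, Dict, List

FAILED_STATES = {"FAILED", "CANCELLED", "TIMED_OUT"}


def run_lake_query(client: Any, sql: str, poll_seconds: float = 2.0) -> List[Dict[str, str]]:
    """Start a CloudTrail Lake query, wait for it to finish, and return all rows.

    `client` is expected to be boto3.client("cloudtrail"). Any object exposing
    start_query, describe_query, and get_query_results with the same keyword
    arguments works.
    """
    query_id = client.start_query(QueryStatement=sql)["QueryId"]

    # Poll until the query leaves the QUEUED/RUNNING states.
    while True:
        status = client.describe_query(QueryId=query_id)["QueryStatus"]
        if status == "FINISHED":
            break
        if status in FAILED_STATES:
            raise RuntimeError(f"CloudTrail Lake query {query_id} ended as {status}")
        time.sleep(poll_seconds)

    # Page through results. Each row is a list of one-key {column: value} dicts.
    rows: List[Dict[str, str]] = []
    request: Dict[str, str] = {"QueryId": query_id}
    while True:
        page = client.get_query_results(**request)
        for row in page.get("QueryResultRows", []):
            merged: Dict[str, str] = {}
            for cell in row:
                merged.update(cell)
            rows.append(merged)
        token = page.get("NextToken")
        if not token:
            return rows
        request["NextToken"] = token
```

For example, call `run_lake_query(boto3.client("cloudtrail"), sql)` with `sql` holding the query text and your event data store ID in place of `<event-data-store-ID>`. A production script would also cap the total wait time.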

- To find the IAM identity associated with the BatchDeleteDocument API call, run the following query:

SELECT

sourceIPAddress, eventTime, userIdentity.principalid

FROM

<event-data-store-ID>

WHERE

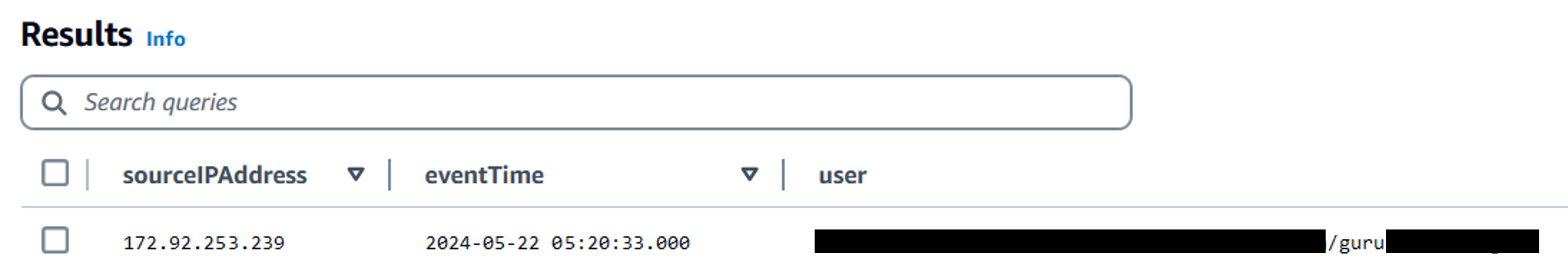

eventName="BatchDeleteDocument" AND eventTime > date_add('day', -1, now())The logs show that our admin “guruXXXXXXX” deleted documents attached to the Amazon Q Index, but a few deletions were attributed to the role “KendraCustomerSession.”. A sync job appears to have caused the deletions.
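The query above returns raw principal IDs. For role sessions, CloudTrail writes `principalId` as `<role-id>:<session-name>` and puts the role name under `userIdentity.sessionContext.sessionIssuer.userName`. The following sketch turns those fields into readable labels and counts BatchDeleteDocument calls per caller. It assumes you already have the events as CloudTrail JSON records (Python dictionaries). The function names are hypothetical.

```python
from collections import Counter
from typing import Iterable


def caller_label(user_identity: dict) -> str:
    """Turn a CloudTrail userIdentity block into a readable caller name."""
    if user_identity.get("type") == "AssumedRole":
        # For role sessions, the role name is under sessionContext.sessionIssuer...
        issuer = user_identity.get("sessionContext", {}).get("sessionIssuer", {})
        role_name = issuer.get("userName", "unknown-role")
        # ...and principalId has the form "<role-id>:<session-name>".
        session_name = user_identity.get("principalId", "").partition(":")[2] or "unknown-session"
        return f"{role_name}/{session_name}"
    return user_identity.get("userName") or user_identity.get("arn", "unknown")


def deletions_by_caller(records: Iterable[dict]) -> Counter:
    """Count BatchDeleteDocument events per caller label."""
    return Counter(
        caller_label(record.get("userIdentity", {}))
        for record in records
        if record.get("eventName") == "BatchDeleteDocument"
    )
```

Separating human users from service-driven role sessions makes it easier to distinguish deliberate deletions by an administrator from deletions triggered by automation, such as a data source sync.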

- To confirm, let’s check whether a job synchronized the S3 data source with the Amazon Q Business application by looking for the StartDataSourceSyncJob API call:

```sql
SELECT sourceIPAddress, eventTime, userIdentity.arn AS user
FROM <event-data-store-ID>
WHERE eventName = 'StartDataSourceSyncJob'
  AND eventTime > date_add('day', -1, now())
```

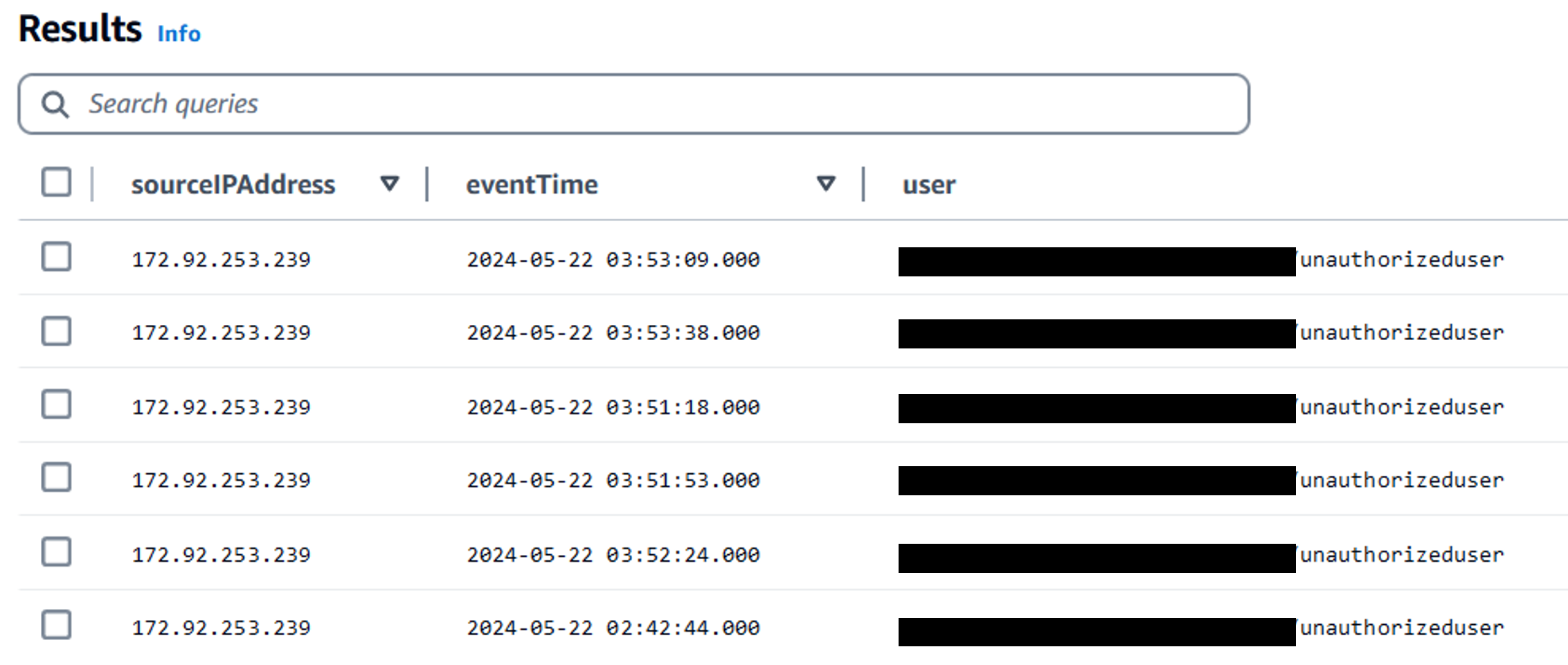

Our investigation shows that a sync job was triggered and was followed by the BatchDeleteDocument API calls. Compare the event times in the two result sets to confirm the order.
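To make this ordering check repeatable, you can pair the events programmatically. The following sketch works on CloudTrail JSON records (dictionaries with `eventName` and `eventTime`). For each BatchDeleteDocument event, it finds the most recent StartDataSourceSyncJob that started within a time window before it. The one-hour default window and the function name are arbitrary, hypothetical choices. The sketch does not filter by application or data source ID, so filter the records first if you run several Amazon Q Business applications.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # CloudTrail eventTime, e.g. 2024-05-09T10:05:00Z


def link_deletes_to_syncs(
    records: List[dict], window: timedelta = timedelta(hours=1)
) -> List[Dict[str, Optional[str]]]:
    """Pair each BatchDeleteDocument event with the latest StartDataSourceSyncJob
    that started at most `window` before it (None if there is no such sync)."""
    syncs = sorted(
        datetime.strptime(r["eventTime"], TIME_FORMAT)
        for r in records
        if r.get("eventName") == "StartDataSourceSyncJob"
    )
    links: List[Dict[str, Optional[str]]] = []
    for r in records:
        if r.get("eventName") != "BatchDeleteDocument":
            continue
        deleted_at = datetime.strptime(r["eventTime"], TIME_FORMAT)
        # Syncs are sorted, so the last match is the closest preceding one.
        earlier = [s for s in syncs if deleted_at - window <= s <= deleted_at]
        links.append({
            "deleteTime": r["eventTime"],
            "precedingSync": earlier[-1].strftime(TIME_FORMAT) if earlier else None,
        })
    return links
```

A delete with no preceding sync in the window (`precedingSync` is `None`) is a good candidate for a manual or API-driven deletion rather than a sync side effect.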

- Finally, let’s check whether any objects were deleted from the S3 bucket connected as a data source to our Amazon Q Business application by looking for DeleteObject events:

```sql
SELECT sourceIPAddress, eventTime, userIdentity.arn AS user
FROM <event-data-store-ID>
WHERE userIdentity.arn IS NOT NULL
  AND eventName = 'DeleteObject'
  AND element_at(requestParameters, 'bucketName') LIKE '<enter-S3-bucket-name>'
  AND eventTime > '2024-05-09 00:00:00'
```

Here, we see that a user deleted a file from our S3 bucket, which led to the removal of data from Amazon Q Business. The S3 bucket had excessive permissions that allowed the user to delete objects, and the subsequent sync removed the corresponding indexed documents from Amazon Q Business. As a result, users could no longer retrieve that information by conversing with the Amazon Q Business application.
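When you review these events outside CloudTrail Lake, it helps to see exactly which objects each caller removed. The following sketch applies the same filter as the query above to CloudTrail JSON records. It keeps S3 DeleteObject data events for one bucket and groups the deleted object keys by caller ARN, using the `bucketName` and `key` entries that S3 data events carry in `requestParameters`. It handles only DeleteObject, as the query does. The function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def s3_deletions(records: Iterable[dict], bucket: str) -> Dict[str, List[str]]:
    """Map each caller ARN to the object keys it deleted from `bucket`."""
    deleted: Dict[str, List[str]] = defaultdict(list)
    for record in records:
        if record.get("eventSource") != "s3.amazonaws.com" or record.get("eventName") != "DeleteObject":
            continue
        params = record.get("requestParameters") or {}
        if params.get("bucketName") != bucket:
            continue
        caller = record.get("userIdentity", {}).get("arn", "unknown")
        deleted[caller].append(params.get("key", ""))
    return dict(deleted)
```

The resulting key list can be checked against the documents that disappeared from the Amazon Q Business index.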

Using CloudTrail Data Events for Amazon Q Developer

For this exercise, we assume that you use AWS IAM Identity Center to administer users and Amazon Q Developer (formerly known as CodeWhisperer) in your organization. Your goal is to identify which users use Amazon Q Developer to analyze code within your organization and where their requests come from.

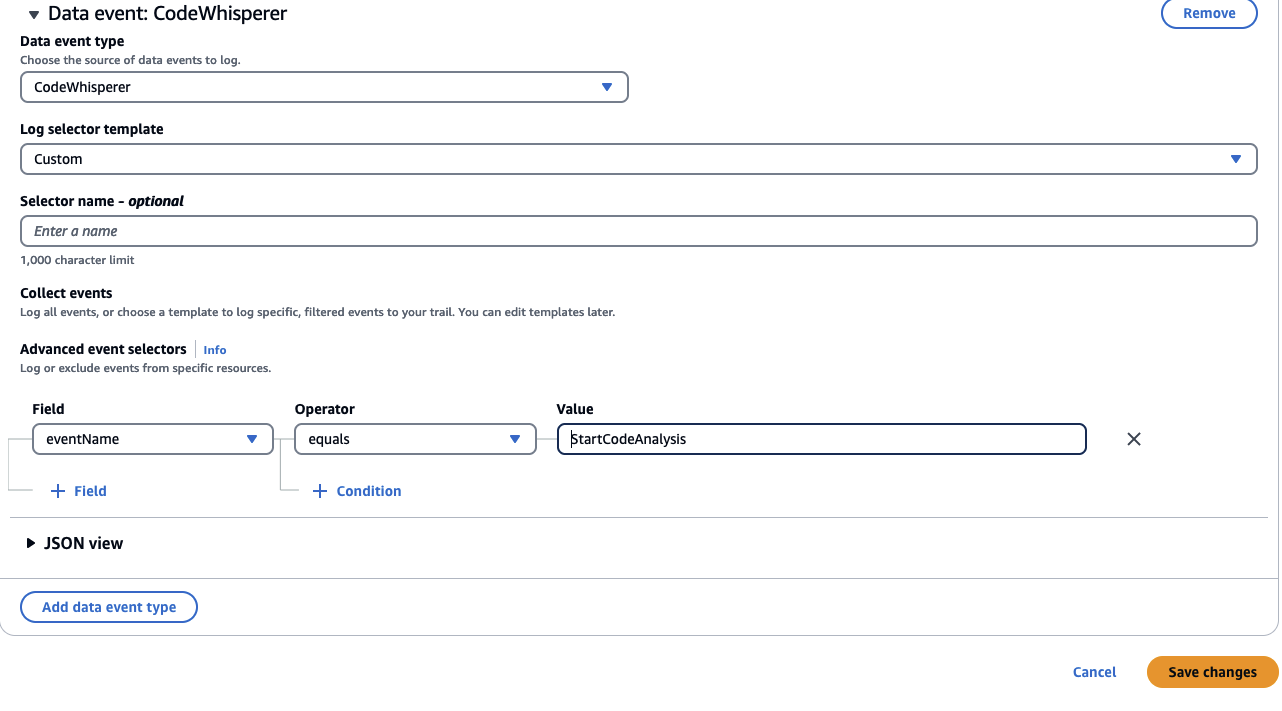

- In this scenario, we use advanced event selectors to log the ‘StartCodeAnalysis’ data event, which tracks security scans performed by Amazon Q Developer in the VS Code and JetBrains IDEs. The steps are as follows:

- Open the CloudTrail console. In the left-hand panel, choose ‘Lake’, then ‘Event data stores’, and select your event data store. Navigate to ‘Data events’, choose ‘Edit’, and then choose ‘Add data event type’.

- Under ‘Data event’, for ‘Data event type’, select ‘Codewhisperer’. Under ‘Log selector template’, choose ‘Custom’. In the ‘Advanced event selectors’ section, for the ‘Field’ option, select ‘eventName’, for ‘Operator’, choose ‘equals’, and in the ‘Value’ field, input ‘StartCodeAnalysis’.

Once the data events are configured for the event data store and events start to be generated for Amazon Q Developer, we will be able to query them. It may take a short while for an event to appear in a CloudTrail Lake query after it has occurred.

- Next, utilizing the CloudTrail Lake service, we will execute the following query to retrieve a list of all users who initiated a security scan:

SELECT

userIdentity.onbehalfof.userid, eventTime, SourceIPAddress

FROM

<event-data-store-ID>

WHERE

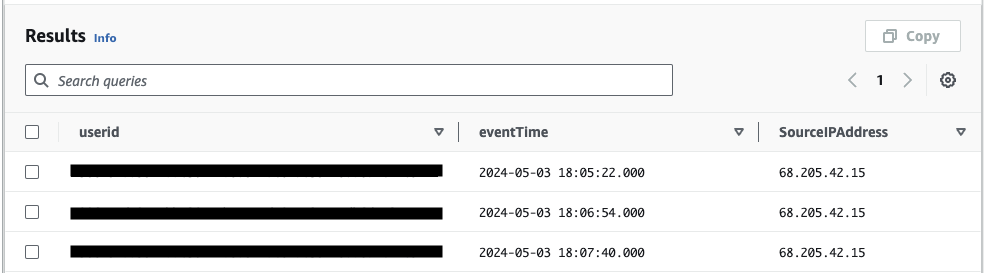

eventName="StartCodeAnalysis"The execution of the query should yield the following output:

The ‘userId’ values in the output should correspond to the users listed in IAM Identity Center.

Now that we have a list of all userIds running code analyses with Amazon Q Developer, we can identify who ran each analysis, as well as when it ran and which source IP address it came from.
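To turn those user IDs into names and see where each person's scans originate, you can join the events with your own user list. The following sketch counts StartCodeAnalysis events per (user, source IP address) from CloudTrail JSON records, reading `userIdentity.onBehalfOf.userId`. The `directory` mapping from Identity Center user IDs to display names is an assumption. You would build it yourself, for example from the IAM Identity Center console or the Identity Store ListUsers API. The function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def code_scans_by_user(records: Iterable[dict], directory: Dict[str, str]) -> Counter:
    """Count StartCodeAnalysis events per (user display name, source IP address).

    `directory` maps IAM Identity Center user IDs to display names. Unmapped
    IDs are reported as "unknown:<userId>".
    """
    counts: Counter = Counter()
    for record in records:
        if record.get("eventName") != "StartCodeAnalysis":
            continue
        user_id = record.get("userIdentity", {}).get("onBehalfOf", {}).get("userId")
        if user_id is None:
            continue  # Not made on behalf of an Identity Center user.
        name = directory.get(user_id, f"unknown:{user_id}")
        counts[(name, record.get("sourceIPAddress", "unknown"))] += 1
    return counts
```

An ID that appears as `unknown:...` is worth a second look, because it means a scan came from a user who is not in the list you built.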

Using CloudTrail Data Events for Amazon Bedrock

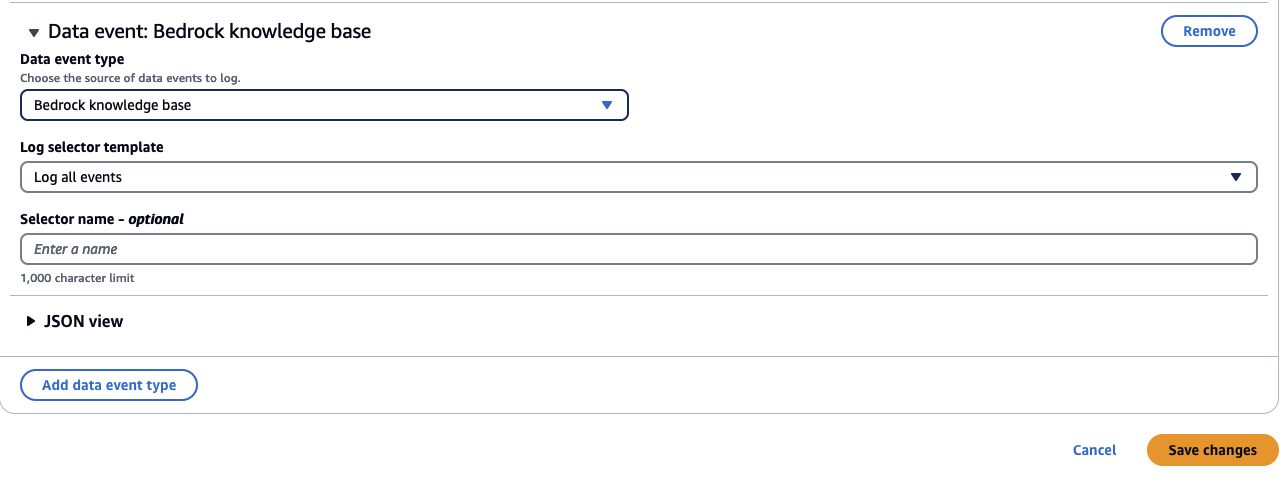

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI. Because Amazon Bedrock does not store user data by design, organizations that need to audit and analyze user activity on Amazon Bedrock must enable CloudTrail data events. CloudTrail data events for Amazon Bedrock track API calls to Agents for Amazon Bedrock and Amazon Bedrock knowledge bases through the ‘AWS::Bedrock::AgentAlias’ and ‘AWS::Bedrock::KnowledgeBase’ resource types. To enable these data events:

- Open the CloudTrail console. In the left-hand panel, choose ‘Lake’, then ‘Event data stores’, and select your event data store. Navigate to ‘Data events’, choose ‘Edit’, and then choose ‘Add data event type’.

- Under ‘Data event’, for ‘Data event type’, select ‘Bedrock agent alias’. Under ‘Log selector template’, choose ‘Log all events’. Click ‘Add data event type’.

- Under ‘Data event’, for ‘Data event type’, select ‘Bedrock knowledge base’. Under ‘Log selector template’, choose ‘Log all events’.
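As with the other console steps, the ‘Log all events’ template corresponds to an advanced event selector that filters only on the event category and resource type. The following sketch builds those two selectors as plain dictionaries in the shape the CloudTrail API accepts, using the two resource types named above. The helper name and selector names are hypothetical.

```python
BEDROCK_RESOURCE_TYPES = ["AWS::Bedrock::AgentAlias", "AWS::Bedrock::KnowledgeBase"]


def log_all_data_events(resource_type: str) -> dict:
    """Selector equivalent to the console's 'Log all events' template for one type."""
    return {
        "Name": f"All {resource_type} data events",
        "FieldSelectors": [
            {"Field": "eventCategory", "Equals": ["Data"]},
            # No resources.ARN condition, so every resource of this type is logged.
            {"Field": "resources.type", "Equals": [resource_type]},
        ],
    }


bedrock_selectors = [log_all_data_events(t) for t in BEDROCK_RESOURCE_TYPES]
```

Adding a `resources.ARN` condition to either selector would narrow logging to specific agent aliases or knowledge bases, which can reduce data event charges.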

Once the data events are configured for the event data store and events start to be generated for Amazon Bedrock agents and knowledge bases, we will be able to query them. It may take a short while for an event to appear in a CloudTrail Lake query after it has occurred.

In our sample use case, we have Agents for Amazon Bedrock and knowledge bases configured, with one of the foundation models enabled. We also have a custom chat application powered by a foundation model in Amazon Bedrock. This application lets users query Amazon EC2 instance information along with pricing details. The solution must follow regulatory and compliance requirements and maintain a complete audit trail of all user interactions with the application. To support these requirements, we use CloudTrail data events together with CloudTrail Lake.

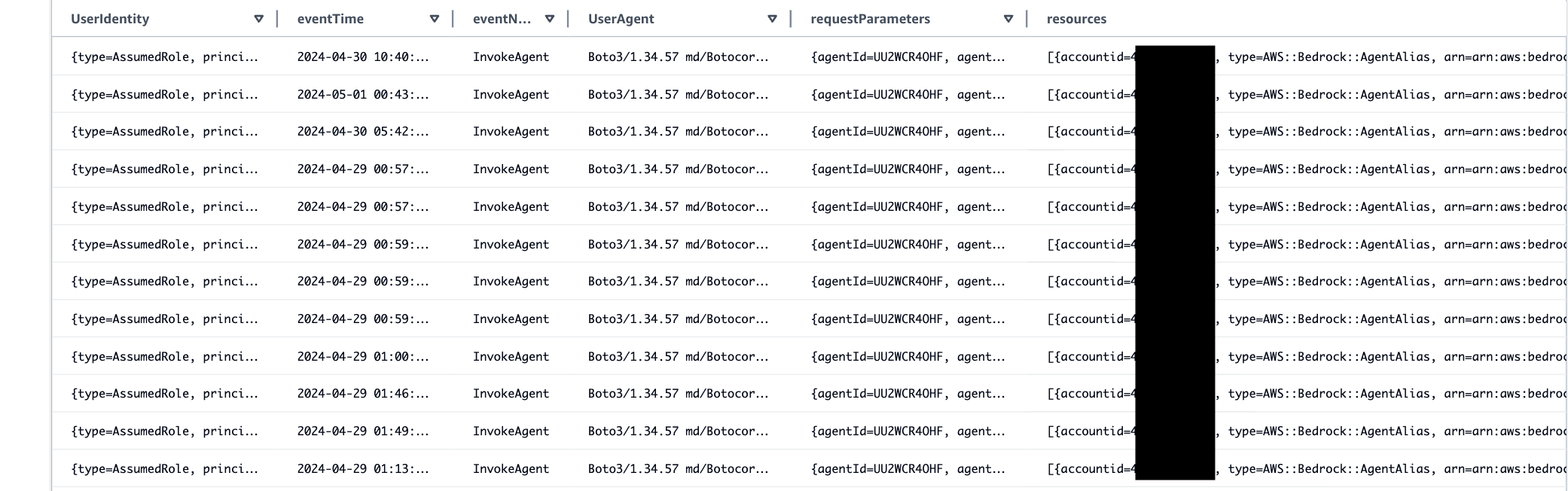

If the administrator of the chat application wants to audit invocations of Bedrock agents, they can use the following query. The results show, for each call, the caller, the user agent, the request and response parameters, and the invoked agent alias.

```sql
SELECT userIdentity, eventTime, eventName, userAgent, requestParameters, responseElements, resources
FROM <event-data-store-ID>
WHERE eventName = 'InvokeAgent'
```

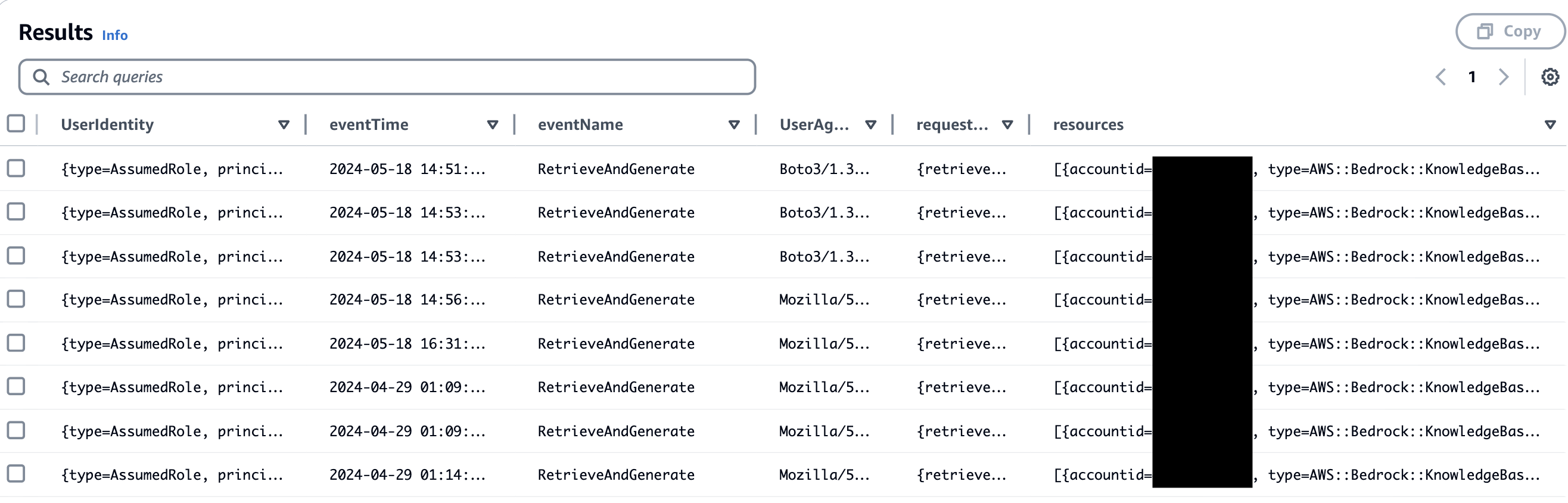

The following query returns details on the invoked knowledge base, along with the request and response parameters:

```sql
SELECT userIdentity, eventTime, eventName, userAgent, requestParameters, responseElements, resources
FROM <event-data-store-ID>
WHERE eventName = 'RetrieveAndGenerate'
```
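For a compliance report, a per-caller summary of agent and knowledge base activity is often more useful than raw rows. The following sketch counts InvokeAgent and RetrieveAndGenerate calls per (caller ARN, event name, resource ARN). It assumes CloudTrail JSON records, in which each entry in the `resources` list carries an `ARN` key, and it uses the placeholder `unknown` when an event lists no resources. The function name is hypothetical.

```python
from collections import Counter
from typing import Iterable

AUDITED_EVENTS = {"InvokeAgent", "RetrieveAndGenerate"}


def bedrock_invocations(records: Iterable[dict]) -> Counter:
    """Count InvokeAgent / RetrieveAndGenerate calls per (caller, event, resource ARN)."""
    counts: Counter = Counter()
    for record in records:
        if record.get("eventName") not in AUDITED_EVENTS:
            continue
        caller = record.get("userIdentity", {}).get("arn", "unknown")
        # Data events list the resources they touched. Fall back to a placeholder.
        resource_arns = [res.get("ARN", "unknown") for res in record.get("resources") or []] or ["unknown"]
        for arn in resource_arns:
            counts[(caller, record["eventName"], arn)] += 1
    return counts
```

Counting by resource ARN shows which agent alias or knowledge base each caller actually used, which is the evidence an audit trail for the chat application needs.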

Cleaning up

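To avoid incurring additional charges, delete the event data stores you created for this walkthrough. Termination protection is enabled on event data stores by default, so disable it before deleting. If you want to keep an existing event data store, remove the data event types you added instead, because ingesting data events incurs charges.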
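For scripted cleanup, the following is a minimal sketch using the UpdateEventDataStore and DeleteEventDataStore operations as exposed by a boto3 CloudTrail client. The function name is hypothetical. The client is passed in, so it can be `boto3.client("cloudtrail")` or a stand-in for testing. Run it only against event data stores created for this walkthrough.

```python
from typing import Any


def delete_event_data_store(client: Any, eds_arn: str) -> None:
    """Turn off termination protection, then delete a CloudTrail Lake event data store.

    `client` is expected to be boto3.client("cloudtrail").
    """
    # Termination protection is on by default and blocks deletion.
    client.update_event_data_store(EventDataStore=eds_arn, TerminationProtectionEnabled=False)
    client.delete_event_data_store(EventDataStore=eds_arn)
```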

Conclusion

In this post, we demonstrated how to use CloudTrail Lake to streamline the analysis of generative AI events that CloudTrail records across your AWS accounts. Advanced event selectors let you log only the data events you need, which helps control costs. You can also store these events immutably in CloudTrail Lake and run queries to identify specific events generated by generative AI services.

For more information about AWS CloudTrail, refer to the ‘Getting Started with AWS CloudTrail’ documentation.

About the Authors