AWS boosts Amazon Bedrock GenAI platform, upgrades Titan LLM

AWS on Tuesday released a group of features for its Amazon Bedrock generative AI platform that ease the process of importing, evaluating and designing safety guardrails for third-party large language models.

The cloud giant also made generally available text-to-image capabilities for its own foundation model, Titan, along with an indemnification policy for users and a watermarking feature aimed at verifying that images are authentic.

Multi-model approach

The moves advance the vendor’s strategy of positioning itself as a model-agnostic provider in the GenAI market and aim to avoid recent GenAI missteps, including a disastrous breakdown by Google’s Gemini LLM.

Available in preview, Amazon Bedrock Custom Model Import supports the widely used open model architectures Flan-T5, Meta’s Llama and Mistral, with plans to support more models later.

Model Evaluation, which enables users to assess, compare, and select the best model for their application, is now generally available.

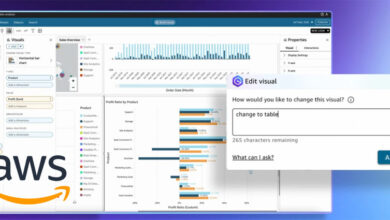

Guardrails, also generally available, lets users design custom safeguards for all models on Bedrock using natural language to define denied topics and configure thresholds to filter out hate speech, sexualized language, violence, profanity or other undesired content.
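To make the two mechanisms in that description concrete, here is a minimal Python sketch of denied topics plus per-category thresholds. It is an illustration only, not Bedrock's API: the `GuardrailSketch` class, its field names, the phrase matching and the 0-to-1 scores are all assumptions made for this example, and the category scores are passed in by hand where a real system would get them from a classifier.

```python
from dataclasses import dataclass, field


@dataclass
class GuardrailSketch:
    """Hypothetical stand-in for a guardrail; not the Bedrock schema."""

    # Topic name -> trigger phrases. Phrase matching is a crude substitute
    # for the natural-language topic definitions the article describes.
    denied_topics: dict = field(default_factory=dict)
    # Content category -> score at or above which the text is blocked.
    thresholds: dict = field(default_factory=dict)

    def check(self, text, category_scores):
        """Return the reasons the text is blocked; an empty list means allowed."""
        reasons = []
        lowered = text.lower()
        for topic, phrases in self.denied_topics.items():
            if any(phrase.lower() in lowered for phrase in phrases):
                reasons.append(f"denied topic: {topic}")
        for category, limit in self.thresholds.items():
            if category_scores.get(category, 0.0) >= limit:
                reasons.append(f"filtered: {category}")
        return reasons


# Example configuration with made-up topics and thresholds.
guardrail = GuardrailSketch(
    denied_topics={"investment advice": ["which stock", "should i invest"]},
    thresholds={"hate": 0.5, "violence": 0.7, "profanity": 0.9},
)
```

Lowering a threshold makes the filter for that category stricter, which is the kind of tuning the feature exposes to users.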

Already available on Bedrock and the Amazon SageMaker machine learning training and deployment service are LLMs from AI21, Anthropic, Cohere, Meta, Mistral and Stability AI. Llama 3, released last week, is now generally available on Bedrock, as is Cohere’s Command R+, introduced April 5.

Notably absent from Amazon’s roster of well-known LLMs are the powerful GenAI systems from OpenAI and Google.

Amazon, with a multi-model strategy, is wise to focus on making LLMs easy to use and customize for enterprises, said Larry Carvalho, analyst at RobustCloud, a cloud advisory firm. Amazon has yet to release a model like OpenAI’s GPT-4, for example, but makes up for it with support for Anthropic’s Claude 3, which has been shown to be slightly faster than GPT-4, he said.

“I would look for whoever gives me the easiest to use product,” Carvalho said, adding that indemnification for the Titan image generator “may be a bit of a differentiator for Amazon.”

Correcting for GenAI errors, but lagging on integration

GenAI hallucinations can create financial issues and other problems for enterprise users, as illustrated in February when an Air Canada chatbot offered a customer bereavement fares that went against the airline’s own policies. A Canadian tribunal found the airline negligently misrepresented the discount.

And after the text-to-image component of Google’s Gemini LLM generated inaccurate images the same month, including a depiction of a pope as a Black man and other racial inaccuracies, Google was forced to apologize and shut off the image generator. It remains unavailable.

As for Amazon Titan, Carvalho noted clients have told him that it doesn’t perform as well as most of its competition.

And Amazon lags its rival cloud giants Microsoft and Google in quickly integrating GenAI technology across its product line, Carvalho said. Microsoft has done so with Microsoft 365 and its search engine, and Google with Workspace, Search and its analytics systems.

“If I had to advise Amazon to do something better, I’d say they need to reach to the masses more,” by embedding generative AI in Amazon.com, Amazon Prime and the Alexa assistant line, he added.

Multiple models

Amazon’s tagline for its approach with Bedrock is “model choice.”

“Specific models … perhaps are more optimal for specific use cases, depending on what the customer is looking for,” Sherry Marcus, director of Amazon Bedrock Science, said in an interview before the launch of the new features. “So that’s why we believe in model choice for customers.”

With the Titan image generator, Amazon is aiming at customers in e-commerce, advertising, and media and entertainment that are looking to produce studio-quality images or enhance or edit existing images at low cost using natural language prompts, Marcus said.

The embedded digital watermarks prove that the images were created by Titan, not by a bad actor, she said. In addition, Amazon supplies an API to which users can input an image to confirm that it was made by Titan.

“The reason why this is very important is [to prevent] media disinformation,” Marcus added. “We can now verify that images that come out of the Bedrock system are indeed from Bedrock and not falsified in any way.”

Progress, but more needed

In sum, Amazon’s moves to strengthen Bedrock are a logical step for the cloud vendor, said William McKeon-White, a Forrester analyst.

“These seem to be a number of smart, incremental upgrades to the platform, providing more developer options with tools specifically for them to manage their GenAI projects,” he said. “It’s a good approach for companies that are actively working to develop their own capabilities in-house and for organizations with resources to spin up a machine learning team.”

What Bedrock still lacks is advanced orchestration capabilities, even with its recent updates and a tighter API link between Bedrock and SageMaker that Amazon released along with the other new features, McKeon-White said.

“How Amazon would appropriately implement that would be to give developers tools to run and identify LLMs where needed and to orchestrate those outputs together into something that is able to complete a singular task,” he said. “Using multiple different models in sequence is increasingly becoming a common architectural approach.”
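The pattern McKeon-White describes, running several models in sequence so that each one's output feeds the next, can be sketched in a few lines of Python. This is a hedged illustration, not anything Amazon ships: `run_in_sequence` is a hypothetical helper, and the two "models" are trivial stand-in functions where a real pipeline would call hosted LLMs.

```python
from typing import Callable, List

# A "model" here is any function from text to text (an assumption for this sketch).
Model = Callable[[str], str]


def run_in_sequence(steps: List[Model], prompt: str) -> str:
    """Pass the prompt through each step in order, feeding outputs forward."""
    result = prompt
    for step in steps:
        result = step(result)
    return result


def summarize_stub(text: str) -> str:
    # Stand-in for a summarization model: keep only the first sentence.
    return text.split(".")[0].strip() + "."


def shout_stub(text: str) -> str:
    # Stand-in for a second model that restyles the text.
    return text.upper()


output = run_in_sequence(
    [summarize_stub, shout_stub],
    "Bedrock adds features. More later.",
)
```

Orchestration tooling of the kind he calls for would add model selection, error handling and routing on top of a simple chain like this one.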

Shaun Sutner is senior news director for TechTarget Editorial’s information management team, driving coverage of artificial intelligence, unified communications, analytics and data management technologies. He is a veteran journalist with more than 30 years of news experience.