Behavioral health and generative AI: a perspective on future of therapies and patient care

Rajpurkar, P., Chen, E., Banerjee, O. & Topol, E. J. AI in health and medicine. Nat. Med. 28, 31–38 (2022).

Nazarian, S., Glover, B., Ashrafian, H., Darzi, A. & Teare, J. Diagnostic accuracy of artificial intelligence and computer-aided diagnosis for the detection and characterization of colorectal polyps: systematic review and meta-analysis. J. Med. Internet Res. 23, e27370 (2021).

Aggarwal, R. et al. Diagnostic accuracy of deep learning in medical imaging: a systematic review and meta-analysis. NPJ Digit. Med. 4, 65 (2021).

Wang, C., Zhu, X., Hong, J. C. & Zheng, D. Artificial intelligence in radiotherapy treatment planning: present and future. Technol. Cancer Res. Treat. 18, 1533033819873922 (2019).

Zhou, Q., Chen, Z.-H., Cao, Y.-H. & Peng, S. Clinical impact and quality of randomized controlled trials involving interventions evaluating artificial intelligence prediction tools: a systematic review. NPJ Digit. Med. 4, 154 (2021).

Ho, D. et al. Enabling technologies for personalized and precision medicine. Trends Biotechnol. 38, 497–518 (2020).

Bae, S. W. et al. Leveraging mobile phone sensors, machine learning, and explainable artificial intelligence to predict imminent same-day binge-drinking events to support just-in-time adaptive interventions: algorithm development and validation study. JMIR Form. Res. 7, e39862 (2023).

Paul, D. et al. Artificial intelligence in drug discovery and development. Drug Discov. Today 26, 80–93 (2021).

Kuziemsky, C. et al. Role of Artificial Intelligence within the Telehealth Domain. Yearb. Med. Inform. 28, 35–40 (2019).

Sujith, A. V. L. N., Sajja, G. S., Mahalakshmi, V., Nuhmani, S. & Prasanalakshmi, B. Systematic review of smart health monitoring using deep learning and artificial intelligence. Neurosci. Inform. 2, 100028 (2022).

Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 33, 6840–6851 (2020).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM 63, 139–144 (2020).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30, 5998–6008 (2017).

Thirunavukarasu, A. J. et al. Large language models in medicine. Nat. Med. 29, 1930–1940 (2023).

Huang, K. et al. Artificial intelligence foundation for therapeutic science. Nat. Chem. Biol. 18, 1033–1036 (2022).

D’Amico, S. et al. Synthetic data generation by artificial intelligence to accelerate research and precision medicine in hematology. JCO Clin. Cancer Inf. 7, e2300021 (2023).

Zhao, J., Hou, X., Pan, M. & Zhang, H. Attention-based generative adversarial network in medical imaging: a narrative review. Comput. Biol. Med. 149, 105948 (2022).

Liu, Y. et al. CBCT-based synthetic CT generation using deep-attention cycleGAN for pancreatic adaptive radiotherapy. Med. Phys. 47, 2472–2483 (2020).

Koohi-Moghadam, M. & Bae, K. T. Generative AI in medical imaging: applications, challenges, and ethics. J. Med. Syst. 47, 94 (2023).

Acosta, J. N., Falcone, G. J., Rajpurkar, P. & Topol, E. J. Multimodal biomedical AI. Nat. Med. 28, 1773–1784 (2022).

Bommasani, R. et al. On the opportunities and risks of foundation models. arXiv https://arxiv.org/abs/2108.07258 (2021).

Bond-Taylor, S., Leach, A., Long, Y. & Willcocks, C. G. Deep generative modelling: a comparative review of VAEs, GANs, normalizing flows, energy-based and autoregressive models. IEEE Trans. Pattern Anal. Mach. Intell. 44, 7327–7347 (2022).

Sharma, A., Lin, I. W., Miner, A. S., Atkins, D. C. & Althoff, T. Human–AI collaboration enables more empathic conversations in text-based peer-to-peer mental health support. Nat. Mach. Intell. 5, 46–57 (2023).

van Heerden, A. C., Pozuelo, J. R. & Kohrt, B. A. Global mental health services and the impact of artificial intelligence-powered large language models. JAMA Psychiatry 80, 662–664 (2023).

Pandey, S. & Sharma, S. A comparative study of retrieval-based and generative-based chatbots using deep learning and machine learning. Healthc. Analytics 3, 100198 (2023).

Bird, J. J. & Lotfi, A. Generative transformer chatbots for mental health support: a study on depression and anxiety. In: Proceedings of the 16th international conference on pervasive technologies related to assistive environments, 475–479 (Association for Computing Machinery, 2023). https://doi.org/10.1145/3594806.3596520.

Sezgin, E., Chekeni, F., Lee, J. & Keim, S. Clinical accuracy of large language models and google search responses to postpartum depression questions: cross-sectional study. J. Med. Internet Res. 25, e49240 (2023).

Schueller, S. M. & Morris, R. R. Clinical science and practice in the age of large language models and generative artificial intelligence. J. Consult. Clin. Psychol. 91, 559–561 (2023).

Pataranutaporn, P. et al. AI-generated characters for supporting personalized learning and well-being. Nat. Mach. Intell. 3, 1013–1022 (2021).

Nye, A., Delgadillo, J. & Barkham, M. Efficacy of personalized psychological interventions: a systematic review and meta-analysis. J. Consult. Clin. Psychol. 91, 389–397 (2023).

Cronbach, L. J. & Snow, R. E. Aptitudes and instructional methods: a handbook for research on interactions. (Irvington, 1977).

Zhong, Y. et al. The issue of evidence-based medicine and artificial intelligence. Asian J. Psychiatr. 85, 103627 (2023).

Mosqueira-Rey, E., Hernández-Pereira, E., Alonso-Ríos, D., Bobes-Bascarán, J. & Fernández-Leal, Á. Human-in-the-loop machine learning: a state of the art. Artif. Intell. Rev. 56, 3005–3054 (2023).

Park, S. Y. et al. Identifying challenges and opportunities in human-AI collaboration in healthcare. In: Conference companion publication of the 2019 on computer supported cooperative work and social computing, 506–510 (Association for Computing Machinery, 2019). https://doi.org/10.1145/3311957.3359433.

Sezgin, E. Artificial intelligence in healthcare: complementing, not replacing, doctors and healthcare providers. Digit Health 9, 20552076231186520 (2023).

Zhong, Y. et al. The Artificial intelligence large language models and neuropsychiatry practice and research ethic. Asian J. Psychiatr. 84, 103577 (2023).

Holmes, E. A., Arntz, A. & Smucker, M. R. Imagery rescripting in cognitive behaviour therapy: images, treatment techniques and outcomes. J. Behav. Ther. Exp. Psychiatry 38, 297–305 (2007).

Lang, P. J. Imagery in therapy: an information processing analysis of fear. Behav. Ther. 8, 862–886 (1977).

Hafner, C., Schneider, J., Schindler, M. & Braillard, O. Visual aids in ambulatory clinical practice: experiences, perceptions and needs of patients and healthcare professionals. PLoS One 17, e0263041 (2022).

Boland, L. et al. An experimental investigation of the effects of perspective-taking on emotional discomfort, cognitive fusion and self-compassion. J. Context. Behav. Sci. 20, 27–34 (2021).

Beck, A. T. Thinking and depression. II. Theory and therapy. Arch. Gen. Psychiatry 10, 561–571 (1964).

Brasfield, C. Cognitive-behavioral treatment of borderline personality disorder. Behav. Res. Ther. 32, 899 (1994).

Hayes, S. C., Strosahl, K. D. & Wilson, K. G. Acceptance and commitment therapy: an experiential approach to behavior change. (Guilford Publications, 1999).

Moor, M. et al. Foundation models for generalist medical artificial intelligence. Nature 616, 259–265 (2023).

Christens, B. D., Collura, J. J. & Tahir, F. Critical hopefulness: a person-centered analysis of the intersection of cognitive and emotional empowerment. Am. J. Community Psychol. 52, 170–184 (2013).

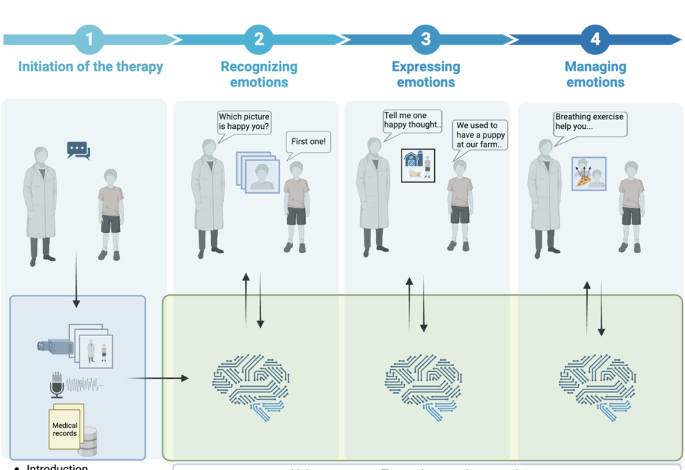

Kotsou, I., Mikolajczak, M., Heeren, A., Grégoire, J. & Leys, C. Improving emotional intelligence: a systematic review of existing work and future challenges. Emot. Rev. 11, 151–165 (2019).

Lewis, G. J., Lefevre, C. E. & Young, A. W. Functional architecture of visual emotion recognition ability: a latent variable approach. J. Exp. Psychol. Gen. 145, 589–602 (2016).

Brackett, M. A., Rivers, S. E. & Salovey, P. Emotional intelligence: implications for personal, social, academic, and workplace success. Soc. Personal. Psychol. Compass 5, 88–103 (2011).

Morie, K. P., Crowley, M. J., Mayes, L. C. & Potenza, M. N. The process of emotion identification: considerations for psychiatric disorders. J. Psychiatr. Res. 148, 264–274 (2022).

Bobek, E. & Tversky, B. Creating visual explanations improves learning. Cogn. Res. Princ. Implic. 1, 27 (2016).

Cheng, V. W. S., Davenport, T., Johnson, D., Vella, K. & Hickie, I. B. Gamification in apps and technologies for improving mental health and well-being: systematic review. JMIR Ment. Health 6, e13717 (2019).

Xie, H. A scoping review of gamification for mental health in children: uncovering its key features and impact. Arch. Psychiatr. Nurs. 41, 132–143 (2022).

Nicolaidou, I., Aristeidis, L. & Lambrinos, L. A gamified app for supporting undergraduate students’ mental health: a feasibility and usability study. Digit Health 8, 20552076221109059 (2022).

stabilityai/stable-diffusion-2-1 · Hugging Face. https://huggingface.co/stabilityai/stable-diffusion-2-1.

Stanton, A. L. & Low, C. A. Expressing emotions in stressful contexts: benefits, moderators, and mechanisms. Curr. Dir. Psychol. Sci. 21, 124–128 (2012).

CSEFEL: center on the social and emotional foundations for early learning. https://csefel.vanderbilt.edu/resources/family.html.

Gross, J. J. The emerging field of emotion regulation: an integrative review. Rev. Gen. Psychol. 2, 271–299 (1998).

Moltrecht, B., Deighton, J., Patalay, P. & Edbrooke-Childs, J. Effectiveness of current psychological interventions to improve emotion regulation in youth: a meta-analysis. Eur. Child Adolesc. Psychiatry 30, 829–848 (2021).

Tang, W. & Kreindler, D. Supporting homework compliance in cognitive behavioural therapy: essential features of mobile apps. JMIR Ment. Health 4, e20 (2017).

Azuaje, G. et al. Exploring the use of AI text-to-image generation to downregulate negative emotions in an expressive writing application. R. Soc. Open Sci. 10, 220238 (2023).

Vial, T. & Almon, A. Artificial intelligence in mental health therapy for children and adolescents. JAMA Pediatr. 177, 1251–1252 (2023).

Harvey, W. & Wood, F. Visual chain-of-thought diffusion models. arXiv https://arxiv.org/abs/2303.16187 (2023).

Weisz, J. D. et al. Design principles for generative AI applications. arXiv https://arxiv.org/html/2401.14484v1 (2024).

Cachat-Rosset, G. & Klarsfeld, A. Diversity, equity, and inclusion in artificial intelligence: an evaluation of guidelines. Appl. Artif. Intell. 37, 2176618 (2023).

Lee, E. E. et al. Artificial intelligence for mental health care: clinical applications, barriers, facilitators, and artificial wisdom. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 6, 856–864 (2021).

Sezgin, E., Sirrianni, J. & Linwood, S. L. Operationalizing and implementing pretrained, large artificial intelligence linguistic models in the US health care system: outlook of generative pretrained transformer 3 (GPT-3) as a service model. JMIR Med. Inf. 10, e32875 (2022).

Timmons, A. C. et al. A call to action on assessing and mitigating bias in artificial intelligence applications for mental health. Perspect. Psychol. Sci. 18, 1062–1096 (2023).

Boch, S., Sezgin, E. & Lin Linwood, S. Ethical artificial intelligence in paediatrics. Lancet Child Adolesc. Health 6, 833–835 (2022).

Interim guidance on government use of public generative AI tools – November 2023. https://architecture.digital.gov.au/guidance-generative-ai.

Guillot, J. D. (European Parliament Spokesperson). EU AI Act: first regulation on artificial intelligence. https://www.europarl.europa.eu/pdfs/news/expert/2023/6/story/20230601STO93804/20230601STO93804_en.pdf (2023).

International community must urgently confront new reality of generative, artificial intelligence, speakers stress as Security Council debates risks, rewards. https://press.un.org/en/2023/sc15359.doc.htm (2023).

The White House. Executive order on the safe, secure, and trustworthy development and use of artificial intelligence. The White House https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/ (2023).

Gomez Rossi, J., Rojas-Perilla, N., Krois, J. & Schwendicke, F. Cost-effectiveness of artificial intelligence as a decision-support system applied to the detection and grading of melanoma, dental caries, and diabetic retinopathy. JAMA Netw. Open 5, e220269 (2022).

Hendrix, N., Veenstra, D. L., Cheng, M., Anderson, N. C. & Verguet, S. Assessing the economic value of clinical artificial intelligence: challenges and opportunities. Value Health 25, 331–339 (2022).

Allen, M. R. et al. Navigating the doctor-patient-AI relationship – a mixed-methods study of physician attitudes toward artificial intelligence in primary care. BMC Prim. Care 25, 42 (2024).

Mirsky, Y. & Lee, W. The creation and detection of deepfakes: a survey. ACM Comput. Surv. 54, 1–41 (2021).

Brundage, M. et al. The malicious use of artificial intelligence: forecasting, prevention, and mitigation. arXiv https://arxiv.org/ftp/arxiv/papers/1802/1802.07228.pdf (2018).

Janssen, M., Brous, P., Estevez, E., Barbosa, L. S. & Janowski, T. Data governance: organizing data for trustworthy artificial intelligence. Gov. Inf. Q. 37, 101493 (2020).

Tanner, B. A. Validity of global physical and emotional SUDS. Appl. Psychophysiol. Biofeedback 37, 31–34 (2012).