Can Generative AI Help With Quicker Threat Detection?

Gartner’s Kevin Schmidt on Using AI for Secure Coding and Threat Detection

Generative AI tools such as ChatGPT, GitHub Copilot and Amazon CodeWhisperer are quickly gaining traction. But security professionals are evaluating how this emerging technology can both enhance and potentially undermine cybersecurity practices.

Beyond use cases such as security-oriented pair programming, is it possible to program AI assistants and tools to respond to security alerts autonomously?

In an interview with Information Security Media Group, Kevin Schmidt, a director analyst at Gartner, discussed which AI tools have the potential to ease the burden on overwhelmed developers, CISOs and SOC analysts, and how. Schmidt supports the GTP secure infrastructure team in security operations, including the SOC, monitoring and vulnerability assessment.

Schmidt researches generative AI and LLMs and their usage in security operations. In this Q&A, he explored the potential benefits of using generative AI for secure coding, threat detection and security operations, while also acknowledging the risks and organizational challenges of responsibly adopting these powerful but imperfect tools.

Edited excerpts follow:

Everybody talks about DevSecOps and shift left. To what extent can generative AI help in terms of security by design?

There are many ways, and we’re starting to see some of them. Unfortunately, right now, a lot of the examples fall into two categories: tasks achievable with ChatGPT, and what Microsoft is doing with Copilot.

In terms of shift left, you start talking about helping to identify bugs in source code more quickly. There are SaaS tools and code analysis tools that can help with all that, but they are not perfect. Technology with [accurate] generative AI can help organizations find bugs sooner. Developers can write code faster as well.

It has implications with security monitoring too. Traditionally, in a monitoring tool like a SIEM, you build your detection rules. If you want to detect somebody trying to brute-force logging into an account, there’s a rule in SIEM that detects that kind of behavior.
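To make the brute-force example concrete, here is a minimal Python sketch of the logic such a rule typically encodes: count failed logins per account inside a sliding time window. The event tuple layout, the threshold of five failures and the 60-second window are assumptions chosen for illustration, not settings from any particular SIEM.

```python
from collections import defaultdict, deque

# Hypothetical thresholds, chosen only for illustration.
MAX_FAILURES = 5
WINDOW_SECONDS = 60


def detect_brute_force(events, max_failures=MAX_FAILURES, window=WINDOW_SECONDS):
    """Return the accounts with at least `max_failures` failed logins
    inside any `window`-second span.

    `events` is an iterable of (timestamp_seconds, account, success)
    tuples, assumed to be sorted by timestamp.
    """
    recent = defaultdict(deque)  # account -> timestamps of recent failures
    flagged = set()
    for ts, account, success in events:
        if success:
            continue  # only failed logins count toward the threshold
        failures = recent[account]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= max_failures:
            flagged.add(account)
    return flagged
```

A production rule would usually also key on source IP address and reset on a successful login; those refinements are left out to keep the sketch short.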

There’s a [shift left] concept called detection engineering, where instead of building the rules natively in your SIEM platform, you build them more as a developer would, in a code base. You write them in Python or another high-level language, and they get converted into detection rules that are then pushed into your tool.

By doing that, you can then apply the same DevSecOps procedures and workflows to that detection code as you would to a regular piece of software. Generative AI can help by generating those detections more quickly as well. It’s not just about finding bugs in code, but also about detecting threats more quickly with security monitoring tools.
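The detection-engineering workflow described above might look something like the following Python sketch. It is an illustration under stated assumptions: the `DetectionRule` class, the `validate` checks and the query syntax produced by `to_siem_query` are all hypothetical and do not correspond to any real SIEM's rule language.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionRule:
    """A detection kept in version control as ordinary code (hypothetical schema)."""
    name: str
    event_type: str
    threshold: int
    window_seconds: int
    group_by: str


def to_siem_query(rule):
    """Render the rule in a made-up query syntax, standing in for the
    conversion step that produces a native SIEM rule."""
    return (
        f"event_type={rule.event_type} "
        f"| count by {rule.group_by} within {rule.window_seconds}s "
        f"| where count >= {rule.threshold}"
    )


def validate(rule):
    """Return a list of problems; an empty list means the rule passes."""
    problems = []
    if not rule.name:
        problems.append("rule needs a name")
    if rule.threshold < 1:
        problems.append("threshold must be at least 1")
    if rule.window_seconds <= 0:
        problems.append("window must be positive")
    return problems


# Example rule: five failed logins per account within 60 seconds (assumed values).
BRUTE_FORCE = DetectionRule(
    name="brute_force_login",
    event_type="login_failure",
    threshold=5,
    window_seconds=60,
    group_by="account",
)
```

Because the rule is plain code, checks such as `validate(BRUTE_FORCE)` can run in the same CI pipeline as any other software change, with the conversion and push to the monitoring tool happening only after review and tests pass.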

You mentioned most of the vendors are using AI today, and that they often oversell on the basis of AI. How can a CISO use this technology to defend their organization?

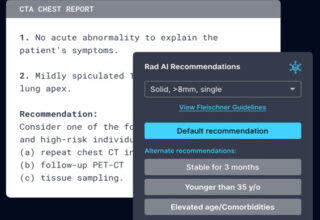

One example is Microsoft Security Copilot. It provides a chat interface through which security analysts can interact with the tool and ask for explanations about alerts. They can ask questions such as “How do I investigate this?” and “What are the remedial steps?”

Microsoft Security Copilot is still in preview, and Microsoft will announce general availability in the next few months. When organizations can get their hands on this, they will understand how useful and impactful this tool is.

What are the limitations of ChatGPT, and where does it fall short in the secure coding process?

You can get decent results out of ChatGPT if you do prompt engineering and are explicit when you write your prompts. But it is a general model, whereas GitHub Copilot and Amazon CodeWhisperer are tailored more toward coding. These are domain-specific implementations, and in the long run you’re going to get better results from them.

Can you comment on how the nature of threats has changed over the years?

The threat is the same, but the difference is the speed at which hackers can generate a phishing email or some malicious code they can use against you. The end result is that you still need to have all of your systems in place, including endpoint protection, content filtering, SOC and SIEM, to deflect the threats. The other difference is that now anybody can be a script kiddie [an individual who uses scripts or programs developed by others, primarily for malicious purposes]. It’s become easy.

How do you see AI tools lifting the burden of overwhelmed SOC analysts and CISOs?

ChatGPT can help to some extent with security operations. Microsoft Security Copilot can reduce the load on SOCs. Tools such as Dropzone – an autonomous SOC analysis platform – can look at a phishing alert and take responsive action, with no code, and you don’t have to write any playbooks for this. It just analyzes [the threat] and takes the required action. That class of tool is where organizations are going to be able to scale. From a people standpoint, organizations are having trouble hiring or retaining SOC personnel. These tools are going to take a lot of that load off the people and allow them to focus on more important things.

Do you see organizations implementing policies to ban generative AI? For instance, Samsung banned its employees from using it. What are your clients saying about this?

A year ago, I talked to clients who were banning it outright until they could figure out a policy. Now I see that a lot of organizations have opened it up to their employees, or they allow only senior people to use it and filter access at the proxy level.

Organizations are crafting generative AI acceptable-use policies, which all their employees have to read and sign. Some organizations are taking it a step further and trying to provide training, just as companies have an annual, basic cyber awareness course.

When I ask vendors about training, they either make generative AI training part of cyber training or have separate training. People get the policy, read it and then take the training so they understand what’s expected of them.