Copilot Recall Is ‘Dumbest Cybersecurity Move In A Decade’

A new Microsoft Windows feature dubbed Recall, planned for Copilot+ PCs, has been called a security and privacy nightmare by cybersecurity researchers and privacy advocates.

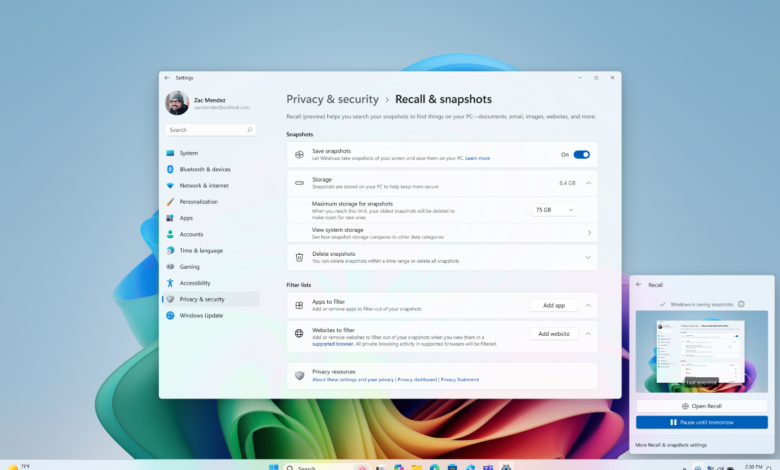

Copilot Recall will be enabled by default and will capture frequent screenshots, or “snapshots,” of a user’s activity and store them in a local database unique to the user account. The potential for exposure of personal and sensitive data through the new feature has alarmed security and privacy advocates and even sparked a UK inquiry into the issue.

Copilot Recall Privacy and Security Claims Challenged

In a long Mastodon thread on the new feature, Windows security researcher Kevin Beaumont wrote, “I’m not being hyperbolic when I say this is the dumbest cybersecurity move in a decade. Good luck to my parents safely using their PC.”

In a blog post on Recall security and privacy, Microsoft said that processing and storage are done only on the local device and encrypted, but even Microsoft’s own explanations raise concerns: “Note that Recall does not perform content moderation. It will not hide information such as passwords or financial account numbers. That data may be in snapshots that are stored on your device, especially when sites do not follow standard internet protocols like cloaking password entry.”

Security and privacy advocates take issue with assertions that the data is stored securely on the local device. If someone has a user’s password, or if a court orders that data be turned over for legal or law enforcement purposes, far more data could be exposed with Recall than without it. Hackers and malware would likewise have access to vastly more data than they would without Recall.

Beaumont said the screenshots are stored in a SQLite database, “and you can access it as the user including programmatically. It 100% does not need physical access and can be stolen.”

He posted a video that he said showed two Microsoft employees gaining access to the Recall database folder with apparent ease, “with SQLite database right there.”
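Beaumont’s point that a SQLite file can be read “as the user including programmatically” is a general property of SQLite rather than something specific to Recall. The minimal sketch below illustrates it with a throwaway database created in a temporary directory. The table name `snapshots`, its columns, and the sample rows are hypothetical stand-ins invented for illustration; they are not Recall’s actual schema or storage location.

```python
import sqlite3
import tempfile
from pathlib import Path


def create_demo_db(db_path: Path) -> None:
    """Build a stand-in database. The schema is hypothetical, not Recall's."""
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE snapshots ("
            "id INTEGER PRIMARY KEY, window_title TEXT, ocr_text TEXT)"
        )
        conn.executemany(
            "INSERT INTO snapshots (window_title, ocr_text) VALUES (?, ?)",
            [
                ("Bank login", "account 0000-EXAMPLE"),
                ("Chat", "this message will disappear"),
            ],
        )
        conn.commit()
    finally:
        conn.close()


def read_snapshot_text(db_path: Path) -> list[tuple[str, str]]:
    """Open the file read-only, as any process running as the same user could."""
    # mode=ro asks SQLite for a read-only connection; no elevated rights needed.
    conn = sqlite3.connect(f"{db_path.as_uri()}?mode=ro", uri=True)
    try:
        return conn.execute(
            "SELECT window_title, ocr_text FROM snapshots ORDER BY id"
        ).fetchall()
    finally:
        conn.close()


def demo() -> list[tuple[str, str]]:
    """Create the demo database, then read it back like an unrelated program."""
    with tempfile.TemporaryDirectory() as tmp:
        db_path = Path(tmp) / "demo.db"
        create_demo_db(db_path)
        return read_snapshot_text(db_path)
```

Calling `demo()` returns every stored row. A plain SQLite file has no access control of its own: whatever can read the file with the user’s permissions can query everything in it, which is the concern the researchers raise.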

Does Recall Have Cloud Hooks?

Beaumont also questioned Microsoft’s assertion that all this is done locally. “So the code underpinning Copilot+ Recall includes a whole bunch of Azure AI backend code, which has ended up in the Windows OS,” he wrote on Mastodon. “It also has a ton of API hooks for user activity monitoring.

“It opens a lot of attack surface. … They really went all in with this and it will have profound negative implications for the safety of people who use Microsoft Windows.”

Data May Not Be Completely Deleted

Sensitive data that users delete elsewhere on their PC will still be preserved in Recall screenshots.

“There’s no feature to delete screenshots of things you delete while using your PC,” Beaumont said. “You would have to remember to go and purge screenshots that Recall makes every few seconds. If you or a friend use disappearing messages in WhatsApp, Signal etc, it is recorded regardless.”

One commenter said Copilot Recall seems to raise compliance issues too, in part by creating additional unnecessary data that could survive deletion requests. “[T]his comprehensively fails PCI and GDPR immediately and the SOC2 controls list ain’t looking so good either,” the commenter said.

Lesley Carhart, Director of Incident Response at Dragos, replied that “the outrage and disbelief are warranted.”

A second commenter noted, “GDPR has a very simple concept: Data Minimization. Quite simply, only store data that you actually have a legitimate, legal purpose for; and only for as long as necessary. Right there, this fails in spectacular fashion on both counts. It’s going to store vast amounts of data for no specific purpose, potentially for far longer than any reasonable use of that data.”
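The “only for as long as necessary” half of that principle is often implemented as a retention window, with records older than a fixed age purged automatically. The sketch below shows the idea against an in-memory SQLite table. The `snapshots` table, the `captured_at` column (Unix seconds), and the 30-day window are assumptions made for illustration; this is not a description of any Recall setting, and a retention job alone does not establish GDPR compliance.

```python
import sqlite3

SECONDS_PER_DAY = 86_400


def purge_expired(conn: sqlite3.Connection, now: int, retention_days: int) -> int:
    """Delete rows captured before the retention cutoff; return how many went."""
    cutoff = now - retention_days * SECONDS_PER_DAY
    cur = conn.execute("DELETE FROM snapshots WHERE captured_at < ?", (cutoff,))
    conn.commit()
    return cur.rowcount


def demo() -> tuple[int, int]:
    """Insert rows of various ages, purge with a 30-day window, report counts."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical schema: captured_at holds a Unix timestamp in seconds.
    conn.execute(
        "CREATE TABLE snapshots (id INTEGER PRIMARY KEY, captured_at INTEGER)"
    )
    now = 1_000 * SECONDS_PER_DAY  # fixed clock so the example is repeatable
    ages_in_days = [1, 10, 45, 90]
    conn.executemany(
        "INSERT INTO snapshots (captured_at) VALUES (?)",
        [(now - age * SECONDS_PER_DAY,) for age in ages_in_days],
    )
    deleted = purge_expired(conn, now, retention_days=30)
    remaining = conn.execute("SELECT COUNT(*) FROM snapshots").fetchone()[0]
    conn.close()
    return deleted, remaining
```

With the sample ages above, the two rows older than 30 days are removed and the two newer ones are kept. Passing the clock in as `now` rather than reading the system time keeps the cutoff logic easy to test.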

It remains to be seen if Microsoft will make any modifications to Recall to quell concerns before it officially ships. If not, security and privacy experts may find themselves busier than ever.