Data Architecture with Databricks Across Different Clouds | by Sushreeta | Apr, 2024


Data has become an essential resource for enterprises, guiding strategic decisions and driving innovation. Managing that data across several cloud providers, however, is a considerable challenge: difficult integrations, disjointed tools, and siloed data frequently limit scalability and efficiency. To overcome these obstacles, organizations are turning to unified analytics platforms such as Databricks to simplify data operations across AWS, GCP, and Azure. In this post, we’ll look at how a unified data platform built on Databricks can support data processing, machine learning, and visualization across multiple cloud environments.

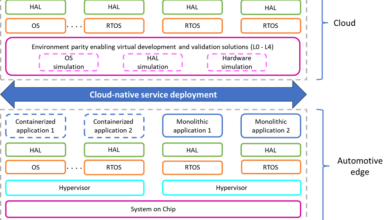

A data architecture for a Databricks platform that spans AWS, GCP, and Azure has two goals: it should use the distinctive capabilities and services of each cloud provider, and it should keep the three environments integrated and interoperable.
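One small place where interoperability shows up in practice is storage addressing, because each cloud's object store uses its own URI scheme. The sketch below is an illustration rather than part of any Databricks API: the `StorageLocation` class, the `storage_uri` helper, and all bucket, container, and account names are hypothetical. It also assumes the Azure data sits in an ADLS Gen2 account (addressed with `abfss://`), which is an assumption on top of the Blob Storage setup described in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageLocation:
    """Where a dataset lives. All example values are hypothetical."""
    cloud: str         # "aws", "gcp", or "azure"
    container: str     # S3 bucket, GCS bucket, or ADLS Gen2 container
    path: str          # object prefix inside the bucket or container
    account: str = ""  # Azure storage account name (Azure only)


def storage_uri(loc: StorageLocation) -> str:
    """Build the object-store URI a Spark job would read from or write to."""
    path = loc.path.strip("/")
    if loc.cloud == "aws":
        return f"s3://{loc.container}/{path}"
    if loc.cloud == "gcp":
        return f"gs://{loc.container}/{path}"
    if loc.cloud == "azure":
        if not loc.account:
            raise ValueError("Azure locations need a storage account name")
        # Assumes ADLS Gen2; classic Blob Storage paths use a different scheme.
        return f"abfss://{loc.container}@{loc.account}.dfs.core.windows.net/{path}"
    raise ValueError(f"unsupported cloud: {loc.cloud!r}")


if __name__ == "__main__":
    for loc in (
        StorageLocation("aws", "sales-lake", "bronze/orders"),
        StorageLocation("gcp", "sales-lake", "bronze/orders"),
        StorageLocation("azure", "lake", "bronze/orders", account="salesacct"),
    ):
        print(storage_uri(loc))
```

Keeping the cloud-specific part behind one helper like this means the rest of a pipeline can refer to a dataset by logical location, so the same job definition can be pointed at any of the three clouds.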

Data Architecture for a Databricks Data Platform on Azure

Databricks on Azure makes it easier to build scalable, efficient data platforms.

With Azure Data Factory for data ingestion and orchestration and Azure Blob Storage for data storage, enterprises can ingest and process very large volumes of data. Databricks clusters provisioned on Azure Virtual Machines provide scalable data processing with Apache Spark, while Delta Lake keeps the data consistent and reliable. Integrating Azure Machine Learning with Databricks adds advanced AI and machine learning capabilities, and combining Azure Synapse Analytics with Databricks SQL (formerly SQL Analytics) supports high-performance analytics and ad hoc querying. By pairing Azure’s IAM services and encryption technologies with strong data governance and security practices, enterprises can build a secure, compliant data platform that supports data-driven innovation and decision-making.

Data Architecture for a Databricks Data Platform on Google Cloud Platform

Google Cloud Platform (GCP) offers a powerful suite of services for storing, processing, and analyzing data, which makes it a strong choice for building a Databricks data platform.

GCP’s native products include Google Cloud Storage (GCS) for data storage and Google BigQuery, a fully managed serverless data warehouse, for warehousing and analytics. Data integration and ingestion tools such as Google Cloud Dataflow help scale and streamline ETL. GCP’s AI Platform (since succeeded by Vertex AI) provides a range of services for building, training, and deploying machine learning models, and integrating Databricks with it lets organizations reuse their existing data pipelines for model training and inference. Databricks SQL (formerly SQL Analytics) can be used to query and analyze data stored in BigQuery. For security, GCP provides encryption in transit and at rest, IAM roles and permissions, and thorough audit logging, which organizations can use to enforce compliance and data governance rules within their Databricks data platform.

Building a Scalable Data Platform on AWS Using Databricks

Businesses are increasingly using cloud platforms like Amazon Web Services (AWS) to store, process, and analyze their data in today’s data-driven environment.

Organizations can build a strong foundation for data ingestion, processing, and transformation on AWS’s broad range of services, including Glue for ETL, S3 for data storage, and EC2 for scalable compute. Because Databricks integrates with these services, it can create scalable Spark clusters on EC2 instances for distributed data processing and analytics. Delta Lake on Databricks adds ACID transactions and schema enforcement, which protect data consistency and reliability. By architecting their data platform on AWS with Databricks, organizations get the scalability, stability, and performance of AWS infrastructure while turning their data into actionable insights.
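To make "ACID transactions and schema enforcement" more concrete, here is a minimal conceptual sketch in plain Python. It is not Delta Lake's API or implementation: `ToyTable`, `SchemaMismatch`, and `validate_batch` are hypothetical names invented for this illustration. It models only two ideas: a write whose rows do not match the table schema is rejected, and a rejected batch leaves the table exactly as it was (all or nothing).

```python
from typing import Any

Schema = dict[str, type]
Row = dict[str, Any]


class SchemaMismatch(Exception):
    """Raised when a batch does not match the table schema."""


def validate_batch(schema: Schema, rows: list[Row]) -> None:
    """Check every row's column names and value types against the schema."""
    for i, row in enumerate(rows):
        if set(row) != set(schema):
            raise SchemaMismatch(
                f"row {i}: columns {sorted(row)} do not match {sorted(schema)}"
            )
        for col, expected in schema.items():
            if not isinstance(row[col], expected):
                raise SchemaMismatch(
                    f"row {i}: column {col!r} expected {expected.__name__}, "
                    f"got {type(row[col]).__name__}"
                )


class ToyTable:
    """In-memory stand-in for a table with schema enforcement."""

    def __init__(self, schema: Schema) -> None:
        self.schema = schema
        self.rows: list[Row] = []

    def append(self, batch: list[Row]) -> None:
        # Validate the whole batch first so a bad row cannot cause a partial write.
        validate_batch(self.schema, batch)
        self.rows.extend(batch)


if __name__ == "__main__":
    orders = ToyTable({"order_id": int, "amount": float})
    orders.append([{"order_id": 1, "amount": 9.5}])
    try:
        # The second row has a string order_id, so the whole batch is rejected.
        orders.append([
            {"order_id": 2, "amount": 3.0},
            {"order_id": "3", "amount": 1.0},
        ])
    except SchemaMismatch as err:
        print(f"write rejected: {err}")
    print(len(orders.rows))  # only the first, valid write is present
```

A real Delta table provides these guarantees through its transaction log and works at the scale of distributed Spark writes; the toy version only shows why downstream readers never see a half-written or wrongly typed batch.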

With a well-defined data architecture for a Databricks data platform across AWS, GCP, and Azure, enterprises can use the strengths of each cloud provider while keeping their data operations integrated and interoperable. This approach lets businesses drive innovation across different cloud environments and realize the full potential of their data assets.