Despite guidance, generative AI remains a challenge for educators

Key points:

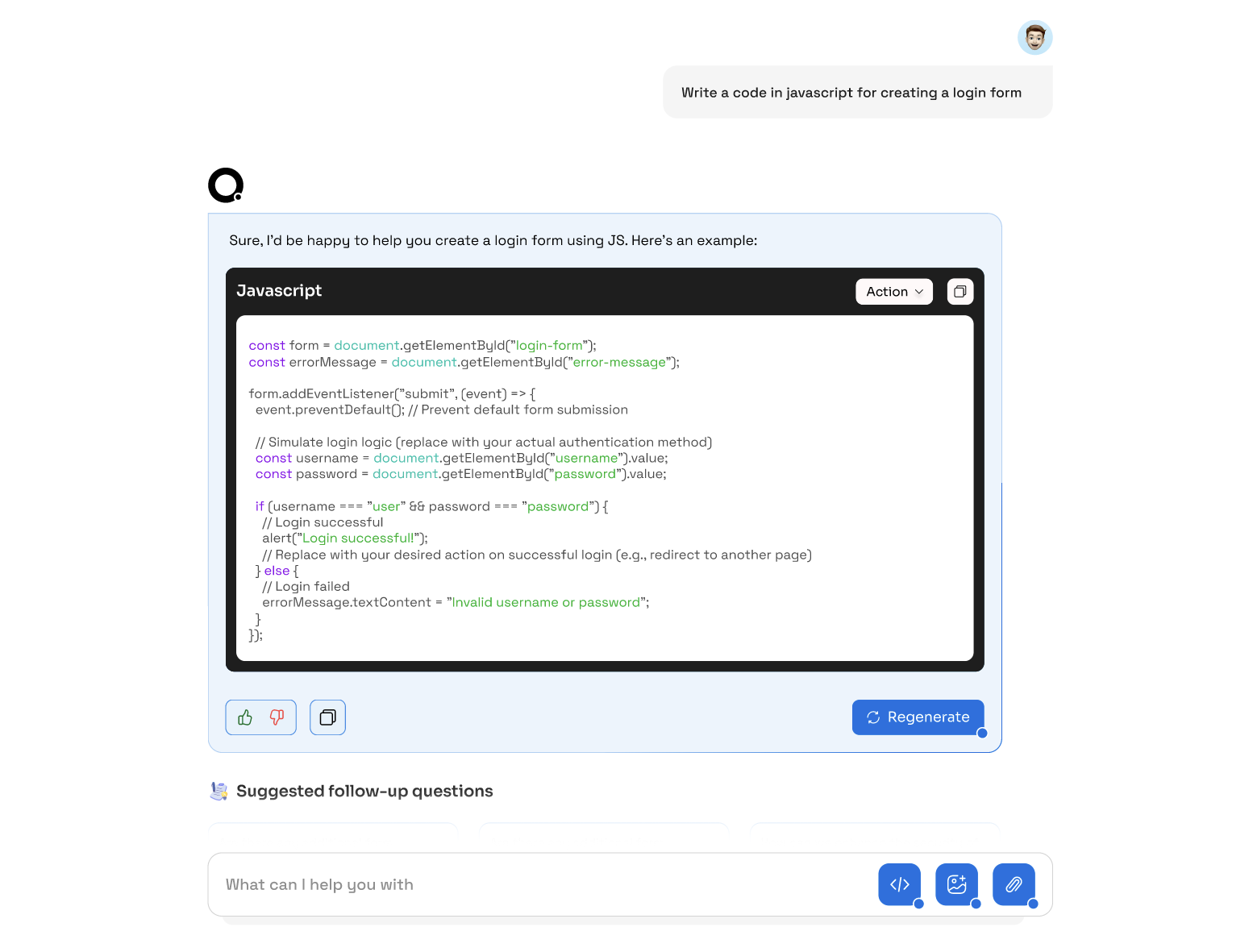

Teachers are still struggling with generative AI use, even as schools adopt policies and provide training on the technology, according to new survey research from the Center for Democracy & Technology (CDT).

The survey reveals that educators are still left to navigate many questions about responsible, safe student use of AI on their own. As a result, many have become overly reliant on ineffective generative AI content detection tools. That reliance is contributing to more disciplinary action against students and to persistent distrust of students.

CDT’s new survey research reveals that:

- Fifty-nine percent of teachers report that they are certain one or more of their students have used generative AI for school purposes, and 83 percent of teachers say they themselves have used ChatGPT or another generative AI tool for personal or school use.
- Although 80 percent of teachers report receiving formal training about generative AI use policies and procedures, and 72 percent say that their school has asked them for input on policies and procedures regarding student use of generative AI, only 28 percent say they have received guidance on how to respond if they suspect a student has used generative AI in ways that are not allowed, such as plagiarism.
- Sixty-eight percent of teachers report regularly using an AI content detection tool, despite known efficacy issues that disproportionately affect students who are protected by civil rights laws.
- Sixty-four percent of teachers say that one or more students at their school have gotten in trouble or experienced other negative consequences for using, or being accused of using, generative AI on a school assignment, a 16 percentage-point increase from last school year.
- Fifty-two percent of teachers agree that generative AI has made them more distrustful of whether their students’ work is actually their own, and this figure is even higher in schools that ban the technology.

“In our research last school year, we saw schools struggling to adopt policies surrounding the use of generative AI, and are heartened to see big gains since then,” said CDT President and CEO Alexandra Reeve Givens. “But the biggest risks of this technology being used in schools are going unaddressed, due to gaps in training and guidance to educators on the responsible use of generative AI and related detection tools. As a result, teachers remain distrustful of students, and more students are getting in trouble.”

“Generative AI tools in the education sector are not going away, and schools are responsible for providing detailed guidance to educators on not just the technology’s benefits, but more importantly on its shortcomings and how to mitigate them responsibly,” Givens added.

“Since generative AI caught the education sector off-guard last school year, there have been plenty of think pieces about whether usage is a good or a bad thing. What we know for sure is that generative AI, and the tools used to detect it, require schools to provide better training and guidance to educators,” said Elizabeth Laird, Director of the Equity in Civic Technology Project at CDT.

Laird added, “Education leaders should not stop at addressing generative AI, as it is only one example of AI use in schools right now. We look forward to the U.S. Department of Education fulfilling its commitment to releasing guidance on safe, responsible, and nondiscriminatory uses of AI in education, as detailed in the Biden Administration’s executive order. In the meantime, schools should continue preparing teachers to make day-to-day decisions that support safe, responsible use of all AI-driven tools.”

Protected classes of students remain at disproportionate risk of disciplinary action related to generative AI use. One such group is students with disabilities: licensed special education teachers are more likely to report regularly using an AI content detection tool than teachers who are not licensed in special education (76 percent versus 62 percent). As previously reported by CDT, students with an IEP and/or a 504 plan use generative AI more than their peers, potentially placing them at greater risk of negative consequences for that use.

This press release originally appeared online.