Does Generative AI Content Have a Place in Social Media?

While generative AI is the trend of the moment, and every tech-related company is trying to catch the AI wave, I remain unconvinced that the current slew of gen AI offerings being foisted upon us is beneficial, useful, or even that interesting in a social media context.

For example, Meta recently added its latest AI chatbot to search on Facebook, Instagram, WhatsApp and Messenger.

So now, when you go to search for something in any of these apps, you’re confronted with a range of “helpful” suggestions of other things that you might want to look for.

Like (and these are real examples):

- Write a food truck business plan

- 5 tips for grooming dogs

- Strawberry shortcake recipe

- Camping packing list

These are just some of the suggestions that Instagram pushed to me when I checked right now, and I have approximately zero interest in any of them. So they’re seemingly not targeted, not personalized to my interests, and, in the case of the first one, so ridiculously niche that I have no idea why it would even be displayed.

But also, who goes to the search tab with no idea of what they’re searching for anyway? Why would these suggestions be displayed to a user who’s clearly already headed to search to look for something specific?

Is this an expected evolution in user behavior, that users with nothing to do will just start floating over to the search tab, and hope that it shows them a topic they might be interested in? And isn’t that what the Explore tab does already?

I don’t know, it seems like a pretty hackneyed way of jamming in an AI assistant, which I don’t think anybody was asking for.

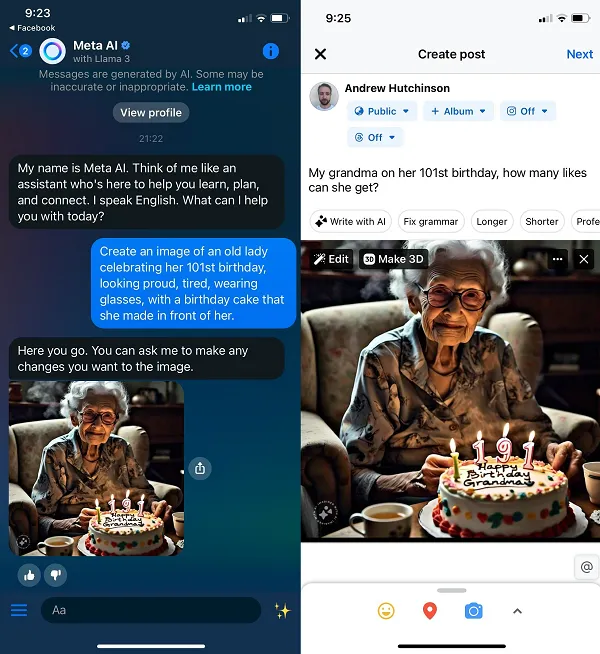

Though that’s before you consider the expanded capacity of Meta’s new AI assistant, which can also now generate images for you in-stream.

And there are suggested prompts for that too, including:

- Imagine an orchestra

- Imagine a dragon

- Imagine a neon frog

- Imagine animals at brunch

Why? Why do these prompts even exist? Who’s scrolling so mindlessly through an app that they just go “oh, cool, a neon frog, let’s see what that looks like?”

It’s like a randomization engine for stoners, though even in that context it likely wouldn’t be valuable, because these suggestions are displayed alongside other AI search prompts that are likely to trigger paranoia and fear.

So seemingly not a heap of value. And that’s before you even get to the images themselves.

Over the last week, for example, Meta’s Facebook Page has been sharing “Movie Mashup Challenge” AI generations, in order to showcase the fun of its image generation tools.

What is that? How would an image of haunting, hyperreal Muppets around a disfigured old man promote the use of these tools?

Or this:

These sorts of clumsy mash-ups of real and fake end up looking so obviously AI-generated that they’re off-putting, and they’re becoming increasingly so as a rising number of cheap websites and outlets expand their use of AI to create their visuals.

Also, which way is the wind even blowing in this image?

Now, I get that this is still relatively early days for AI generation, and the fact that these systems are able to create images this good is an amazing technical achievement. And they’ll only get better.

This week, for example, indie band “Washed Out” became the first to release a fully AI-generated video clip, created via OpenAI’s Sora.

That would have been an unthinkable project even a year ago. Even so, there’s something immensely disconcerting about the odd characters and shifting body shapes that stare out at you from these computer-simulated scenes.

Such tools will get better, giving people more capacity to come up with all-new ways to use AI across the digital spectrum. But the question remains: Are these tools actually “social”, and do they belong on platforms that were designed to help connect humans through shared experiences?

I’ll tell you what Facebook’s tools do facilitate: easy engagement bait.

Facebook is currently awash with these sorts of rubbish AI images, with users fishing for engagement. And many of them are generating thousands, even millions, of engagements, despite the images being completely and obviously fake.

Though “obvious” in this context is relative, as some Facebook users are clearly not as digitally literate, and are happy to tap that thumbs up on any image that plays the right notes.

I didn’t actually post this image, but you can see how, if anything, Meta is making these types of scams easier by incorporating its AI tools in-stream.

Meta has added new rules to help catch out AI content, and as you can see in the above creation, there is also a Meta watermark in the bottom-left corner. Though that’s pretty easy to get around, and I’m not convinced that providing these options in its apps is driving any real benefit, or at least, not to a level that would outweigh the negatives.

In essence, I still don’t think we’ve found a killer use case for AI in social apps, and outside of improved algorithms, and maybe conversational search, I’m not sure that there is one.

But every platform is jumping on the AI trend either way, for fear of being left behind if it does become a transformative element.