Event Driven Architecture using Amazon EventBridge – Part 1

This post is co-authored with Andy Suarez and Kevin Breton (from KnowBe4).

For any successful growing organization, there comes a point when the technical architecture struggles to meet the demands of an expanding and interconnected business environment. The increasing complexity and technical debt in legacy systems create pain points that constrain innovation. To overcome these challenges, many companies are exploring event-driven architectures (EDA) and leveraging robust cloud services.

In this blog post, we will walk through the transformation journey to EDA, highlighting the obstacles faced and strategic decisions made. It focuses on how KnowBe4, a leading security awareness training provider, implemented EDA on Amazon Web Services using Amazon EventBridge to enable flexible and scalable software engineering. This decoupled event-driven approach allows KnowBe4 to develop, deploy and maintain components independently, react to changes quickly, and improve the efficiency and agility of their software engineering. By detailing KnowBe4’s experience, this post provides insights applicable to any organization looking to evolve its architecture for continued growth.

KnowBe4’s event-driven architecture vision

KnowBe4 has embarked on a journey to adopt an event-driven architecture strategy with the goals of increasing development velocity, decoupling services, enhancing resiliency, leveraging event replay capabilities, achieving scalability through asynchronous processing, and easily onboarding new teams and services. Event-driven architecture is an effective way to create loosely coupled communication between different applications. Loosely coupled applications can scale and fail independently, increasing the resilience of the system. Development teams can build and release features for their application quickly, without worrying about the behavior of other applications in the system. In addition, new features can be added on top of existing events without changing the rest of the applications.

Figure 1 depicts a conceptual diagram of the event-driven architecture envisioned by KnowBe4. It has a central event bus that connects publishers and consumers. Publishers generate events and publish them to the event bus. Consumers subscribe to the event bus and specify filters to receive relevant events. The event bus routes published events to the appropriate consumers based on their filters, enabling decoupled communication between publishers and consumers.
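To make the filtering step concrete, here is a minimal Python sketch of how a rule's event pattern could be matched against an event. It implements only exact-value matching, a simplified subset of EventBridge pattern semantics: leaf values in the pattern are lists of allowed values, nested objects are matched recursively, and event fields absent from the pattern are ignored. EventBridge itself supports further operators (for example prefix and numeric matching) that are omitted here, and the function name `matches` is hypothetical.

```python
from typing import Any, Dict


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Return True if `event` satisfies a simplified, exact-match event pattern.

    Each leaf in the pattern is a list of allowed values, nested dicts are
    matched recursively, and event fields the pattern does not mention are
    ignored. Content filters such as prefix, numeric, or exists are not supported.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: the event field must be an object that also matches.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            # Leaf: match if the value (or any element of an array value) is allowed.
            values = actual if isinstance(actual, list) else [actual]
            if not any(v in expected for v in values):
                return False
    return True


rule = {"source": ["svc1"], "detail-type": ["my.event"]}
print(matches(rule, {"source": "svc1", "detail-type": "my.event", "detail": {}}))
```

A consumer's filter is then just a pattern like `rule` above; the bus evaluates every rule and delivers the event to each target whose pattern matches.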

Figure 1 Event Driven Architecture to decouple publisher and subscriber

KnowBe4 needed events to be replayable without producing unintended side effects. This requires idempotent event processing, a property often needed in distributed systems. When a service is down, events need to be replayed once it becomes healthy, so subscribers must ensure that receiving an event multiple times does not cause any adverse side effects. Schema validation is also a requirement: events have a published JSON schema and must remain backward compatible.
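The replay requirement can be illustrated with a minimal Python sketch of an idempotent subscriber. It assumes each event carries a unique key in detail.metadata.idempotency_key (the field defined in the metadata schema later in this post) and keeps processed keys in an in-memory set purely for illustration. The class name `IdempotentSubscriber` is hypothetical; a real subscriber would persist processed keys durably, for example with a conditional write to a database.

```python
from typing import Any, Callable, Dict, Set


class IdempotentSubscriber:
    """Wrap an event handler so duplicate or replayed events are processed once.

    Assumption: each event has a unique detail.metadata.idempotency_key.
    The in-memory set is for illustration only; production code would use
    durable storage so the record survives restarts.
    """

    def __init__(self, handler: Callable[[Dict[str, Any]], None]) -> None:
        self._handler = handler
        self._processed: Set[str] = set()

    def receive(self, event: Dict[str, Any]) -> bool:
        """Process the event unless its key was already seen; return True if the handler ran."""
        key = event["detail"]["metadata"]["idempotency_key"]
        if key in self._processed:
            return False  # duplicate delivery or replay: skip side effects
        self._handler(event)
        # Record the key only after the handler succeeds, so a failed attempt can be retried.
        self._processed.add(key)
        return True


applied = []
sub = IdempotentSubscriber(lambda e: applied.append(e["detail"]["data"]["id"]))
evt = {"detail": {"data": {"id": "12345"}, "metadata": {"idempotency_key": "b7ba9f44b1c74c359943e00c"}}}
sub.receive(evt)
sub.receive(evt)  # replayed copy is ignored
print(applied)
```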

Challenges

KnowBe4 has engineering teams across different geographies, working on different codebases, products, and solutions. The main question was: “How can we accelerate and improve our business by adopting EDA?” The participation of key members from different teams was crucial in developing the overall event-driven design. Their involvement fostered a sense of ownership and motivated them to promote the project within their respective teams. Teams were given a mechanism to publish or subscribe to events without a significant amount of ramp-up time, creating a low barrier to entry for adopting the architecture.

Low barrier of entry

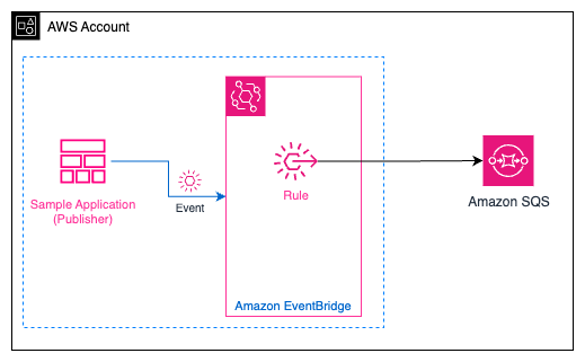

A key to EDA adoption is simplifying the creation of publishers and subscribers. Using Infrastructure as Code (IaC) reduced the level of effort and promoted consistency and supportability. IaC addressed challenges with cross-account resource policies, shared secrets, Amazon EventBridge rules, event forwarding, and event cataloging. A plug-and-play blueprint reference application was built to demonstrate how to publish an event and subscribe to one. The sample application published an event to a bus and created a rule targeting an Amazon Simple Queue Service (Amazon SQS) queue. Proofs of concept (Figure 2) were iterated several times, and feedback was captured.
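As a rough illustration of what a blueprint publisher sends to a bus, this Python sketch builds one entry in the shape the EventBridge PutEvents API expects (EventBusName, Source, DetailType, Detail, Time). The helper `build_put_events_entry` is hypothetical, not code from KnowBe4's sample application. One detail worth noting is that Detail must be a JSON-encoded string rather than a nested object. In the final architecture described later in this post, publishers go through the Publish Event API instead of calling PutEvents directly.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def build_put_events_entry(
    bus_name: str,
    source: str,
    detail_type: str,
    detail: Dict[str, Any],
    time: Optional[datetime] = None,
) -> Dict[str, Any]:
    """Build one entry in the shape expected by EventBridge PutEvents.

    Detail is serialized to a JSON string, as the API requires.
    """
    return {
        "EventBusName": bus_name,
        "Source": source,
        "DetailType": detail_type,
        "Detail": json.dumps(detail),
        "Time": time or datetime.now(timezone.utc),
    }


entry = build_put_events_entry("bus-1", "svc1", "my.event", {"data": {"id": "12345"}})
# With boto3 installed and AWS credentials configured, the entry could be sent with:
#   boto3.client("events").put_events(Entries=[entry])
print(entry["Detail"])
```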

Figure 2 Sample Application to publish events

Bus Topologies

At KnowBe4, multiple development teams work across multiple AWS accounts. EventBridge uses a bus-to-bus delivery mechanism for cross-account delivery. A bus topology was chosen because it fit well with KnowBe4’s organizational structure and team dynamics.

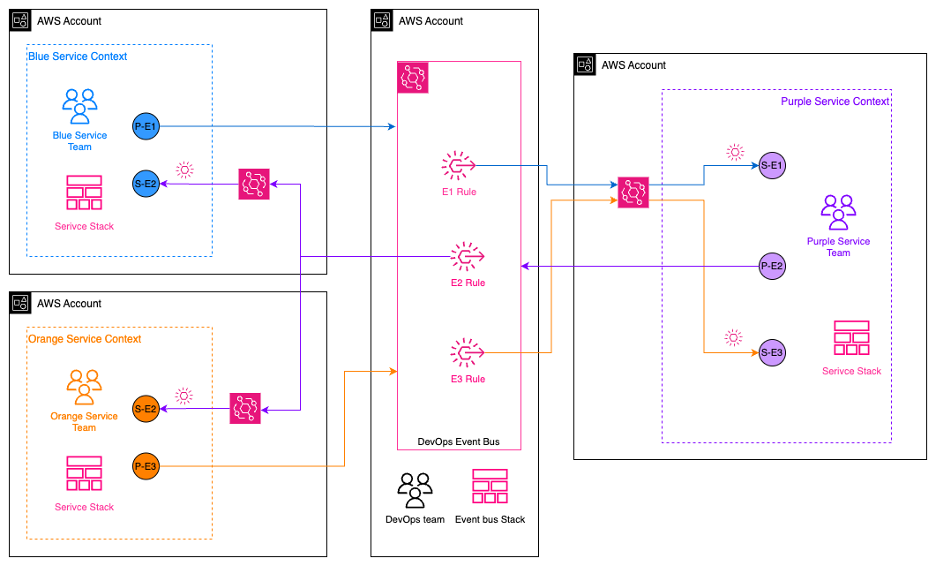

Topology: Single-bus, multi-account pattern

The single-bus, multi-account pattern (Figure 3) requires all publishers to publish events to a single core bus. Subscribers place one rule on this bus to forward events to a bus in their AWS account.

Figure 3 Single-bus, Multi-account Pattern (Reference: Steven Liedig, AWS re:Invent 2022/API307)

Because the single-bus, multi-account pattern is easy to support, it was chosen as the preferred EDA pattern. Subscribers place one generic rule per team on the core bus to forward events to a bus in their account. The subscriber then places detailed rules on their local bus to invoke their targets. An event archive was also configured on the core bus, providing the ability to replay events after a service outage or for other reasons.
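The two-tier routing and the core-bus archive can be illustrated with a small in-memory Python simulation. The `Bus` class, the bus names, and the matcher are hypothetical and heavily simplified: patterns are top-level exact matches only, and archive and replay are reduced to a list. In EventBridge, cross-account forwarding is configured as a rule whose target is the event bus in the subscriber's account.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Event = Dict[str, Any]
Pattern = Dict[str, List[Any]]


def top_level_match(pattern: Pattern, event: Event) -> bool:
    """Simplified matcher: every pattern key must be present with an allowed value."""
    return all(event.get(key) in allowed for key, allowed in pattern.items())


class Bus:
    """In-memory stand-in for an event bus with rules and an optional archive."""

    def __init__(self, name: str, archive: bool = False) -> None:
        self.name = name
        self.rules: List[Tuple[Pattern, Callable[[Event], None]]] = []
        self.archive: Optional[List[Event]] = [] if archive else None

    def add_rule(self, pattern: Pattern, target: Callable[[Event], None]) -> None:
        self.rules.append((pattern, target))

    def put(self, event: Event) -> None:
        if self.archive is not None:
            self.archive.append(event)
        self._route(event)

    def replay(self) -> None:
        """Re-deliver archived events to the current rules, e.g. after an outage."""
        for event in list(self.archive or []):
            self._route(event)

    def _route(self, event: Event) -> None:
        for pattern, target in self.rules:
            if top_level_match(pattern, event):
                target(event)


core = Bus("core", archive=True)
team_bus = Bus("team-a")  # hypothetical bus in a subscriber's account
received: List[Event] = []
# One generic rule per team on the core bus forwards events to the team's bus.
core.add_rule({"source": ["svc1"]}, team_bus.put)
# Detailed rules live on the team's local bus and invoke the actual target.
team_bus.add_rule({"detail-type": ["my.event"]}, received.append)
core.put({"source": "svc1", "detail-type": "my.event"})
core.put({"source": "svc1", "detail-type": "other.event"})
print(len(received))
```

Calling `core.replay()` delivers the matching archived event to the team's target a second time, which is exactly why subscribers must process events idempotently.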

Defining standard event schemas for consistency and clarity

If the system allowed any type of event to be published, then it could quickly get out of hand and become chaotic. Subscribers could have a hard time figuring out what is published and what is in the event payload.

The events and their associated schemas were documented so that anyone, including a new team, can see which events are published and what data each event includes. All events inherit from a base schema that defines commonly used fields consistently across products.

Event payloads

In an event-driven architecture, event payloads contain the relevant data and metadata associated with each event that is published to the event bus.

Basic event payload structure

The minimum required event data that publishers need to provide:

```json
{
  "detail": {},
  "detail-type": "my.event",
  "source": "service1",
  "time": "2024-03-18T17:55:26+00:00"
}
```

Where:

| Field | Description |
| --- | --- |
| detail | Custom event data |
| detail-type | The event name |
| source | Publisher service |
| time | When the event occurred |
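A publisher or the API could check this minimum envelope before doing anything else. The Python sketch below is hypothetical (the function name and error messages are illustrative, not KnowBe4's): it only verifies that the four fields are present, that detail is an object, and that time parses as an ISO 8601 timestamp.

```python
from datetime import datetime
from typing import Any, Dict, List

REQUIRED_FIELDS = ("detail", "detail-type", "source", "time")


def basic_payload_errors(event: Dict[str, Any]) -> List[str]:
    """Return the problems found in the minimum event envelope (empty list if valid)."""
    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in event]
    if "detail" in event and not isinstance(event["detail"], dict):
        errors.append("detail must be a JSON object")
    if "time" in event:
        try:
            # fromisoformat accepts offsets such as "+00:00" (Python 3.7+).
            datetime.fromisoformat(event["time"])
        except (TypeError, ValueError):
            errors.append("time must be an ISO 8601 timestamp")
    return errors


print(basic_payload_errors({"detail": {}, "source": "service1"}))
```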

Additional Data / Metadata Schema

Publishers can use the detail section of the message to send additional information. To standardize commonly used fields across all events, the team adopted the popular practice of adding data and metadata sections inside the detail section. More details of this pattern can be found in the article, The power of Amazon EventBridge is in its detail by Sheen Brisals.

```json
{
  "detail": {
    "data": {
      "id": "12345"
    },
    "metadata": {
      "principal": "krn:env-svc1:us-east-1:ksat/acc/ab34/user/xy1",
      "resource": "krn:env-svc1:us-east-1:ksat/acc/ab34/user/c1024",
      "context_id": "89b69f1229174053a10b16220cd02cb7",
      "parent_context_id": "",
      "external_ip_address": "128.19.10.1",
      "idempotency_key": "b7ba9f4b6a4c359943e00c",
      "schema_version": "2024-03-04",
      "timestamp": "2024-03-24T15:26:31-04:00"
    }
  },
  "detail-type": "my.event",
  "source": "svc1",
  "time": "2024-03-18T17:55:26+00:00"
}
```

Where:

| Field | Description |
| --- | --- |
| detail.data | Contains specific event information |
| detail.metadata.principal | Who performed the action |
| detail.metadata.resource | What it happened to |
| detail.metadata.context_id | uuid4 for relating multiple events |
| detail.metadata.parent_context_id | uuid4 for relating multiple events in a hierarchy |
| detail.metadata.external_ip_address | If caused by an end user, their IP address |
| detail.metadata.idempotency_key | uuid4 for subscribers to identify if the event has already been processed |
| detail.metadata.schema_version | Date of the schema version |
| detail.metadata.timestamp | EventBridge truncates milliseconds; this field is more granular |
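A publisher-side helper that fills in this envelope might look like the following Python sketch. The function name and signature are hypothetical. It generates context_id (when the caller does not supply one) and idempotency_key as uuid4 hex strings and records a millisecond-precision UTC timestamp, following the field descriptions above.

```python
import uuid
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def build_detail(
    data: Dict[str, Any],
    principal: str,
    resource: str,
    schema_version: str,
    external_ip_address: str = "",
    parent_context_id: str = "",
    context_id: Optional[str] = None,
) -> Dict[str, Any]:
    """Wrap event-specific data in a data/metadata envelope (hypothetical helper)."""
    return {
        "data": data,
        "metadata": {
            "principal": principal,
            "resource": resource,
            # Reuse the caller's context_id to relate events; otherwise start a new one.
            "context_id": context_id or uuid.uuid4().hex,
            "parent_context_id": parent_context_id,
            "external_ip_address": external_ip_address,
            # Generated once when the event is built; resend the same dict on retry
            # so subscribers see a stable key.
            "idempotency_key": uuid.uuid4().hex,
            "schema_version": schema_version,
            # Millisecond precision, since EventBridge's own time field drops milliseconds.
            "timestamp": datetime.now(timezone.utc).isoformat(timespec="milliseconds"),
        },
    }


detail = build_detail(
    {"id": "12345"},
    principal="krn:env-svc1:us-east-1:ksat/acc/ab34/user/xy1",
    resource="krn:env-svc1:us-east-1:ksat/acc/ab34/user/c1024",
    schema_version="2024-03-04",
)
print(detail["metadata"]["timestamp"])
```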

Large payloads

EventBridge has an event size limit of 256 KB. Although events larger than 256 KB are rare, the architecture still needs to be able to handle them.

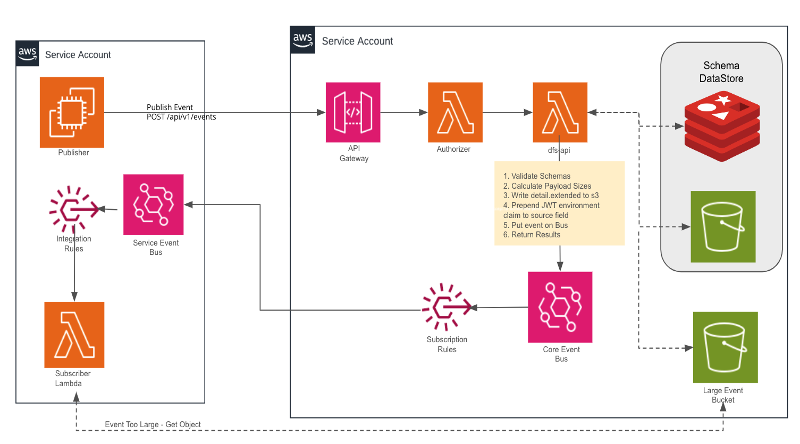

A section called extended was added to the event payload. The extended section is designed to hold unbounded arrays, lists, or anything else that might increase the event payload size. This is an implementation of the claim check pattern, which allows large amounts of data to be stored externally while the event payload keeps a reference to it. Developers must be aware of this pattern when writing code that publishes events.

```json
{
  "detail": {
    "extended": {
      "groups": ["a", "b", "c"]
    }
  }
}
```

When an event is published, the AWS Lambda function named dfs-api (Figure 4) calculates the event size. If it is over 256 KB, the detail.extended section is written to Amazon Simple Storage Service (Amazon S3) and its contents are replaced with the Amazon S3 URL. This field cannot be targeted by a rule because it is not guaranteed to be in the event.

```json
{
  "detail": {
    "extended": {
      "s3url": "s3://<file>"
    }
  }
}
```

Schema enforcement

Each event is required to have a JSON schema file that describes the required and optional fields. This file is deployed to an Amazon S3 bucket when the publisher is deployed. The Publish Event API reads this file and verifies that the JSON data in the event is valid. If schema validation fails, the event is not published, and an HTTP 400 (client error) response is returned along with the output from the schema validation.
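The validation step can be sketched in Python. Production code would normally rely on a complete JSON Schema validator; to stay self-contained, the hypothetical `validate` function below checks only a small subset of JSON Schema (type, required, properties, and allOf, the last being one way to express inheritance from a base schema). The 200 success response is an assumption; the post only specifies the 400 response on failure.

```python
from typing import Any, Dict, List, Tuple

# Subset of JSON Schema type names mapped to Python types.
# "number"/"integer" are omitted on purpose (bool is a subclass of int in Python).
JSON_TYPES = {"object": dict, "array": list, "string": str, "boolean": bool}


def validate(instance: Any, schema: Dict[str, Any], path: str = "$") -> List[str]:
    """Check a small subset of JSON Schema: type, required, properties, and allOf."""
    expected = schema.get("type")
    if expected in JSON_TYPES and not isinstance(instance, JSON_TYPES[expected]):
        return [f"{path}: expected {expected}"]
    errors: List[str] = []
    # allOf lets an event schema "inherit" the rules of a shared base schema.
    for subschema in schema.get("allOf", []):
        errors.extend(validate(instance, subschema, path))
    if isinstance(instance, dict):
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}: missing required property '{name}'")
        for name, subschema in schema.get("properties", {}).items():
            if name in instance:
                errors.extend(validate(instance[name], subschema, f"{path}.{name}"))
    return errors


def publish_or_reject(event: Dict[str, Any], schema: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    """Return an HTTP-style (status, body) pair for a publish request."""
    errors = validate(event, schema)
    if errors:
        # Invalid events are not published; the validation output goes back to the caller.
        return 400, {"errors": errors}
    # Placeholder: this is where the event would be posted to the bus.
    return 200, {"published": True}
```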

To make event-driven architectures work effectively, it is crucial to document the event schemas, publishers, and subscribers thoroughly. Clear documentation enables developers to easily discover, comprehend, and leverage the relationships between events, publishers, and subscribers across the architecture. The KnowBe4 team created an event catalog using an open-source solution called EventCatalog. This allowed team members to view the domains, services, publishers, subscribers, events, and schemas all in one place.

Publishers use the IaC modules to register the events their services publish, along with their schemas. Subscribers also use the IaC modules to subscribe to these events. These IaC modules insert the event metadata into Amazon DynamoDB.

The KnowBe4 team built the event catalog using the EventCatalog Plugin Generator, which reads from DynamoDB and creates the markdown pages for the catalog. A pipeline job runs nightly to build the event catalog and keep it up to date.
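The catalog build step, reading registration records and emitting markdown pages, might look roughly like this Python sketch. The record shape (event, role, service), the service names, and the page layout are hypothetical: they are neither the actual DynamoDB item format nor the page format that EventCatalog or its generators expect.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def render_catalog_pages(records: Iterable[Dict[str, str]]) -> Dict[str, str]:
    """Group registration records by event and render one markdown page per event.

    Assumed (hypothetical) record shape, as an IaC module might write it:
      {"event": "my.event", "role": "publisher" or "subscriber", "service": "svc1"}
    """
    roles: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: {"publisher": [], "subscriber": []})
    for rec in records:
        roles[rec["event"]][rec["role"]].append(rec["service"])
    pages: Dict[str, str] = {}
    for event, who in sorted(roles.items()):
        lines = [f"# {event}", "", "## Publishers"]
        lines += [f"- {s}" for s in sorted(who["publisher"])] or ["- (none)"]
        lines += ["", "## Subscribers"]
        lines += [f"- {s}" for s in sorted(who["subscriber"])] or ["- (none)"]
        pages[f"events/{event}.md"] = "\n".join(lines) + "\n"
    return pages


pages = render_catalog_pages([
    {"event": "my.event", "role": "publisher", "service": "svc1"},
    {"event": "my.event", "role": "subscriber", "service": "audit-log"},  # hypothetical service
])
print(pages["events/my.event.md"])
```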

Publish Event API

The JWT authorizer and the HTTP POST method handler are Lambda functions written in Rust, a language known for its concurrency capabilities and built-in safety features. In an ecosystem where every millisecond counts, Rust’s performance advantages become pivotal for quicker event processing.

This API is responsible for validating the event schema. It reads the schema from Amazon ElastiCache for Redis, falling back to Amazon S3 on a cache miss. It also calculates each event’s payload size. If the event size is over 256 KB, the detail.extended section is written to Amazon S3 and replaced with an S3 URL pointing to this data. The API then prepends the source field with the JWT environment claim and posts the event to the bus.
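The size check and claim-check offload can be sketched in Python as follows. This is not KnowBe4’s Rust implementation: a dictionary stands in for Amazon S3, the bucket name and key layout are hypothetical, and the size is approximated as the UTF-8 length of the serialized event, whereas EventBridge computes entry size from specific fields (such as Source, DetailType, Detail, and Resources).

```python
import json
import uuid
from typing import Any, Dict

MAX_EVENT_BYTES = 256 * 1024  # EventBridge's per-event size limit (256 KB)


def event_size(event: Dict[str, Any]) -> int:
    """Approximate the event size as the UTF-8 length of its JSON serialization."""
    return len(json.dumps(event).encode("utf-8"))


def offload_extended(event: Dict[str, Any], store: Dict[str, bytes], bucket: str) -> Dict[str, Any]:
    """Claim check: move detail.extended to external storage if the event is too large.

    `store` stands in for Amazon S3 (object key -> bytes) and `bucket` is a
    hypothetical bucket name. Returns a new event; the input is not mutated.
    A real implementation would also reject events still too large afterwards.
    """
    if event_size(event) <= MAX_EVENT_BYTES or "extended" not in event.get("detail", {}):
        return event
    key = f"extended/{uuid.uuid4().hex}.json"
    store[key] = json.dumps(event["detail"]["extended"]).encode("utf-8")
    # Replace the bulky section with a reference that subscribers can follow.
    detail = {**event["detail"], "extended": {"s3url": f"s3://{bucket}/{key}"}}
    return {**event, "detail": detail}


store: Dict[str, bytes] = {}
big = {"source": "svc1", "detail": {"data": {"id": "1"}, "extended": {"groups": ["g" * 100] * 3000}}}
slim = offload_extended(big, store, "example-bucket")
print(slim["detail"]["extended"])
```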

Example HTTP POST payload:

```json
{
  "bus": "bus-1",
  "events": [
    {
      "detail": {
        "data": {
          "id": "12345"
        },
        "metadata": {
          "principal": "krn:env1-svc1:us-east-1:ksat/acc/ab34/user/xy1",
          "resource": "krn:env1-svc1:us-east-1:ksat/acc/ab34/user/c1024",
          "context_id": "89b69f1229a10b16220cd02cb7",
          "external_ip_address": "1.19.10.1",
          "idempotency_key": "b7ba9f44b1c74c359943e00c",
          "parent_context_id": "",
          "schema_version": "1",
          "timestamp": "2024-03-24T15:26:31-04:00"
        }
      },
      "detail-type": "my.event",
      "resources": [],
      "source": "svc1",
      "time": "2024-03-18T17:55:26+00:00"
    }
  ]
}
```

Putting it all together

The event-driven architecture implemented by KnowBe4 provides a cohesive framework for publishing, routing, and consuming events across the organization.

Event Driven Architecture

To publish events to the core bus, an Amazon API Gateway REST API was created. Publishers were prohibited from publishing events directly to the core bus. The REST API is secured by AWS WAF and a JWT authorizer to control access. The overall system architecture (Figure 4) shows how the Amazon API Gateway REST API serves as the secure entry point for publishing events to the core event bus.

Figure 4 Event Driven Architecture

Event Publishing Performance

With everything built and running in AWS, the following strategy was used to measure the throughput of the system:

- Custom Datadog Dashboards leveraging Amazon CloudWatch metrics.

- Datadog alerts such as FailedInvocations and InvocationsSentToDLQ.

A load test scenario was created in which 90% of requests were regular events, 9% were large payloads that offload part of the event payload to Amazon S3, 0.5% were missing a schema file, and 0.5% had a payload that fails schema validation.
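A traffic mix like this could be generated with weighted random sampling, as in this Python sketch. The scenario names and the use of `random.choices` are assumptions for illustration; the post does not say how KnowBe4’s load-test tooling assigned scenarios to requests.

```python
import random
from collections import Counter
from typing import Dict, List

# Scenario weights taken from the load test description (percentages).
SCENARIO_WEIGHTS: Dict[str, float] = {
    "regular": 90.0,
    "large_payload": 9.0,
    "missing_schema": 0.5,
    "invalid_schema": 0.5,
}


def sample_scenarios(n: int, seed: int = 0) -> List[str]:
    """Draw n request scenarios according to SCENARIO_WEIGHTS (reproducible via seed)."""
    rng = random.Random(seed)
    names = list(SCENARIO_WEIGHTS)
    return rng.choices(names, weights=[SCENARIO_WEIGHTS[s] for s in names], k=n)


print(Counter(sample_scenarios(10_000)))
```

Seeding the generator keeps runs reproducible, so two load tests with the same seed send the same sequence of scenario types.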

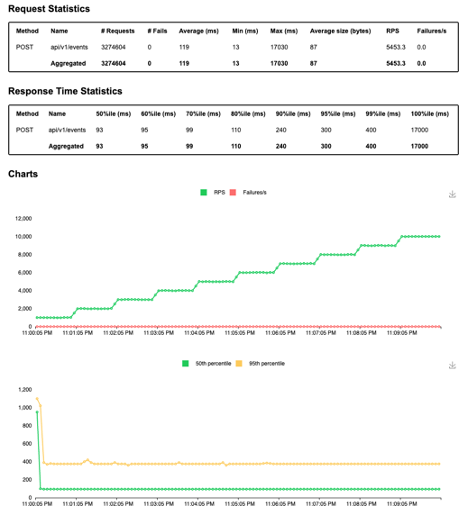

The load test ramps up to 1,000 requests per minute. Figure 5 is a screenshot from the load test that reached 10,000 requests per second.

Figure 5 Load test results

The system was able to reach approximately 20,000 requests per second before encountering any errors.

Performance

Figure 6 shows the performance metrics of AWS services during load testing. It includes graphs of Lambda invocations, duration, and concurrency; API Gateway requests and latency; and EventBridge invocations over the duration of the test.

Figure 6 Performance metrics of AWS Services during load test

Conclusion

With teams at KnowBe4 now releasing features a quarter early by using the sample application to kick-start their development, the adoption of event-driven architecture has proven to be a success. There are currently 235 event types being published, with over 3.6 billion events published in the last four months. The features built on EDA have shipped with 99.99% uptime and increased fault tolerance through retries and replays. The services have easily achieved asynchronous processing and have started to decouple their dependencies from other services.

Part 2 of this series will walk through an audit log implementation, one of the first event subscribers at KnowBe4.