Exploring How ChatGPT Might Design A Robot

Robot looking at his hand. 3D illustration

It was only a matter of time before we started thinking about how robots might design other robots.

In fact, I’ve been having these conversations with people ever since ChatGPT arrived, as we imagine a future where autonomous entities self-replicate. To some, that’s enough to send a shiver down the spine; to others, it’s valuable theory.

Now, our MIT teams are working on this kind of challenge, partly to see what’s possible.

I wanted to showcase some of the work going on in our MIT robotics lab, where researchers are probing the limits of what they call “robotic soft co-design” with AI.

Let’s start with the criteria: what components do you need to consider in robotic design? According to lab researchers presenting on the topic, creators should plan for aspects like:

· The robot’s shape

· Actuator and sensor placement

· Material properties (what it’s made of, textures, etc.)
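To make those three criteria concrete, here is a minimal Python sketch of how a single design candidate might be represented in code. The class and field names (`RobotDesign`, `Material`, the grid-based shape) are hypothetical illustrations, not the lab’s actual format. The point is that shape, placement, and material are coupled: a sensor placed on an empty cell of the body makes the whole design invalid.

```python
from dataclasses import dataclass, field


@dataclass
class Material:
    # Hypothetical material descriptor; the numbers used below are illustrative.
    name: str
    youngs_modulus_pa: float  # stiffness
    density_kg_m3: float


@dataclass
class RobotDesign:
    # Shape: a small 2D grid where True marks a cell filled with material.
    shape: list[list[bool]]
    material: Material
    actuators: list[tuple[int, int]] = field(default_factory=list)
    sensors: list[tuple[int, int]] = field(default_factory=list)

    def is_filled(self, cell: tuple[int, int]) -> bool:
        r, c = cell
        return 0 <= r < len(self.shape) and 0 <= c < len(self.shape[r]) and self.shape[r][c]

    def placement_errors(self) -> list[str]:
        """List every actuator or sensor that sits outside the robot's body."""
        errors = []
        for kind, cells in (("actuator", self.actuators), ("sensor", self.sensors)):
            for cell in cells:
                if not self.is_filled(cell):
                    errors.append(f"{kind} at {cell} is not on the body")
        return errors


# Usage: a tiny arch-shaped soft robot with one misplaced sensor.
silicone = Material("soft silicone", youngs_modulus_pa=1e6, density_kg_m3=1100.0)
design = RobotDesign(
    shape=[[True, True, True],
           [True, False, True]],
    material=silicone,
    actuators=[(1, 0), (1, 2)],
    sensors=[(0, 1), (1, 1)],  # (1, 1) is an empty cell
)
print(design.placement_errors())
```

A co-design system searches over all of these fields at once, so cheap validity checks like this one are the kind of thing that would run on every generated candidate.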

Then you also have to plan for functionality. To see how this plays out, take a look at this article from New Atlas about Figure’s new walking robot and how it responds to physical challenges.

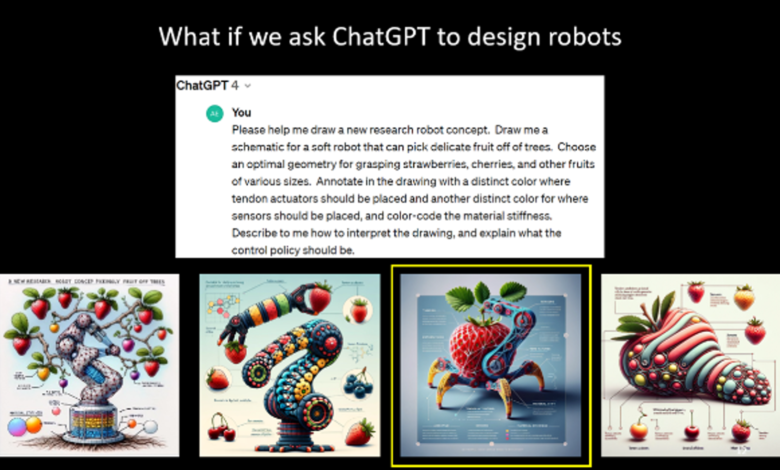

From there, I want to show off how the team asked ChatGPT to come up with robot designs. This is really next-level stuff! Here’s the prompt:

What if we ask ChatGPT to design robots

Look at what the program came up with!


It’s fast, creative (?!), BUT is it enough for robot design?

To me, it’s strange that AI dreamed up such aesthetic designs – and that the berry-picking robot has a body like a strawberry! Why? Who knows?

Design researchers have pointed out another oddity suggesting the AI is not yet ready to design its peers: the picking arm on top of the strawberry does not appear to be physically workable. Absent some kind of extremely strong composite, that looks like a fatal design flaw.

Based on the presentations of top researchers, though, we can start to address this using “diffusion models.” A diffusion model generates a design by starting from pure random noise and removing that noise step by step until a structured result remains. The researchers add an optimization step that steers this process toward designs with better physical utility.
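Here is a toy Python sketch of that denoising loop, assuming a tiny hypothetical “body” of six numbers and a standard deterministic (DDIM-style) reverse step. In a real system, `predict_noise` would be a neural network trained on many designs; here it is a closed-form stand-in that “knows” one target design (`TARGET_BODY`, also hypothetical), so it only shows the mechanics of noise turning into structure, not how a model learns.

```python
import math
import random

# Hypothetical "body": how much material sits in each of six segments.
TARGET_BODY = [0.0, 0.8, 1.0, 1.0, 0.8, 0.0]

# Linear noise schedule over T steps. alpha_bars[t] is the running product of
# (1 - beta) up to step t; sqrt(alpha_bars[t]) scales the clean design in x_t.
T = 50
betas = [1e-4 + (0.2 - 1e-4) * t / (T - 1) for t in range(T)]
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def predict_noise(x_t, t):
    """Stand-in for a trained network: the exact noise if the data were TARGET_BODY."""
    ab = alpha_bars[t]
    return [(x - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab)
            for x, x0 in zip(x_t, TARGET_BODY)]


def denoise_step(x_t, t):
    """One deterministic (DDIM-style) reverse step from noise level t to t - 1."""
    eps = predict_noise(x_t, t)
    ab = alpha_bars[t]
    # Estimate the clean design, then re-noise it to the previous, lower level.
    x0_hat = [(x - math.sqrt(1.0 - ab) * e) / math.sqrt(ab) for x, e in zip(x_t, eps)]
    ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
    return [math.sqrt(ab_prev) * x0 + math.sqrt(1.0 - ab_prev) * e
            for x0, e in zip(x0_hat, eps)]


random.seed(0)
noise_start = [random.gauss(0.0, 1.0) for _ in TARGET_BODY]  # pure noise
body = list(noise_start)
for t in reversed(range(T)):
    body = denoise_step(body, t)

print("max error vs target:", max(abs(a - b) for a, b in zip(body, TARGET_BODY)))
```

Because this stand-in denoiser already knows the answer, the loop lands on `TARGET_BODY`. With a learned model, each run from fresh noise would instead produce a different, plausible design, which is where the “dreaming up” comes from.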

“Imagine a robot is growing from noise to a structured body throughout diffusion; this embedding is like the ‘gene’ of the generated robot, and the optimization is modifying the gene that drives the generated robots toward better physical utility.” – Johnson Tsun-Hsuan Wang, MIT EECS PhD
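Wang’s “gene” idea can be sketched as gradient ascent on an embedding. Everything below is hypothetical: `generate_body` is a toy decoder standing in for the diffusion model, `physical_utility` is a made-up score standing in for a physics simulation, and the gradient is estimated with finite differences, a swapped-in technique rather than whatever the researchers actually use.

```python
import math

# Hypothetical decoder standing in for the generative model: it turns a
# two-number "gene" (embedding) into a four-segment body with values in (0, 1).
WEIGHTS = [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0), (-0.5, 1.0)]


def generate_body(gene):
    return [1.0 / (1.0 + math.exp(-(w0 * gene[0] + w1 * gene[1])))
            for w0, w1 in WEIGHTS]


def physical_utility(body):
    # Made-up stand-in for a physics simulation score: reward material in
    # the two middle (load-bearing) segments, penalize total material used.
    return body[1] + body[2] - 0.5 * sum(body)


def score(gene):
    return physical_utility(generate_body(gene))


def numeric_gradient(f, x, h=1e-5):
    """Central finite differences, treating f as a black box."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


gene = [0.0, 0.0]
initial_score = score(gene)
for _ in range(200):
    # Nudge the gene uphill; the body itself is never edited directly.
    gene = [g + 0.5 * d for g, d in zip(gene, numeric_gradient(score, gene))]
final_score = score(gene)
print(f"utility: {initial_score:.3f} -> {final_score:.3f}")
```

The design choice worth noticing is that the optimizer only ever touches the gene; the generator turns that gene into a body, which is what lets the search stay within the space of designs the model can produce.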

Tsun-Hsuan Wang presenting at CSAIL+IIA AI Summit, June 7, 2024

Experts actually talk about “breeding” robots with augmented diffusion models. As the work continues, they are looking for advances in areas like editability and the ability to inject human-designed elements into what a generative AI model produces.

Showing off a “walking teapot” design, for instance, Wang describes how all of us may one day be robot makers.

I think this whole thing is very instructive. As we see how AI can create, we learn more about its capacity in our world. And remember, as my friend Jeremy Wertheimer said, we are not ‘building’ these systems; we are discovering them and applying their power to projects. There’s a big difference.

In any case, I found all of this fascinating as we try to figure out what AI is really capable of. How long will it be until AI is “breeding” robots? And what does that mean for our society? Those are open questions for students, for researchers, and for the rest of us, too.


Portrait of Tsun-Hsuan Wang