Fujitsu to provide the world’s first enterprise-wide generative AI framework technology to meet changing needs of companies

TOKYO, June 4, 2024 – (JCN Newswire) – Fujitsu Limited today announced that, to promote the use of generative AI in enterprises, it has developed a generative AI framework for enterprises that flexibly responds to the diverse and changing needs of companies and makes it easier to handle the vast amounts of data that companies possess while complying with laws and regulations. Fujitsu will make the generative AI framework for enterprises available globally as part of the Fujitsu Kozuchi lineup starting in July 2024.

In recent years, in addition to general-purpose interactive large language models (LLMs), various specialized generative AI models have been developed. In enterprises in particular, however, barriers to the use of these models remain. These barriers include the difficulty of handling the large-scale data that companies need to use, the inability of generative AI to meet various requirements such as cost and response speed, and the need to comply with corporate rules and regulations.

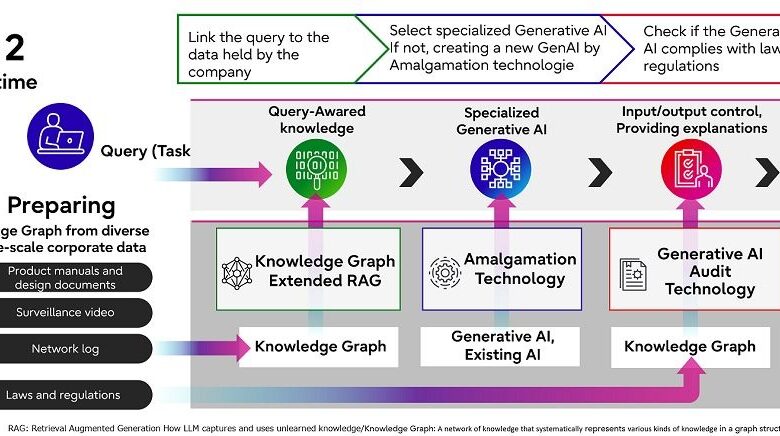

Fujitsu has developed a generative AI framework for enterprises to strengthen specialized AI that can solve these issues for companies. The new framework consists of knowledge graph extended retrieval-augmented generation (RAG), generative AI amalgamation technology, and the world’s first generative AI auditing technology. The knowledge graph extended RAG uses knowledge graphs to link the relationships within the large-scale data that companies possess and to enhance the data input to the generative AI. The generative AI amalgamation technology selects the model with the highest performance from multiple specialized generative AI models based on the input task, or automatically generates a model by combining them. The world’s first generative AI auditing technology enables explainable output that complies with laws and company regulations.

The technology framework

1. Knowledge graph extended RAG that overcomes the weaknesses of generative AI that cannot accurately reference large-scale data

Existing RAG technology (1), which lets generative AI reference relevant documents, is unable to accurately reference large-scale data. To solve this issue, Fujitsu developed its knowledge graph extended RAG. This technology advances existing RAG technology and expands the amount of data that LLMs can reference from the conventional hundreds of thousands or millions of tokens to more than ten million tokens by automatically generating knowledge graphs built from vast amounts of data, such as laws and company regulations, company manuals, and videos. This allows knowledge based on the relationships in the knowledge graph to be accurately given to the generative AI, which can then make logical inferences and show the basis for its output.

Over many years, Fujitsu has accumulated technologies related to knowledge graphs, such as methods for selecting and retaining input data. By developing an LLM that dynamically generates and utilizes knowledge graphs, it has achieved the world’s highest accuracy (2) on a multi-hop QA benchmark (3).
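As a rough illustration of how relationships in a knowledge graph can be followed across several hops and handed to a generative AI as context, the following is a minimal sketch. It is not Fujitsu's implementation: the triples, the function names (`build_graph`, `retrieve_facts`, `build_prompt`), and the breadth-first traversal are all assumptions made for illustration, and the automatic generation of the graph from documents is not attempted here.

```python
from collections import defaultdict, deque

# Hypothetical (subject, relation, object) triples. In a real system these
# would be extracted automatically from documents; here they are hand-written.
TRIPLES = [
    ("Contract A", "governed_by", "Regulation 12"),
    ("Regulation 12", "requires", "annual audit"),
    ("annual audit", "performed_by", "Compliance Team"),
    ("Contract B", "governed_by", "Regulation 7"),
]


def build_graph(triples):
    """Index triples by subject so outgoing edges can be looked up quickly."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
    return graph


def retrieve_facts(graph, start, max_hops=2):
    """Breadth-first walk from `start`, collecting facts within `max_hops` edges."""
    facts = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand nodes that are already max_hops away
        for rel, obj in graph.get(node, []):
            facts.append((node, rel, obj))
            if obj not in seen:
                seen.add(obj)
                queue.append((obj, depth + 1))
    return facts


def build_prompt(question, facts):
    """Format the retrieved facts as numbered evidence ahead of the question."""
    lines = [f"[{i}] {s} {r} {o}" for i, (s, r, o) in enumerate(facts, start=1)]
    return "Evidence:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"


if __name__ == "__main__":
    graph = build_graph(TRIPLES)
    facts = retrieve_facts(graph, "Contract A", max_hops=3)
    print(build_prompt("Who performs the audit required for Contract A?", facts))
```

Because each fact is numbered in the prompt, a model's answer can cite the evidence it relied on, which is one simple way to surface the basis for an output. Only the facts reachable from the starting entity are included, so unrelated entries such as "Contract B" never enter the prompt.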

2. Generative AI amalgamation technology that automatically generates specialized generative AI models to meet the diverse needs of companies

Fujitsu has developed its own generative AI amalgamation technology that can easily and quickly generate AI models suited to a company’s business for the tasks input into generative AI, without prompt engineering or fine-tuning. It combines two technologies: one that automatically generates, from existing models, the specialized generative AI and machine learning models best suited to the input task, and one for interactively optimizing decisions. By predicting the suitability of each AI model and then automatically selecting and generating the model with the best performance, it can quickly generate high-performance specialized generative AI that meets a company’s needs in the span of a few hours to a few days. This technology enables the adoption of small to medium-scale (4) and lightweight models by combining the most suitable models according to the input to make the most of their characteristics. It is anticipated that this technology will reduce the consumption of electricity and computational resources, and lead to the development of sustainable AI.

Fujitsu used this technology to automatically combine its large language model Fugaku-LLM, a Japanese-specialized generative AI trained on the supercomputer Fugaku, with a model suited to the input chosen from publicly available Japanese-specialized LLMs, and then output answers. As a result, the technology achieved the highest level of accuracy (5) on the average score of MT-Bench (6), a standard benchmark for measuring Japanese language performance, compared to publicly available existing small to medium-sized Japanese-specialized models.
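The idea of predicting each model's suitability for an input task and then selecting the best one can be sketched as a simple router. This is only an illustration under stated assumptions: the `Candidate` class, the keyword-overlap `suitability` score, and the stand-in models are hypothetical, and Fujitsu's actual suitability prediction and model combination methods are not described in this release.

```python
import re
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass(frozen=True)
class Candidate:
    """A specialized model plus a crude description of what it is good at."""
    name: str
    keywords: FrozenSet[str]      # hypothetical proxy for the model's specialty
    run: Callable[[str], str]     # stand-in for calling the real model


def suitability(candidate: Candidate, task: str) -> float:
    """Fraction of the candidate's keywords that appear in the task text."""
    words = set(re.findall(r"[a-z]+", task.lower()))
    if not candidate.keywords:
        return 0.0
    return len(words & candidate.keywords) / len(candidate.keywords)


def select_model(candidates: List[Candidate], task: str) -> Candidate:
    """Pick the candidate with the highest predicted suitability for the task."""
    return max(candidates, key=lambda c: suitability(c, task))


# Stand-in models: each just labels its output so the routing is visible.
CANDIDATES = [
    Candidate("legal-small", frozenset({"contract", "clause", "regulation"}),
              lambda task: f"[legal-small] {task}"),
    Candidate("code-small", frozenset({"python", "function", "bug"}),
              lambda task: f"[code-small] {task}"),
]

if __name__ == "__main__":
    for task in ("Summarize the termination clause in this contract.",
                 "Fix the bug in this Python function."):
        chosen = select_model(CANDIDATES, task)
        print(chosen.name, "->", chosen.run(task))
```

In practice the keyword score would be replaced by a learned predictor of each model's performance, and several models might be combined rather than one being chosen; the routing structure is the only part this sketch aims to show.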

3. The world’s first generative AI auditing technology that achieves generative AI that is in compliance with corporate and legal regulations

Fujitsu’s generative AI auditing technology is the world’s first technology that audits the compliance of generative AI responses with corporate and legal regulations. This auditing technology comprises generative AI explainability technology, which extracts and presents the basis for answers by analyzing the internal operating status of the generative AI, and hallucination determination technology, which verifies the consistency between answers and the basis for those answers and presents discrepancies in an easy-to-understand manner. Both technologies are able to target not only text, but multimodal input data, such as knowledge graphs and text, and combining them with knowledge graph extended RAG allows for more reliable utilization of generative AI.

This auditing technology was applied to the task of detecting traffic violations from images of traffic. It successfully showed which parts of the input traffic law knowledge graphs and traffic images the generative AI had focused on as the basis for its answer.
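The consistency check between an answer and its basis can be illustrated with a very small sketch. It assumes, purely for illustration, that the answer has already been broken down into (subject, relation, object) claims and that the basis is a set of triples in the same form; the function name `audit_answer` and the example data are hypothetical. The internal-state analysis that Fujitsu describes is not modeled here.

```python
from typing import Dict, Iterable, List, Tuple

Triple = Tuple[str, str, str]


def audit_answer(claims: Iterable[Triple], evidence: Iterable[Triple]) -> Dict[str, object]:
    """Split the answer's claims into those backed by evidence and those not."""
    evidence_set = set(evidence)
    supported: List[Triple] = []
    unsupported: List[Triple] = []
    for claim in claims:
        (supported if claim in evidence_set else unsupported).append(claim)
    return {
        "supported": supported,
        "unsupported": unsupported,   # candidate hallucinations to show the user
        "consistent": not unsupported,
    }


if __name__ == "__main__":
    # Hypothetical evidence drawn from a traffic law knowledge graph.
    evidence = [
        ("red light", "requires", "stop"),
        ("vehicle 3", "crossed", "stop line"),
    ]
    # Hypothetical claims extracted from a generated answer.
    claims = [
        ("vehicle 3", "crossed", "stop line"),
        ("vehicle 3", "exceeded", "speed limit"),
    ]
    report = audit_answer(claims, evidence)
    for claim in report["unsupported"]:
        print("No supporting evidence for:", " ".join(claim))
```

Exact matching of triples is deliberately strict; a practical checker would need to handle paraphrases and partial matches, which is where most of the difficulty lies.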

Fujitsu is currently conducting a verification test using its generative AI framework for enterprises. It is expected to achieve a 30% reduction in man-hours for contract compliance verification, a 25% improvement in support desk work efficiency, and a 95% reduction in the time it takes to plan optimal driver allocation in the transportation industry. Fujitsu is also in the process of confirming that the generative AI framework for enterprises can solve issues in various businesses and improve productivity by applying generative AI to the creation of quality assurance materials from product manuals that are approximately 10 million characters in length, the analysis of mobile network connectivity issues, the analysis of employee fatigue levels at work sites, and the analysis of large-scale genome data.

Future Plans

Fujitsu will continue to expand its lineup of enterprise specialized AI models for a wide variety of applications, including the Japanese language and code generation. In addition, Fujitsu’s proposal for developing an LLM specializing in the generation and utilization of knowledge graphs was accepted by GENIAC, a project by the Japanese Ministry of Economy, Trade and Industry to strengthen development capabilities for generative AI in Japan. In this project, developing an LLM that generates lightweight knowledge graphs will enable the newly developed knowledge graph extended RAG technology to be used in a secure on-premises environment.

Fujitsu will continue to respond to the diverse needs of customers, advance the development of research technologies to solve issues in specialized business areas, and provide strong support for the use of generative AI in business.

[1] RAG technology: Retrieval-Augmented Generation. A technology that extends the capabilities of generative AI in combination with external data sources.

[2] Achieved the world’s highest accuracy: On the HotpotQA benchmark, the method shows a 2.4% improvement over other recent methods. The work was selected for ACL 2024, the world’s premier international conference in the field of AI (to be presented in August 2024).

[3] Multi-hop QA benchmark: HotpotQA (https://hotpotqa.github.io/). A benchmark for complex question answering in generative AI.

[4] Small to medium-scale models: Generative AI models with 1.3 billion to 13 billion parameters.

[5] Highest level of accuracy: Highest level of accuracy refers to the model scoring 2.9% better (an improvement to 7.353 from 7.138) compared to the Mixtral 8x7B model, a publicly available existing medium-sized Japanese-specialized model.

[6] MT-Bench: A benchmark that quantifies the accuracy of answers to complex questions that have a certain amount of text and need to be answered in writing.

About Fujitsu

Fujitsu’s purpose is to make the world more sustainable by building trust in society through innovation. As the digital transformation partner of choice for customers in over 100 countries, our 124,000 employees work to resolve some of the greatest challenges facing humanity. Our range of services and solutions draw on five key technologies: Computing, Networks, AI, Data & Security, and Converging Technologies, which we bring together to deliver sustainability transformation. Fujitsu Limited (TSE:6702) reported consolidated revenues of 3.7 trillion yen (US$26 billion) for the fiscal year ended March 31, 2024 and remains the top digital services company in Japan by market share. Find out more: www.fujitsu.com.

Press Contacts

Fujitsu Limited

Public and Investor Relations Division

Inquiries