Generative AI in the limelight - Interesting Engineering

Artificial intelligence (AI) has undoubtedly had a strong impact on many industries over the last decade. In particular, generative AI has seen a significant uptake in popularity and use.

This includes chatbots based on large language models (or LLMs), such as OpenAI’s ChatGPT and Google’s Gemini; image generation models, such as OpenAI’s DALL-E and models based on Generative Adversarial Networks (or GANs); and audio generation models.

The entertainment industry has embraced generative AI tools in various ways, such as creating AI-generated content and automating labor-intensive tasks. However, this adoption also poses challenges, including potential job losses, the creation of content that lacks a human touch or perspective, and concerns about trust and privacy.

So, is AI adoption potentially stagnating in this industry? If so, can AI be integrated in a way that addresses these challenges and concerns?

In this article, we take a deeper look at how AI is currently used in the entertainment industry, the challenges it raises, and what can be done to address them. We also examine more ethical ways of integrating AI and how the industry could avoid stalling on AI adoption.

An overview of generative AI

As the name suggests, generative AI is a class of AI algorithms or models that can generate different kinds of output (text, audio, images) by learning patterns from their training data. The models are trained to generate content that resembles what humans produce.

LLMs

Let’s start with LLMs, currently the most popular form of generative AI (thanks to the likes of ChatGPT). They can generate coherent and contextually relevant outputs in response to user input, or prompts.

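The models are trained on vast amounts of text from many sources, such as books, blogs, and other text on the Internet. During training, they learn patterns in how words are put together, typically by learning to predict the next word (or word fragment) in a sequence, which lets them generate a wide range of outputs.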
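To make “learning patterns in how words are put together” concrete, here is a deliberately tiny sketch. It is a toy built on simplifying assumptions, not how ChatGPT or Gemini work internally: real LLMs use large neural networks (transformers) over word fragments, whereas this hypothetical bigram model simply counts which word follows which in a made-up corpus and always picks the most frequent continuation.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        model[current][nxt] += 1
    return model


def generate(model: dict[str, Counter], start: str, length: int) -> str:
    """Greedily extend `start` by always choosing the most frequent next word."""
    output = [start]
    for _ in range(length):
        followers = model.get(output[-1])
        if not followers:
            break  # this word was never followed by anything in the corpus
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


# A made-up corpus, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(generate(model, "the", 4))  # the cat sat on the
```

Swapping the greedy choice for random sampling weighted by the counts would produce more varied text, loosely analogous to how LLMs sample from the probabilities they predict.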

GANs

In addition to LLMs, there are GANs, which are used mainly to generate images but have also been applied to audio. GANs work quite differently from LLMs.

A GAN includes a generator network that produces the output content, typically starting from random noise. For example, a GAN trained on human faces can produce an output that looks like an actual person.

The key to these models, however, is training the generator to produce outputs that can fool a second network in the model, called the discriminator. The discriminator’s job is to judge how realistic the generator’s outputs are, so the two networks are constantly trying to outsmart each other.

Throughout training, both networks are updated together, but with opposing goals: the discriminator gets better at spotting fakes while the generator gets better at producing convincing ones.
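This tug-of-war is usually expressed as a pair of opposing loss functions. The sketch below assumes the common binary cross-entropy formulation, with the “non-saturating” generator loss suggested in the original GAN paper by Goodfellow and colleagues. It only scores a batch of discriminator outputs (the discriminator’s estimated probability that each sample is real); the neural networks themselves and the gradient updates that real training applies to these losses are omitted.

```python
import math


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: D's probabilities that real samples are real (ideally near 1).
    d_fake: D's probabilities that generated samples are real (ideally near 0).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: low when D believes generated samples are real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# A discriminator that easily tells real from fake: good for D, bad for G.
print(discriminator_loss([0.9, 0.9], [0.1, 0.1]))  # ~0.21
print(generator_loss([0.1, 0.1]))                  # ~2.30

# A fooled discriminator: the generator's loss drops.
print(generator_loss([0.9, 0.9]))                  # ~0.11
```

Each network is trained to push its own loss down, which pushes the other’s up. At the theoretical equilibrium, where the discriminator outputs 0.5 for everything, the losses settle at 2 ln 2 and ln 2 respectively.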

Others

We also have variational autoencoders (VAEs). In simple terms, these compress each piece of input data into a point in a smaller, condensed space (often called the latent space), where each dimension captures a characteristic or variation in the data.

The model is trained to reconstruct the original data from this condensed version, which forces it to capture the underlying patterns in the data. It can then generate new data by decoding new points from that space, producing outputs that share similarities with the input data.
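The sketch below illustrates two standard VAE ingredients: sampling a latent point with the “reparameterization trick” (z = μ + σ·ε, where ε is random noise) and the KL-divergence term that keeps the latent space close to a standard normal distribution, so that new points can later be sampled from it. The encoder and the training loop are omitted, and `toy_decoder`, with its fixed weights, is a hypothetical stand-in for a trained decoder network.

```python
import math
import random


def sample_latent(mu: list[float], log_var: list[float], rng: random.Random) -> list[float]:
    """Reparameterization trick: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def toy_decoder(z: list[float]) -> list[float]:
    """Hypothetical stand-in for a trained decoder: maps a 2-D latent point to 3 values."""
    weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    return [sum(w * zi for w, zi in zip(row, z)) for row in weights]


rng = random.Random(0)

# During training, the KL term penalizes encodings that drift away from N(0, 1).
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: already standard normal
print(kl_to_standard_normal([1.0, 0.0], [0.0, 0.0]))  # 0.5: the mean is shifted

# Generating new data: sample a point from N(0, I) and decode it.
z_new = sample_latent([0.0, 0.0], [0.0, 0.0], rng)
print(toy_decoder(z_new))
```

With a real trained decoder, the sampled point would map to a new image or sound resembling the training data rather than to three numbers.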

What’s happening now?

Generative AI is still relatively new, so its applications in various industries, including entertainment and media, are not yet fully understood.

Interesting Engineering (IE) spoke to Tim Levy, founder and CEO of Twyn, a media-tech startup. Twyn is a platform that lets users have real-time, AI-enabled video conversations with celebrities such as Lionel Messi.

Instead of using AI to generate content, Twyn records a vast archive of content with these celebrities and stores it. When users interact with the platform, AI delivers these content snippets in a manner that simulates a real-time conversation.

The AI selects content slices to present based on the user’s input or engagement, creating the illusion of a live conversation or FaceTime call with the celebrity.
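The mechanism described above is essentially a retrieval problem: match what the user says to the most relevant pre-recorded clip. Twyn has not published how its system works, so the sketch below is purely hypothetical. It scores each clip by keyword overlap (Jaccard similarity) with the user’s words and falls back to a default clip when nothing matches; the `Clip` structure, the keywords, and the clip IDs are all invented for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Clip:
    """A pre-recorded video snippet (hypothetical structure)."""
    clip_id: str
    topic_keywords: set[str]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def select_clip(user_input: str, clips: list[Clip], fallback_id: str) -> str:
    """Return the ID of the clip whose keywords best overlap the user's words."""
    words = tokenize(user_input)
    best_id, best_score = fallback_id, 0.0
    for clip in clips:
        union = words | clip.topic_keywords
        # Jaccard similarity: shared words divided by all distinct words.
        score = len(words & clip.topic_keywords) / len(union) if union else 0.0
        if score > best_score:
            best_id, best_score = clip.clip_id, score
    return best_id


clips = [
    Clip("favourite_goal", {"favourite", "favorite", "best", "goal"}),
    Clip("training_routine", {"train", "training", "practice", "routine"}),
    Clip("family", {"family", "kids", "children", "wife"}),
]
print(select_clip("What was your best goal ever?", clips, fallback_id="ask_again"))  # favourite_goal
```

A production system would more plausibly compare learned text embeddings than raw keywords, but the core idea, choosing a recorded response rather than generating a new one, stays the same.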

“Right now, I think there’s huge untapped potential for generative AI to deliver real value to the industry. I think AI-enhanced content, not AI-generated content, will be the next big trend in the rapidly evolving entertainment space,” Levy told IE.

Currently, AI is used in this industry in two primary ways: automating labor-intensive tasks, such as email marketing and translation, and generating content, such as scripts. While automating tasks is beneficial because of AI’s efficiency, machine-generated content has raised concerns among industry professionals and the public.

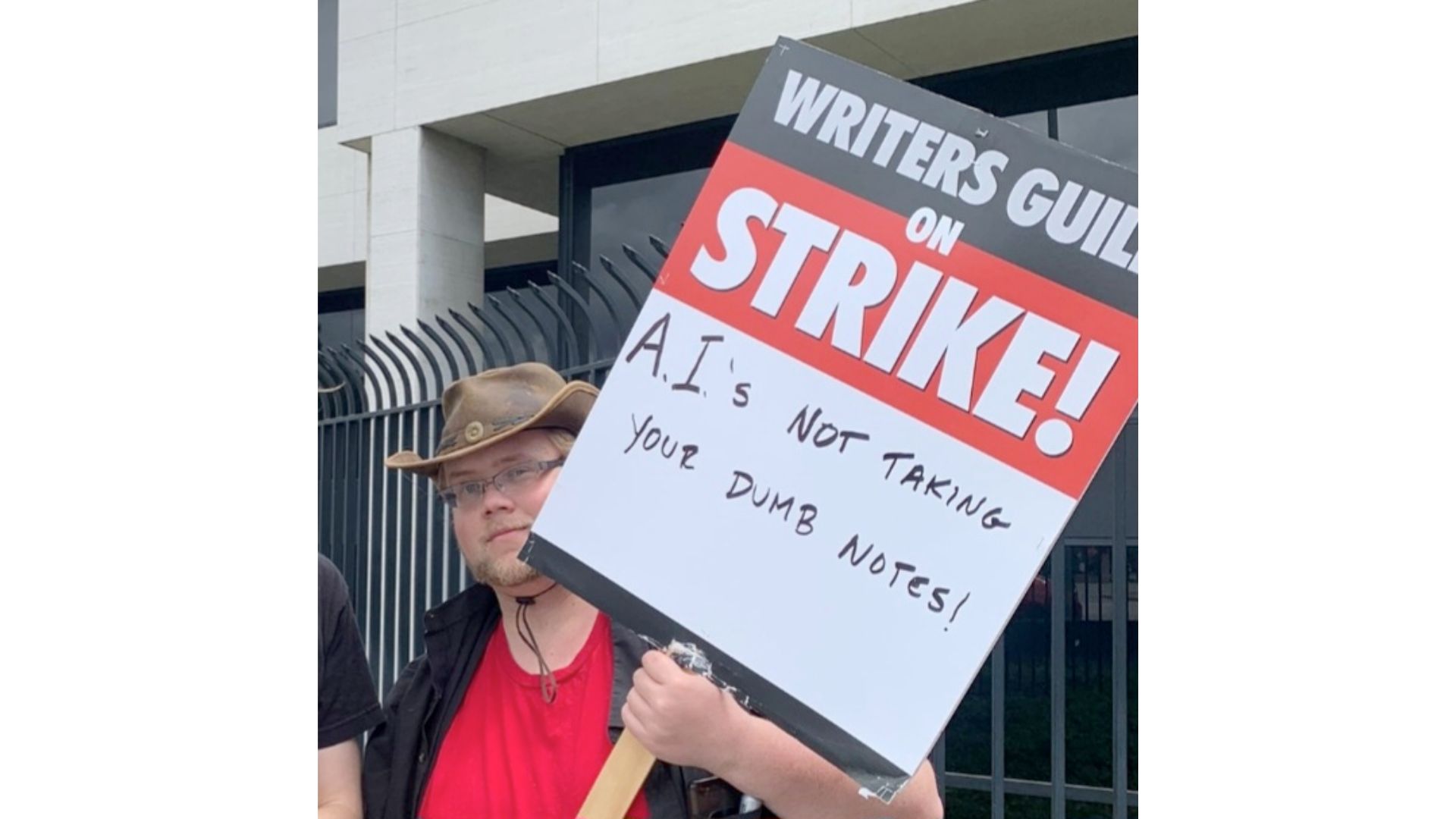

The 2023 writers’ and actors’ strikes

In 2023, writers and actors went on strike over a range of issues, including concerns about the use of AI. Together, the two strikes led to the loss of nearly 45,000 jobs and cost Southern California’s economy more than US$6 billion.

For writers, the main concern was residuals, the money creatives receive when their work is reused or rebroadcast. They worried that studios would reduce their residuals by claiming that AI-generated content does not require the same compensation as human-created work.

This was in response to the increase in AI-generated content across all media. Further, writers wanted assurances that AI, such as ChatGPT, would only be used as a tool, not a replacement.

Actors had similar concerns about AI replicating them. Since AI can generate audio and images, studios could use the technology to create entire performances without the actors themselves, which would mean no additional compensation or residuals for them either.

The use of AI to create fake videos, colloquially known as deepfakes, also feeds broader ethical and public concerns about AI misuse.

Deepfakes and public perception

The rise of deepfakes and AI-generated content imitating celebrities is one of the main reasons for public distrust of and worry about AI. Is AI-enhanced content, as Levy suggests, the solution? Perhaps. However, it’s crucial to make clear to audiences that such content is genuine, not fake.

Many celebrities and other public figures fear that, as with deepfakes, their AI versions might say something inappropriate or harmful. Using only pre-recorded content, as Twyn does, can help prevent this.

Levy explained, “We want to share their real thoughts. Not AI-generated content. The AI is simply used to understand the user and select the right content slice to respond with.”

A study by Karim Nader and colleagues found that people who perceived the depiction of AI in entertainment and media as realistic were more likely to see AI as an emotional companion or a threatening entity. This contrasts with seeing AI as a job replacement or a surveillance tool.

This makes earning the public’s trust essential. That could include clearly distinguishing between AI-generated and AI-enhanced content and educating the public about how AI is used in the entertainment and media industry. Above all, transparency is key.

Is this enough to overcome the fear held not only by the public but also by people working in the industry? Levy thinks not.

“The near-exclusive focus on the negative or nefarious uses of AI in the industry is suffocating its potential to add real social and economic value to the industry,” he said.

He believes more spokespeople within the industry need to make a positive case for generative AI’s value to counter its negative image.

Where do we go from here?

While we have seen a few successful use cases of AI and generative AI in media and entertainment (mainly video games and other immersive experiences), for the most part, it is still met with uncertainty.

As studies and experts have pointed out, public perception is key to a better relationship with AI in media and entertainment, and AI-enhanced content, like that offered by Twyn’s platform, may be the way forward.

These are still early days, and there is much to learn, but transparency will be necessary to smooth the path toward broader acceptance of AI.

Levy also pointed out that embracing innovation could help improve Hollywood’s faltering economic prospects. By adopting AI, Hollywood could drive up consumer engagement, and once Hollywood is on board, the rest of the media and entertainment industry will likely follow.

“It’s all about shifting the narrative from one of fear and replacement to one of opportunity and enhancement. If we don’t start educating stakeholders now, generative AI’s PR crisis will only spiral further out of control, and the industry will be worse off for it,” he added.

The path forward doesn’t seem like an easy one, but according to industry experts, the acceptance of AI is needed to keep innovation and economic growth alive in media and entertainment.

ABOUT THE EDITOR

Tejasri Gururaj

Tejasri is a versatile Science Writer & Communicator, leveraging her expertise from an MS in Physics to make science accessible to all. In her spare time, she enjoys spending quality time with her cats, indulging in TV shows, and rejuvenating through naps.