Generative AI Operational Risks | Pipeline Magazine

By: Mark Cummings, Ph.D., Zoya Slavina

There is a lot of pressure to use Generative AI (GenAI) in operations, but there are also risks. Hallucinations and GenAI cybersecurity attacks are real problems. Organization leadership and operations staff have three prudent responses available. First, do a risk-reward analysis; if the rewards don’t justify the risks, don’t do it. Second, if the decision is to go ahead, include a simulation test before fielding. Third, whether or not organizations do the first two, they need to start partnering with innovators that can help harden networks to better withstand GenAI problems while providing encapsulation tools that filter out hallucinations.

Since GenAI came to prominence in spring 2023, there has been a lot of talk about using AI in operations (computing, networking, cybersecurity, etc.). Service providers and end users said that they were going to do it. Vendors said that they were going to offer products that did it. It got so bad that any company that mentioned AI in its quarterly report saw its stock go up.

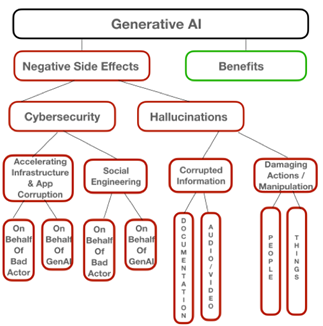

Although GenAI has significant benefits, it also has unfortunate negative side effects. These side effects pose serious risks, especially for high-stakes operations. This presents a challenging situation for organizations. Both leadership and supporting operations staff need to find a path through the challenges that maximizes the benefits and minimizes the risks of the technology.

The first step is to understand the potential problems associated with GenAI. Then, based on that understanding, develop some basic approaches and tools that can assist decision-making.

The negative side effects of GenAI are summarized in Illustration #1.

Illustration #1: Negative Side Effects of Generative AI

Hallucinations are a real problem for operations. Hallucination is a term that has come into common usage as a label for a particular set of GenAI negative side effects. It is not an entirely accurate description, but it gives a general sense of meaning that can be helpful. In humans, hallucinations are perceptions of sensory data (images, sounds, tastes, smells, etc.) that do not actually exist. Even though the sensory data does not exist, the person having the hallucination experiences something that appears to be actual perception, or very close to it. For simplicity’s sake, let us call this “high fidelity.” This high fidelity is what human hallucinations have in common with GenAI hallucinations.

GenAI creates undesired outputs: outputs that contain false information, instructions to take counterproductive actions, and so on. These outputs also have very high fidelity, so high that they can be very convincing. It is this property that has led to these kinds of outputs being called hallucinations.