Generative AI: Shaping a New Future for Fraud Prevention

Transcript

Narkhede: I want to give you some context on why I picked this topic for today’s keynote. Through my work on Kafka and at Confluent, I got to witness a really wide variety of interesting applications built on event streaming data. One of the most meaningful and fastest growing was fraud detection, in the fraud and risk space. While observing and helping Kafka users and Confluent customers build modern fraud detection systems, we noticed that they ran into a number of hurdles. The first was cobbling together distributed systems to collect and ETL the data, then plugging all of that into an analytics system so you can slice and dice your data and understand where the fraud trends are coming from. Then they had to tie that together with an MLOps platform to train the right machine learning models. At the end of the day, even after cobbling together different distributed systems and MLOps platforms, companies still struggled to use machine learning effectively for detecting fraud. More than two years ago, I started Oscilar with my co-founder, who built many of the data infrastructure systems that supported Facebook’s risk systems, which are probably among the world’s leading systems. Based on that experience, and the work I’m currently doing, I want to share a vision of how generative AI is shaping the future of fraud prevention.

Why Is Fraud Increasing so Rapidly?

All of us have made purchases online or have online bank accounts, and many of us have experienced some kind of fraud. The reality is that apps and websites hold a treasure trove of valuable financial, personal, and health data. That convenience has come with a downside: it has created a vast digital landscape that is now susceptible to fraud. Today, we observe really complex forms of fraud, like synthetic identities, deepfakes, online payment fraud, and so on. The financial implications are enormous. Consumers and businesses cumulatively lose hundreds of billions of dollars every year to fraud, if not trillions. The question is, why is fraud increasing so rapidly? According to TransUnion’s Global Fraud Trends report, as these numbers show, fraud is increasing at an unprecedented scale. I think there are two reasons for this. The first is that fraud grows as economic activity evolves. As economies shift and consumer buying behaviors change, fraudsters seek out potential weaknesses in the systems. Between 2019 and 2021, during the pandemic, there was a 52% increase in the rate of digital fraud, the highest in decades. If you break down where the trends are coming from, fraud rates rose particularly in the travel and leisure industry, as travel resumed after the pandemic, and in the financial services industry, as online banking became the new norm. The second reason is that our expectations of digital experiences have risen sharply. As consumers, we expect digital experiences to be fast, secure, and really convenient, and when these expectations are not met, consumers frequently switch services. About two out of three consumers say they would switch companies for a much better digital experience. That pressure to keep experiences frictionless limits how much verification companies can add, and fraudsters exploit that gap.
Those were some of the reasons why fraud is increasing so rapidly.

Top Fraud Trends in the Industry

Now let’s take a look at some of the top fraud trends in the industry to see why the technology we’re now developing is the right path forward. The first is that automation is making fraud really scalable. Fraudsters are using various kinds of software and bots. In fact, there are tools shared across Discord channels that use generative AI to perpetrate fraud. Because of all this automation, the losses are much higher than was possible manually, which is how fraud was perpetrated until recently. An example of this is credential stuffing: testing stolen or leaked credentials from the dark web to see whether they work on a selected set of accounts. Today, this whole activity is much more scalable with bots than with the human effort it used to require. The second trend is that fraud costs are escalating. According to the Federal Trade Commission, consumers lose more than $8.8 billion annually, and that is just in the United States. Extensive research puts global fraud losses north of $5 trillion. These numbers are eye-popping and disappointing, and they need to be addressed immediately. The third trend, which is also concerning, is that synthetic identity fraud is one of the fastest growing forms of fraud. In fact, it makes up more than 85% of all identity fraud, which itself causes losses in the tens of billions of dollars every year. The problem with synthetic identities is that they are powered by generative AI, which makes them much harder to detect using traditional techniques, mostly because those techniques lack sufficient training data for this kind of fraud.
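To make the credential stuffing pattern concrete, here is a minimal sketch of one classic countermeasure, assuming login attempts arrive as records with an IP, a username, and a timestamp: flag any IP that tries an unusually large number of distinct accounts within a short window. The `LoginAttempt` type, the `flag_credential_stuffing` function, and the 5-minute / 20-account thresholds are hypothetical choices for illustration, not part of any product described in this talk.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginAttempt:
    ip: str
    username: str
    timestamp: float  # seconds since epoch


def flag_credential_stuffing(attempts, window_s=300.0, max_accounts=20):
    """Return the set of IPs that tried more than `max_accounts` distinct
    usernames within any sliding window of `window_s` seconds.
    Thresholds are illustrative assumptions, not tuned values."""
    by_ip = defaultdict(list)
    for attempt in sorted(attempts, key=lambda a: a.timestamp):
        by_ip[attempt.ip].append(attempt)

    flagged = set()
    for ip, seq in by_ip.items():
        window = deque()             # attempts inside the current window
        per_user = defaultdict(int)  # username -> attempts in the window
        for attempt in seq:
            window.append(attempt)
            per_user[attempt.username] += 1
            # Evict attempts that have fallen out of the time window.
            while attempt.timestamp - window[0].timestamp > window_s:
                old = window.popleft()
                per_user[old.username] -= 1
                if per_user[old.username] == 0:
                    del per_user[old.username]
            if len(per_user) > max_accounts:
                flagged.add(ip)
                break
    return flagged


bot = [LoginAttempt("203.0.113.7", f"user{i}", 1000.0 + i) for i in range(25)]
human = [LoginAttempt("198.51.100.2", "alice", 1000.0 + 60 * i) for i in range(3)]
print(flag_credential_stuffing(bot + human))  # {'203.0.113.7'}
```

A bot cycling through leaked credentials touches many accounts from one source very quickly, while a real user retries the same account; counting distinct usernames per IP captures that difference with a single, easily explained signal.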

The next trend is that companies need to balance consumer friction against fraud losses. All of us expect seamless digital experiences, so companies can’t always deploy the strictest verification procedures, which is what makes fraud prevention so difficult. They need to weigh the opportunity cost of lost customers against fraud losses. Fraud losses are typically treated as a cost of doing business, and a fine balance has to be struck between fraud losses and customer friction. The last trend is a huge proliferation of point solutions. For example, one tool gives you device fingerprinting data, and hence device risk; another does IP profiling, and hence gives you IP risk. The problem is that there are now a lot of these point solutions. Each focuses on one part of the customer journey and gives you the user’s risk only at that point. As you can imagine, that doesn’t tell the whole story; it doesn’t give you a 360-degree view of the user’s risk. For example, if you were trying to make a purchase online and browsed the catalog first, that would lead to a different risk signal than if you went straight to checkout and bought a large number of high-value items, possibly from a different account. The other problem is that each point solution claims to have the best and most complete data. In my experience, no one tool has the best data. The conclusion is that data from all of these point solutions needs to be integrated into what I would call a risk operating system, where all that data comes together in real time and you can orchestrate decisioning workflows to figure out what to do with a particular transaction in real time. Those were some of the fraud trends in the industry.
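The balance between friction and fraud losses can be framed as choosing a decline threshold that minimizes total cost. The sketch below assumes each historical transaction has a model risk score, an amount, and a fraud label, and that blocking a legitimate customer costs a fixed, hypothetical `friction_cost`; a real program would estimate that opportunity cost from its own customer data.

```python
def total_cost(threshold, scored, friction_cost):
    """Cost of blocking every transaction whose score >= threshold.
    `scored` is an iterable of (risk_score, amount, is_fraud) tuples."""
    cost = 0.0
    for score, amount, is_fraud in scored:
        blocked = score >= threshold
        if is_fraud and not blocked:
            cost += amount         # fraud loss from an approved bad transaction
        elif not is_fraud and blocked:
            cost += friction_cost  # assumed opportunity cost of a declined good customer
    return cost


def best_threshold(scored, friction_cost, candidates):
    """Pick the candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda t: total_cost(t, scored, friction_cost))


history = [(0.9, 500.0, True), (0.7, 50.0, False), (0.6, 200.0, True), (0.2, 30.0, False)]
candidates = [0.5, 0.65, 0.8, 1.01]
print(best_threshold(history, friction_cost=100.0, candidates=candidates))  # 0.5
print(best_threshold(history, friction_cost=300.0, candidates=candidates))  # 0.8
```

When declining a good customer is assumed to be more expensive, the optimal threshold rises and the system blocks less, which is exactly the trade-off the talk describes.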

Evolution of Fraud Detection: The 3 Generations of Fraud and Risk Technology

Now let’s take a look at how fraud technology has evolved over the years to see where it is going next. I see three generations. Each has learned from the strengths, as well as the weaknesses, of the previous generation. The first, which I call risk 1.0 systems, are static rule-based platforms. What do rules mean? It means if this, then do that logic, which is commonly used to detect previously seen trends. Once you spot a new fraud pattern, you go into the code base, change the code to add the new conditions, and go live after testing. The upside, as you can imagine, is convenience: it’s pretty easy to observe a pattern and add a rule. The downside is that it just doesn’t scale beyond a few dimensions. In fact, it very quickly gets out of hand beyond maybe 80 to 100 rules. As an example, say we’re trying to detect fast, expensive transactions: a rule might flag credit card transactions that exceed an amount atypical of your past purchases within a very brief timeframe. It’s easy to add a rule that checks the transaction amount within a brief timeframe, because that involves just two features: transaction value and timeframe. What rules can’t easily do is study the entire customer behavior leading up to the transaction, which requires a lot of data and tracking many features beyond those two.
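A minimal sketch of the kind of risk 1.0 rule described above, assuming a stream of card transactions with a card ID, timestamp, and amount. The `VelocityRule` class, the 10-minute window, and the 5x multiplier are hypothetical; the point is that the rule looks at only two features, recent spend and the card’s historical average amount, which is why it cannot capture the wider behavior leading up to a transaction.

```python
from collections import deque


class VelocityRule:
    """Hypothetical risk 1.0 rule: flag a card when its spend within the last
    `window_s` seconds exceeds `multiplier` times its historical average amount."""

    def __init__(self, window_s=600.0, multiplier=5.0):
        self.window_s = window_s
        self.multiplier = multiplier
        self.recent = {}   # card_id -> deque of (timestamp, amount) in the window
        self.history = {}  # card_id -> (count, total) over all past transactions

    def check(self, card_id, timestamp, amount):
        recent = self.recent.setdefault(card_id, deque())
        # Drop transactions that are older than the window.
        while recent and timestamp - recent[0][0] > self.window_s:
            recent.popleft()
        count, total = self.history.get(card_id, (0, 0.0))
        window_spend = sum(a for _, a in recent) + amount
        # No history yet means no baseline, so the rule cannot fire.
        flagged = count > 0 and window_spend > self.multiplier * (total / count)
        recent.append((timestamp, amount))
        self.history[card_id] = (count + 1, total + amount)
        return flagged


rule = VelocityRule()
for t in (0.0, 10_000.0, 20_000.0):
    rule.check("card-1", t, 20.0)             # builds a $20 average
print(rule.check("card-1", 30_000.0, 50.0))   # False: $50 <= 5 x $20
print(rule.check("card-1", 30_060.0, 100.0))  # True: $150 in 60s > 5 x $27.50
```

Every new fraud pattern needs another hand-written condition like this one, which is how rule sets grow past the point where they can be maintained.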

To counter the lack of scalability of risk 1.0 systems, risk 2.0 systems evolved to incorporate traditional machine learning along with rules. This technique is also used to detect previously known kinds of fraud. Traditional machine learning helps with high-dimensional data. The downside is that it requires a lot of training data to predict risk accurately, and collecting it can take months. A good example is chargeback fraud: companies typically need to wait about 60 to 90 days to get chargeback labels. To detect chargeback fraud, say you see a lot of diverse transactions on what are typically stolen cards, coming from a common device farm but originating from multiple IP addresses and multiple ZIP codes. The list of features is pretty long, and this is where traditional machine learning models typically excel.
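Risk 2.0 models rely on engineered features like the ones in the device-farm example. This sketch shows one way to aggregate them per device, assuming each transaction record carries hypothetical `device_id`, `card_id`, `ip`, `zip_code`, and `amount` fields; the resulting feature vectors would then feed whatever traditional model a team already uses.

```python
from collections import defaultdict


def device_features(transactions):
    """Aggregate per-device features for a traditional ML fraud model.
    Field names are illustrative assumptions."""
    agg = defaultdict(lambda: {"cards": set(), "ips": set(), "zips": set(),
                               "count": 0, "total": 0.0})
    for txn in transactions:
        a = agg[txn["device_id"]]
        a["cards"].add(txn["card_id"])
        a["ips"].add(txn["ip"])
        a["zips"].add(txn["zip_code"])
        a["count"] += 1
        a["total"] += txn["amount"]
    return {
        device: {
            "distinct_cards": len(a["cards"]),  # many cards on one device is suspicious
            "distinct_ips": len(a["ips"]),
            "distinct_zips": len(a["zips"]),
            "txn_count": a["count"],
            "avg_amount": a["total"] / a["count"],
        }
        for device, a in agg.items()
    }


txns = [
    {"device_id": "dev-A", "card_id": f"card-{i}", "ip": f"10.0.0.{i}",
     "zip_code": f"9400{i}", "amount": 100.0}
    for i in range(4)
] + [{"device_id": "dev-B", "card_id": "card-9", "ip": "10.0.1.1",
      "zip_code": "10001", "amount": 40.0}]
print(device_features(txns)["dev-A"]["distinct_cards"])  # 4
```

Each of these aggregates has to be designed, computed, and maintained by hand, which is the feature engineering overhead discussed later in the talk.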

I think the latest generation of risk systems, risk 3.0, will use generative AI in combination with traditional machine learning to detect complex and emerging forms of fraud that, most importantly, have not been seen before, and do so while dramatically reducing the false positive rate. That matters because false positives are a big problem in fraud detection, given the need to balance customer friction against fraud losses. A good example of the complexity this approach can handle is first-party fraud. This is where individuals use their own identity to fraudulently obtain goods or services with no intention of paying for them. It is really challenging because, first, the behavior varies per account holder, and second, it is really hard to capture intent to pay as a feature. Conventional anomaly detection methods miss subtle behavioral changes, such as signs of duress during the purchasing process, which leads to a high number of false positives. This is where generative AI excels: it analyzes a large amount of structured and unstructured data from the past and present to understand intricate user behaviors and, most importantly, puts that behavior in context.

That is why it can identify anomalies without requiring explicit labels. Generative AI also adapts quickly and learns from the latest signals; I’ll explain why it’s able to do these things. Of greater significance, risk 3.0 systems allow risk operators to formulate first-, second-, or even third-generation techniques without requiring a lot of in-depth expertise, because the system comes with that in-depth knowledge and world knowledge built in. It also provides intelligence and proactive recommendations, which greatly simplifies risk operators’ jobs.

Drawbacks of Existing Fraud Detection Methods

Let’s now look at the drawbacks of existing fraud detection methods to understand the advantages of generative AI and how it is going to change fraud prevention. The first drawback is limited scalability. Risk 1.0 and 2.0 systems often struggle to keep up with the increasing complexity of transactions. Fraud schemes are now powered by generative AI, so they’ve become really complex and subtle. These earlier generations require constant manual updates to adapt to new fraud techniques, which makes them less efficient: you need to change code to change rules, and you need to retrain traditional machine learning models to detect new kinds of fraud, which emerge and change form very quickly. Even machine learning models may struggle to scale efficiently as transaction complexity grows beyond hundreds of features. The second drawback is feature engineering overhead. Risk 2.0 methods, like most traditional machine learning methods, require manual feature engineering, which is time-consuming, requires data ETL and cleaning, and may still not capture all the information needed for accurate fraud detection. The third limitation is data imbalance, which is familiar from machine learning generally. Fraudulent transactions are typically rare compared to legitimate ones, despite the huge amount of money lost to fraud every year. This creates an imbalance in labeled training data, which can skew a traditional machine learning model’s ability to detect fraud accurately. This isn’t unique to fraud, but it has a particularly large impact on fraud detection.
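One standard mitigation for the imbalance described above is to reweight classes during training. The sketch below computes "balanced" weights with the common heuristic `n_samples / (n_classes * count_per_class)`, the same formula scikit-learn uses for `class_weight="balanced"`; it is one technique among several (resampling is another), not a method the talk prescribes.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency:
    n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


# 1% fraud: 990 legitimate (0) and 10 fraudulent (1) labels.
labels = [0] * 990 + [1] * 10
weights = balanced_class_weights(labels)
print(weights[1])  # 50.0, so each fraud example counts 99x a legitimate one
```

Reweighting keeps the model from minimizing loss by simply predicting "legitimate" for everything, though it does not add any new information about rare fraud patterns.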

The fourth limitation is lack of context. This is a big one: previous-generation methods may not incorporate a wide range of variables, and they simply do not understand context. They understand features and what’s happening, but cannot put it in context. That limits their effectiveness in detecting more complex or subtle schemes like first-party fraud. The next limitation is the need for human oversight. This is where a lot of the cost in risk and fraud operations comes from. Despite whatever automation you build into an MLOps platform, or whatever testing processes you build for rules, previous-generation methods often require a lot of human intervention for things like model tuning, model updates, or simply verifying flagged transactions. Typically, in fraud detection, if you can’t quite determine the risk of a high-value transaction, you flag it for manual review, so a team of human reviewers looks at all the data before they release, approve, or deny that transaction. These manual updates and this manual verification are very resource intensive, and that’s a big problem for a lot of companies. The last limitation is lack of adaptability. Static rule-based systems and risk 2.0 systems are less agile because you need to spot each new fraud pattern by manually observing trends, do feature engineering, and then train and deploy new models. This requires frequent manual updates or retraining to address fast-evolving fraud challenges. Those were some of the drawbacks of existing fraud detection methods.

The Generative AI Advantages

Now let’s turn to generative AI. Generative AI is a significant leap forward, made mostly in the last year, and it is opening up incredible opportunities for everything, including fraud and risk management. In fact, it will completely change how we do fraud and risk management in the modern world. Let’s look at some of its advantages to see how we can put it into practice for fraud detection. The first big advantage is adaptive learning. Unlike traditional methods, which are static, generative AI models continuously learn from incoming data and labels, which allows them to adapt to new patterns, so they’re flexible and adaptive. These models also integrate human feedback loops more easily. Traditional machine learning methods can be made to integrate human feedback too, but that process is more manual and requires much more labeled training data. Here, operators can confirm or refute fraud predictions through the manual review process I mentioned, which improves model accuracy much faster than traditional methods. Most importantly, beyond recognizing known fraud types, generative AI can predict new fraud techniques, which lets it serve as an early warning system. Adaptive learning offers a fast-evolving, real-time solution for modern fraud detection.

The second advantage is data augmentation, which is fairly intuitive. Generative AI can generate synthetic data that mimics real transactions. This lets us enhance and enrich training datasets, which, as we know, improves model performance. Synthetic data also helps with compliance, an area where a lot of companies get flagged: avoiding the need to use sensitive real data is a big deal and helps you stay compliant. Generative AI also tackles the problem of imbalanced datasets we talked about, because it can generate examples of those rare fraudulent transactions. This technology can also automatically identify and create features that are relevant for fraud detection. It has world knowledge, which I will talk about, so it knows the critical features for a given kind of fraud. Using that, together with data augmentation techniques, it can train new models that are more accurate than those built with previous methods. Data augmentation offers a robust, privacy-compliant way to train fraud detection models.
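The talk describes generative models producing synthetic fraud examples. As a much simpler, classical stand-in that illustrates the same idea of augmenting a rare class, here is a SMOTE-style oversampler: each synthetic point is an interpolation between a real minority example and one of its nearest minority neighbors. This is explicitly not a generative AI model, and the function name and parameters are hypothetical.

```python
import math
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Create `n_new` synthetic minority samples by interpolating between a
    randomly chosen sample and one of its k nearest minority neighbors.
    `minority` is a list of equal-length numeric tuples (at least 2 items)."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # k nearest neighbours of x among the other minority samples
        neighbors = sorted(
            (p for j, p in enumerate(minority) if j != i),
            key=lambda p: math.dist(x, p),
        )[:k]
        nn = rng.choice(neighbors)
        u = rng.random()  # position along the segment x -> nn, in [0, 1)
        synthetic.append(tuple(xi + u * (ni - xi) for xi, ni in zip(x, nn)))
    return synthetic


fraud = [(900.0, 3.0), (950.0, 4.0), (1200.0, 6.0), (1100.0, 5.0)]  # (amount, txns/hour)
augmented = fraud + smote_like(fraud, n_new=6)
print(len(augmented))  # 10
```

In practice the features would be scaled before computing distances, since otherwise the amount column dominates; interpolation also only fills in between known fraud examples, whereas the talk’s claim for generative models is that they can go further.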

The next advantage is anomaly detection. Generative AI trains on really diverse datasets and has the world knowledge to understand what truly constitutes an anomaly versus a legitimate but slightly unusual transaction. It also considers a large variety of variables, like transaction amounts and, most importantly, historical and current customer behavior and the context in which it is happening, which makes it robust against sophisticated fraud schemes. The model can also dynamically adjust thresholds as it learns from new data and labels, which lets it predict future anomalies even more effectively. Generative AI offers a proactive, real-time approach to anomaly detection, a problem where traditional methods produce a high number of false positives. That leads me to the last advantage worth mentioning: reducing false positives, which, as I mentioned, is a huge problem in fraud detection. Generative AI uses sophisticated algorithms, whose inner workings, as we know, we don’t fully understand, to distinguish legitimate anomalies from actual fraud. Its data augmentation and more accurate anomaly detection both help reduce false positives, and its layer of contextual intelligence, which comes from its world knowledge, ultimately minimizes false alerts. Those were some of the advantages of generative AI.
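The idea of thresholds that adjust as new data arrives can be illustrated without any generative model. This sketch is a simple statistical stand-in: it keeps an exponentially weighted mean and variance of a signal (say, one customer’s transaction amounts) and flags values more than `z` standard deviations from the running mean. The class name, `alpha`, `z`, and the warm-up length are illustrative assumptions.

```python
class AdaptiveThreshold:
    """Exponentially weighted mean/variance with a z-score alarm.
    The baseline keeps adapting, so the alarm threshold moves with the data."""

    def __init__(self, alpha=0.1, z=3.0, warmup=5):
        self.alpha = alpha    # weight given to each new observation
        self.z = z            # how many standard deviations count as anomalous
        self.warmup = warmup  # observations to see before raising alarms
        self.n = 0
        self.mean = 0.0
        self.var = 0.0

    def update(self, x):
        """Return True if x looks anomalous against the current baseline,
        then fold x into the baseline."""
        std = self.var ** 0.5
        anomalous = (self.n >= self.warmup and std > 0
                     and abs(x - self.mean) > self.z * std)
        if self.n == 0:
            self.mean = x
        else:
            diff = x - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.n += 1
        return anomalous


detector = AdaptiveThreshold()
flags = [detector.update(v) for v in [100.0, 101.0, 99.0, 100.0, 101.0, 99.0, 100.0, 500.0]]
print(flags[-1])  # True: 500 is far outside the learned baseline
```

Because anomalous values are also folded into the baseline, a sustained new level (for example, a customer whose spending genuinely changes) stops raising alarms after a while. That is the adaptive behavior, but it also means a patient fraudster could shift the baseline, which is one reason richer context matters.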

AI Risk Decisioning

Let me now turn to an approach I’m calling AI risk decisioning. It is powered by generative AI in combination with traditional machine learning, and I think it forms a new foundation for protecting online transactions. I’d like to talk about what I mean by AI risk decisioning, how and why it’s powered by generative AI, and the pillars through which it’s going to change fraud detection. First, this approach is not an incremental or feature-level improvement. It’s a foundational shift in how we think about and approach fraud detection. In summary, AI risk decisioning is uniquely equipped to combat fraud because it combines the power of generative AI with traditional machine learning methods, and adds a layer of understanding we never had before, across virtually unlimited data sources. These are the six pillars of the approach; they should give you a much better understanding of how AI risk decisioning systems are designed and what a well-formed AI risk decisioning platform needs to consider.

1. Knowledge: A 360-Degree Knowledge Fabric

The first pillar is knowledge, which I’ve been referring to as fraud world knowledge: a 360-degree view and knowledge fabric that powers all the other pillars and techniques of the platform. It integrates a large variety of internal data sources that are unique to the company, like transaction records and real-time customer profiles, and marries them with world knowledge from consortium databases, open source intelligence databases, and even academic research and publications. It not only integrates all that data into a holistic view, which we can do with real-time stream processing, but also adds a layer of intelligence, understanding, and, most importantly, reasoning on top, forming a cognitive core for risk management that we never had before. Let me use synthetic payment fraud as an example to explain this concept and the impact of the knowledge fabric. Synthetic payment fraud is a type of fraud where scammers use AI to create fake identities or fake accounts. They then subtly alter transaction data to commit complex fraud, like money laundering, creating fake payment accounts to hide illicit funds and make them look like real transactions.

Up until now, we’ve been using traditional methods to differentiate money laundering from unusual but legitimate transactions. The problem is that these methods have trouble telling the two apart, mostly because they are unfamiliar with this pattern. Remember that synthetic payment fraud is a complex kind of fraud that is itself powered by generative AI and changes shape and form very quickly. Traditional machine learning methods struggle because, as we know, they learn from past data and simply can’t get enough training data quickly enough to detect this fraud. This is where generative AI changes the approach. It has been analyzing a lot of unstructured data in the background and learning to find new patterns, forming an adaptive knowledge fabric that knows the critical features for flagging payment fraud: features like account dormancy before activity, how new the account is, whether the account information was changed, and a whole host of other variables. The important thing is that it knows these features in advance, because it has learned from a lot of data and past trends. It becomes particularly good at distinguishing good behavior from fraudulent behavior as it happens in real time. The AI risk decisioning approach, then, combines traditional machine learning techniques, which I’ll also explain, with generative AI and this fraud knowledge fabric, and continuously updates its models by learning from real-time transaction data and incoming labels. The coolest thing is that this cognitive core is the real source of power: it drives all the other pillars and all the other ways in which you can use generative AI for modern fraud detection.
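The features named above (dormancy before activity, account age, and recent account changes) are straightforward to compute once the data has been integrated. This sketch assumes a hypothetical `Account` record with creation, last-activity, and last-profile-change timestamps and a hypothetical 7-day lookback; it only shows the feature computation, not how a platform would learn which features matter.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Account:
    created_at: datetime
    last_activity_at: Optional[datetime]    # None if the account never transacted
    profile_changed_at: Optional[datetime]  # None if details were never changed


def payment_fraud_features(acct, txn_time, change_lookback=timedelta(days=7)):
    """Compute illustrative payment-fraud features for a transaction at txn_time."""
    reference = acct.last_activity_at or acct.created_at
    recent_change = (acct.profile_changed_at is not None
                     and txn_time - acct.profile_changed_at <= change_lookback)
    return {
        "account_age_days": (txn_time - acct.created_at).days,
        "dormancy_days": (txn_time - reference).days,  # idle time before this activity
        "recent_profile_change": recent_change,
    }


acct = Account(datetime(2023, 1, 1), datetime(2023, 1, 10), datetime(2023, 6, 28))
print(payment_fraud_features(acct, datetime(2023, 7, 1)))
# {'account_age_days': 181, 'dormancy_days': 172, 'recent_profile_change': True}
```

The combination is what matters: a long-dormant account whose details changed days before a large transfer is far more suspicious than any one of those signals alone.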

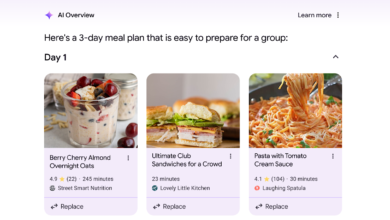

2. Creation: A Natural Language Interface

The second pillar of the AI risk decisioning approach is creating fraud rules or models through a natural language interface. This should feel familiar, since we’ve all been using ChatGPT: a natural language interface lets you create customized workflows, models, and everything else just by describing them. No coding expertise is required, which is a big change from how things are done today. Most powerfully, no real understanding of the underlying shape of the data, or deep analytical skill, is required. For example, say you want to create a model to detect account takeovers. You might specify features, such as tracking suspicious login behavior or deviations from the user’s previous login behavior, or the copilot might already know the relevant features for account takeover. The natural language copilot then translates those requirements into a model automatically. It can then create and test that model and give you its performance, and you make the final decision. You might ask it to create this account takeover model. It already knows what account takeovers are and what matters in detecting them, so it can create a machine learning model as well as the decisioning workflow to detect them. You can ask it to add device intelligence; it knows where to get that device intelligence from and where in the model it should be added. Then you can ask it to backtest the model, which is basically a scenario analysis of how it would have performed had it run in the past. For example, you can ask it to run the model on the past three months of data, and it gives you the results of that scenario analysis. That is what allows teams to make a final decision.
We’re not removing the human from the loop; we’re making the human much better equipped with risk insights. The real power of the natural language interface is that it not only makes fraud programs more scalable, but also democratizes risk management. It allows a much broader team, with a much broader skill set, to tackle fraud.
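Backtesting, as described above, means replaying a candidate rule or model over historical labeled events. This sketch assumes events are dicts paired with a fraud label and uses a hypothetical account takeover rule (a login from a new device after several failed attempts); it reports precision, recall, and the share of events that would have been flagged.

```python
def backtest(rule, history):
    """Replay `rule` over (event, is_fraud) pairs and summarize its performance."""
    tp = fp = fn = 0
    for event, is_fraud in history:
        flagged = rule(event)
        if flagged and is_fraud:
            tp += 1
        elif flagged:
            fp += 1
        elif is_fraud:
            fn += 1
    flagged_total = tp + fp
    return {
        "precision": tp / flagged_total if flagged_total else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "flag_rate": flagged_total / len(history) if history else 0.0,
    }


def ato_rule(event):
    # Hypothetical account-takeover rule: new device plus repeated failed logins.
    return event["new_device"] and event["failed_logins_1h"] >= 3


history = [
    ({"new_device": True, "failed_logins_1h": 5}, True),
    ({"new_device": True, "failed_logins_1h": 4}, False),
    ({"new_device": False, "failed_logins_1h": 0}, False),
    ({"new_device": False, "failed_logins_1h": 6}, True),
]
print(backtest(ato_rule, history))
# {'precision': 0.5, 'recall': 0.5, 'flag_rate': 0.5}
```

The flag rate is a rough proxy for customer friction and recall for fraud caught, so the same summary supports the friction-versus-losses decision a risk team makes before going live.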

3. Recommendation: Auto-recommendations

The third pillar of the AI risk decisioning approach is automatic recommendations. A really powerful capability of this approach is making real-time, automatic recommendations for effective risk management. For example, it might auto-monitor transactions and detect certain trends or anomalies in them. It might suggest relevant features for risk models, as in the account takeover example. It can conduct scenario analysis on its own, so you’re equipped with the results you need to deploy a model with confidence. Most importantly, it can recommend the next best action to optimize performance, which is something risk teams really struggle with, because it requires a ton of experience and a lot of back-and-forth testing.

Here’s an example of recommendations and their power. Take synthetic identity fraud, where fraudsters assemble parts of real identities into a fake identity, which we call a synthetic identity, to commit fraud. The AI risk decisioning approach quickly learns the unique characteristics of this fraud as it happens and can train a specialized machine learning model. This model will have key features for detecting synthetic identity fraud, such as anomalies in application data, the rate of credit applications, or transactions with high-risk entities. It then deploys this advanced model and recommends other features you should add to your decisioning workflow to detect these complex and subtle discrepancies. For example, say you had a simplistic synthetic identity model. The platform first detects a trend proactively and explains the features involved in synthetic identity fraud. It might then recommend adding a model as well as two more features relevant to synthetic identity fraud. What you’re doing is mostly testing those recommendations; you might ask it to remove a certain feature and then give you the A/B test or backtest scenario analysis results. Automatic recommendations really streamline risk model iteration. Remember that risk decisioning and fraud detection is a game of balance and a lot of iteration, because very few features and very few aspects of fraud trends are obvious to the eye. This is what reduces fraud mitigation time from weeks, which is where it is today, down to hours, and in some cases even minutes.

4. Understanding: Human-Understandable Reasoning

The fourth pillar of the AI risk decisioning approach is understanding, which I’m calling human-understandable reasoning. This isn’t as complex as it might sound. It is basically the ability of the AI to explain every decision, recommendation, or insight it provides. The benefit is that risk experts gain an understanding of the different factors that influence risk assessments: because the AI can reason about things, it gives them a rundown of what might be happening. With that knowledge and their past experience, they can spot new patterns, build the necessary defenses, or explain what’s going on to a broader team so they can collaborate more effectively. While this might be underrated, it is what fosters confidence and trust, which is what brings down model iteration time: you know what to expect, because you have the scenario analysis results and a deep understanding of why something might be happening. By shedding light on the why, it elevates risk management from a cat-and-mouse game, where you’re just chasing fraud or risk trends, to a much more proactive and strategic function, thanks to these recommendations and the proactive anomaly detection it can do.

I want to give an example of reasoning as well. Say there’s a 12% increase in the chargeback rate and a jump in the false positive rate, and say this was linked to the launch of a new credit card, in this case a new virtual card. An AI risk decisioning platform will quickly conduct an exhaustive root cause analysis, because it is able to reason about things. It then gives you a rundown of risk factors that might generally apply in this scenario, such as alterations in customer behavior or distinctive transaction patterns, which allows experienced risk operators to understand the key factors involved. Maybe customer buying behavior has truly changed, or maybe a new technique is taking advantage of the new credit card application process. It can generate that explanation and, of course, pair it with proactive recommendations. For example, say you’re watching your dashboard for a particular fraud trend. You can ask it, in plain English, to explain what might be happening. It might tell you that there’s an increase in the chargeback rate and that the model appears to have experienced drift, and recommend that you train a new model. You can then ask which features to train the model on, or what the thresholds should be. Then you can ask it for the model performance metrics, which, with the Python agent, it can produce pretty easily.
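The drift the copilot reports in this example can be quantified in several ways. One widely used metric, swapped in here for illustration rather than taken from the talk, is the population stability index (PSI), which compares the binned distribution of model scores at training time with the current one. The bin edges and the epsilon floor for empty bins are assumptions; a commonly cited rule of thumb treats PSI above roughly 0.2 as a significant shift.

```python
import bisect
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples of model scores.
    `edges` are sorted bin boundaries; empty bins are floored at `eps`."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


train_scores = [0.1] * 50 + [0.6] * 50   # score distribution when the model was trained
recent_scores = [0.1] * 10 + [0.6] * 90  # scores after, say, a new card launch
print(round(psi(train_scores, recent_scores, edges=[0.5]), 3))  # 0.879
```

A PSI alert says only that the inputs or scores have shifted; deciding whether the shift reflects genuinely new customer behavior or a new fraud technique is the root cause question the talk describes.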

5. Guidance: Augmenting Risk Experts

Now let’s look at the fifth pillar, guidance, which is about augmenting risk experts. We’re not removing the human from the loop; we’re simply making them a lot more efficient. The problem is that even the most experienced risk experts are overwhelmed, because of the increasingly complex fraud patterns we see today and the enormous amounts of data involved in digital transactions. Imagine all the transaction data you have to deal with, in addition to the data that comes in when a user first creates an account or first logs in, combined with their browsing behavior, and then married with the transaction data at the final transaction point, where the decision happens. You can only imagine the amount of real-time data they have to deal with. This is where AI risk decisioning serves as an invaluable copilot. It does a few things. First, it gives you real-time intelligence about what might be happening, that root cause analysis. Second, it has specialized knowledge, so it can combine that analysis with what it knows about the particular fraud trend and suggest relevant features or a model to train. Third, it gives you an understanding of the contextual data: as we saw when it explained that fraud trend, it can give you a rundown of the factors that might be at play. Together, these three things allow you to make much better-informed decisions.

I’d like to take an example here as well. Let’s say you’re triaging suspicious ACH transactions. Today, you manually collect the data, maybe from a data warehouse, which works but is somewhat error-prone. You combine that data with the trends you know from the cases you’ve been handling and with the outcomes of past human reviews, and then you literally eyeball associated entities that might be performing similar transactions, which effectively means you’re detecting collusion. An AI risk decisioning platform keeps analyzing transactions in the background, and it quickly identifies complex series of irregularities. The ACH case is actually one of the easier examples: say there is no connection between the beneficiary and the customer, or there are high-value transactions coming from unknown IP addresses or unknown devices. It performs that root cause analysis and, based on it, recommends that you block the transaction. Beyond that, it proactively runs a graph analysis on the related entities, so you can detect the collusion as well. Instead of eyeballing the manual review case, you can ask it to explain why that case was created in the first place. Because you know your own business and the fraud trend, you can then make a decision with that understanding. It then proactively does the graph analysis, which today involves a lot of manual review, and gives you a list of entities that you might want to make a decision on right then and there. That makes fraud detection a lot more scalable, because this kind of graph analysis and quick explanation augments risk experts in what they do.
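The two irregularities named in the ACH example can be expressed as simple checks. The sketch below is a hypothetical rule layer, not the platform’s logic: the `AchTransfer` fields, the $10,000 high-value threshold, and the policy that both signals together mean “block” while one means “review” are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class AchTransfer:
    customer_id: str
    beneficiary_id: str
    amount: float
    ip: str
    device_id: str


def ach_risk_factors(
    t: AchTransfer,
    prior_beneficiaries: Dict[str, Set[str]],  # customer -> beneficiaries paid before
    known_ips: Dict[str, Set[str]],            # customer -> IPs seen before
    known_devices: Dict[str, Set[str]],        # customer -> devices seen before
    high_value: float = 10_000.0,              # assumed threshold
) -> Tuple[str, List[str]]:
    """Return a recommended action plus the human-readable reasons behind it."""
    reasons = []
    if t.beneficiary_id not in prior_beneficiaries.get(t.customer_id, set()):
        reasons.append("no prior connection between customer and beneficiary")
    unknown_origin = (t.ip not in known_ips.get(t.customer_id, set())
                      or t.device_id not in known_devices.get(t.customer_id, set()))
    if t.amount >= high_value and unknown_origin:
        reasons.append("high-value transfer from an unknown IP address or device")
    # Assumed policy: both signals -> block, one -> manual review, none -> allow.
    action = "block" if len(reasons) == 2 else "review" if reasons else "allow"
    return action, reasons
```

Returning the reasons alongside the action mirrors the idea in the talk that a reviewer should be able to ask why a case was created, not just see the verdict.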
The AI risk decisioning approach, as you can imagine, empowers risk experts to be more strategic and much more proactive, based on the reliable insights and explanations it is able to give them.
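The graph analysis step in the ACH example can be approximated, for illustration, by linking accounts that share an attribute such as a device or IP address and collecting the connected components with union-find. This is a generic technique swapped in to show the idea; the edge format and the names `related_entities` and `accounts_to_review` are hypothetical, and a real platform would likely use richer entity types and weighted links.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Node = Tuple[str, str]  # ("acct", account_id) or ("attr", attribute_value)


def related_entities(edges: Iterable[Tuple[str, str]]) -> List[Set[str]]:
    """Group accounts into clusters connected through shared attributes (union-find)."""
    parent: Dict[Node, Node] = {}

    def find(x: Node) -> Node:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: Node, b: Node) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    for account, attribute in edges:
        union(("acct", account), ("attr", attribute))

    clusters: Dict[Node, Set[str]] = defaultdict(set)
    for node in list(parent):
        if node[0] == "acct":
            clusters[find(node)].add(node[1])
    return list(clusters.values())


def accounts_to_review(flagged: str, edges: List[Tuple[str, str]]) -> List[str]:
    """Accounts linked to a flagged account, i.e. candidates for a collusion review."""
    for cluster in related_entities(edges):
        if flagged in cluster:
            return sorted(cluster - {flagged})
    return []


if __name__ == "__main__":
    links = [("A", "device:d1"), ("B", "device:d1"), ("B", "ip:198.51.100.4"),
             ("C", "ip:198.51.100.4"), ("D", "device:d9")]
    # A shares a device with B, and B shares an IP with C, so C is pulled in too.
    print(accounts_to_review("A", links))  # → ['B', 'C']
```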

6. Automation: Risk Automation

The last pillar is about automation, which is probably the easiest pillar to understand. The problem is that risk experts spend a lot of time and effort on repetitive tasks that are nonetheless important: you do need to look at fraud trends, and you really need to look at visual performance summaries. Generative AI, as we know, can automate a lot of reporting tasks with Python agents and similar tools. Because it has been analyzing this information in the background, it can consolidate a lot of it, and it’s good at generating reports. For example, let’s say you have to compile monthly summaries and visual performance reviews. Traditionally, that involves collecting data from a data warehouse or spreadsheet, manually crunching the numbers, and then using a BI tool or Excel to plot the different reports. You can imagine how time-consuming and, frankly, boring that task is. AI risk decisioning completely automates it. You can ask it to generate a trends report based on performance trends. It first gives you a rundown of the performance trends over the last quarter, which is what you asked for, and then goes ahead and creates the report. If you find the report useful, you can ask it to create it at a regular cadence. It’s easy to understand, but it’s an important way to elevate risk management. Those were the six pillars.
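The number-crunching half of that monthly report is easy to picture as code. The sketch below assumes a hypothetical `Decision` record (month, whether the transaction was flagged, whether it was later confirmed as fraud) and computes per-month fraud rate, precision, and recall as a plain-text table. Charting, scheduling at a regular cadence, and the natural-language rundown described in the talk are out of scope here.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Dict, Iterable, List


@dataclass
class Decision:
    month: str     # e.g. "2024-01"
    flagged: bool  # did the model or rules flag the transaction?
    fraud: bool    # was it later confirmed as fraud (e.g. via chargeback or review)?


def monthly_metrics(decisions: Iterable[Decision]) -> List[Dict[str, Any]]:
    """Aggregate decisions into per-month fraud rate, precision, and recall."""
    stats = defaultdict(lambda: {"n": 0, "flagged": 0, "fraud": 0, "tp": 0})
    for d in decisions:
        s = stats[d.month]
        s["n"] += 1
        s["flagged"] += d.flagged
        s["fraud"] += d.fraud
        s["tp"] += d.flagged and d.fraud  # true positive: flagged and confirmed
    rows = []
    for month in sorted(stats):
        s = stats[month]
        rows.append({
            "month": month,
            "transactions": s["n"],
            "fraud_rate": s["fraud"] / s["n"],
            "precision": s["tp"] / s["flagged"] if s["flagged"] else 0.0,
            "recall": s["tp"] / s["fraud"] if s["fraud"] else 0.0,
        })
    return rows


def render(rows: List[Dict[str, Any]]) -> str:
    """Format the metrics as a fixed-width text table."""
    lines = [f"{'month':<8} {'txns':>6} {'fraud':>7} {'prec':>5} {'recall':>6}"]
    for r in rows:
        lines.append(f"{r['month']:<8} {r['transactions']:>6} {r['fraud_rate']:>7.2%} "
                     f"{r['precision']:>5.2f} {r['recall']:>6.2f}")
    return "\n".join(lines)
```

The same rows could be handed to a plotting library to produce the visual summaries; that step is left out to keep the sketch dependency-free.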

Recap

Finally, I’d like to conclude by saying that generative AI is not a silver bullet. A common misconception in the industry is that it’s going to replace machine learning methods for fraud detection. That’s simply not true. I think generative AI and traditional machine learning offer complementary strengths, for fraud and risk management in particular. Traditional machine learning excels at pattern recognition based on historical data. It is great at flagging known kinds of fraud that have been encountered before, so you don’t forget what has happened in the past. It does struggle with fast-evolving forms of risk, because it has no inherent knowledge: machine learning models are trained from scratch, so they bring no prior knowledge with them and can’t reason about things; they can only predict. Generative AI, on the other hand, shines when labeled data is limited or when forms of risk evolve quickly. It is uniquely equipped to generate new data or new labels from minimal examples. It analyzes vast amounts of structured and unstructured data and builds a knowledge fabric. That knowledge fabric is adaptive, which is what allows it to keep recognizing new patterns and anomalies and to learn from them. An AI risk decisioning platform must integrate insights from both traditional models and generative AI to form a more comprehensive understanding. Combining the two is what makes it much more effective than using either technology in isolation.

Conclusion

It’s useful: this approach has a 10x impact on fraud and risk operations. It improves model accuracy and reduces false positives. It makes humans dramatically more efficient. It speeds up risk operations from the weeks and months they take today to hours. That is what I’ve been working on at Oscilar: building the first AI risk decisioning platform. Hopefully, based on that experience, you took away some insights about generative AI and how it is changing fraud prevention.
