Get Acquainted with Open Source Platforms for Generative AI

Generative AI is the future, and developers can choose from many open source platforms to build and deploy AI models. In fact, they may even be spoilt for choice.

Generative AI refers to a set of models that take a description of what we want, such as a text prompt, and generate content to match it. It accelerates ideation, helps bring ideas to life, and frees us to spend more time being creative. It can create a wide variety of data, including images, videos, audio, text and 3D models.

Generative AI is powered by foundation models (large AI models) that can perform tasks such as summarisation, Q&A and classification out of the box. This enables faster product development, enhanced customer experience and improved employee productivity.

Industry adoption of generative AI

According to Gartner, “Any organisation in any industry, especially those with very large amounts of data, can use AI for business value.” Generative AI is primed to make an increasingly strong impact on enterprises over the next five years.

- By 2025, 30% of enterprises will have implemented an AI-augmented development and testing strategy, up from 5% in 2021. – Gartner

- By 2026, generative design AI will automate 60% of the design effort for new websites and mobile apps. – Gartner

- By 2027, nearly 15% of new applications will be automatically generated by AI without a human in the loop. This is not happening at all today. – Gartner

- Generative AI coding support can help software engineers develop code 35 to 45 per cent faster, refactor code 20 to 30 per cent faster, and perform code documentation 45 to 50 per cent faster. – McKinsey

- Forty-four per cent of workers’ core skills are expected to change in the next five years. Training employees to leverage generative AI is going to be critical. – World Economic Forum

Key characteristics of generative AI technology

- Text management: Generative AI can complete a given text coherently. It can translate text from one language to another, summarise it concisely, and generate text that mimics human writing.

- Contextual understanding: Generative AI can take the context of a conversation or document into account when responding.

- Natural language processing (NLP): It can perform various NLP tasks, understanding and processing human language so that users can ask questions conversationally.

- Question answering: Generative AI can answer questions based on a knowledge base.

- Personalisation: Generative AI can be fine-tuned for specific use cases.

- Multi-language support: Generative AI can perform NLP tasks in multiple languages.

- Advanced semantic search: It enables semantic search through large volumes of structured and unstructured data, including databases and documents. It also supports automatic data indexing using custom AI/ML-based data processing.

- Content moderation: Generative AI platforms can include smart content filtering and a moderation engine for queries and responses, which can be trained on enterprise data.

- Integration: Generative AI can be integrated with various applications and data sources within an organisation, including CRM, ERP, and other proprietary systems, to access and analyse data via APIs.

- Role-based access control: The system can be set up with role-based access control, ensuring that users can access and query only data that they are authorised to view.

- Software coding: It helps with code generation, translation, explanation and verification.
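The semantic search and role-based access control points above can be combined in a small sketch. It is a minimal illustration under stated assumptions: the document store, role names and `search` function are hypothetical, and a simple bag-of-words vector stands in for the learned embedding model a real semantic search system would use (so, unlike true semantic search, it only matches shared words). Documents the caller is not authorised to view are filtered out before ranking.

```python
import math
import re
from collections import Counter

# Hypothetical document store: each document lists the roles allowed to read it.
DOCUMENTS = [
    {"id": "hr-1", "text": "Annual leave policy and holiday entitlement", "roles": {"hr", "admin"}},
    {"id": "fin-1", "text": "Quarterly revenue forecast and budget", "roles": {"finance", "admin"}},
    {"id": "pub-1", "text": "Office holiday opening hours for visitors",
     "roles": {"hr", "finance", "admin", "staff"}},
]

def embed(text: str) -> Counter:
    """Bag-of-words vector: a stand-in for a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(query: str, role: str, top_k: int = 2) -> list[str]:
    """Rank only the documents this role may see (role-based access control)."""
    q = embed(query)
    allowed = [d for d in DOCUMENTS if role in d["roles"]]
    ranked = sorted(allowed, key=lambda d: cosine(q, embed(d["text"])), reverse=True)
    # Drop documents with no similarity at all.
    return [d["id"] for d in ranked[:top_k] if cosine(q, embed(d["text"])) > 0]
```

For example, a user with the `staff` role searching for "holiday policy" only sees `pub-1`, while an `hr` user also gets `hr-1`, ranked first. Filtering before ranking, rather than after, means restricted documents are never scored or returned.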

Generative AI architectures and datasets

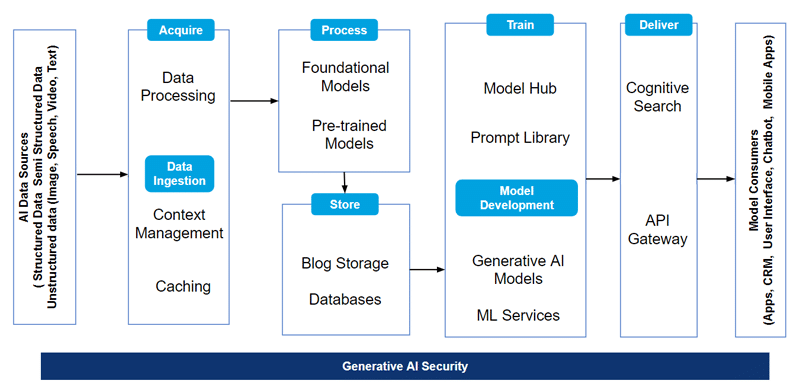

Figure 1 shows the contextual architecture of generative AI and the stages involved: acquire, process, store, train and deliver.

AI data sources: These provide the insight required to solve business problems. The data is structured, semi-structured or unstructured, and comes from many sources.

Acquire: In this stage, AI-based solutions ingest all types of data from a variety of sources. Context management supplies the models with relevant information from enterprise data sources, and caching enables faster responses.

Process: Generative AI uses foundation models. These are trained on huge quantities of unstructured, unlabelled data so that they can perform a wide range of tasks and act as a platform for other models. Large language models (LLMs) are foundation models specialised in processing and generating large amounts of text. The process of further training a pre-trained model on a specific task or dataset to adapt it to a particular application or domain is called fine-tuning. Foundation models are fine-tuned for domain adaptation, and to perform specific tasks better, through short periods of training on labelled data.
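To make fine-tuning concrete, here is a toy sketch in which a three-weight logistic regression stands in for a foundation model. The data, learning rate and epoch counts are invented for illustration: the model is first "pre-trained" on a general task, then training simply continues from those weights on a small labelled dataset from a new domain.

```python
import math

def predict(w: list[float], x: list[float]) -> float:
    """Logistic model: probability that input x belongs to the positive class."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    return 1 / (1 + math.exp(-z))

def train(w: list[float], data, lr: float = 0.5, epochs: int = 200) -> list[float]:
    """Stochastic gradient descent on log loss, starting from weights w."""
    w = list(w)  # copy, so the starting (pre-trained) weights are not modified
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

# Each input is [bias, feature_a, feature_b]; all data here is invented.
general_data = [([1, 1, 0], 1), ([1, -1, 0], 0), ([1, 1, 1], 1), ([1, -1, 1], 0)]
domain_data = [([1, 0, 1], 1), ([1, 0, -1], 0)]  # small labelled domain set

pretrained = train([0.0, 0.0, 0.0], general_data)       # "pre-training"
fine_tuned = train(pretrained, domain_data, epochs=50)  # short fine-tuning run
```

Fine-tuning here is nothing more than calling `train` again with the pre-trained weights as the starting point and far fewer epochs. Real fine-tuning works on billions of weights, but the principle of continuing training from pre-trained weights is the same.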

Store: This stage stores the acquired and processed data so that it is available for training and delivery.

Train: This stage primarily consists of a model hub, blob storage, databases, and a prompt library. The model hub holds trained and approved models that can be provisioned on demand, and acts as a repository for model checkpoints, weights, and parameters.
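A model hub can be pictured as a registry that maps a model name and version to its stored checkpoint and only hands out approved models. The sketch below is a hypothetical, in-memory illustration: the `ModelHub` and `ModelEntry` classes and the `blob://` URI scheme are invented for this example, and a real hub would persist its entries and keep the weights themselves in blob storage.

```python
from dataclasses import dataclass, field

@dataclass
class ModelEntry:
    name: str
    version: str
    checkpoint_uri: str  # where the weights live (hypothetical URI scheme)
    approved: bool = False

@dataclass
class ModelHub:
    entries: dict[tuple[str, str], ModelEntry] = field(default_factory=dict)

    def register(self, entry: ModelEntry) -> None:
        """Add a trained model; it cannot be provisioned until approved."""
        self.entries[(entry.name, entry.version)] = entry

    def approve(self, name: str, version: str) -> None:
        self.entries[(name, version)].approved = True

    def provision(self, name: str, version: str) -> ModelEntry:
        """Hand out a model on demand, but only if it has been approved."""
        entry = self.entries[(name, version)]
        if not entry.approved:
            raise PermissionError(f"{name}:{version} has not been approved")
        return entry
```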

Prompt engineering is the process of designing, refining, and optimising input prompts to guide a generative AI model towards the desired outputs.
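Prompt engineering often ends in reusable templates kept in a prompt library. A minimal sketch using Python's standard `string.Template`, assuming a hypothetical `PROMPT_LIBRARY` dictionary and `build_prompt` helper:

```python
from string import Template

# Hypothetical prompt library: reviewed, reusable templates keyed by task name.
PROMPT_LIBRARY = {
    "summarise": Template(
        "You are a helpful assistant for $company.\n"
        "Summarise the following text in at most $max_words words.\n"
        "Text:\n$text"
    ),
}

def build_prompt(task: str, **values: object) -> str:
    """Fill a library template; substitute() raises KeyError if a value is missing."""
    return PROMPT_LIBRARY[task].substitute(**values)
```

Keeping refined prompts in one library means every application sends the model the same tested instructions, and `substitute()` fails loudly if a placeholder is left unfilled.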

Deliver: This stage covers cognitive search, giving the model access to the right data at the right time to produce accurate output. Stakeholders interact with the enterprise through an API gateway, which acts as a single point of entry for consumers to access back-end services.
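The API gateway pattern can be sketched as a single function that authenticates every request once and then routes it to the right back-end service. Everything here is hypothetical: the routes, the services and the hard-coded API key are placeholders, and a real deployment would use a dedicated gateway product rather than hand-written routing.

```python
from typing import Callable

# Hypothetical back-end services behind the gateway.
def search_service(payload: dict) -> dict:
    return {"results": [f"doc matching {payload['query']}"]}

def chat_service(payload: dict) -> dict:
    return {"reply": f"echo: {payload['message']}"}

ROUTES: dict[str, Callable[[dict], dict]] = {
    "/search": search_service,
    "/chat": chat_service,
}

VALID_KEYS = {"demo-key-123"}  # hypothetical API keys

def gateway(path: str, api_key: str, payload: dict) -> dict:
    """Single entry point: authenticate once, then route to the right back end."""
    if api_key not in VALID_KEYS:
        return {"status": 401, "error": "invalid API key"}
    handler = ROUTES.get(path)
    if handler is None:
        return {"status": 404, "error": f"no route for {path}"}
    return {"status": 200, "body": handler(payload)}
```

Because consumers only ever call `gateway`, back-end services can be added or replaced by editing `ROUTES` without changing any client.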

AI security: This layer secures the platform end to end. AI security must cover strategy, planning and intellectual property, and the generative AI platform needs to provide robust encryption, multi-level user access, authentication, and authorisation.

Model consumers: Various stakeholders, both internal and external, are part of this layer. They are the primary users of the systems.

Use cases of generative AI

Healthcare and pharma

Generative AI based applications help healthcare professionals be more productive, identify potential issues upfront, and gain insights that support interconnected health and better patient outcomes. They help in:

- Processing claims, scheduling appointments, and managing medical records

- Writing patient health summaries

- Improving the speed and quality of care, as well as medication adherence

- Developing individualised treatment plans based on a patient’s genetic makeup, medical history, lifestyle, etc.

- Building healthcare virtual assistants

Manufacturing

Generative AI enables manufacturers to get more value from their data, leading to advances in predictive maintenance and demand forecasting. It also helps in simulating manufacturing quality and improving production speed and material efficiency.

Retail

Generative AI helps in personalising offerings, managing brands, and optimising marketing and sales activities.

- Personalised offerings: Enables retailers to deliver customised experiences, offerings, pricing, and planning. It also helps in modernising the online and physical buying experience.

- Dynamic pricing and planning: It helps predict demand for different products, providing greater confidence for pricing and stocking decisions.

Banking

Generative AI applications help deliver a personalised banking experience to customers. They enable risk analytics and fraud prevention, risk mitigation and portfolio optimisation, as well as customer pattern analysis and customer financial planning.

Insurance

Generative AI’s ability to analyse and process large amounts of data helps in accurate risk assessment and effective claims processing. Relevant data includes customer feedback, claims records and policy records.

Education

Generative AI helps to connect teachers and students. It also enables collaboration between teachers, administrators and technology innovators to help educational institutions provide better education.

Telecommunications

Generative AI adoption by the telecom industry improves operational efficiency and network performance. In this industry, generative AI can be used to:

- Analyse customer purchasing patterns

- Offer personalised recommendations of services

- Enhance sales

- Manage customer loyalty

- Give insights into customer preferences

- Improve data and network security, enhancing fraud detection

Public sector

The goal of digital governments is to establish a connected government and provide better citizen services. Generative AI enables these citizen services to be delivered more effectively while protecting confidential information.

- Smart cities: Generative AI helps in toll management, traffic optimisation, and sustainability.

- Better citizen services: It helps to provide citizens with easier access to connected government services through tracking, search, and conversational bots.

Open source platforms for generative AI

There are numerous open source frameworks and LLMs available for generative AI. Here is a brief introduction to a few of them, along with two well-known proprietary models, PaLM 2 and GPT-3.

TensorFlow: This is Google’s end-to-end open source platform for machine learning, offering both low-level APIs and high-level ones such as Keras. It provides a robust platform for developing and deploying generative AI models that can create realistic and diverse content, such as images, text, audio, and video. It can handle deep neural networks for image recognition, handwritten digit classification, recurrent neural networks, NLP, word embeddings and PDE (partial differential equation) simulation.

Falcon LLM: Released in September 2023 by the Technology Innovation Institute (TII), Falcon 180B is a foundational large language model (LLM) with 180 billion parameters, trained on 3.5 trillion tokens. It generates human-like text, translates languages, and answers questions.

OpenMMLab: This open source computer vision algorithm system includes MMagic, a Multimodal Advanced, Generative, and Intelligent Creation toolbox. OpenMMLab provides high-quality libraries that reduce the difficulty of reimplementing algorithms, along with efficient deployment toolchains targeting a variety of back ends and devices. It has released 30+ vision libraries, implemented 300+ algorithms, and contains 2000+ pretrained models.

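Llama 2: Meta AI released Llama 2 in July 2023. This family of foundation language models, ranging from 7 billion to 70 billion parameters, was trained on 2 trillion tokens of publicly available data. It is designed for natural language generation, and its fine-tuned Llama 2-Chat variants are optimised for dialogue.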

Flan-T5: Released by Google in October 2022, this open source LLM is available for commercial use. It is a T5 model fine-tuned on the Flan collection of datasets to improve performance: more than 1,800 tasks were phrased as instructions and used to fine-tune the model.
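The idea of phrasing tasks as instructions can be illustrated by converting a labelled example into an instruction-and-answer pair. The templates and the `to_instruction` helper below are hypothetical and written for this example; they are not taken from the actual Flan collection.

```python
# Hypothetical instruction templates in the spirit of instruction tuning.
TEMPLATES = {
    "sentiment": "Is the sentiment of the following review positive or negative?\n\n{review}",
    "translate": "Translate the following sentence to {target}:\n\n{sentence}",
}

def to_instruction(task: str, example: dict) -> tuple[str, str]:
    """Turn a labelled example into an (instruction input, target output) pair."""
    prompt = TEMPLATES[task].format(**example["inputs"])
    return prompt, example["label"]
```

Applying many such templates across many datasets yields a single instruction-following training set, which is what lets one model handle tasks it was never explicitly trained on.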

Mistral 7B: Released by Mistral AI in September 2023, this is a pretrained generative text model with 7 billion parameters. It performs well on mathematics, code generation, and reasoning tasks, and is suitable for real-time applications where quick responses are essential. Mistral is multilingual, which makes it a flexible model for a range of applications and users.

PaLM 2: Released by Google in May 2023, this language model has strong multilingual and reasoning capabilities. It is not open source; it is accessed through Google’s APIs.

Code Llama: Released by Meta in August 2023, this model is designed for general code synthesis and understanding. It is available in 7, 13 and 34 billion parameter sizes.

Phi-2: Released by Microsoft Research in December 2023, this 2.7 billion parameter language model can be prompted using a QA format, a chat format, or a code format.

BLOOM: Released in 2022 by the BigScience research workshop, this is an autoregressive LLM trained on large amounts of textual data to continue text from a prompt. The model has 176 billion parameters and can write in 46 natural languages and 13 programming languages.
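The autoregressive loop, in which each generated token is appended to the input before the next one is predicted, can be shown with a deliberately tiny model. This sketch assumes word-level bigram counts and greedy decoding in place of a real transformer and tokeniser; the function names are hypothetical and only the generation loop is representative.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count which word follows which: a tiny stand-in for a language model."""
    words = corpus.split()
    model: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1
    return model

def generate(model: dict[str, Counter], prompt: str, max_new_tokens: int = 5) -> str:
    """Autoregressive decoding: repeatedly predict the next word from the last one."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        candidates = model.get(tokens[-1])
        if not candidates:
            break  # no known continuation
        tokens.append(candidates.most_common(1)[0][0])  # greedy choice
    return " ".join(tokens)

corpus = "generative models extend the prompt one token at a time"
model = train_bigrams(corpus)
```

With this corpus, `generate(model, "extend the")` greedily continues to "extend the prompt one token at a", stopping after five new tokens.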

GPT-3: This NLP model, released by OpenAI in 2020, is a generative pre-trained transformer with 175 billion parameters that uses deep learning to generate human-like text. It is not open source; it is available through OpenAI’s API. It can perform many tasks from just an instruction or a few examples in the prompt, without task-specific training, which makes it useful for natural language processing, machine translation, question answering, summarisation, etc.

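BERT: BERT stands for Bidirectional Encoder Representations from Transformers. Released by Google in 2018, this LLM (340 million parameters in its large version) uses a transformer-based neural network to understand language by reading the context on both sides of each word. Unlike the generative models above, it is an encoder-only model used for language understanding rather than text generation. It performs various NLP tasks like text classification, question answering, and named entity recognition.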

Other open source LLMs include OPT-175B, XGen-7B, GPT-NeoX, and Vicuna-13B.

In summary, it is very important to choose the right LLMs, architecture, and open source frameworks when building generative AI solutions for industry. With so much choice, this can be a challenging task.

Benefits of open source LLMs include:

- Enhanced data security and privacy

- Better ROI

- Reduced vendor dependency

- Code transparency and language model customisation

- Active community support that fosters innovation

However, LLM technology is still new, not yet well understood, and continues to evolve.