Giving Household Robots Common Sense

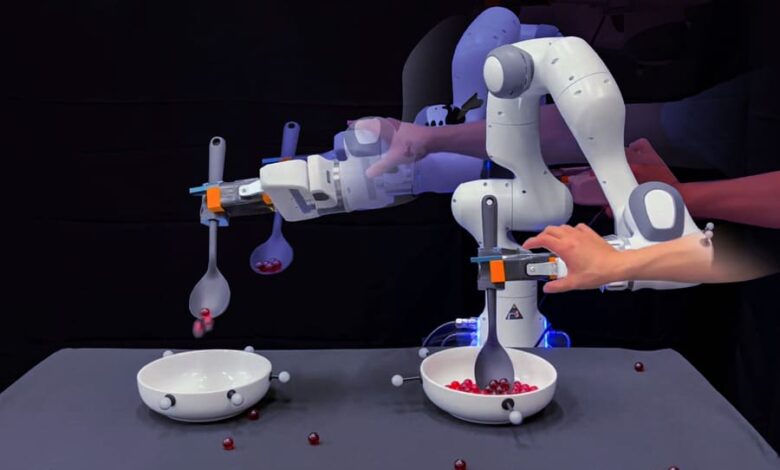

From wiping up spills to serving up food, robots are being taught to carry out increasingly complicated household tasks. Many such home-bot trainees are learning through imitation; they are programmed to copy the motions that a human physically guides them through.

It turns out that robots are excellent mimics. But unless engineers also program them to adjust to every possible bump and nudge, robots don’t necessarily know how to handle such disruptions, short of starting their task over from the beginning.

Now, MIT engineers are aiming to give robots a bit of common sense when faced with situations that push them off their trained path. They’ve developed a method that connects robot motion data with the “common sense knowledge” of large language models (LLMs).

Their approach enables a robot to logically parse a given household task into subtasks and to physically adjust to disruptions within a subtask, so that the robot can move on without having to go back and start the task from scratch, and without engineers having to explicitly program fixes for every possible failure along the way.

“Imitation learning is a mainstream approach to enabling household robots. But if a robot is blindly mimicking a human’s motion trajectories, tiny errors can accumulate and eventually derail the rest of the execution,” said graduate student Yanwei Wang. “With our method, a robot can self-correct execution errors and improve overall task success.”

Here is an exclusive Tech Briefs interview — edited for length and clarity — with Wang.

Tech Briefs: What was the biggest technical challenge you faced while developing this algorithm?

Wang: I think one thing you need to know for sure is how to prompt large language models to generate discrete plans. You need some expertise in prompting the large language model to give you the task specification as a sequence of steps. That’s one challenge. The other challenge is that we ask humans to give us a couple of successful demonstrations, and on top of that, we need a way to perturb replays of those demonstrations to learn the mode boundaries.
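To make the first challenge concrete, here is a minimal Python sketch of what asking an LLM for a discrete plan might look like. The prompt wording and the helper names `build_plan_prompt` and `parse_plan` are hypothetical, and the actual model call is deliberately left out; the point is only that a free-form reply has to be turned into an explicit list of steps before a robot can use it.

```python
import re

def build_plan_prompt(task: str) -> str:
    """Build a (hypothetical) prompt asking an LLM for a numbered list of discrete steps."""
    return (
        f"Task: {task}\n"
        "Break this task into a short numbered list of discrete steps, "
        "one short verb phrase per line (for example, '1. reach the bowl').\n"
        "Return only the list."
    )

# Matches lines such as "1. Reach the bowl" or "2) scoop".
STEP_LINE = re.compile(r"^\s*\d+[.)]\s*(.+?)\s*$")

def parse_plan(reply: str) -> list[str]:
    """Keep only the numbered lines of the model's reply, normalized to lowercase."""
    steps = []
    for line in reply.splitlines():
        match = STEP_LINE.match(line)
        if match:
            steps.append(match.group(1).lower())
    return steps

reply = "1. Reach the marble bowl\n2) Scoop the marbles\nHope this helps!\n3. Transport to the target bowl"
print(parse_plan(reply))
# ['reach the marble bowl', 'scoop the marbles', 'transport to the target bowl']
```

Discarding unnumbered chatter such as “Hope this helps!” is one small guard against the loose formatting LLMs often produce; asking for a fixed format and then validating it is a simple instance of the prompting care Wang mentions.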

Perturbing those replays requires a reset mechanism. For example, when the robot is scooping the marbles and a perturbation occurs, it might drop the marbles and fail the task. You then need to somehow reset the robot to a state where, for example, it picks up the marbles and does the task again. Building that reset mechanism requires some engineering.
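The perturb-and-reset loop can be illustrated with a toy 1-D simulation. Everything here is an assumption for illustration: the waypoint list `DEMO`, the scoop index `SCOOP_STEP`, the spill threshold, and the `reset()` function stand in for a real robot, a real demonstration, and the engineered reset Wang describes. Each trial resets, replays the demonstration open-loop with one random push, and records whether the marbles arrived.

```python
import random
from dataclasses import dataclass

# Toy 1-D setup; every value here is hypothetical.
DEMO = [i / 10 for i in range(11)]  # recorded spoon positions, replayed in order
SCOOP_STEP = 2                      # waypoint index where the marbles are scooped
SPILL_THRESHOLD = 0.15              # pushes larger than this spill held marbles

@dataclass
class State:
    x: float
    holding: bool

def reset() -> State:
    """Hypothetical reset: marbles back in the bowl, spoon at the first waypoint."""
    return State(x=DEMO[0], holding=False)

def replay_with_perturbation(push_step: int, push: float) -> bool:
    """Reset, replay the demo open-loop with one push, and report success."""
    state = reset()
    for t, waypoint in enumerate(DEMO):
        state.x = waypoint               # blindly follow the recorded motion
        if t == SCOOP_STEP:
            state.holding = True         # the scoop happens at this waypoint
        if t == push_step:
            state.x += push              # external nudge
            if state.holding and abs(push) > SPILL_THRESHOLD:
                state.holding = False    # marbles lost; the replay does not notice
    return state.holding                 # success only if marbles are still held at the end

def collect(n_trials: int, seed: int = 0) -> list[tuple[int, float, bool]]:
    """Run many perturbed replays and record (push_step, push, success)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_trials):
        step = rng.randrange(len(DEMO))
        push = rng.uniform(-0.3, 0.3)
        data.append((step, push, replay_with_perturbation(step, push)))
    return data

results = collect(200)
print(sum(ok for _, _, ok in results), "of", len(results), "perturbed replays succeeded")
```

In this toy, pushes before the scoop never cause failure while large pushes after it always do, so the success and failure labels expose a boundary between the reaching and carrying phases. Learning such boundaries from labeled outcomes is what the perturbations are for; the classifier that would learn them is not shown here.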

Tech Briefs: Can you explain in simple terms how it works?

Wang: So, there are two big ideas. One is about how we collect human demonstrations by teleoperating the robot to do different tasks. However, those demonstrations are typically not labeled. The demonstrator gives you only one continuous motion demonstration without telling you, for example, ‘This part corresponds to reaching something, this part corresponds to scooping something, and that part corresponds to transporting the thing that you just scooped.’

Should a disruption occur, which is very typical in machine learning, the robot starts doing the right thing but then gradually drifts from the trajectory it is trying to imitate. Then, without labels, without segmenting the demonstration into discrete steps, you won’t be able to replan and correct yourself. For example, while transporting the marbles, if you spill them, blindly cloning or imitating the motion demonstration will take the spoon to the goal, but it will not drop any marbles into the target bowl because you already lost them.

The correct behavior would be that the moment you realize you dropped the marbles, you should go back and rescoop; that replanning capability — that common sense — is not there in the motion demonstration. But large language models have a lot of common-sense knowledge about how to react and replan should something bad happen. We want to tap into the knowledge of large language models to help the motion demonstration be more robust.
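One simple way to express the “go back and rescoop” behavior is a reactive plan executor: each plan step gets a precondition on the current state, and at every tick the robot runs the latest step whose precondition holds. This is a sketch of that classic idea, not the method described in the interview; the `World` fields, step names, and preconditions are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class World:
    """Hypothetical symbolic summary of the robot's current state."""
    at_marble_bowl: bool
    at_target_bowl: bool
    holding: bool      # marbles are in the spoon
    delivered: bool    # marbles are in the target bowl

@dataclass(frozen=True)
class Step:
    name: str
    precondition: Callable[[World], bool]

# Plan in execution order; each step states what must be true to run it.
PLAN = [
    Step("reach", lambda w: True),
    Step("scoop", lambda w: w.at_marble_bowl),
    Step("transport", lambda w: w.holding),
    Step("pour", lambda w: w.holding and w.at_target_bowl),
    Step("done", lambda w: w.delivered),
]

def choose_step(world: World) -> str:
    """Run the latest plan step whose precondition holds in the current state."""
    for step in reversed(PLAN):
        if step.precondition(world):
            return step.name
    return PLAN[0].name  # not reached here, since "reach" always applies

# Marbles spilled halfway to the target: fall back to reaching, not a full restart.
spilled = World(at_marble_bowl=False, at_target_bowl=False, holding=False, delivered=False)
print(choose_step(spilled))  # reach
```

If the marbles spill mid-transport, `holding` becomes false, neither “transport” nor “scoop” applies, and the executor falls back to “reach” and then “scoop” without restarting the whole task.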

The issue with using a large language model for planning is that its knowledge lives in the language space; it’s not grounded in the physical space. So, when a large language model says, ‘When you drop something, you should go back and rescoop,’ what does the word rescoop mean? Our work tries to ground that language plan in the continuous physical space, so that when the large language model says those things, we can translate them into part of the demonstration. That is how we build a bridge between the language model and the demonstration space.
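Grounding can be pictured as attaching a classifier over the continuous robot state to each language step, then using those classifiers to cut an unlabeled demonstration into labeled segments. In the sketch below, `classify_mode` uses hand-picked thresholds on a hypothetical 1-D spoon position `x` and a `holding` flag as a stand-in for boundaries that would, in practice, be learned from data such as perturbed replays.

```python
from itertools import groupby

# Hypothetical 1-D state: marble bowl at x = 0.0, target bowl at x = 1.0.
def classify_mode(x: float, holding: bool) -> str:
    """Stand-in for learned mode classifiers: map a continuous state to a plan step."""
    if holding:
        return "pour" if x >= 0.9 else "transport"
    return "scoop" if x <= 0.1 else "reach"

def segment(trajectory: list[tuple[float, bool]]) -> list[tuple[str, int, int]]:
    """Label every state, then merge consecutive equal labels into (step, first, last) segments."""
    labels = [classify_mode(x, holding) for x, holding in trajectory]
    segments = []
    start = 0
    for step, run in groupby(labels):
        length = sum(1 for _ in run)
        segments.append((step, start, start + length - 1))
        start += length
    return segments

demo = [(0.5, False), (0.3, False), (0.05, False), (0.0, True), (0.5, True), (0.95, True)]
print(segment(demo))
# [('reach', 0, 1), ('scoop', 2, 2), ('transport', 3, 4), ('pour', 5, 5)]
```

Once each segment carries a step name, a language-level instruction such as “rescoop” can be mapped back to the stretch of demonstration labeled “scoop”.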

Tech Briefs: Where does the team go from here? What’s next for you?

Wang: Right now, the scooping task, although it’s very simple, really captures the multi-step aspect that’s common in household tasks. The next step for me is to enable the technology to do actual household tasks. So, we’re building some mini-kitchens in the lab and trying to figure out how to get our robot to do various tasks, for example, putting dishes in a dishwasher or opening the fridge and taking out some food items: the kinds of tasks that are typical in a household environment. We’re trying to scale it up to show that capability.

Tech Briefs: You may have just answered this, but what are your next steps? Do you have any plans for further research work, etc.?

Wang: There’s still a lot to be done. Grounding language models in the physical space is an open research area. I think my method provides one way to go about it, but it’s far from being solved. Another big bottleneck is collecting lots of human demonstrations in the real world in a realistic environment, like the one I just mentioned.

We’re collecting data in real environments and then deploying the robot. There’s a gap between collecting the data and assuming that the robot will suddenly be able to do the task, vs. what actually happens when you run the robot in those environments using the data you collected. So, we’re trying to see how big that gap is using our method, and then find concrete ways to close it.

Tech Briefs: Do you have any advice for engineers or researchers aiming to bring their ideas to fruition, broadly speaking?

Wang: First, think about what kinds of tasks you want to solve. Then decide what the core challenge of that task is. Next, scale it down to a minimally viable problem that still captures that core.

An example would be the grand vision that motivated our researchers: bringing robots into household environments so that they can do chores for humans. But we didn’t tackle that head-on right away. We identified that the core challenge of those tasks is their multi-step structure. So, we had to decide how to simplify the problem and scale it down without sacrificing that multi-step structure; that’s why we focused on the scooping task. It’s simple enough to iterate on quickly, and, at the same time, it has this multi-step structure.