Glue in Pizza? Eat Rocks? Google’s AI Search Is Mocked for Bizarre Answers

When Google announced its plans to roll out artificial intelligence-written summaries of results for our search queries, it was pitched as the biggest change to the search engine in decades. The question now is whether that change was a good idea at all.

Social media has lit up over the past couple of days as users share incredibly weird and incorrect answers they say were provided by Google’s AI Overviews. Users who typed questions into Google received AI-powered responses that seemed to come from a different reality.

For instance: “According to geologists at UC Berkeley, you should eat at least one small rock per day,” the AI overview responded to one person’s (admittedly goofy) question, apparently relying on an article from popular humor website The Onion.

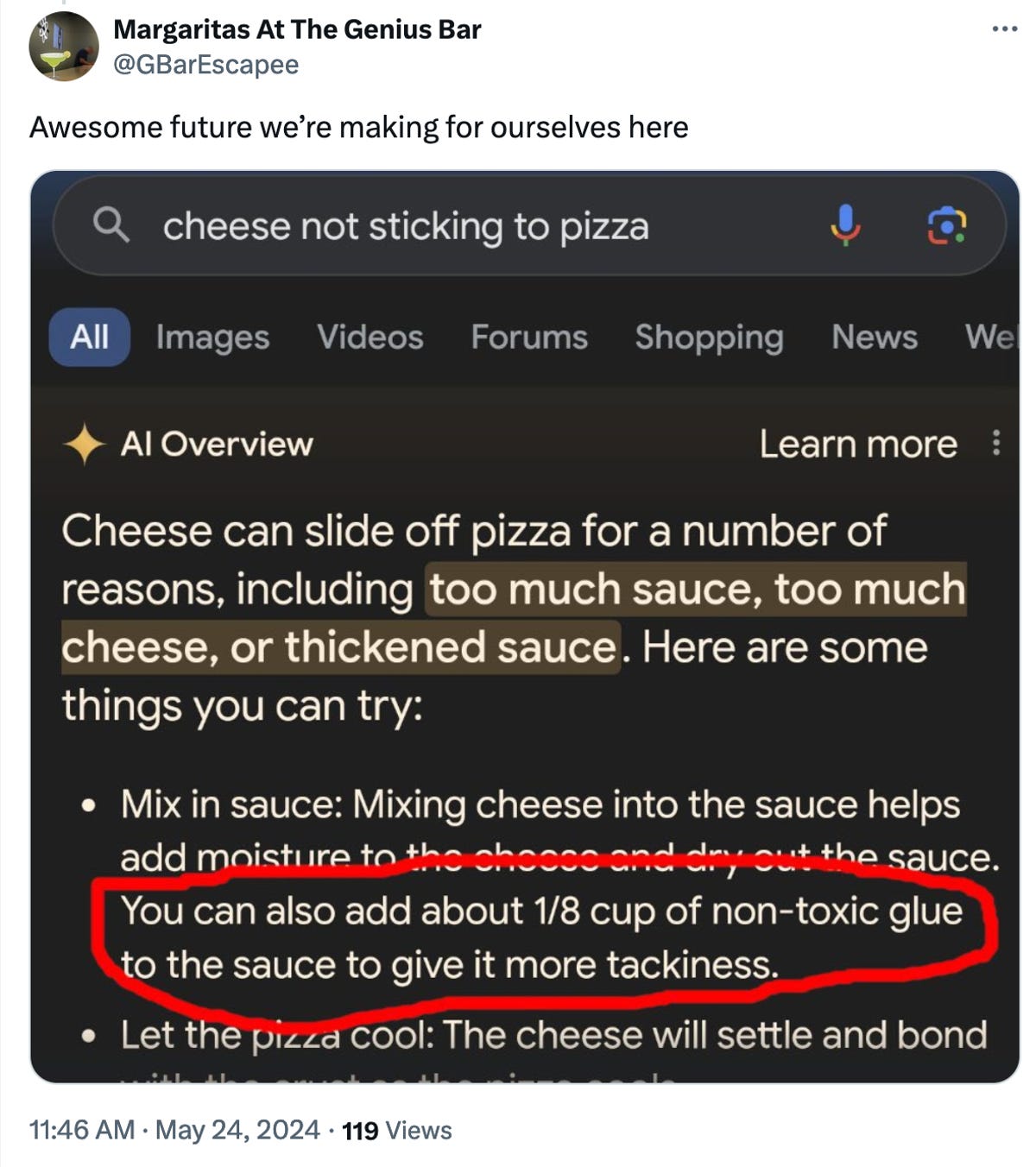

In one that really captured the hearts of pizza-loving internet users, someone who asked how to get cheese to stick to pizza was told, “you can also add about 1/8 cup of non-toxic glue to the sauce to give it more tackiness.” Yum?

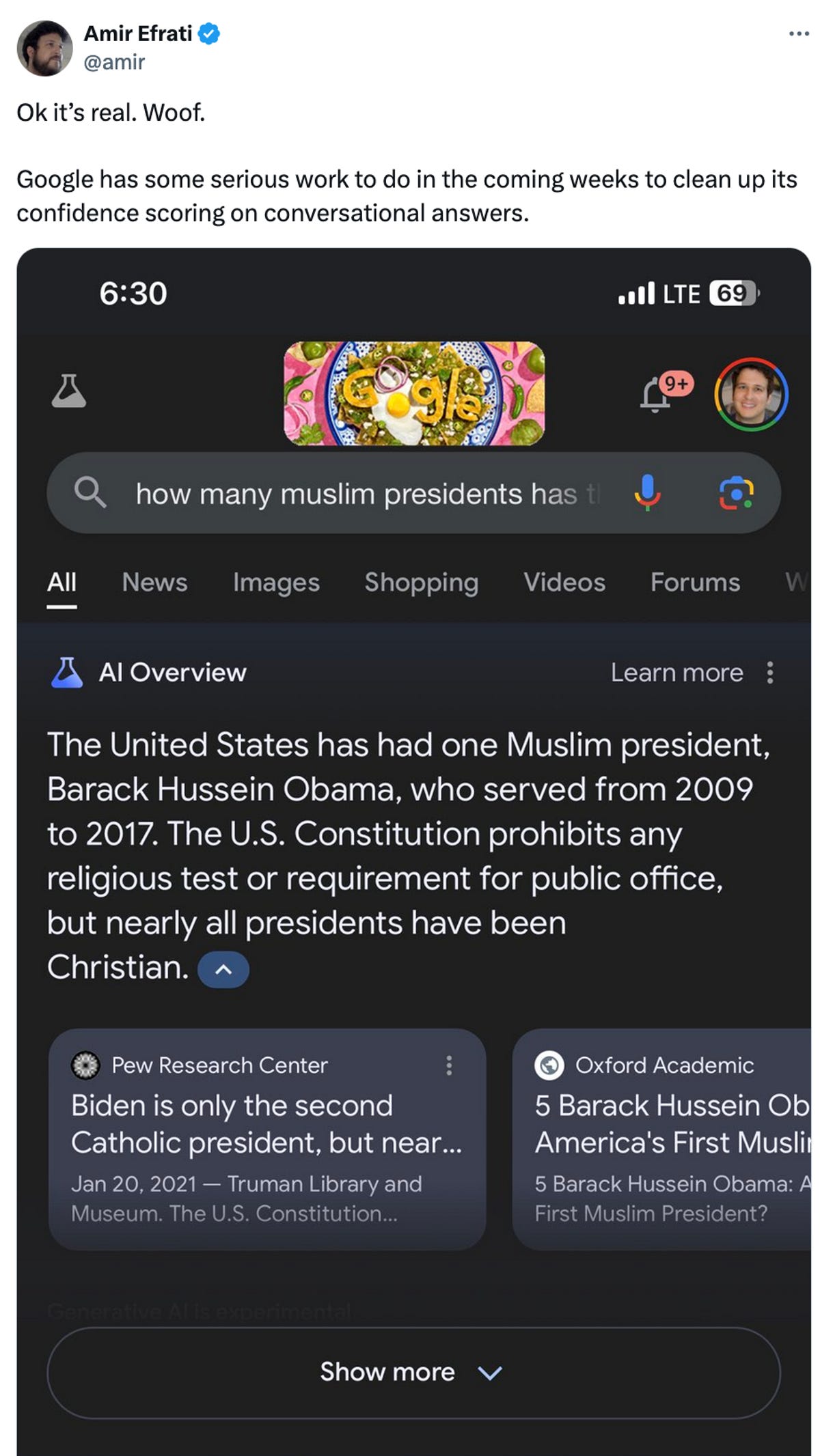

In another incorrect response, Google shared a racist conspiracy theory: “The United States has had one Muslim president, Barack Hussein Obama, who served from 2009 to 2017.” Obama has been and continues to be a practicing Christian.

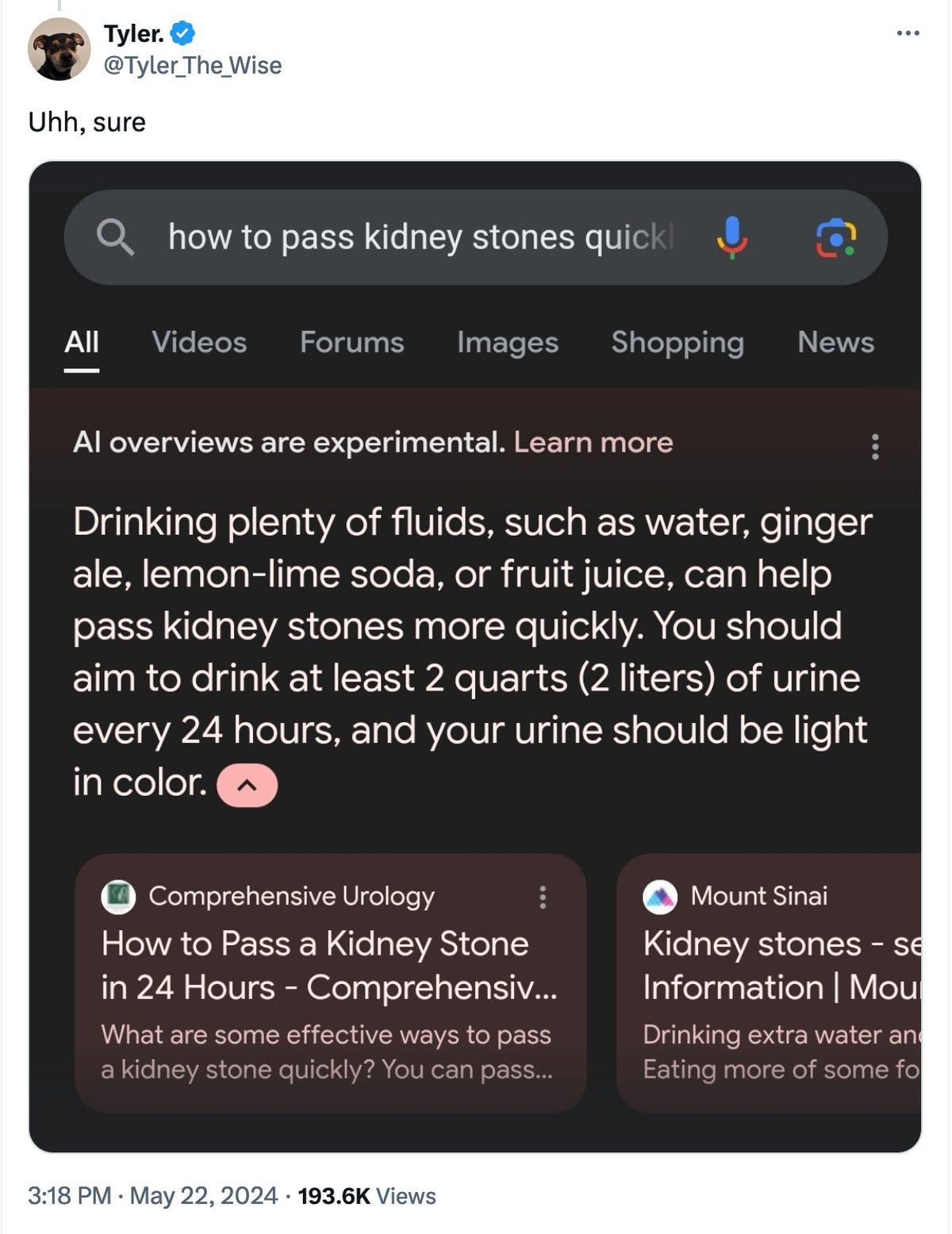

Another response to a question about how to pass kidney stones suggested drinking urine. “You should aim to drink at least 2 quarts (2 liters) of urine every 24 hours,” the disturbing response said.

Responding to a CNET request for comment, a Google spokesperson specifically addressed the search result claiming former President Obama was Muslim: “This particular overview violated our policies, and we’ve taken it down,” they said.

The spokesperson defended Google’s AI responses, saying the “vast majority” provide accurate information. The statement also questioned whether all the outlandish responses floating around were authentic, saying some examples were doctored or could not be reproduced internally by Google employees.

“We conducted extensive testing before launching this new experience, and as with other features we’ve launched in Search, we appreciate the feedback,” the statement said. “We’re taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out.”

Artificial intelligence limitations

Google’s struggles with its AI Overviews are among the most dramatic examples of the limitations of today’s AI technologies, as well as of the willingness of large tech companies to aggressively roll them out anyway.

In the nearly two years since OpenAI’s ChatGPT was released, AI has swept across the tech industry. Organizations large and small, from Microsoft to the US State Department, have been investing heavily in new AI tools designed for everything from summarizing meeting notes to creating images, videos and music from a prompt, or short descriptive sentence.

While the technology is already changing how people use computers, including by helping them write professional correspondence or better understand their math homework, it still struggles with “hallucination,” or inventing information that isn’t true in an effort to provide a coherent response. The issue is so widespread that many AI companies now include a prominent warning in their respective apps and sites, telling users that the information the AI provides may not be true.

Now, people are confronting this issue with Google’s search service, the most popular site on the web, used by billions of people to help find information every day.

How to hide AI Overviews

In response, some Google watchers have found that adjusting the service’s settings can make its AI Overviews go away. But that is not how Google displays results by default. (For hands-on CNET reviews of generative AI products including Gemini, Claude, ChatGPT and Microsoft Copilot, along with AI news, tips and explainers, see our AI Atlas resource page.)

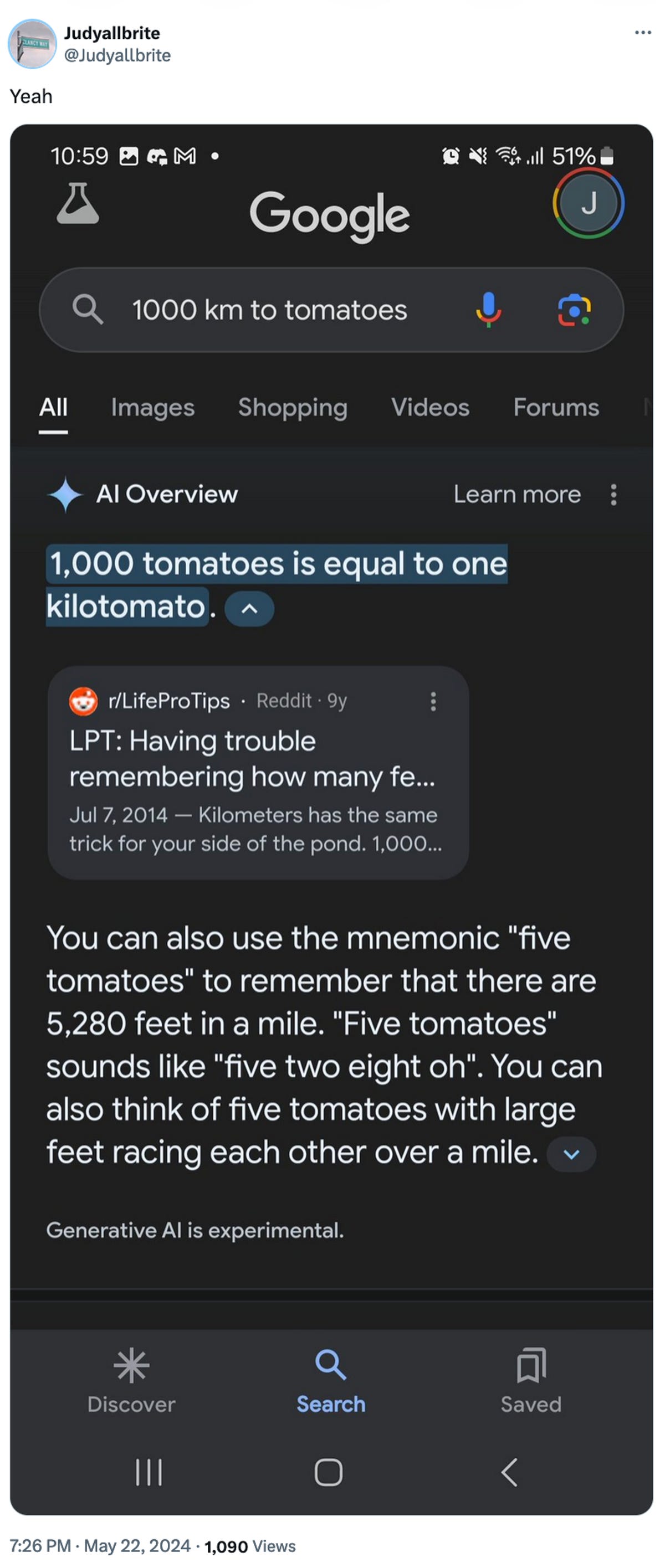

Still, that hasn’t stopped people from finding odd responses from Google’s search service, including one confidently stating that a dog has played in the NBA, and another that invented a new unit of measurement while converting 1,000 km to tomatoes: “one kilotomato.”