How to control something with no set form?

The world was introduced to the concept of shape-changing robots in 1991, with the T-1000 featured in the cult movie Terminator 2: Judgment Day. Since then (if not before), many a scientist has dreamed of creating a robot with the ability to change its shape to perform diverse tasks.

And indeed, we’re starting to see some of these things come to life, like the “magnetic turd” from the Chinese University of Hong Kong, or the liquid metal Lego man capable of melting and re-forming itself to escape from jail. Both of these, though, require external magnetic controls. They can’t move independently.

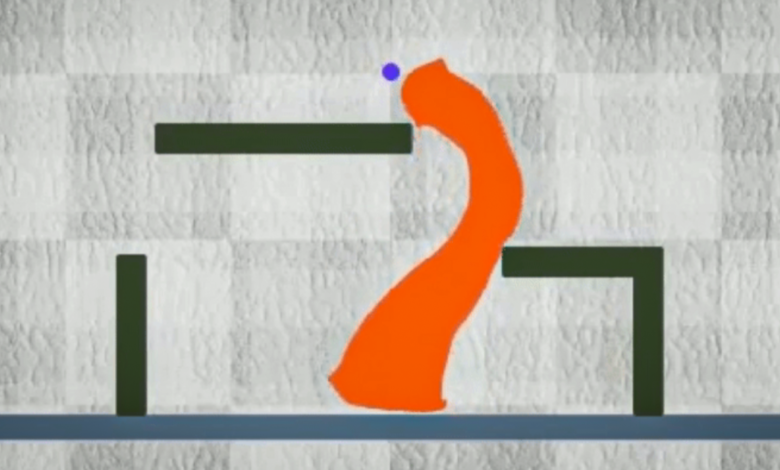

But a research team at MIT is working on robots that can. They’ve developed a machine-learning technique that trains and controls a reconfigurable ‘slime’ robot that squishes, bends, and elongates itself to interact with its environment and external objects. Disappointed side note: the robot’s not made of liquid metal.

“When people think of soft robots, they tend to think about robots that are elastic, but return to their original shape,” said Boyuan Chen, from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-author of the study outlining the researchers’ work. “Our robot is like slime and can actually change its morphology. It is very striking that our method worked so well because we are dealing with something very new.”

The researchers had to devise a way of controlling a slime robot that doesn’t have arms, legs, or fingers, any sort of skeleton for its muscles to push and pull against, or even a set location for any of its muscle actuators. A form so formless and a system so endlessly dynamic present a nightmare scenario: how on Earth are you supposed to program such a robot’s movements?

Clearly any kind of standard control scheme would be useless in this scenario, so the team turned to AI, leveraging its immense capability to deal with complex data. And they developed a control algorithm that learns how to move, stretch, and shape said blobby robot, sometimes multiple times, to complete a particular task.

Reinforcement learning is a machine-learning technique that trains software to make decisions using trial and error. It’s great for training robots with well-defined moving parts, like a gripper with ‘fingers,’ that can be rewarded for actions that move it closer to a goal, such as picking up an egg. But what about a formless soft robot that is controlled by magnetic fields?
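For the well-defined case, the trial-and-error idea can be sketched in a few lines of Python. This is a toy illustration, not the researchers’ code: the three gripper actions, their success probabilities, and the function name `train_by_trial_and_error` are all made up for the example.

```python
import random

def train_by_trial_and_error(success_probs, episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning over a handful of discrete actions.

    success_probs[i] is the (hypothetical) chance that action i picks up
    the egg and earns a reward of 1.
    """
    rng = random.Random(seed)
    n = len(success_probs)
    counts = [0] * n
    values = [0.0] * n  # running estimate of each action's average reward
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try something at random
        else:
            action = max(range(n), key=lambda i: values[i])  # exploit the best guess
        reward = 1.0 if rng.random() < success_probs[action] else 0.0
        counts[action] += 1
        # incremental mean update of the reward estimate
        values[action] += (reward - values[action]) / counts[action]
    return values

# Three hypothetical gripper actions; the second succeeds most often.
values = train_by_trial_and_error([0.1, 0.8, 0.3])
best_action = max(range(len(values)), key=lambda i: values[i])
```

With only three actions to choose from, random exploration quickly stumbles on the good one. That stops working when the ‘actions’ are thousands of tiny muscle pieces, which is the problem the next paragraphs address.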

“Such a robot could have thousands of small pieces of muscle to control,” Chen said. “So it is very hard to learn in a traditional way.”

To achieve a functional, effective shape change, large chunks of a slime robot have to be moved at once; manipulating single particles wouldn’t produce the substantial change required. So, the researchers used reinforcement learning in a nontraditional way.

In reinforcement learning, the set of all valid actions, or choices, available to an agent as it interacts with an environment is called an ‘action space.’ Here, the robot’s action space was treated like an image made up of pixels. The researchers’ model used images of the robot’s environment to generate a 2D action space covered by points overlaid with a grid.

In the same way nearby pixels in an image are related, the researchers’ algorithm understood that nearby action points had stronger correlations. So, action points around the robot’s ‘arm’ will move together when it changes shape; action points on the ‘leg’ will also move together, but differently from the arm’s movement.
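A rough way to picture an image-like action space is a 2D grid of action values in which each point is blended with its neighbors, so that adjacent points end up moving together. The sketch below uses a plain 3x3 averaging filter as a stand-in for whatever spatial structure the researchers’ model actually learns; the grid size, the values, and the function name `smooth_action_grid` are assumptions for illustration only.

```python
def smooth_action_grid(grid):
    """Average each action value with its in-bounds neighbours (3x3 box filter)."""
    height, width = len(grid), len(grid[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            total, count = 0.0, 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        total += grid[rr][cc]
                        count += 1
            out[r][c] = total / count
    return out

# A 5x5 action grid with a single strong action at the centre.
raw = [[0.0] * 5 for _ in range(5)]
raw[2][2] = 9.0
smoothed = smooth_action_grid(raw)
# The centre's action is now shared with its immediate neighbours,
# while far-away points such as the corners stay at zero.
```

The point of the toy is only the locality: a push at one grid point spreads to the points next to it and leaves distant regions, such as a separate ‘leg,’ alone.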

The researchers also developed an algorithm with ‘coarse-to-fine policy learning.’ First, the algorithm is trained using a low-resolution coarse policy – that is, moving large chunks – to explore the action space and identify meaningful action patterns. Then, a higher-resolution, fine policy delves deeper to optimize the robot’s actions and improve its ability to perform complex tasks.
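One plausible way to picture the two stages is a small coarse grid that is stretched up to full resolution, with a fine grid of small corrections added on top. The sketch below assumes that additive combination and nearest-neighbor upsampling; neither detail comes from the article, and the names `upsample` and `combine` are hypothetical.

```python
def upsample(coarse, factor):
    """Nearest-neighbour upsampling: each coarse cell becomes a factor x factor block."""
    fine = []
    for row in coarse:
        wide = [value for value in row for _ in range(factor)]
        fine.extend(list(wide) for _ in range(factor))
    return fine

def combine(coarse, correction, factor):
    """Add a full-resolution correction grid to the upsampled coarse action."""
    base = upsample(coarse, factor)
    return [
        [b + d for b, d in zip(base_row, corr_row)]
        for base_row, corr_row in zip(base, correction)
    ]

# Coarse stage: a 2x2 grid, so each value drives a whole quarter of the robot.
coarse_action = [[1.0, -1.0],
                 [0.0, 0.5]]

# Fine stage: a 4x4 grid of small adjustments (here only one cell is nudged).
fine_correction = [[0.0] * 4 for _ in range(4)]
fine_correction[0][0] = 0.25

final_action = combine(coarse_action, fine_correction, factor=2)
```

Here the coarse grid decides the broad motion of each quarter, and the fine grid only has to learn small adjustments rather than the whole action from scratch.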

“Coarse-to-fine means that when you take a random action, that random action is likely to make a difference,” said Vincent Sitzmann, a study co-author who’s also from CSAIL. “The change in the outcome is likely very significant because you coarsely control several muscles at the same time.”
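Sitzmann’s point can be checked with a back-of-the-envelope simulation. The sketch below compares the average net push on a chunk of the robot when every cell in the chunk gets its own random action versus when the whole chunk shares one random action. The chunk size, the uniform random actions, and the function name `mean_chunk_push` are assumptions chosen for illustration, not details from the study.

```python
import random

def mean_chunk_push(cells_per_chunk, chunks, trials, rng, coarse):
    """Average magnitude of the net action on a chunk, over many random actions."""
    total = 0.0
    for _ in range(trials):
        for _ in range(chunks):
            if coarse:
                # Coarse control: one random value moves the entire chunk.
                net = rng.uniform(-1.0, 1.0)
            else:
                # Fine control: every cell gets its own random value,
                # and the chunk's net push is their average.
                cells = [rng.uniform(-1.0, 1.0) for _ in range(cells_per_chunk)]
                net = sum(cells) / len(cells)
            total += abs(net)
    return total / (trials * chunks)

rng = random.Random(0)
fine_effect = mean_chunk_push(cells_per_chunk=64, chunks=4, trials=200, rng=rng, coarse=False)
coarse_effect = mean_chunk_push(cells_per_chunk=64, chunks=4, trials=200, rng=rng, coarse=True)
```

Independent per-cell actions largely cancel each other out, so `fine_effect` comes out much smaller than `coarse_effect`. A random coarse action is far more likely to produce a visible change that the learning algorithm can then be rewarded for.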

Next, the researchers tested their approach. They created a simulation environment called DittoGym, which features eight tasks that evaluate a reconfigurable robot’s ability to change shape, such as matching a letter or symbol, growing, digging, kicking, catching, and running.

“Our task selection in DittoGym follows both generic reinforcement learning benchmark design principles and the specific needs of reconfigurable robots,” said Suning Huang from the Department of Automation at Tsinghua University, China, a visiting researcher at MIT and study co-author.

“Each task is designed to represent certain properties that we deem important, such as the capability to navigate through long-horizon explorations, the ability to analyze the environment, and interact with external objects,” Huang continued. “We believe they together can give users a comprehensive understanding of the flexibility of reconfigurable robots and the effectiveness of our reinforcement learning scheme.”

The researchers found that, in terms of efficiency, their coarse-to-fine algorithm outperformed the alternatives (e.g., coarse-only or fine-from-scratch policies) consistently across all tasks.

It’ll be some time before we see shape-changing robots outside the lab, but this work is a step in the right direction. The researchers hope that it will inspire others to develop their own reconfigurable soft robots that, one day, could traverse the human body or be incorporated into a wearable device.

The study was published on the pre-print website arXiv.

Source: MIT