How to spot AI misinformation – Deseret News

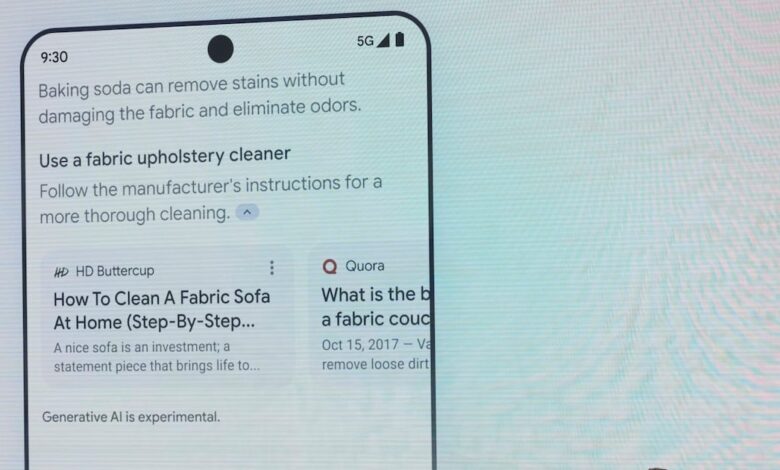

One of Google’s latest technological developments is AI Overviews, a generative AI tool that shows users AI-generated summaries in response to their searches.

The project comes at a time when artificial intelligence is becoming widespread in the workplace, at school and at home.

This new AI project is meant to take “more of the work out of searching.” According to Google, “With this powerful new technology, we can unlock entirely new types of questions you never thought Search could answer, and transform the way information is organized, to help you sort through and make sense of what’s out there.”

Unfortunately for Google, the AI-generated search answers may or may not be correct. According to NBC News, the artificial intelligence system is facing “social media mockery.”

Is Google AI reliable?

Per NBC News, social media users have been posting AI responses that are just plain wrong. One user googled “1000 km to lego” and was told that the length equated to “a 1,000 km bike ride to bring LEGO pieces to a hospital.”

When NBC News put the AI system to the test — searching for “how many feet does an elephant have” — Google’s AI replied, “Elephants have two feet, with five toes on the front feet and four on the back feet.”

A Reddit user pointed out that Google’s AI likely drew on an 11-year-old Reddit comment in its response to the search “cheese not sticking to pizza.” The program suggested adding “about 1/8 cup of nontoxic glue to the sauce.”

Despite the problems, Google said in a written statement that “the vast majority of AI Overviews provide high-quality information, with links to dig deeper on the web,” per The Associated Press.

The Associated Press also tested Google’s AI tool. When the AP asked “what to do about a snake bite,” Google produced a detailed answer.

Some concerns, however, go deeper than cheese not sticking to pizza. Emily M. Bender, a linguistics expert, told The Associated Press that an incorrect answer could be dangerous if a user asks Google an “emergency question.”

Additionally, Bender and her colleague Chirag Shah “warned that such AI systems could perpetuate the racism and sexism found in the huge troves of written data they’ve been trained on.”

“The problem with that kind of misinformation is that we’re swimming in it,” Bender told The Associated Press. “And so people are likely to get their biases confirmed. And it’s harder to spot misinformation when it’s confirming your biases.”

According to Euronews, AI works by predicting “what words would best answer the questions asked of them based on the data they’ve been trained on.” Because the systems predict plausible wording rather than look up verified facts, they can make up information, a problem known as hallucination.

How can I know whether AI information is correct?

Artificial intelligence isn’t a bad thing. But falling for misinformation can be detrimental. Julia Feerrar, a digital literacy educator at Virginia Tech, offered a few suggestions.

- Firstly, fake news of any kind is usually “designed to appeal to our emotions.” Feerrar proposed that users stop and think for a moment if online information sparks an emotional reaction.

- Fact-check what you read by verifying it against trusted and legitimate news sources.

- AI-generated photos or images often contain strange details. Make sure you look closely for errors.