Inside Barnard’s pyramid approach to AI literacy

Among Barnard College workshops on neurodiversity, academic integrity and environmental justice, a new offering debuted in the spring of 2023: a session dubbed “Who’s Afraid of ChatGPT?”

The workshop came soon after the launch of the generative artificial intelligence (AI) tool, which rippled into classrooms and generated concern among faculty and students. An internal Barnard survey found that over half of faculty and more than a quarter of students had not used generative AI at that time.

Melanie Hibbert, Barnard’s director of instructional media and academic technology services, knew that needed to change.

“It just became clear to me there needed to be a conceptual framework on how we are approaching this because it can feel [like] there’s all these separate things going on,” she said.

The college turned to a pyramid-style literacy framework first used by the University of Hong Kong and updated for Barnard’s students, faculty and staff. Instead of jumping headfirst into AI, as some institutions have done, the pyramid approach eases into the technology gradually, ensuring a solid foundation at each level before moving to the next.

Barnard’s current focus is on levels one and two at the base of the pyramid, which build a solid understanding of AI.

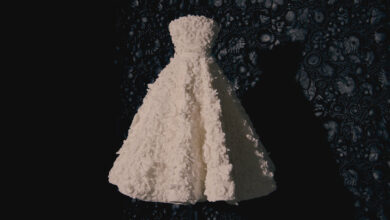

The Barnard College pyramid approach to AI literacy.

Melanie Hibbert, Barnard College

Level one is as simple as understanding the definitions of “artificial intelligence” and “machine learning” and recognizing the benefits and drawbacks of the technology. Level two builds on that, moving beyond simply trying out generative AI tools to refining prompts and recognizing bias, hallucinations and incorrect answers.

The college hosts workshops and sessions for students and faculty to tackle these first two levels, allowing them to tinker with AI and bringing in guest speakers to educate the campus community.

“I think we should be proactive and have a judgment-free zone to test it out and talk about it,” Hibbert said, noting that the ultimate goal is to move up the pyramid. “In a couple years we can say almost everyone [at our institution] has used some AI tool, has some familiarity with it.”

“Then we can become a leader with AI,” she said. “Being creative on how to use it in liberal arts institutions.”

That stage will come at levels three and four of the pyramid. Level three encourages students and faculty to look at AI tools in a broader context, including ethical considerations. Level four is the pinnacle of AI adoption, with students and faculty building their own software to leverage AI and thinking of new uses for the technology.

While Barnard has its pyramid, many institutions still grapple with creating policies and implementing AI frameworks.

Lev Gonick, Arizona State University’s CIO, advised institutions not to zero in on AI specifically and instead to treat it like any other topic.

“I think that policy conversation, we’ve got to get over it,” Gonick said. “Engaging the faculty is what all of us can and should be doing.”

“Whether it’s questions around short cutting and cheating, that’s called academic integrity,” he said during the Digital Universities conference co-hosted by Inside Higher Ed last month. “We don’t need to have a new policy for AI when we went systematically through all of the kinds of ‘gotchas’ that are out there.”

Hal Abelson, a professor of computer science and engineering at the Massachusetts Institute of Technology, said tackling AI frameworks should resemble creating policies within, for example, the English or history departments, with a focus on consistency.

“You want something coherent and consistent within the university,” he said. “Take English composition—some [institutions] will say there are rules up to individual faculty and some will say there’s a centralized policy.”

He added that universities’ policies will differ but should reflect each institution’s guiding pillars. MIT, for example, does not have an “ease into it” policy on AI and instead encourages faculty, students and staff to dive headfirst into the technology through trial and error, essentially jumping straight to levels three and four of the Barnard pyramid.

“We put tremendous emphasis on creating with AI but that’s the sort of place that MIT is,” he said. “It’s about making things. Other places have a very different view of this.”

A systematic review of AI frameworks, published in June 2024 and hosted on ScienceDirect, looked at 47 articles focused on boosting AI literacy through frameworks, concepts, implementation and assessments.

The researchers behind the review—two from George Washington University and one from King Abdulaziz University in Saudi Arabia—found six key concepts of AI literacy: recognize; know and understand; use and apply; evaluate; create; and navigate ethically.

Those six pillars, regardless of whether they end up in a pyramid form or not, could serve as the blueprint for institutions still grappling with their own frameworks, the review found. The pillars “can be used to analyze and design AI literacy approaches and assessment instruments,” according to the paper.

But the most important thing is just having an AI policy, Hibbert and Abelson agreed.

Even if a university is not currently using AI, “at some point, it’s a powerful tool that’s going to be used,” Abelson said. “It’s important to have something.”